Understanding Aggregate Trends for Apple Intelligence Using Differential Privacy


Apple's ecosystem has always placed a strong emphasis on user privacy. With the introduction of Apple Intelligence, a suite of AI features integrated across its devices, this commitment to privacy remains paramount. To enhance these intelligent features by understanding user behavior and trends without compromising individual privacy, Apple is leveraging a sophisticated technique called differential privacy.

Differential privacy is a mathematical framework for analyzing datasets to reveal group patterns while provably limiting what can be learned about any individual in the dataset. It achieves this by adding a carefully calibrated amount of statistical noise to the data or to the results of queries. The noise ensures that the presence or absence of any single individual's data does not significantly alter the overall findings.
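As a rough illustration of "carefully calibrated noise," the sketch below applies the textbook Laplace mechanism to a simple count. It is a generic example, not something the article attributes to Apple. The names `laplace_noise` and `private_count`, the toy data, and the choice of epsilon are all assumptions made for illustration.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the items that satisfy `predicate`.

    Adding or removing one person's item changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    prompts = ["cat"] * 100 + ["dog"] * 900  # toy data, purely hypothetical
    print(private_count(prompts, lambda p: p == "cat", epsilon=1.0, rng=rng))
```

A smaller epsilon means a larger noise scale, so any single answer is less precise, while averages over many queries or many people still land near the true value.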

How Apple Intelligence Utilizes Differential Privacy

Apple employs differential privacy in several key aspects of Apple Intelligence to understand aggregate trends:

1. Identifying Trends and Prompts (such as Genmoji): For features like Genmoji, where users enter prompts to generate custom emoji, Apple uses differential privacy to identify popular prompts and recurring patterns. When users opt in to share Device Analytics, their devices report, with deliberate randomness, whether they have encountered specific prompt fragments. These reports are anonymous and noisy, so individual prompts cannot be linked back to specific users. By aggregating the noisy signals from many devices, Apple can discern which types of Genmoji prompts are most common and improve the model's results for those popular requests.
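The noisy yes/no reporting described above resembles classic randomized response. The sketch below shows that idea only. Apple's actual protocol is not specified in this article, so the function names, the epsilon value, and the simulated population are hypothetical.

```python
import math
import random


def randomized_response(truth: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true bit with probability e^eps / (e^eps + 1), else flip it."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    return truth if rng.random() < p_truth else not truth


def estimate_fraction(reports, epsilon: float) -> float:
    """Debias the observed 'yes' rate to estimate the true fraction of 'yes'."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    observed = sum(reports) / len(reports)
    # E[observed] = f * p + (1 - f) * (1 - p), solved here for f.
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical population: 30% of devices have seen a given fragment.
    truths = [i < 3000 for i in range(10000)]
    reports = [randomized_response(t, 1.0, rng) for t in truths]
    print(estimate_fraction(reports, 1.0))
```

Any single report is plausibly deniable because it may have been flipped, yet the aggregate estimate converges on the true fraction as the number of devices grows.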

For instance, if many users frequently include the prompt "cat with sunglasses," the differentially private analysis will reveal this trend without Apple ever knowing which specific users wrote that prompt.

2. Improving Text Generation (e.g., Email Summarization, Writing Tools):

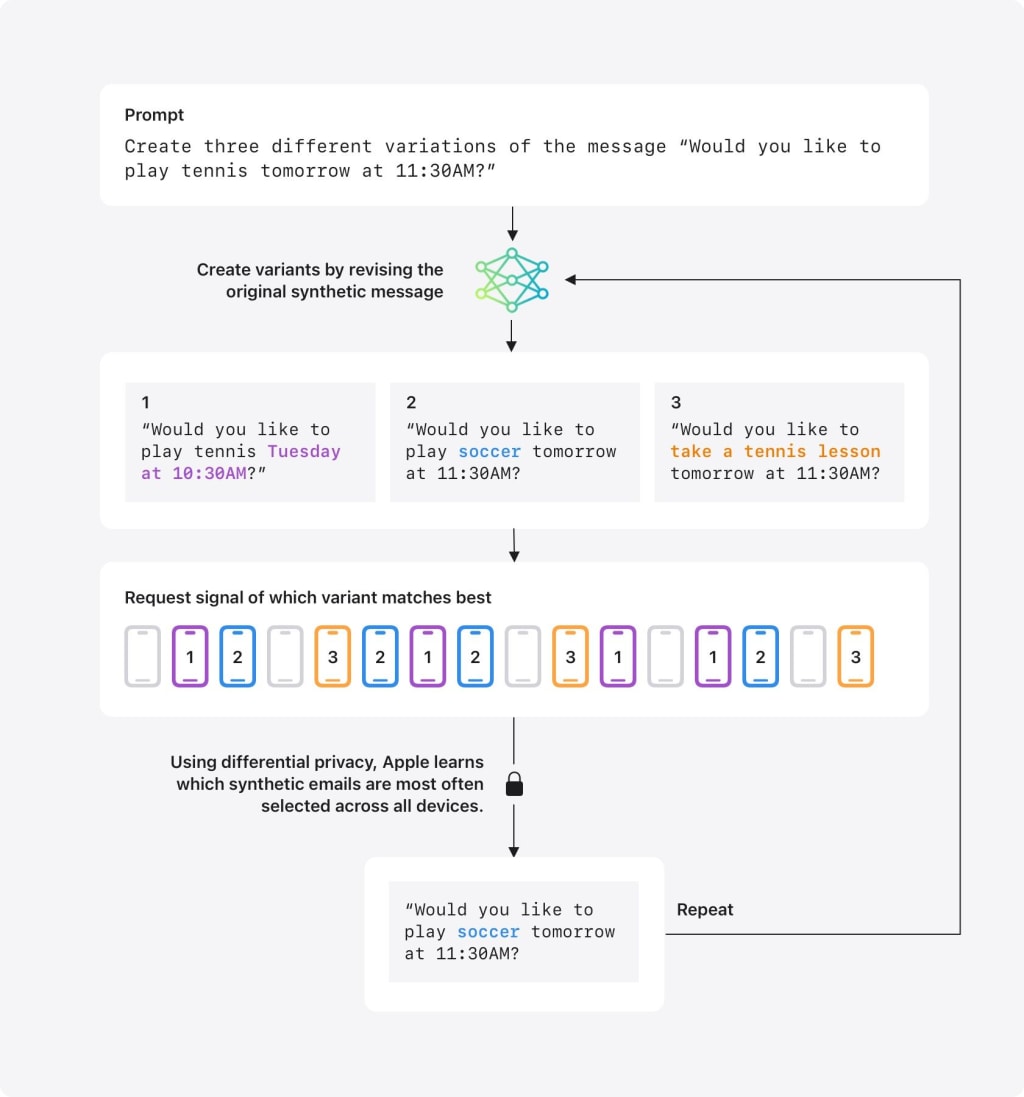

For features that operate on longer text inputs like email summarization and writing tools, Apple employs a slightly different approach using synthetic data in conjunction with differential privacy.

Synthetic Data Generation: First, Apple creates a large dataset of examples of artificial text, such as emails, that cover a wide range of subjects and writing styles. This synthetic data is generated without accessing any actual user content.

Embedding and Comparison: These synthetic text examples are then converted into mathematical representations called "embeddings," capturing key characteristics like language, topic, and length. These embeddings are sent to a small group of opt-in users' devices.

Local Comparison: On the device, these synthetic embeddings are compared against a sample of the user's local data (e.g., recent emails). The device then identifies which synthetic embedding is most similar to the local data sample.

Differentially Private Aggregation: The device sends an anonymous, noisy signal back to Apple, indicating which synthetic embedding was the closest match. Using differential privacy techniques, Apple aggregates these signals from all participating devices. This allows Apple to determine which types of synthetic text are most representative of real user communication patterns, without ever seeing the actual content of user emails.

Model Refinement: The insights gained from this process help Apple refine the topics and language of its synthetic data generation, which in turn improves the training of the underlying AI models for features like email summarization and writing assistance.

Example: By understanding through differentially private analysis that users frequently receive emails about scheduling meetings, Apple can generate more relevant synthetic email examples related to this topic, leading to better summarization capabilities for those types of emails.
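The on-device comparison and noisy aggregation steps above can be sketched roughly as follows. This is a simplified illustration under stated assumptions: embeddings are short non-zero lists of floats, similarity is cosine similarity, and the noisy signal uses k-ary randomized response. None of these specifics are confirmed by the article, and all function names are hypothetical.

```python
import math
import random
from collections import Counter


def cosine(a, b) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def closest_synthetic(local_embedding, synthetic_embeddings) -> int:
    """On device: index of the synthetic embedding most similar to local data."""
    return max(
        range(len(synthetic_embeddings)),
        key=lambda i: cosine(local_embedding, synthetic_embeddings[i]),
    )


def noisy_index(true_index: int, k: int, epsilon: float, rng: random.Random) -> int:
    """Keep the true index with prob e^eps / (e^eps + k - 1), else pick another."""
    p_keep = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    if rng.random() < p_keep:
        return true_index
    other = rng.randrange(k - 1)  # uniform over the k - 1 other indices
    return other if other < true_index else other + 1


def estimate_counts(reports, k: int, epsilon: float):
    """Server side: debias noisy reports into estimated per-index counts."""
    n = len(reports)
    e = math.exp(epsilon)
    p = e / (e + k - 1)    # probability of reporting the true index
    q = 1.0 / (e + k - 1)  # probability of reporting one specific other index
    observed = Counter(reports)
    # E[observed_i] = n_i * p + (n - n_i) * q, solved here for n_i.
    return [(observed[i] - n * q) / (p - q) for i in range(k)]


if __name__ == "__main__":
    rng = random.Random(0)
    synthetic = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy embeddings
    match = closest_synthetic([0.9, 0.1], synthetic)
    print(match, noisy_index(match, len(synthetic), 2.0, rng))
```

Only the noisy index leaves the device in this sketch. The local embedding and the text it was derived from are never transmitted.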

Privacy Protections in Detail

Apple's implementation of differential privacy for Apple Intelligence incorporates several key safeguards:

Opt-in Participation: Only users who explicitly consent to share Device Analytics contribute to this process.

On-Device Processing: In many cases, the initial comparison and analysis happen directly on the user's device, minimizing the amount of data shared with Apple.

Anonymization and Noise Addition: All data shared with Apple is anonymized and includes statistical noise, so that a single report reveals very little about an individual user or their specific data.

Limited Information Sharing: Devices only send signals indicating general trends or the best-matching synthetic data, not the actual user data itself.

Aggregation: Apple only analyzes aggregated data from many users, focusing on overall patterns rather than individual behavior.

Synthetic Data as a Proxy: For more sensitive data like email content, Apple uses synthetic data as an intermediary to understand trends without ever accessing the real data.

Benefits of This Approach

Using differential privacy to understand aggregate trends for Apple Intelligence offers several advantages:

Enhanced AI Features: Apple can improve the accuracy, relevance, and overall quality of features like Genmoji, email summarization, writing tools, and others by learning about common usage patterns and language.

Strong Guarantees of Privacy: Differential privacy guarantees mathematically that individual user data will remain private even when aggregate trends are being analyzed.

Building User Trust: Apple reinforces its commitment to user privacy by employing privacy-preserving techniques in its AI development.

Competitive Advantage: In an era where data privacy is a growing concern, Apple's privacy-centric approach to AI can be a significant differentiator.

Challenges and Future Directions

Although differential privacy is an effective tool, it does not come without its drawbacks:

Privacy-Utility Trade-off: Adding more noise increases privacy but can potentially reduce the accuracy of the aggregate insights. Finding the right balance is crucial.

Complexity of Implementation: Designing and implementing differential privacy mechanisms effectively requires specialized expertise.

Evolving Techniques: The field of differential privacy is constantly evolving, and Apple needs to stay at the forefront of research to ensure the effectiveness of its methods.
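To make the privacy-utility trade-off listed above more concrete, the sketch below computes the approximate standard error of a randomized-response estimate for several privacy levels. It assumes the simple binary randomized-response scheme, which may differ from what Apple deploys. The function name `rr_standard_error` and the sample values of epsilon and `n` are hypothetical.

```python
import math


def rr_standard_error(epsilon: float, n: int, true_fraction: float = 0.5) -> float:
    """Approximate standard error of the debiased randomized-response estimate."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    # Probability that any one device reports "yes" after randomization.
    observed = true_fraction * p + (1.0 - true_fraction) * (1.0 - p)
    variance_observed = observed * (1.0 - observed) / n
    # Debiasing divides by (2p - 1), which inflates the error when epsilon is small.
    return math.sqrt(variance_observed) / (2.0 * p - 1.0)


if __name__ == "__main__":
    for eps in (0.1, 0.5, 1.0, 2.0, 4.0):
        print(f"epsilon={eps}: standard error ~ {rr_standard_error(eps, 10000):.4f}")
```

Lower epsilon (stronger privacy) yields a larger error for the same number of devices, and collecting reports from more devices is one way to recover accuracy.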

Looking ahead, Apple is likely to continue investing in and refining its privacy-preserving AI techniques. As Apple Intelligence expands with new features and capabilities, differential privacy will likely play an even more critical role in understanding user needs while upholding Apple's strong commitment to user privacy. The integration of synthetic data generation alongside differential privacy demonstrates a novel approach to training AI models in a privacy-conscious manner, potentially setting a new standard for the industry.

About the Creator

Mahdi Rahman

I'm a story writer with a passion for crafting narratives that transport readers to different worlds. I love creating compelling characters and twisting plots that keep people on the edge of their seats.
