Computer Development Over Time

Computer development has reshaped the modern world through a remarkable journey of innovation and transformation. Computers have evolved from simple mechanical calculating devices into powerful machines that control satellites, support medical diagnostics, and run artificial intelligence. This article examines the key stages of that evolution, from the earliest computing tools to the advanced systems we use today.

Early Mechanical Devices

Long before the digital era, humans developed tools to assist with arithmetic operations. The abacus was created in ancient Mesopotamia around 2300 BCE and is considered to be one of the earliest known computing devices. It was used for basic arithmetic and remained in use for centuries.

In the 17th century, inventors like Blaise Pascal and Gottfried Wilhelm Leibniz developed mechanical calculators. Pascal's calculator (the Pascaline) could perform addition and subtraction, while Leibniz's device could also multiply and divide. Although primitive by today's standards, these devices laid the groundwork for automated calculation.

The Analytical Engine

In the 19th century, Charles Babbage, an English mathematician, conceptualized the Analytical Engine. Although it was never finished during his lifetime, its design included memory, an arithmetic unit, and conditional branching to control the flow of a program. Ada Lovelace, a mathematician and writer, worked with Babbage and is credited with writing the first algorithm intended for a machine, which is why she is often regarded as the world's first computer programmer.

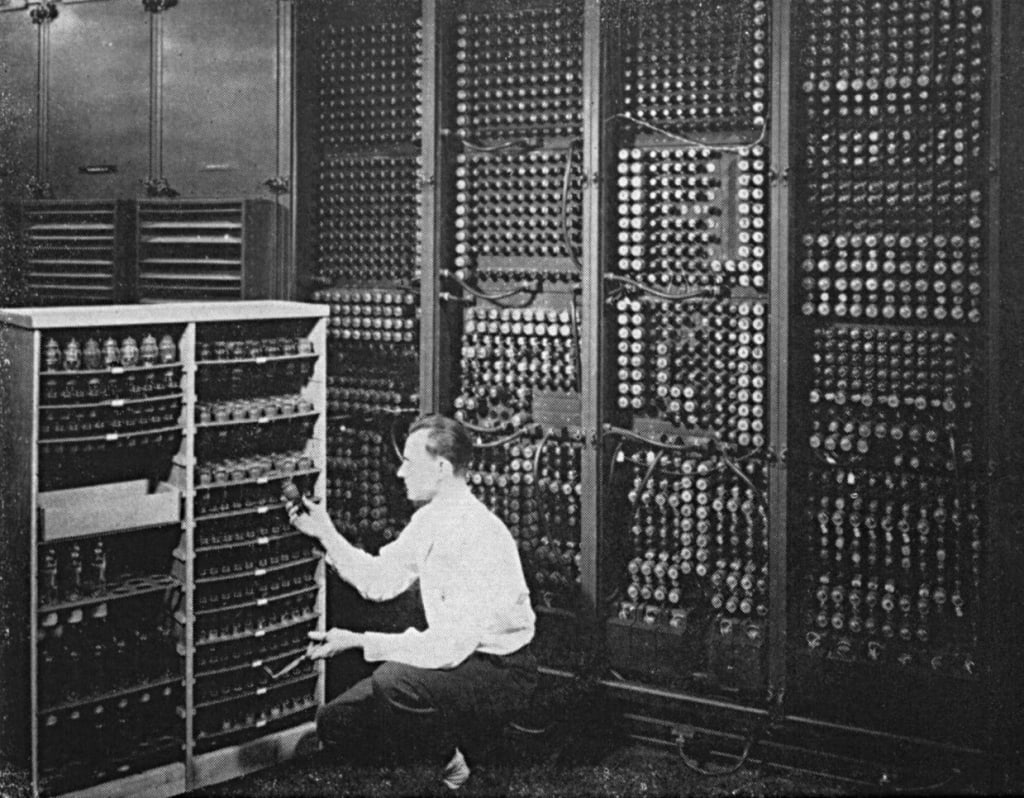

The First Generation: Vacuum Tubes (1940s–1950s)

First-generation computers used vacuum tubes for circuitry and magnetic drums for memory. These machines were huge, costly, and consumed a great deal of power. A notable example is the ENIAC (Electronic Numerical Integrator and Computer), completed in 1945. It could perform about 5,000 additions per second, a huge advance at the time.

However, vacuum tube computers were unreliable and generated a lot of heat. Their programming was also tedious, involving manual rewiring and switch flipping.

The Second Generation: Transistors (1950s–1960s)

The invention of the transistor in 1947 revolutionized computing. Transistors replaced vacuum tubes, making computers smaller, faster, more reliable, and more energy-efficient. Second-generation computers used assembly language, which allowed programmers to write instructions using symbolic names instead of raw binary code.
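To make the contrast between symbolic instructions and binary code concrete, here is a minimal sketch of what an assembler does. The three-instruction machine, its opcode values, and the `assemble` function are all hypothetical and invented for illustration; they do not correspond to any real second-generation instruction set.

```python
# Hypothetical 8-bit instruction format: a 4-bit opcode followed by a 4-bit operand.
# The mnemonics and opcode values below are invented for illustration only.
OPCODES = {
    "LOAD": 0b0001,   # load the value at an address into the accumulator
    "ADD": 0b0010,    # add the value at an address to the accumulator
    "STORE": 0b0011,  # store the accumulator at an address
}


def assemble(source: str) -> list[str]:
    """Translate lines such as 'ADD 5' into 8-bit binary strings."""
    machine_code = []
    for line in source.strip().splitlines():
        mnemonic, operand = line.split()
        address = int(operand)
        if not 0 <= address <= 15:
            raise ValueError(f"operand out of 4-bit range: {address}")
        # Pack the opcode into the high 4 bits and the address into the low 4 bits.
        word = (OPCODES[mnemonic] << 4) | address
        machine_code.append(format(word, "08b"))
    return machine_code


program = """
LOAD 4
ADD 5
STORE 6
"""

# Print each symbolic instruction next to the binary word it stands for.
for symbolic, binary in zip(program.strip().splitlines(), assemble(program)):
    print(f"{symbolic:<8} -> {binary}")
```

The programmer writes the readable form on the left, and the assembler produces the bit patterns on the right that the hardware actually executes.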

This era saw the development of commercial computers like the IBM 1401 and the UNIVAC II, which were used in business and government operations. It became common practice to store data on magnetic tape and use punch cards for input.

The Third Generation: Integrated Circuits (1960s–1970s)

The 1960s introduced integrated circuits (ICs), which placed multiple transistors on a single silicon chip. This innovation produced the third generation of computers, which were smaller, more powerful, and more affordable than their predecessors. Around this time, computers started using keyboards and monitors instead of punch cards. Programming languages like COBOL and FORTRAN gained popularity, and operating systems were developed to manage hardware and software resources efficiently.

The Fourth Generation: Microprocessors (1970s–Present)

The creation of the microprocessor in the early 1970s marked the next significant development in computer technology. A microprocessor is a single chip that houses all the components of a central processing unit. Intel introduced the 4004, the first commercially available microprocessor, in 1971, and this breakthrough made personal computers (PCs) possible. This era saw the rise of companies like Apple, Microsoft, and IBM, which made computers accessible to homes, schools, and small businesses. Graphical user interfaces (GUIs), the mouse, and software like Microsoft Windows transformed how people interacted with machines.

The fourth generation continues to this day, with massive improvements in processing power, memory, graphics, and networking capabilities. Devices became smaller, cheaper, and more portable, leading to laptops, tablets, and smartphones.

The Fifth Generation: Artificial Intelligence (1980s–Present)

Fifth-generation computing is characterized by the integration of artificial intelligence (AI) and machine learning. In contrast to previous generations, which were mainly concerned with speed and efficiency, this era aims to develop intelligent systems capable of reasoning, learning, and self-correction.

Modern computers and systems use AI in many domains, from voice assistants like Siri and Alexa to autonomous vehicles, robotics, facial recognition, and predictive analytics. Technologies like natural language processing (NLP) and neural networks have enabled machines to understand and generate human language, translate between languages, and even write code.

Quantum computing, although still in the experimental stage, is another frontier in computer evolution. It aims to solve complex problems far beyond the reach of classical computers, using quantum bits (qubits) to process information in multiple states simultaneously.

Impact of Computer Evolution on Society

The development of computers has profoundly altered nearly every aspect of modern life:

Communication: Email, video calls, and social media have revolutionized how people connect globally.

Education: Digital classrooms and online learning platforms make knowledge accessible from anywhere.

Healthcare: Computers assist in diagnostics, robotic surgery, and patient management systems.

Business and Economy: Automation, data analysis, and online transactions have streamlined operations.

Entertainment: From video games to streaming services, computers have reshaped leisure activities.

Future of Computers

As technology continues to advance, the future of computing looks even more promising. Emerging trends include:

Quantum computing: Promising unprecedented processing power for encryption and intricate simulations.

Edge computing: Bringing computation closer to data sources to reduce latency and speed up decisions.

Bio-computing: Using biological systems to process information.

Brain-computer interfaces: Creating direct communication pathways between the brain and machines.

These innovations will likely bring new opportunities and ethical challenges related to privacy, employment, and control over intelligent systems.

Conclusion

The journey of computer evolution is a testament to human ingenuity. From ancient counting tools to intelligent machines, computers have become integral to our existence. Understanding the history and development of computers enables us to appreciate their profound impact and prepare for the future as we move toward an even more interconnected and AI-driven world.
