Reclaiming AI

How I Built a Local Answer Engine Without the Cloud

When I first tried Perplexity AI, it felt like magic. Real-time answers, reference links, and powerful reasoning all in one place. But the deeper I went, the more I wondered: what if I could build something like this myself? And more importantly, what if it could run entirely on my own laptop?

That question led me to discover a new wave of local AI tools, and eventually I built a full answer engine capable of responding to complex, context-heavy queries without touching the cloud. No API calls. No usage caps. Just me, my laptop, and an open-source AI stack.

This is the story of that journey. And what it taught me about autonomy in the age of artificial intelligence.

I started by identifying the building blocks. At the heart of my setup is something called Ollama. It’s a framework for running large language models locally. Unlike traditional setups that require big GPUs or advanced DevOps know-how, Ollama keeps things lightweight. You download a model like Llama 3 or Qwen 7B, and it just runs. On your CPU or GPU. Without extra fuss.

But a language model alone isn’t enough to replicate Perplexity. I needed a way to embed documents, search them semantically, and retrieve the most relevant information in response to user questions. That’s where LangChain and FAISS came in. LangChain helped me build logical workflows for queries. FAISS helped me store and search text snippets efficiently.
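To give a sense of how little code stands between you and a locally running model, here is a minimal sketch of a call to Ollama's local HTTP endpoint using only the Python standard library. It is an illustration, not the code from my app. It assumes Ollama is running on its default port (11434) and that a model tagged `llama3` has already been pulled; the helper names `build_request` and `ask_local_model` are made up for this example.

```python
import json
import urllib.request

# Ollama's default local endpoint (assumes a stock install on this machine).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build the POST request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False the server replies with one JSON object
    # whose "response" field holds the full answer.
    return body["response"]
```

With the server running, `ask_local_model("Explain FAISS in one sentence.")` returns the model's reply as a plain string. Nothing in the request leaves `localhost`.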

The application I built accepts a variety of file formats: PDFs, Word docs, and plain text. It breaks those files into chunks, converts each chunk into an embedding (a numeric vector that captures its meaning), and stores the vectors in a searchable index. When I ask a question, it retrieves the best-matching chunks and sends them to the language model as context for the answer.
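The chunk, embed, store, and retrieve loop described above can be sketched in a few dozen lines. This is a toy version that runs on the standard library alone: the `embed` function is a hashed bag-of-words stand-in for a real embedding model, and `TinyIndex` is a brute-force stand-in for a FAISS index. All names and parameters here are hypothetical, chosen only to show the shape of the pipeline.

```python
import hashlib
import math
import re


def chunk_text(text, chunk_size=40, overlap=10):
    """Split text into overlapping windows of words."""
    if chunk_size <= overlap:
        raise ValueError("chunk_size must be larger than overlap")
    words = text.split()
    if not words:
        return []
    step = chunk_size - overlap
    last_start = max(len(words) - overlap, 1)
    return [" ".join(words[i:i + chunk_size]) for i in range(0, last_start, step)]


def embed(text, dim=4096):
    """Toy embedding: hash each token into a bucket, then L2-normalize.

    A real setup would call an embedding model here instead.
    """
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class TinyIndex:
    """Brute-force cosine-similarity search over stored chunks."""

    def __init__(self):
        self.chunks = []
        self.vectors = []

    def add(self, text):
        for chunk in chunk_text(text):
            self.chunks.append(chunk)
            self.vectors.append(embed(chunk))

    def search(self, query, k=3):
        q = embed(query)
        # Vectors are unit length, so the dot product is the cosine similarity.
        scored = [
            (sum(a * b for a, b in zip(q, vec)), chunk)
            for vec, chunk in zip(self.vectors, self.chunks)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]
```

The chunks returned by `search` are what gets pasted into the prompt as context. Swapping the toy pieces for a real embedding model and FAISS changes the quality and speed of retrieval, not the overall flow.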

It feels like magic because it is.

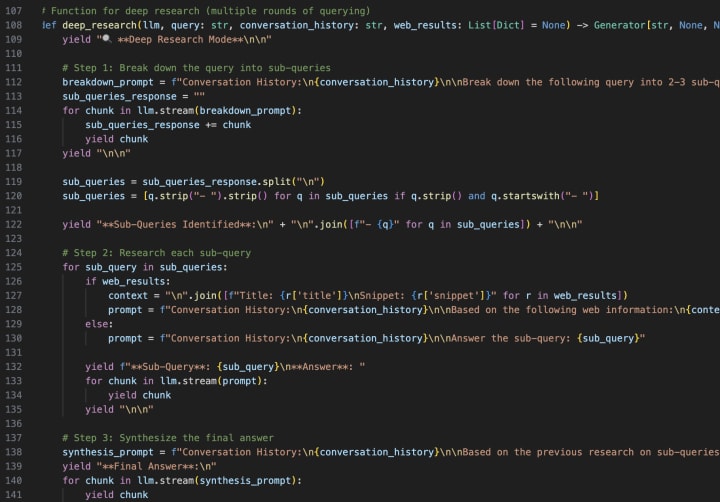

One feature I’m especially proud of is what I call "Extended Thinking." Instead of just answering a question directly, the AI pauses and thinks aloud. It breaks down the question into assumptions. It revisits the documents for supporting evidence. If web search is enabled, it pulls real-time insights from public sources. Then it combines everything into a thoughtful, cited response.
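One way to picture this flow is as a small orchestration function. The sketch below is an assumption about how such a loop could be wired together, not the implementation in my app: `llm`, `retrieve`, and `web_search` are hypothetical callables you would supply (for example, a wrapper around a local model and a vector index), and the prompt wording is illustrative.

```python
from typing import Callable, List, Optional


def extended_answer(
    question: str,
    llm: Callable[[str], str],
    retrieve: Callable[[str], List[str]],
    web_search: Optional[Callable[[str], List[str]]] = None,
) -> str:
    """Answer a question in several passes instead of one direct reply."""
    # Step 1: ask the model to spell out the assumptions behind the question.
    raw = llm(f"List the assumptions behind this question, one per line:\n{question}")
    assumptions = [line.strip("- \t") for line in raw.splitlines() if line.strip()]

    # Step 2: revisit the documents for the question and for each assumption.
    sources: List[str] = []
    for query in [question, *assumptions]:
        for passage in retrieve(query):
            if passage not in sources:
                sources.append(passage)

    # Step 3: optionally add passages from a web search.
    if web_search is not None:
        for passage in web_search(question):
            if passage not in sources:
                sources.append(passage)

    # Step 4: combine everything into one prompt that asks for citations.
    numbered = "\n".join(f"[{i}] {s}" for i, s in enumerate(sources, start=1))
    prompt = (
        "Answer the question using only the numbered sources below. "
        "Cite sources like [1].\n\n"
        f"Sources:\n{numbered}\n\nQuestion: {question}"
    )
    return llm(prompt)
```

Because the model, the retriever, and the web search are passed in as plain functions, the web step can be left out entirely to keep the whole run offline.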

This isn’t a chatbot with canned responses. It’s more like a research assistant that can reason through complexity.

The best moment came when I uploaded a set of government policy papers and NGO reports on climate adaptation. I asked a nuanced question about which Indian states had taken the most proactive measures post-COVID. The system didn’t just summarize the documents. It compared state-level approaches, highlighted differences in targets, and even suggested why certain trends emerged. And it did it all offline.

In that moment, I realized something powerful. I wasn’t just building another AI app. I was reclaiming control. Over my data. Over the answers. Over the process itself. We’ve spent the past few years being told that AI lives in the cloud. That to access intelligence, we need to send our questions to servers we don’t own, in formats we can’t control, for costs that keep rising. But what if that’s no longer true? With the right tools, intelligence can live where we are. On our machines. On our terms.

I reused the same visual layout and comparison table from my previous publication to highlight how my local setup stacks up against Perplexity. From privacy and cost to offline capability and file support, the differences were illuminating. I’m not claiming to have built a perfect replacement. But I did build something good enough, and perhaps more trustworthy, for what I need as a researcher and developer.

If you’re curious about AI, privacy, or what it means to own your tools in a digital world, I encourage you to explore this path. There’s a thrill in watching a model you downloaded respond to a prompt from your documents. There’s pride in knowing the logic behind every answer. And there’s peace in knowing your data never left your machine.

In an age of remote servers and black-box models, building something local feels radical. But maybe it’s just common sense.
