Llama 4 vs Mistral

The New Power Dynamic in Open-Source AI

If you're an AI developer in 2025, you're likely facing a pivotal question—should you build with Llama 4 or Mistral? This isn’t just another tool comparison. It's a reflection of how AI development is being redefined by freedom, speed, and transparency. What’s unfolding is more than a model rivalry; it’s a choice between two visions for the future of intelligence.

Meta's Llama 4 and Mistral's Mixtral models are both titans of the open-weight LLM world, but they are tuned for different priorities. Llama 4, released by Meta in April 2025, pairs a Mixture-of-Experts design with very large total parameter counts: its Scout and Maverick models each activate 17B parameters per token out of roughly 109B and 400B in total, respectively. It's engineered for precision and scale, especially useful for complex reasoning, document analysis, and enterprise-grade NLP tasks. Mistral's Mixtral also leverages a Mixture-of-Experts design, at a much smaller footprint: Mixtral 8x7B routes each token to 2 of 8 experts per layer, so only about 13B of its roughly 47B parameters are active during inference. This allows it to deliver competitive performance with significant gains in speed and efficiency.
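To make the idea of "activating only parts of the model" concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration, not code from either model: the eight "experts" are simple scaling functions, the router scores in `gate_logits` are made up, and the names `top_k_route` and `moe_layer` are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_logits)),
                    key=lambda i: gate_logits[i], reverse=True)
    top = ranked[:k]
    # Normalize only over the selected experts so their weights sum to 1.
    weights = softmax([gate_logits[i] for i in top])
    return list(zip(top, weights))

def moe_layer(x, experts, gate_logits, k=2):
    """Weighted sum of the selected experts' outputs; the others never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))

# Eight toy "experts": each one just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in range(1, 9)]

# Made-up router scores for a single token (assumption for illustration).
gate_logits = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

routes = top_k_route(gate_logits, k=2)
output = moe_layer(3.0, experts, gate_logits, k=2)
print(routes, output)
```

With these scores only the experts at index 1 and 4 run for this token; the other six are skipped entirely, which is where the inference savings of a sparse model come from.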

Developers across communities, from solo builders to AI research labs, have started to split into camps. Llama 4 appeals to those who prioritize raw capability and context length. Mistral, however, is gaining popularity for its adaptability, speed, and comparatively modest GPU requirements.

What makes this debate even more compelling is that it's happening quietly. There's no splashy marketing campaign or viral demo leading the charge. Instead, GitHub commits, Hugging Face downloads, and Discord conversations are shaping the momentum. Engineers are quietly replacing proprietary models like GPT-4 with open, auditable, and fine-tunable alternatives.

In community-run tools like LM Studio, Ollama, and agentic frameworks such as OpenManus, Mistral has emerged as the preferred default. Developers cite faster iterations, easier deployment, and lower cost as key reasons. Fine-tuning a smaller Mistral model with adapters like LoRA trains only a small fraction of the weights, so it takes less time than full fine-tuning and can run on affordable consumer hardware. This positions it as a practical choice for experimentation and real-world deployment.
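A quick back-of-the-envelope calculation shows why LoRA adapters are cheap to train. LoRA freezes a weight matrix of shape `d_out x d_in` and learns two small matrices of shape `d_out x r` and `r x d_in` instead. The sketch below only counts parameters; the 4096 x 4096 layer size and rank 8 are illustrative assumptions, and `lora_param_counts` is a hypothetical helper, not part of any library.

```python
def lora_param_counts(d_out, d_in, rank):
    """Compare trainable parameters: full fine-tuning vs. a LoRA adapter.

    Full fine-tuning updates every entry of the d_out x d_in matrix.
    LoRA trains B (d_out x rank) and A (rank x d_in) and keeps W frozen.
    """
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora

# Illustrative sizes (assumptions): one 4096 x 4096 projection, rank 8.
full, lora = lora_param_counts(4096, 4096, 8)
ratio = lora / full

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA adapter:     {lora:,} trainable parameters")
print(f"LoRA trains {ratio:.2%} of this layer's weights")
```

For this one layer the adapter trains 65,536 parameters instead of 16,777,216, or 1/256 of the weights. The smaller gradient and optimizer state is a large part of why adapter training fits on consumer GPUs.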

Meanwhile, Llama 4 remains the stronger fit in scenarios that require interpretability, consistency, and deep reasoning. Legal firms, research institutions, and enterprises dealing with long documents lean toward Llama's structured outputs and companion safety tooling such as Llama Guard, a separate classifier model that screens prompts and responses. Its context window, which Meta advertises at up to 10 million tokens for Llama 4 Scout and 1 million for Llama 4 Maverick, opens doors to use cases that demand processing of lengthy texts in one go.

The choice between Llama 4 and Mistral also reveals broader themes in today’s AI culture. On one side, you have structured, centralized, corporate-led innovation. On the other, you have modular, community-driven, open experimentation. The debate isn’t binary—it’s ecosystemic. And in reality, many teams are adopting both models depending on their needs.

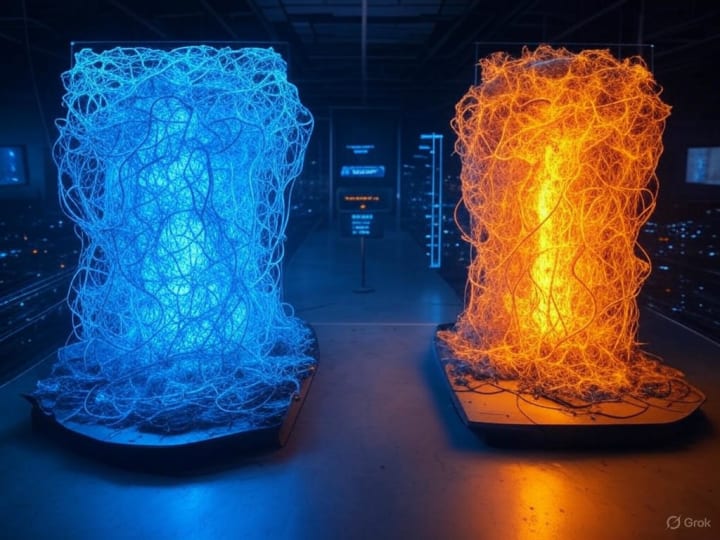

The image that captures this moment best shows two AI cores: one glowing blue and dense (Llama 4), the other lit in vibrant orange with rotating expert units (Mistral). Suspended between them is a scoreboard that reads: Reasoning 1, Speed 1, Community 1. It’s not a clear winner-takes-all fight. It’s a symbolic shift toward choice.

And maybe that’s the point. LLMs aren’t just about capability anymore. They’re about alignment—with your infrastructure, your budget, your governance policies, and your creative autonomy.

As we look ahead, we may see more developers favor the flexibility of Mistral for building AI agents, chatbots, and mobile applications. But when the task demands precision and scale, Llama 4’s engineering depth is hard to beat.

The trajectory of these two models reflects a broader trend in how generative AI is being integrated into everyday life. In use cases where latency, responsiveness, and minimal infrastructure are critical (think voice assistants, IoT-enabled smart devices, or on-device personal AI), Mistral's efficiency and adaptability become its greatest assets. Developers are already optimizing Mistral models for real-time interactions, edge computing, and mobile-first deployments, relying on sparse expert routing to balance power and accessibility. This has allowed Mistral to carve out a niche that once seemed inaccessible to open-weight LLMs: fast, responsive, localized intelligence.

On the other side, Llama 4’s capabilities make it a go-to choice for high-stakes deployments in law, academia, and enterprise research. Its ability to manage longer context windows, handle dense logic chains, and generate interpretable outputs has made it an essential component in environments where reliability and traceability are paramount. In healthcare AI, for instance, where legal compliance and patient safety cannot be compromised, teams lean heavily on Llama 4’s controlled architecture and safety filters. Its engineering gives professionals a level of assurance that the model won’t just perform well—it will perform responsibly.

This divergence in usage doesn't mean a clear winner will emerge. In fact, many advanced AI ecosystems are already integrating both models, each playing to its strengths. A knowledge assistant system may use Mistral for front-end engagement, handling user queries quickly and economically, while deferring complex summarization or legal verification to Llama 4. These hybrid stacks are no longer experimental; they're becoming common practice. Whether you're scaling an AI research lab or building your next side project, the tools you choose reflect more than technical preference. They signal how you see the future of AI unfolding. Are you focused on access and speed? Or are you optimizing for robustness and regulatory fit? Your model choice embeds those values into the codebase you're creating.
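The hybrid stack described above can be sketched as a small request router. This is a rough illustration under stated assumptions, not a production design: the deployment names, the keyword list, and the 2,000-word threshold are all hypothetical, and a real system would likely use a classifier or token counts rather than keyword matching.

```python
from dataclasses import dataclass

@dataclass
class Route:
    model: str
    reason: str

# Hypothetical deployment names; substitute whatever your stack exposes.
FAST_MODEL = "mistral-frontend"
DEEP_MODEL = "llama4-backend"

# Crude signals that a request needs the heavier model (assumptions).
DEEP_KEYWORDS = ("summarize", "contract", "clause", "compliance", "legal")
LONG_INPUT_WORDS = 2000  # arbitrary cutoff for "long document"

def route_request(query: str, attachment: str = "") -> Route:
    """Pick a model for a request using simple length and keyword checks."""
    if len(attachment.split()) > LONG_INPUT_WORDS:
        return Route(DEEP_MODEL, "long attachment")
    text = query.lower()
    if any(word in text for word in DEEP_KEYWORDS):
        return Route(DEEP_MODEL, "complex or legal task")
    return Route(FAST_MODEL, "short conversational query")

print(route_request("What are your opening hours?"))
print(route_request("Summarize this contract for me"))
print(route_request("Any issues here?", attachment="word " * 3000))
```

Keeping the routing decision in one small function makes it easy to log why each request went where it did, and to swap the heuristic later without touching either model integration.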

In this new open-source renaissance, perhaps the best model isn't the biggest. It's the one that works best for you. It's the model that adapts to your vision, not the other way around. It's the architecture that respects your constraints—whether those are GPU limits, data privacy laws, or creative ambitions. Whichever side you're on—Llama 4 or Mistral—the future looks more open than ever. Not just because the code is accessible, but because the choices are. For the first time in AI history, builders have options that are both powerful and free. And that, more than any benchmark or architecture, is what defines real progress.
