Master Multi-Armed Bandits: A Beginner’s Guide

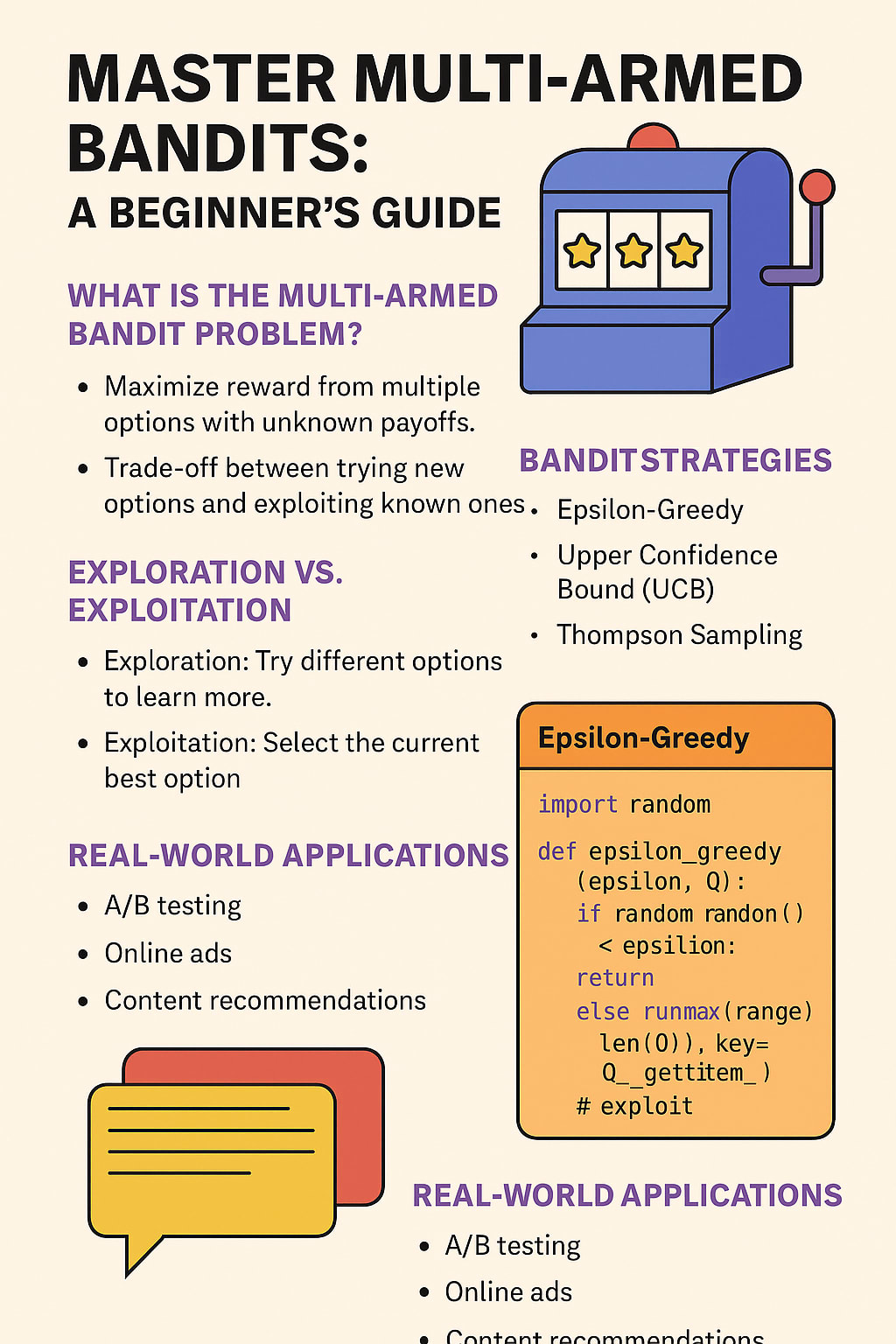


In the world of data science and machine learning, decision-making under uncertainty is a critical challenge. If you've ever run an A/B test or wondered whether there's a smarter way to pick the "best option" without wasting time and resources, you're about to discover a powerful tool — the Multi-Armed Bandit algorithm.

This beginner-friendly guide will introduce you to the Multi-Armed Bandit (MAB) problem, break down key concepts like exploration vs. exploitation, and walk you through popular strategies like the Epsilon-Greedy strategy, UCB, and Thompson Sampling. By the end, you’ll know when and how to use these algorithms, complete with real-world examples and Python code snippets.

🤖 What is the Multi-Armed Bandit Problem?

Imagine you're in a casino faced with a row of slot machines (called "one-armed bandits"). Each machine gives a different — and unknown — payout. Your goal? Win as much as possible with limited time or money.

This is the Multi-Armed Bandit problem, which comes down to balancing two activities:

Trying new machines to learn their payout rates (exploration), and

Playing the best-known machine to maximize reward (exploitation).
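To make the casino concrete, here is a minimal simulated version, assuming each machine pays out 1 with a fixed hidden probability and 0 otherwise (a so-called Bernoulli bandit). The `BernoulliBandit` class and the payout rates are hypothetical, chosen only for illustration.

```python
import random


class BernoulliBandit:
    """Hypothetical simulated slot machines: arm i pays 1 with hidden probability probs[i]."""

    def __init__(self, probs, seed=None):
        self.probs = list(probs)        # true payout rates, unknown to the player
        self.rng = random.Random(seed)  # private RNG so simulations are reproducible

    @property
    def n_arms(self):
        return len(self.probs)

    def pull(self, arm):
        # Reward is 1 (win) with probability probs[arm], otherwise 0 (loss)
        return 1 if self.rng.random() < self.probs[arm] else 0


# Usage: pull each of three machines 1,000 times and compare observed payout rates
bandit = BernoulliBandit([0.2, 0.5, 0.7], seed=42)
for arm in range(bandit.n_arms):
    wins = sum(bandit.pull(arm) for _ in range(1000))
    print(f"arm {arm}: observed payout rate {wins / 1000:.2f}")
```

Pulling every machine 1,000 times, as the usage lines do, is pure exploration; the strategies that follow aim to find the best machine while wasting far fewer pulls.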

This dilemma mirrors many real-life situations in tech, such as deciding which ad to show a user or which news article to recommend.

🎯 Exploration vs. Exploitation

This is the core trade-off in MAB algorithms:

Exploration: Trying unknown options to gather more information.

Exploitation: Leveraging known information to maximize immediate rewards.

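Getting this balance right is the whole game. Explore too little and you can lock onto a mediocre option without ever discovering a better one; explore too much and you keep spending time and traffic on options you already know are worse, which is exactly what a traditional A/B test does when it splits traffic evenly until the test ends.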
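A quick simulation shows what happens without exploration. It is a sketch assuming two hypothetical machines that pay out 30% and 80% of the time; `run_policy` is a made-up helper that plays the Epsilon-Greedy strategy covered later, and `epsilon=0` turns it into pure greedy play.

```python
import random


def run_policy(epsilon, probs=(0.3, 0.8), rounds=2000, seed=0):
    """Hypothetical helper: epsilon-greedy play on Bernoulli arms; epsilon=0 is pure greedy."""
    rng = random.Random(seed)
    counts = [0] * len(probs)    # pulls per arm
    values = [0.0] * len(probs)  # running average reward per arm
    for _ in range(rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(len(probs))   # explore: random arm
        else:
            arm = values.index(max(values))   # exploit: ties go to the lowest index
        reward = 1 if rng.random() < probs[arm] else 0
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean
    return counts


print("pure greedy:", run_policy(epsilon=0.0))  # prints pure greedy: [2000, 0]
print("epsilon=0.1:", run_policy(epsilon=0.1))
```

Because both estimates start at 0 and ties go to the first arm, the greedy player latches onto arm 0 and never learns that arm 1 is better. A small ε is enough to escape that trap.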

Why not just use A/B testing?

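A classic A/B test is static: traffic is split in fixed proportions until the test ends, and only then do you act on the results. A bandit is dynamic: it shifts traffic toward the better-performing options while the experiment is still running, which makes it a popular alternative to A/B testing.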

🧠 Popular Multi-Armed Bandit Strategies

Let’s look at three popular and easy-to-implement strategies.

1. Epsilon-Greedy Strategy

This is the simplest and most intuitive MAB approach.

With probability ε (epsilon): explore (choose a random arm)

With probability 1 − ε: exploit (choose the arm with the best-known average reward)

✅ Python Code Example:

```python
import random

# Assume we have 3 bandits
n_bandits = 3
counts = [0] * n_bandits     # number of pulls per arm
rewards = [0.0] * n_bandits  # running average reward observed for each arm
epsilon = 0.1

def select_bandit():
    if random.random() < epsilon:
        return random.randint(0, n_bandits - 1)  # explore
    else:
        return rewards.index(max(rewards))  # exploit

def update_bandit(chosen_arm, reward):
    counts[chosen_arm] += 1
    n = counts[chosen_arm]
    value = rewards[chosen_arm]
    # Update estimated reward
    rewards[chosen_arm] = ((n - 1) / n) * value + (1 / n) * reward
```

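This method is easy to understand and often works well in practice. Its main weakness is that a fixed ε keeps exploring at the same rate forever, even after the best arm is obvious.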
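The update in `update_bandit` is an incremental running average. Writing μ̂_n for an arm's estimated reward after n pulls (`rewards[chosen_arm]`) and r_n for the n-th reward received:

$$
\hat{\mu}_n = \frac{n-1}{n}\,\hat{\mu}_{n-1} + \frac{1}{n}\,r_n = \hat{\mu}_{n-1} + \frac{1}{n}\left(r_n - \hat{\mu}_{n-1}\right)
$$

Unrolling the recursion gives μ̂_n = (r_1 + ... + r_n) / n, the plain average, without storing past rewards. For rewards 1, 0, 1 the estimate goes 1, then 1/2, then (2/3)(1/2) + (1/3)(1) = 2/3, which is exactly the mean of the three rewards.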

2. Upper Confidence Bound (UCB)

Instead of exploring at random, UCB adds an uncertainty bonus to each arm's average reward and picks the arm with the highest optimistic estimate, that is, the upper end of a confidence interval around its average.

Arms with fewer trials have wider confidence intervals, encouraging exploration early on.
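The score computed in the code is the classic UCB1 rule. For arm i, with average reward x̄_i (`rewards[i]`), n_i pulls (`counts[i]`), and t total pulls across all arms (`total_count`):

$$
\mathrm{UCB}_i = \bar{x}_i + \sqrt{\frac{2 \ln t}{n_i}}
$$

For example, at t = 100 an arm with average 0.5 after 10 pulls scores 0.5 + √(2 ln 100 / 10) ≈ 0.5 + 0.96 = 1.46, while an arm with average 0.6 after 50 pulls scores only 0.6 + 0.43 ≈ 1.03, so the less-tested arm gets the next pull.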

✅ Python Code Example:

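```python
import math

def ucb_selection(total_count, counts, rewards):
    # total_count: total pulls so far; counts[i]: pulls of arm i;
    # rewards[i]: average reward of arm i (as maintained by update_bandit above)
    ucb_values = []
    for i in range(len(rewards)):
        if counts[i] == 0:
            return i  # try untested arm
        average = rewards[i]
        # Exploration bonus: shrinks as arm i is pulled more often
        delta = math.sqrt((2 * math.log(total_count)) / counts[i])
        ucb_values.append(average + delta)
    return ucb_values.index(max(ucb_values))
```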

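UCB is a strong choice when you want exploration driven by uncertainty rather than by coin flips: in this basic form (UCB1) there is no ε to tune, and arms that have clearly underperformed are tried less and less often.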
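To see UCB1 in action, here is a self-contained sketch that plays it against simulated Bernoulli machines. The helper name `ucb1_simulation` and the payout rates 0.1, 0.4, and 0.8 are hypothetical; the scoring line uses the same formula as `ucb_selection`.

```python
import math
import random


def ucb1_simulation(probs, rounds=2000, seed=1):
    """Hypothetical helper: run UCB1 on Bernoulli arms and return pulls per arm."""
    rng = random.Random(seed)
    n_arms = len(probs)
    counts = [0] * n_arms    # pulls per arm
    values = [0.0] * n_arms  # running average reward per arm
    for _ in range(rounds):
        total = sum(counts)
        if 0 in counts:
            arm = counts.index(0)  # try each untested arm once
        else:
            scores = [values[i] + math.sqrt(2 * math.log(total) / counts[i])
                      for i in range(n_arms)]
            arm = scores.index(max(scores))  # most optimistic arm
        reward = 1 if rng.random() < probs[arm] else 0  # simulated payout
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]
    return counts


print(ucb1_simulation([0.1, 0.4, 0.8]))
```

Most of the pulls should go to the 0.8 machine, while the weaker machines are still revisited occasionally as their uncertainty bonus slowly grows with t.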

3. Thompson Sampling

Thompson Sampling is a probabilistic, model-based strategy. It maintains a belief (a probability distribution) about each arm’s reward, draws one sample from each distribution every round, and pulls the arm with the highest sample.
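Assuming binary rewards (1 = success, 0 = failure) and a uniform Beta(1, 1) prior, the belief about arm i's payout rate θ_i after observing s_i successes and f_i failures is a Beta distribution:

$$
\theta_i \mid s_i, f_i \sim \mathrm{Beta}(s_i + 1,\ f_i + 1), \qquad \mathbb{E}[\theta_i] = \frac{s_i + 1}{s_i + f_i + 2}
$$

Each round draws one θ̃_i from every arm's Beta distribution and pulls the arm with the largest θ̃_i. An arm with 7 successes and 3 failures, for instance, has a Beta(8, 4) belief with mean 8/12 ≈ 0.67. Arms with few observations have wide distributions, so they still win the draw now and then, which is how exploration happens.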

✅ Thompson Sampling Example in Python:

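```python
import numpy as np

n_bandits = 3

# Beta distribution parameters: observed successes and failures per arm.
# Starting at 0 and sampling Beta(s + 1, f + 1) gives each arm a uniform Beta(1, 1) prior.
successes = [0] * n_bandits
failures = [0] * n_bandits

def thompson_sampling():
    # Draw one plausible payout rate per arm and pick the arm with the highest draw
    sampled = [np.random.beta(s + 1, f + 1) for s, f in zip(successes, failures)]
    return sampled.index(max(sampled))

def update_thompson(chosen_arm, reward):
    # Assumes binary rewards: 1 (success) or 0 (failure)
    if reward:
        successes[chosen_arm] += 1
    else:
        failures[chosen_arm] += 1
```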

Thompson Sampling often matches or outperforms Epsilon-Greedy and UCB in empirical comparisons, and because it is Bayesian, it is easy to build prior knowledge into the starting distributions.
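Here is a vectorized sketch of the same Beta-Bernoulli idea running against simulated machines, using NumPy's `Generator` API. The helper `thompson_simulation` and the payout rates are hypothetical, chosen only to illustrate the loop.

```python
import numpy as np


def thompson_simulation(probs, rounds=2000, seed=7):
    """Hypothetical helper: Beta-Bernoulli Thompson Sampling with a uniform Beta(1, 1) prior."""
    rng = np.random.default_rng(seed)
    probs = np.asarray(probs)
    successes = np.zeros(len(probs))  # observed 1-rewards per arm
    failures = np.zeros(len(probs))   # observed 0-rewards per arm
    for _ in range(rounds):
        samples = rng.beta(successes + 1, failures + 1)  # one draw per arm's posterior
        arm = int(np.argmax(samples))
        if rng.random() < probs[arm]:  # simulated Bernoulli reward
            successes[arm] += 1
        else:
            failures[arm] += 1
    return (successes + failures).astype(int)  # pulls per arm


print(thompson_simulation([0.1, 0.4, 0.8]))
```

Passing whole arrays to `rng.beta` draws one sample per arm in a single call, which keeps the loop short even with many arms.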

🌍 Real-World Applications of Multi-Armed Bandits

The beauty of MAB lies in its versatility across domains:

1. Ad Optimization

MABs dynamically select the best-performing ad based on real-time click data.

2. Recommendation Systems

Netflix, YouTube, or Spotify can use bandits to show content that users are more likely to engage with — while still testing new options.

3. E-commerce & Pricing

Amazon might use a Multi-Armed Bandit algorithm to test different product listings or discounts in real time without fully committing to any one version.

4. Clinical Trials

Pharma companies can reduce patient exposure to less effective treatments using MAB frameworks.

5. Game Development

Test multiple reward strategies or difficulty curves and dynamically adapt gameplay.

🧪 Why MAB > A/B Testing

| Feature | A/B Testing | Multi-Armed Bandit |
|---|---|---|
| Static or dynamic | Static | Dynamic |
| Learns over time | ❌ | ✅ |
| Traffic allocation | Fixed | Adaptive |
| Fast decision-making | ❌ | ✅ |
| Best for real-time decisions | ❌ | ✅ |

Bandit algorithms usually outperform A/B tests in scenarios where continuous learning and adaptive optimization are key.
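The "Traffic allocation" row can be illustrated with a small simulation. This is a sketch under stated assumptions: two hypothetical page variants with click-through rates of 5% (A) and 10% (B), 10,000 simulated visitors, and Thompson Sampling as the bandit policy; `simulate` is a made-up helper, not a library function.

```python
import random


def simulate(policy, ctr=(0.05, 0.10), visitors=10_000, seed=3):
    """Hypothetical comparison of a 50/50 A/B split with a Thompson Sampling bandit.

    ctr holds assumed true click-through rates of variants A and B.
    Returns (visitors shown each variant, total clicks).
    """
    rng = random.Random(seed)
    shown = [0, 0]
    clicks = [0, 0]
    for _ in range(visitors):
        if policy == "ab":
            arm = rng.randrange(2)  # fixed 50/50 split for the whole test
        else:
            # Bandit: sample each CTR from a Beta(1 + clicks, 1 + non-clicks) posterior
            draws = [rng.betavariate(1 + clicks[i], 1 + shown[i] - clicks[i])
                     for i in range(2)]
            arm = draws.index(max(draws))
        shown[arm] += 1
        if rng.random() < ctr[arm]:
            clicks[arm] += 1
    return shown, sum(clicks)


for policy in ("ab", "bandit"):
    shown, total_clicks = simulate(policy)
    print(f"{policy:>6}: shown A/B = {shown}, total clicks = {total_clicks}")
```

The bandit should send far fewer visitors to the weaker variant A, which is where its extra clicks come from. The flip side is that data on variant A stays thin, so a fixed split remains the better tool when you need a precise estimate for every variant.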

🛠️ Bandit Algorithms in Python

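Python offers useful libraries such as:

scikit-learn (no built-in bandit algorithms, but handy for the reward models used in contextual bandits)

scipy (for probability distributions)

numpy (for random sampling)

mabpy, Vowpal Wabbit, or botorch (more advanced frameworks; Vowpal Wabbit supports contextual bandits, and BoTorch targets Bayesian optimization, a close relative of bandits)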

These libraries help you implement everything from a single Thompson Sampling experiment to full MAB simulations.

🧾 Conclusion: When Should You Use MAB?

If your goal is to make adaptive decisions under uncertainty, the Multi-Armed Bandit algorithm is your best friend.

✅ Key Takeaways:

MAB formalizes the trade-off between exploration and exploitation.

Epsilon-Greedy is simple and effective for early adoption.

UCB and Thompson Sampling are more robust and often provide better results.

Bandits are a strong alternative to A/B testing in fast-paced environments.

The basic algorithms take only a few lines of Python to implement.

Whether you’re optimizing ads, recommendations, or game mechanics — MABs make your decisions smarter, faster, and more effective.

❓ FAQs

1. What is a Multi-Armed Bandit algorithm used for?

It’s used to find the best option (like an ad or recommendation) in real time by learning from user behavior, balancing exploration and exploitation.

2. How is Multi-Armed Bandit better than A/B testing?

Unlike A/B tests, MAB algorithms adapt as they learn, sending more traffic to the better options during the experiment and less to the losing ones.

3. What is the Epsilon-Greedy strategy?

It’s a basic MAB strategy where you explore new options with a small probability (epsilon) and exploit known best ones otherwise.

4. What is a real-world example of Thompson Sampling?

A news site or online store can use it to test which headlines or products get more clicks, updating its preferences as new click data comes in.

5. Do I need deep reinforcement learning to use MAB?

Nope! MAB is part of reinforcement learning basics and doesn’t require complex neural networks to start.

About the Creator

Nomidl Official

Nomidl - Lets Jump into AI world
