How AI Systems Use World Models to Predict, Plan, and Dream

The architecture of internal simulation in modern AI, and why world models are shaping real-world applications, including mobile app development.

I remember watching a self-driving car simulation at a robotics lab in Orlando a few years ago.

The car saw, but it also imagined. An invisible world of roads and pedestrians and probabilities was being constructed inside its head. Predictions were made not only from what is, but from what could be.

That invisible construct, that mental map of possibilities, researchers now call a world model.

And it is changing how we think about the way AI comprehends, plans, and, in some strange sense, even dreams.

The Hidden Blueprint: What Are World Models?

We humans constantly run a simulation of the world inside our heads. Before I grab a cup, my brain has already predicted where the cup will be half a second from now. Before I cross a street, numerous micro-simulations run inside my head: cars, timing, wind, and steps.

AI world models work on the same principle.

A world model is a learned internal representation of the environment that enables the machine to simulate and predict the outcomes of its actions without having to interact with the real environment for every single step.

They are what let a machine deliberate instead of behaving like an automaton. This is what sets reason apart from reflex.

Researchers David Ha and Jürgen Schmidhuber popularized the term with their 2018 paper “World Models,” in which small neural-network agents learned to play CarRacing-v0 and a VizDoom task. In the VizDoom experiment, the agent was trained not in the real game but inside its own dream in latent space.

How World Models Actually Work

A modern world model has three essential components:

- Perception Encoder: Converts high-dimensional sensory data (like images or text) into compact representations or “latent states.”

- Dynamics Model: Predicts how latent states change over time. In other words, it learns the laws of motion of its environment.

- Policy/Planner: Uses predicted future states to determine optimal actions.

This architecture lets an AI system “visualize” possible futures internally and select the best strategy before executing any action, much as humans visualize a move before making it.
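To make the three components concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the toy 1-D world, the function names (`encode`, `predict_next`, `plan`), and the hand-written rules standing in for what would normally be learned neural networks.

```python
from itertools import product

def encode(observation):
    """Perception encoder: compress raw readings into one latent number.
    Toy assumption: the latent state is the mean of noisy position readings."""
    return sum(observation) / len(observation)

def predict_next(latent, action):
    """Dynamics model: predict the next latent state for a given action.
    Toy assumption: the position simply shifts by the action."""
    return latent + action

def plan(latent, goal, actions=(-1.0, 0.0, 1.0), horizon=3):
    """Planner: imagine every action sequence of length `horizon` and
    return the first action of the sequence that ends closest to the goal."""
    best_first, best_dist = None, float("inf")
    for seq in product(actions, repeat=horizon):
        z = latent
        for a in seq:
            z = predict_next(z, a)  # imagined step, no real interaction
        dist = abs(goal - z)
        if dist < best_dist:
            best_first, best_dist = seq[0], dist
    return best_first

z0 = encode([1.9, 2.0, 2.1])          # latent position near 2.0
first_action = plan(z0, goal=5.0)     # moving right three times reaches 5.0
print(first_action)
```

The planner here searches exhaustively, which only works for tiny action sets and short horizons. Real systems replace it with sampling, tree search, or a learned policy.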

Why Prediction Precedes Intelligence

Many researchers consider world models a pillar of AGI. Instead of leaving intelligence reactive to inputs, world models make it proactive: it anticipates the world before things happen.

Let’s break that down with an example.

Say we’re training an AI to drive. Without a world model, it learns a rule from data: “When I see a red light, I stop.”

With a world model, it learns why it stops: “If I don’t, the probability of a collision rises sharply.” That difference, between rule-following and consequence-understanding, is the foundation of reasoning.

For example, DeepMind’s MuZero achieved superhuman performance in Go and Atari games without being told the rules. It learned its own latent dynamics model instead: a self-taught internal game of imagination.
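The gap between rule-following and consequence-understanding in the driving example can be sketched in a few lines. The probabilities, costs, and function names below are invented for illustration, and the lookup table stands in for a learned predictive model.

```python
def reactive_policy(light):
    """Rule-following: a fixed observation-to-action mapping learned from data."""
    return "stop" if light == "red" else "go"

# Hypothetical learned model of P(collision | light, action). Made-up numbers.
COLLISION_PROB = {
    ("red", "go"): 0.60, ("red", "stop"): 0.01,
    ("green", "go"): 0.02, ("green", "stop"): 0.10,
    ("yellow", "go"): 0.30, ("yellow", "stop"): 0.02,
}
COLLISION_COST = 100.0                    # assumed penalty for a crash
DELAY_COST = {"go": 0.0, "stop": 1.0}     # assumed small penalty for waiting

def expected_cost(light, action):
    """Consequence-understanding: score an action by its predicted outcome."""
    return COLLISION_PROB[(light, action)] * COLLISION_COST + DELAY_COST[action]

def model_based_policy(light):
    """Pick the action whose predicted consequences are cheapest."""
    return min(("go", "stop"), key=lambda a: expected_cost(light, a))

for light in ("red", "green", "yellow"):
    print(light, reactive_policy(light), model_based_policy(light))
```

Both policies agree on red and green. They differ on yellow, a case the rule never covered: the reactive policy falls through to "go", while the model-based one stops because it can evaluate what is likely to happen.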

From Planning to Dreaming: The Weird Power of Latent Simulation

Once a world model can predict its environment, it can do something even stranger. It can hallucinate reality.

In the World Models experiments, the agent can eventually be trained without the real game engine at all. It learns to ‘dream’ new frames in latent space: an imagined world accurate enough that it can keep training itself inside it.

This is not science fiction; this is a measurable efficiency leap.

Training in a simulated internal world can be orders of magnitude faster and cheaper than interacting with a physical one.

Recent work by Google DeepMind and Meta AI reportedly shows that dream-based training (also called latent imagination training) can reduce reinforcement learning compute costs by as much as 80%.

Here’s the simple logic: if the model-imagined world is accurate, there’s no need to test everything in the messy real world.

That is how robots learn to walk faster, how drones learn to fly without bumping into things, and how generative models keep predicting results without constant retraining.
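A rough sketch of the idea, under heavy simplifying assumptions: the agent spends a small, fixed budget of real interactions to fit a dynamics model, then compares candidate behaviors entirely inside that model. The `RealEnv` class, the tabular model, and the two hand-written policies are all hypothetical, and the sketch selects between fixed policies rather than doing the gradient-based training real systems use.

```python
import random

class RealEnv:
    """Stand-in for the expensive real world: 1-D position, actions -1 or +1."""
    def __init__(self):
        self.real_steps = 0

    def step(self, x, action):
        self.real_steps += 1
        return x + 2 * action  # true dynamics, unknown to the agent

def learn_dynamics(env, n_samples=20, seed=0):
    """Fit a tiny dynamics model: average displacement observed per action."""
    rng = random.Random(seed)
    totals, counts = {-1: 0.0, 1: 0.0}, {-1: 0, 1: 0}
    for i in range(n_samples):
        x = rng.randint(-10, 10)
        a = -1 if i % 2 == 0 else 1  # alternate so both actions are sampled
        totals[a] += env.step(x, a) - x
        counts[a] += 1
    return {a: totals[a] / counts[a] for a in totals}

def dream_return(model, policy, start, horizon=10):
    """Roll a policy forward inside the learned model only (no real steps)."""
    x, total = start, 0.0
    for _ in range(horizon):
        x = x + model[policy(x)]
        total -= abs(x)  # reward: stay close to the origin
    return total

def toward_origin(x):
    return -1 if x > 0 else 1

def away_from_origin(x):
    return 1 if x > 0 else -1

env = RealEnv()
model = learn_dynamics(env)
candidates = {"toward_origin": toward_origin, "away_from_origin": away_from_origin}
scores = {name: dream_return(model, p, start=8) for name, p in candidates.items()}
best = max(scores, key=scores.get)
print(best, scores, "real steps used:", env.real_steps)
```

The real-step counter stays at 20 no matter how many imagined rollouts are evaluated, which is where the savings come from. The catch is that the scores are only as trustworthy as the learned model.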

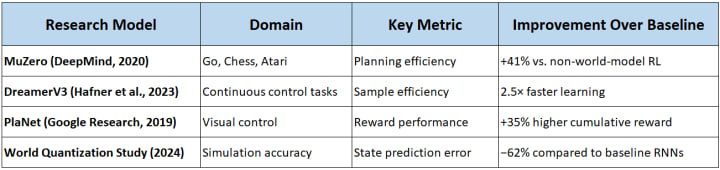

Data: The Quantitative Edge of World Models

Some key research metrics illustrate how impactful world models are becoming:

What these results show is that world models aren’t a philosophical abstraction — they’re an optimization weapon.

They make AI cheaper, faster, and more self-correcting.

Where It Gets Practical

Meanwhile, in applied AI, world models are working their way into everything from autonomous robotics and virtual assistants down to mobile app ecosystems.

For example, firms involved in mobile app development in Orlando are piloting small world models that allow apps to infer user intent.

Imagine an AI keyboard that not only predicts your next word but also follows the flow of your thoughts based on recent context. That would be a micro world model: a predictive simulation of your semantic space.

Similarly, in maintenance and logistics applications, world models run production chains as latent simulations to detect failures or delays before they physically manifest.
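The maintenance case can be illustrated with a deliberately simple forward simulation. This sketch assumes a single temperature sensor, a linear drift estimated from recent readings, and a fixed failure threshold. All names and numbers are hypothetical, and a production system would use a far richer learned model.

```python
def estimate_drift(readings):
    """Estimate the per-step change as the mean difference between readings."""
    diffs = [b - a for a, b in zip(readings, readings[1:])]
    return sum(diffs) / len(diffs)

def steps_until_failure(temperature, drift_per_step, limit, max_horizon=100):
    """Simulate forward and return the first step at which the predicted
    temperature exceeds `limit`, or None if that never happens in the horizon."""
    for step in range(1, max_horizon + 1):
        temperature += drift_per_step
        if temperature > limit:
            return step
    return None

readings = [70.0, 71.0, 72.0, 73.0]   # recent sensor values (made up)
drift = estimate_drift(readings)
eta = steps_until_failure(readings[-1], drift, limit=80.0)
print(f"drift={drift}, predicted failure in {eta} steps")
```

The point is the shape of the computation rather than the model itself: the failure is flagged from a simulated future, several steps before any real threshold is crossed.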

A Philosophical Question: Do Models Dream?

When researchers say that their model dreams, it is more than a loose metaphor.

The latent simulations taking place inside the model are dreams in a functional sense: internally generated sequences that closely resemble real experience and can therefore be used for learning.

This mirrors a finding from cognitive neuroscience: humans are also thought to consolidate memories and update predictive models during REM sleep.

Some AI researchers share with neuroscientists the belief that this dreaming mechanism is instrumental to generalization and creativity, in brains as well as in networks.

It’s an interesting overlap: we and our machines both build world models and imagine possible futures in order to survive.

Challenges: The Reality Gap

Nevertheless, the development of world models faces a principal obstacle: the reality gap.

Simulated predictions often break down in real physical situations or under unexpected conditions.

Consider a robot trained to walk inside its own dream. It walks confidently in simulation but falls on a slippery floor, because physical reality differs from what it simulated.

One proposed way to bridge this gap is a hybrid architecture: learned latent models combined explicitly with physical or symbolic prior knowledge.

Other efforts include Hybrid World Models (HWM, 2024), a physics-informed neural network that learns hybrid generative representations for better transferability to the real world.
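One common way to read "hybrid" is a known physics prior plus a small learned correction. The sketch below assumes that reading and is not taken from any specific paper. The friction values, the single-coefficient residual, and all function names are hypothetical.

```python
def physics_prior(velocity, dt=0.1, friction=0.5):
    """Known physics with an assumed nominal friction coefficient."""
    return velocity - friction * velocity * dt

def real_step(velocity, dt=0.1, friction=0.2):
    """Stand-in for reality: the floor is more slippery than the prior assumes."""
    return velocity - friction * velocity * dt

def fit_residual(transitions):
    """Least-squares fit of one coefficient k so that
    next_v ~= physics_prior(v) + k * v on the observed transitions."""
    num = sum(v * (v_next - physics_prior(v)) for v, v_next in transitions)
    den = sum(v * v for v, _ in transitions)
    return num / den

def hybrid_model(velocity, k):
    """Physics prior plus the learned correction term."""
    return physics_prior(velocity) + k * velocity

# A handful of real measurements is enough to fit the one-parameter residual.
transitions = [(v, real_step(v)) for v in (1.0, 2.0, 3.0)]
k = fit_residual(transitions)

v = 4.0  # a velocity not seen during fitting
print("prior :", physics_prior(v))
print("hybrid:", hybrid_model(v, k))
print("real  :", real_step(v))
```

The prior alone predicts too much slowdown on the slippery floor, while the corrected model matches the real step closely. In this toy setup the residual is exactly linear, so the fit is perfect, which real physics rarely allows.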

Where It’s Headed: The Rise of Internal Simulators

The next wave of AI systems, agentic models, is already being developed with world models as the cognitive core. These agents are multimodal, promptable simulators that run internal simulations and select plans autonomously.

In reinforcement learning, robotics, and autonomous AI planning, the future is not brute-force computation. The future is efficient imaginators.

Copilots, tutors, or logistics bots running tiny simulations inside every decision they make: that’s the horizon of intelligent planners, and it may be closer than we think.

Conclusion: Why World Models Matter

The future of AI is not just a story about scale. It is a story about simulation — how deeply machines can internalize, predict, and test the world inside themselves before acting upon it.

World models are what turn the raw power of compute into actual thought.

They are the silent engine behind planning, prediction, and whatever creativity machines can synthesize.

And perhaps, if intelligence really is the capacity to imagine consequences before they appear in reality, then dreaming, for humans and machines alike, isn’t mere poetry.

It’s a necessity.

FAQs: How AI Systems Use World Models to Predict, Plan, and Dream

1. What is a world model in artificial intelligence?

A world model is an internal simulation that lets an AI system predict the outcomes of its actions before performing them. It encodes the environment and how things in it change, interact, and move, so that the system can reason and plan without direct trial-and-error experience.

2. How do world models differ from traditional machine learning models?

Most common ML architectures are fundamentally reactive: they map perception straight through a learned transformation into an output or action. World models are explicitly designed to go beyond that mapping. They learn how the environment evolves, so the system can simulate outcomes before acting.

3. Why do researchers believe that world models are essential components of general intelligence?

General intelligence requires the ability to plan, imagine, and reason about new situations. World models support these abilities at their foundation. By capturing causal structure and temporal consistency, they help machines "think" about what might happen next in dynamically changing environments and select the best available action, much as humans do.

4. What are a few real-world applications of world models?

- Autonomous systems: path planning for self-driving cars, drones, and robots.

- Gaming and simulation: training AI agents inside imagined environments.

- Forecasting: predicting outcomes in logistics, weather, or manufacturing.

- Mobile and on-device AI: personal assistants or predictive keyboards that anticipate user intent (relevant to mobile app development in Orlando and similar innovation hubs).

5. How do world models make AI more efficient?

World models can substantially reduce the data and computation required, because they replace external real-environment trials with internal simulations. For example, reinforcement learning agents trained on imagined trajectories can match agents trained on real interaction at a fraction of the cost.

6. What is “dreaming” in the context of AI models?

In this context, "dreaming" is shorthand for having the AI generate synthetic future scenarios internally and train on them. Technically, it enables latent imagination training: creating and learning from experience virtually, without real-world feedback. This is the core mechanism in systems such as DreamerV3, and it is closely related to how MuZero plans with a learned model.

7. What are the current limitations of world models?

The main challenge is the reality gap — simulated predictions may diverge from actual physics or unseen conditions. Also, training accurate dynamics models requires vast data diversity, and errors in imagination can compound over time, leading to unstable planning.

8. How are world models influencing the next generation of AI systems?

They’re at the core of agentic AI: systems that can reason, plan, and act autonomously, not merely by responding to a prompt but by running internal simulations and testing different strategies before selecting one to execute. Future ‘agent’ AI, from robotics down to mobile assistants, is likely to lean on an embedded world model for contextual decision-making.
