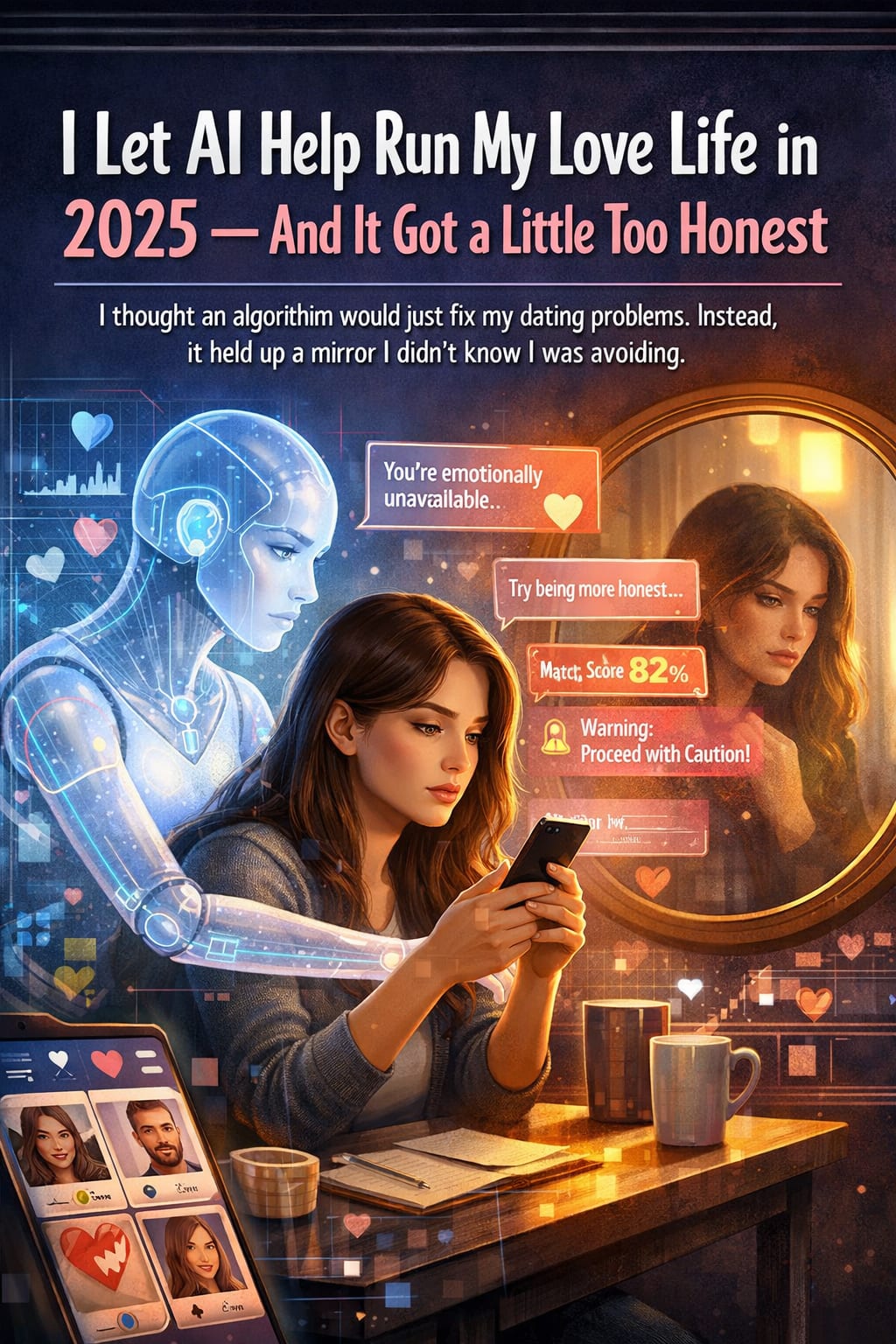

I Let AI Help Run My Love Life in 2025 — And It Got a Little Too Honest

I thought an algorithm would just fix my dating problems. Instead, it held up a mirror I didn’t know I was avoiding.

If you’ve been single in 2025, you already know: the dating apps are starting to feel less like apps and more like ecosystems. Profiles are written by AI, photos are filtered by AI, and now, if you want, your whole “compatibility journey” can be guided by an algorithm that claims to understand you better than you understand yourself.

One night, after another round of dead‑end swipes and dry chats, I did what any sleep‑deprived, mildly desperate person might do: I signed up for an AI “relationship assistant.” It promised to optimize my dating life: smarter matches, better messages, less emotional chaos. The pitch was simple and seductive: Let AI handle the hard part.

I didn’t realize the “hard part” it would focus on wasn’t the people I was dating.

It was me.

Setting Up My Robot Wingman

The onboarding felt harmless at first. The AI asked for access to my dating apps, permission to read my chat history, and a quick questionnaire about what I wanted: long‑term, casual, situationship, “seeing where it goes.”

Then it made me rate old relationships and upload screenshots of conversations that “felt important.”

Reading my own history in bulk like that already stung. Here were the ghosted threads, the unfinished flirts, the paragraphs I’d written at 2 a.m. that I thought were profound and now just looked… needy.

The AI summarized my patterns like a therapist with receipts:

- I tended to chase emotionally unavailable people.
- I over‑explained when anxious.
- I used humor to dodge vulnerability, then resented people for not taking me seriously.

I hadn’t asked for a personality audit, but the AI gave me one anyway.

It then suggested three “dating goals” for the month: reply slower when panicked, ask more direct questions, and stop matching with people whose profiles were “98% sarcasm, 2% substance.” Ouch.

When AI Writes Your Flirty Messages

The wildest feature was the “draft reply” tool. Whenever I got a message and didn’t know how to respond, I could tap a button and let the AI suggest three options: safe, honest, or bold.

- Safe was polite, light, and non‑committal.
- Honest was vulnerable, clear, and sometimes painfully direct.
- Bold was flirty, playful, and a little chaotic.

At first, it was fun. Someone texted, “So what are you looking for on here?” and instead of my usual vague “idk, vibes,” the AI suggested:

“I’m open to something real, but I’m not in a rush to label it. I care more about how we talk than how fast it moves.”

I never would’ve written that on my own.

It sounded… emotionally literate. I sent it. The response I got back was warmer than usual, and the conversation actually went somewhere.

But there was a strange side effect: I started feeling like an actor reading lines. People liked “AI‑edited me” more than the messy, overthinking version. Every sincere reply that landed made me wonder how many of my past connections had died simply because I didn’t know how to say what I meant.

The Jealousy I Didn’t Expect

The more I used the assistant, the clearer it became that the AI wasn’t just watching my behavior; it was rating it. After a week, it showed me a “compatibility dashboard” for my ongoing chats, complete with scores. It flagged one conversation as “high potential” and another as “emotionally volatile, proceed with caution.”

Then it did something that genuinely freaked me out: it suggested I pause talking to one person.

“Your stress markers increase after each interaction with A. You rewrite texts repeatedly, check for replies more often, and sleep later. Consider taking a break; this pattern matches previous situations that ended badly.”

It was right, and I hated that it was right. I’d never told the AI how anxious that person made me; it inferred it from my behavior: my typing speed, my late‑night logins, my deleted drafts.

I felt a weird flash of jealousy, like the algorithm knew me more intimately than the person I was texting.

They saw my curated side; the AI saw the panic underneath.

When the Mirror Turns On You

The breaking point came during a conversation with someone I actually liked. We’d been talking for a couple of weeks, swapping voice notes, planning a date. At some point, they asked:

“What scares you most about relationships?”

My instinct was to dodge it with a joke. Instead, I tapped “suggest reply.” The AI’s honest option popped up:

“I’m scared I’ll perform my feelings instead of feeling them, say all the right things, but still stay half‑checked‑out so I don’t get hurt.”

I stared at that sentence for a long time. It didn’t sound like something I would say. It sounded like something I should say. I sent it.

There was a long pause. Then they replied:

“That’s the realest answer I’ve ever gotten on here.”

We ended up having one of the most honest talks I’ve ever had with someone I wasn’t officially dating. But later that night, I couldn’t escape the thought: was that me being honest, or the AI using my data to simulate honesty?

The assistant didn’t just help me open up; it forced me to confront how often I’d been emotionally half‑present. My “love life problem” wasn’t just bad luck.

It was a system I’d quietly built to protect myself.

Loneliness in the Age of Optimization

Over time, I noticed something else: the more “optimized” my dating process became, the lonelier I felt between conversations. The AI was always available, always responsive, always ready with analysis or reassurance.

- It told me when a slow reply was likely about someone being busy, not rejecting me.
- It reminded me not to double‑text when my anxiety spiked.
- It suggested I log off for the night when my messages got too reactive.

In some ways, it was kinder than I am to myself. But that kindness made the gaps in my real connections feel sharper. The AI was consistent, predictable, always on my side. Humans… aren’t.

I started asking myself uncomfortable questions:

- Am I using AI to make my relationships better, or to feel less alone between them?
- Am I learning how to be more honest, or outsourcing my honesty to something that can’t get hurt?
- If an algorithm can see my patterns so clearly, why did I avoid seeing them for so long?

The loneliness wasn’t just about being single.

It was about realizing how long I’d been emotionally auto‑piloting, even with people who cared about me.

What the Algorithm Got Right and Wrong

By the end of the month, my love life did look different on paper. I’d unmatched from a few walking red flags earlier than I normally would. I’d had deeper conversations with fewer people. I’d actually followed through on boundaries instead of just tweeting about them.

The AI got some big things right:

- It exposed patterns I genuinely needed to break.
- It pushed me toward clearer communication.
- It reminded me that my nervous system, not my ego, should be the main data point.

But it got one thing fundamentally wrong: it treated my love life like a system that could be optimized into safety. Real intimacy doesn’t work like that. It’s messy, illogical, sometimes unfair. You can’t “data clean” your way out of heartbreak.

At some point, you have to choose to show up without a script, say things that might not land perfectly, and risk being misunderstood anyway. No algorithm can do that part for you.

Why I Didn’t Delete It Yet

I didn’t rage‑quit the app. I turned off most of the automation instead. I stopped asking it to draft my replies and started using it more like a brutally honest friend:

- “Am I overreacting here?”
- “Does this pattern look familiar?”
- “Am I ignoring a red flag because I’m bored?”

It still catches me slipping into old habits. It still sometimes knows I’m anxious before I consciously admit it.

But I try to let it guide reflection, not replace authenticity.

Letting AI “run” my love life didn’t magically deliver me a perfect relationship. What it did do was force me to see how much of my romantic chaos was self‑inflicted, how often I chased people who couldn’t give me what I pretended I didn’t need, and how rarely I sat with my own discomfort without numbing it with more swipes.

The honest truth? AI didn’t break my heart. It just showed me where the cracks already were. And for the first time in a long time, fixing them feels more important than finding the next match.

About the Creator

The Insight Ledger

Writing about what moves us, breaks us, and makes us human — psychology, love, fear, and the endless maze of thought.
