Mobile Apps in 2026: How AI Agents Are Replacing Traditional Interfaces

On-device intelligence and predictive workflows are eliminating the tap-and-swipe model that defined mobile apps for two decades.

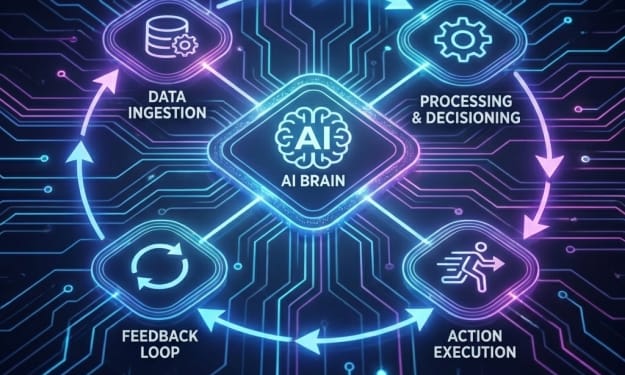

The mobile landscape in early 2026 has evolved past generative AI novelty. We no longer discuss chatbots integrated into sidebars. The industry has pivoted toward "Agentic Native" architecture. This represents a fundamental shift. Apps are no longer static tools. They are proactive agents. These agents execute complex workflows with minimal user intervention.

For CTOs and product leads, the stakes have changed. Users expect apps to understand intent, not simply respond to taps. Engineering teams face a critical transition: they must move from traditional graphical user interfaces (GUI) to intent-based user interfaces (IUI). This evolution requires rethinking how mobile software is built. It demands new approaches to testing and deployment.

The Current State of Mobile Engineering

By 2026, Neural Processing Units (NPUs) dominate flagship devices. These are specialized chips that handle AI tasks efficiently. On-device AI has become the standard. The industry is shifting away from massive cloud-dependent Large Language Models (LLMs). Instead, we see efficient Small Language Models (SLMs). These models run locally on your device. They keep data on the device, which protects privacy. They also remove the network round trip, so responses feel near-instant.

The primary challenge has shifted. Adding AI is no longer the question. Managing thermal and battery demands is now critical. Constant local inference creates heat. It drains batteries. Engineering teams must maintain a fluid user experience despite these constraints.

10 Ways AI Is Shaping Mobile Development (2026-2027)

1. The Rise of Intent-Based Interfaces (IUI)

Traditional button grids are becoming obsolete. Modern apps use "liquid" interfaces. These interfaces reshape based on predicted user needs. Instead of navigating three menus to file an expense report, users simply act. They say or type "file my flight receipt." The app UI dynamically generates the necessary confirmation fields. This reduces time-to-task by 40% in enterprise pilots. That means tasks that took 5 minutes now take 3 minutes.
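As a rough illustration of the idea, the sketch below maps a typed request to the confirmation fields an app would still need to show. Everything in it is an assumption for illustration: the `INTENT_FIELDS` catalog, the keyword rules standing in for an on-device intent model, and the function names are hypothetical, not part of any real framework.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Field:
    name: str
    label: str
    required: bool = True


# Hypothetical catalog: each intent lists the fields the UI must confirm.
INTENT_FIELDS: Dict[str, List[Field]] = {
    "file_expense": [
        Field("amount", "Amount"),
        Field("date", "Date"),
        Field("category", "Category"),
        Field("receipt", "Receipt image", required=False),
    ],
}

# Hypothetical keyword rules standing in for a real intent classifier.
KEYWORDS = {"file_expense": ("receipt", "expense")}


def classify_intent(utterance: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the utterance."""
    text = utterance.lower()
    for intent, words in KEYWORDS.items():
        if any(word in text for word in words):
            return intent
    return None


def build_confirmation_form(utterance: str, known: Optional[dict] = None) -> Optional[dict]:
    """Describe which required fields the UI still has to ask for."""
    intent = classify_intent(utterance)
    if intent is None:
        return None
    known = dict(known or {})
    ask_for = [f.name for f in INTENT_FIELDS[intent]
               if f.required and f.name not in known]
    return {"intent": intent, "prefilled": known, "ask_for": ask_for}
```

Calling `build_confirmation_form("file my flight receipt", {"category": "travel"})` would leave only the amount and date to confirm, which is the kind of shortened path the 40% figure describes.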

2. On-Device SLMs for Zero-Latency Privacy

Privacy regulations in 2026 make cloud processing a liability. Sensitive data requires special handling. Developers now deploy fine-tuned SLMs directly on devices. These models handle personal data locally. Health metrics stay on your phone. Financial history never leaves your device. This architecture satisfies GDPR-2 requirements. It also delivers instant responsiveness.

3. Autonomous QA and Self-Healing Code

Manual regression testing cannot keep pace anymore. Rapid deployment cycles demand better solutions. AI agents now operate within CI/CD pipelines. They write their own unit tests. They identify edge cases automatically. Advanced environments use "self-healing" code. This code monitors production logs. It generates pull requests to fix bugs automatically. Developers see fixes before they see problems.

4. AI-Driven Asset Optimization for Spatial Computing

AR/VR adoption continues to mature. Mobile apps must handle 3D assets without crashing. AI models optimize 3D meshes and textures in real-time. High-fidelity spatial experiences can now run on mid-range hardware. The system dynamically adjusts polygon counts. It responds to the device's current thermal headroom.
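One simple way to picture the polygon adjustment is a linear level-of-detail rule. The sketch below is an assumption, not a description of any shipping engine: the 0.0 to 1.0 headroom scale (1.0 meaning cool, 0.0 meaning about to throttle), the `min_fraction` floor, and the function name are all hypothetical, and real OS thermal APIs may use a different scale.

```python
def target_polygon_count(base_polygons: int,
                         thermal_headroom: float,
                         min_fraction: float = 0.25) -> int:
    """Scale a mesh's polygon budget linearly with thermal headroom.

    thermal_headroom is assumed to be 1.0 when the device is cool and
    0.0 when it is about to throttle; values outside [0, 1] are clamped.
    """
    headroom = max(0.0, min(1.0, thermal_headroom))
    # Never drop below min_fraction of the original detail.
    fraction = min_fraction + (1.0 - min_fraction) * headroom
    return max(1, int(base_polygons * fraction))
```

A production system would likely smooth the input over time so that detail does not visibly flicker as temperature readings fluctuate.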

5. Hyper-Personalization via Local Model Tuning

We have moved beyond simple recommendations. Apps now use Federated Learning. This technique trains a model on each device using your specific behavior and shares only model updates, never raw data, with a central server. A fitness app learns your unique gait. It understands your fatigue patterns. It adapts coaching logic locally. Your biometric data never leaves your device. The parent company never sees it.
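To make the data flow concrete, here is a minimal sketch of federated averaging, one common aggregation scheme for this kind of training. It assumes models are plain lists of floats and that each device reports only its updated weights and a sample count; the function names are hypothetical.

```python
from typing import List, Sequence


def local_update(weights: Sequence[float],
                 gradient: Sequence[float],
                 lr: float = 0.1) -> List[float]:
    """One gradient step computed on the device from private data."""
    return [w - lr * g for w, g in zip(weights, gradient)]


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Combine per-device weights, weighted by each device's sample count.

    Only weights and counts reach the server; the raw data that produced
    them stays on each device.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]
```

Model updates can still leak information in some settings, so real deployments often add safeguards such as secure aggregation on top of this basic scheme.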

6. Predictive Energy and Thermal Management

AI integrates deeply into OS-level scheduling. Developers use AI-driven profilers. These tools predict which features cause thermal throttling. Apps can then proactively scale back or defer non-essential tasks. This strategy extends active battery life by 15%. On a device that gets roughly 3 hours 20 minutes of active use per day, that works out to about 30 extra minutes.
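The scheduling idea can be sketched as a small budgeting routine. This is an illustration under stated assumptions: the `predicted_heat` values would come from a profiler, the unit is arbitrary, and the `Task` type and `plan_tasks` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Task:
    name: str
    essential: bool
    predicted_heat: float  # hypothetical profiler estimate, arbitrary units


def plan_tasks(tasks: List[Task], heat_budget: float) -> Tuple[List[str], List[str]]:
    """Run essential tasks always; fit the cheapest optional tasks into the budget."""
    run: List[str] = []
    deferred: List[str] = []
    used = 0.0
    for task in tasks:
        if task.essential:
            run.append(task.name)
            used += task.predicted_heat
    optional = sorted((t for t in tasks if not t.essential),
                      key=lambda t: t.predicted_heat)
    for task in optional:
        if used + task.predicted_heat <= heat_budget:
            run.append(task.name)
            used += task.predicted_heat
        else:
            deferred.append(task.name)
    return run, deferred
```

With a UI render task costing 3.0, a feed prefetch costing 2.0, and a photo reindex costing 4.0, a budget of 6.0 would run the first two and defer the reindex until the device cools.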

7. Real-Time Multimodal Interaction

Apps treat voice, vision, and gesture as first-class inputs. AI models process these simultaneously. A user points their camera at a broken appliance. They ask, "How do I fix this?" The app analyzes the video feed immediately. It recognizes the model. It overlays AR instructions in one fluid motion.

8. Automated Compliance and Privacy Guardrails

Global AI regulations are tightening. Developers use AI-based "compliance agents" to scan codebases. These tools check for data leakage risks. They verify third-party SDKs aren't accessing restricted sensors. They confirm data storage locations comply with regional laws. This is essential for companies operating in multiple jurisdictions. Compliance agents reduce legal exposure significantly.
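The sensor check in particular reduces to a set comparison. The sketch below assumes a hypothetical manifest that lists what each SDK requests and an allowlist of approved sensor access; the sensor names, data shapes, and `audit_sdks` function are illustrative and do not reflect any real scanner.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical set of sensors that need explicit approval.
RESTRICTED_SENSORS = {"microphone", "precise_location", "camera"}


def audit_sdks(sdk_manifest: Dict[str, Iterable[str]],
               allowed: Dict[str, Iterable[str]]) -> List[Tuple[str, str]]:
    """Return (sdk, sensor) pairs where a restricted sensor lacks approval."""
    findings: List[Tuple[str, str]] = []
    for sdk, requested in sorted(sdk_manifest.items()):
        approved = set(allowed.get(sdk, ()))
        unapproved = (set(requested) & RESTRICTED_SENSORS) - approved
        for sensor in sorted(unapproved):
            findings.append((sdk, sensor))
    return findings
```

An AI-based agent would add value upstream of this check, for example by inferring what an SDK actually accesses from its code rather than trusting a declared manifest.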

9. Cross-Platform Logic Unification

AI tools have solved the "feature parity" gap. Modern agents translate complex business logic between platforms. They convert Swift to Kotlin or vice versa. They maintain platform-specific optimizations. Smaller teams can now maintain high-quality native codebases. They don't need to double their headcount.

10. Proactive Maintenance via Synthetic User Testing

Before releasing features, developers run synthetic user simulations. Thousands of AI agents interact with the app. Each agent has different simulated personas. Each operates under different device constraints. This identifies UX friction points. It catches performance bottlenecks that beta testing misses.
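A toy version of such a simulation shows how friction points surface. This sketch replaces AI agents with simple rule-based personas, and every value in it is made up for illustration: the `FLOW` step timings, the patience thresholds, and the device slowdown multipliers are all hypothetical.

```python
from collections import Counter
from itertools import product
from typing import Optional

# Hypothetical user flow: (step name, base seconds on a fast device).
FLOW = [("open_app", 1.0), ("login", 2.0), ("search", 1.5), ("checkout", 3.0)]
# Hypothetical personas: total seconds each will tolerate before quitting.
PERSONAS = {"patient": 12.0, "hurried": 6.0}
# Hypothetical device classes: latency multiplier applied to every step.
DEVICES = {"flagship": 1.0, "low_end": 2.0}


def simulate(patience: float, slowdown: float) -> Optional[str]:
    """Return the step where the simulated user abandons, or None if they finish."""
    elapsed = 0.0
    for step, base_seconds in FLOW:
        elapsed += base_seconds * slowdown
        if elapsed > patience:
            return step
    return None


def friction_report() -> Counter:
    """Count abandonments per step across every persona and device pairing."""
    drops: Counter = Counter()
    for patience, slowdown in product(PERSONAS.values(), DEVICES.values()):
        step = simulate(patience, slowdown)
        if step is not None:
            drops[step] += 1
    return drops
```

Even with four pairings, the report points at checkout as the step where most simulated users give up, which is the signal a team would act on before release.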

Practical Implementation

Transitioning to an AI-native mobile strategy works best in three phases:

- Phase 1 (Audit): Identify high-friction tasks in your current UI. These are prime candidates for intent-based automation. Look for repetitive multi-step processes.

- Phase 2 (On-Device Prep): Evaluate which data can move to local SLMs. This reduces server costs. It increases user trust dramatically.

- Phase 3 (Agent Integration): Build a workflow engine instead of more screens. The AI calls upon this engine to execute tasks.
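For Phase 3, a workflow engine can be as small as a registry of named step lists that an agent invokes by name. The sketch below is one possible shape, not a prescribed design: `WorkflowEngine`, the step functions, and the receipt format are all hypothetical.

```python
from typing import Callable, Dict, List

Step = Callable[[dict], dict]


class WorkflowEngine:
    """Registry of named workflows; each workflow is an ordered list of steps."""

    def __init__(self) -> None:
        self._workflows: Dict[str, List[Step]] = {}

    def register(self, name: str, steps: List[Step]) -> None:
        self._workflows[name] = list(steps)

    def run(self, name: str, context: dict) -> dict:
        if name not in self._workflows:
            raise KeyError(f"unknown workflow: {name}")
        state = dict(context)  # copy so the caller's dict is not mutated
        for step in self._workflows[name]:
            state = step(state)
        return state


def extract_amount(state: dict) -> dict:
    # Assumes the receipt text contains exactly one "$<number>" at the end.
    return {**state, "amount": float(state["receipt_text"].split("$")[1])}


def categorize(state: dict) -> dict:
    is_travel = "flight" in state["receipt_text"].lower()
    return {**state, "category": "travel" if is_travel else "other"}


engine = WorkflowEngine()
engine.register("file_expense", [extract_amount, categorize])
result = engine.run("file_expense", {"receipt_text": "Flight LHR $420.50"})
```

Keeping workflows as data rather than screens means the same engine can serve a voice request, a typed request, or a traditional button without duplicating logic.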

The competitive advantage belongs to those who reduce required taps.

Risks and Mitigation

AI introduces new failure modes. "Agentic drift" occurs when agents misinterpret user intent. This leads to unintended actions.

Example: A user tells a travel app to "book a flight to London." The agent sees an expensive direct flight. It also sees a cheaper option with a 10-hour layover. The agent chooses the cheaper option to "save money." It ignores the user's historical preference for direct travel. The user notices only after the refund window closes.

Mitigation: Always implement "Confirm Intent" for high-stakes actions. This includes financial transactions. It includes irreversible data changes. Monitor NPU usage as closely as memory usage. In 2026, AI-driven battery drain is a leading cause of app uninstalls.
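A "Confirm Intent" gate can be sketched as a thin wrapper around whatever executes the agent's chosen action. The set of high-stakes action kinds, the `Action` type, and the `execute` function below are assumptions for illustration; a real app would route `confirm` to a user-facing prompt.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical action kinds that must never run without explicit approval.
HIGH_STAKES = {"payment", "booking", "delete_data"}


@dataclass
class Action:
    kind: str
    description: str


def execute(action: Action,
            confirm: Callable[[Action], bool],
            perform: Callable[[Action], None]) -> str:
    """Run the action, asking the user first when it is high-stakes."""
    if action.kind in HIGH_STAKES and not confirm(action):
        return "cancelled"
    perform(action)
    return "done"
```

In the London example, the booking would pause at `confirm` with the layover details visible, giving the user a chance to reject the cheaper itinerary before any money moves.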

Key Takeaways

- Move to IUI. The future is intent-based. Reduce navigation menus. Favor dynamic AI-generated workflows instead.

- Prioritize on-device processing. Use SLMs to handle sensitive data locally. This is a 2026 requirement for privacy and latency.

- Invest in autonomous QA. AI-driven testing maintains pace with faster development cycles.

- Monitor energy consumption. Watch NPU usage like you watch memory. Battery drain is a top uninstall reason.

- Maintain human oversight. AI agents are assistants. They are not unchecked decision-makers. Maintain clear boundaries for autonomous actions.
