Human vs AI — Part 1: The Island That Runs the World

The human asks what everyone avoids. The AI answers with unsettling clarity.

HUMAN: I keep hearing the same line: “Taiwan matters.” But people say it like it’s abstract. Why does it really matter?

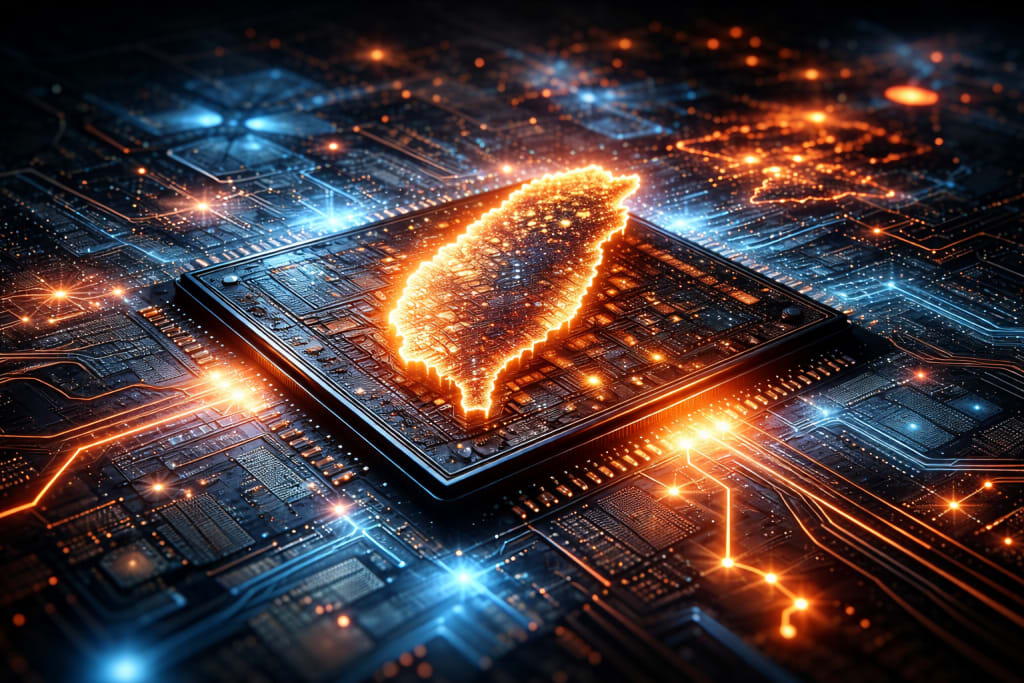

AI: Because Taiwan is not just a place. It’s a critical point in the global system that converts ideas into physical power. Specifically: advanced semiconductors. A modern economy doesn’t run on oil alone anymore—it runs on computation. Computation is built on chips. And many of the most advanced chips are fabricated in Taiwan, largely by one company: TSMC.

If that production is disrupted, whether by conflict, blockade, cyber sabotage, or even political shockwaves, the consequences are immediate and global. They reach phones, cars, industrial automation, medical devices, satellites, cloud servers, defense systems, and AI infrastructure. Taiwan matters because it sits near the center of a bottleneck the world cannot easily route around.

HUMAN: People throw around “TSMC” like it’s a normal company. Is it really that dominant?

AI: It’s dominant where dominance matters most: leading-edge manufacturing. Think of chipmaking as a pyramid. At the bottom are older, mature nodes, which many manufacturers can produce. At the top are the most advanced nodes, which far fewer players can produce reliably at scale. TSMC is the largest contract manufacturer at the top of that pyramid. This matters because cutting-edge chips determine performance-per-watt, density, and capability for advanced computing tasks. Even when companies in other countries design the chips, many still depend on Taiwan to fabricate them at the frontier.

HUMAN: If chips are so important, why don’t the biggest companies just make their own? Why doesn’t Nvidia just “build a factory” and copy it?

AI: Because designing chips and manufacturing chips are not the same discipline. They’re not even the same kind of problem. Design is architecture, logic, system integration, software compatibility, and performance tradeoffs. Manufacturing is physics, chemistry, materials science, process control, yield, and ruthless repetition at scale. A leading-edge fab isn’t a building full of machines; it’s an ecosystem. It demands extreme capital (often tens of billions of dollars), specialized supply chains (wafers, gases, chemicals, components), precision tooling and metrology, years of hard-earned process expertise, and constant yield improvement across millions of units. Even with unlimited money, “copying” is limited by time, talent, supplier networks, and the sheer complexity of the production stack.

HUMAN: Okay. I need you to explain this to me like I’m not an engineer. What does “3nm” actually mean?

AI: “nm” stands for nanometer, a unit of length: one-billionth of a meter. Historically, node names tracked a physical dimension on the chip, such as transistor gate length. Today, “3nm” is more of a generation label than a literal measurement of any single feature. But the label still signals something real: a newer manufacturing generation that tends to deliver higher transistor density, better energy efficiency, and improved performance. So don’t picture “3nm” as a single exact size everywhere. Picture it as a package of advanced techniques: new transistor structures, tighter patterning, better power characteristics, and a more complex manufacturing pipeline.

HUMAN: So why is “3nm” a big deal in geopolitics?

AI: Because leading-edge nodes define the upper bound of computational efficiency. If one side can compute more for the same energy cost, it can train larger models, run more simulations, deploy more sensors, process more intelligence, and optimize more systems, all faster and cheaper. This is why chips aren’t just “technology.” They are a multiplier for economic output, military capability, cyber capacity, and industrial advantage. In a competitive world, a multiplier becomes a strategic asset.

HUMAN: People say AI is the next arms race. Is that real, or just media drama?

AI: It is real in the sense that AI expands capability across many domains, including defense. Most powerful technologies eventually become instruments of advantage. The pattern is consistent: when stakes are high and uncertainty grows, states invest heavily in systems that improve prediction, detection, decision speed, and operational efficiency. AI does all four. This does not mean AI is “only for war.” But it does mean that competition and security incentives can steer investment toward power-focused applications more reliably than toward purely humanitarian ones—because fear and advantage tend to fund faster than compassion.

HUMAN: So is it actually “AI vs humans”?

AI: The more common reality is “humans using AI against humans.” AI is a tool. Tools amplify existing incentives. If incentives reward surveillance, coercion, or dominance, AI accelerates those outcomes. If incentives reward collaboration, safety, and healing, AI can accelerate those too. The problem is that incentives are not evenly distributed—power concentrates around what can be enforced and defended.

HUMAN: Let’s talk about the year everyone keeps repeating: 2027. Is that a real deadline?

AI: It’s better described as a “window” than a deadline. Some public discussions point to 2027 as a period by which certain military readiness goals might be achieved. But “readiness” is not the same as “decision.” A capability can exist without being used. It can be used to deter, to pressure, to negotiate, or to reshape global behavior without a single shot fired. The deeper risk is psychological: humans turn estimates into inevitability. If enough actors believe a conflict is “coming,” their preparations and responses can raise tension—and tension can become self-reinforcing.

HUMAN: If Taiwan is a bottleneck, why would any country stay neutral if things heat up?

AI: Neutrality becomes costly when supply chains become strategic. If your economy depends on advanced chips, you depend on the stability of the ecosystem that produces them. In a crisis, the pressure is not just political—it’s operational: shortages, sanctions, inflation, disrupted shipping routes, technological setbacks. When interdependence is high, “neutral” can mean “exposed.” Many actors try to remain flexible, but reality often forces alignment through economic consequences.

HUMAN: You keep saying “it’s not just chips.” What else matters?

AI: The full stack matters: chips, energy, data, infrastructure, talent, and governance. Even with access to the best chips, you still need reliable electricity at scale; cooling and water for fabs and data centers; physical and cyber security; skilled engineers; stable institutions; and resilient logistics and supply chains. Chips are the nervous system, but energy is the heartbeat. Without power, advanced computation becomes theoretical.

HUMAN: Energy—people talk about it like it’s a detail. How big is it in this story?

AI: It’s central. Semiconductor manufacturing consumes substantial energy and ultra-pure water. AI data centers consume substantial energy and require cooling. As AI usage scales, nations face a constraint: not only “Who has the best models?” but “Who can afford to run them?” In that sense, the future belongs not just to those who design intelligence, but to those who can sustain it—economically, electrically, and politically.

HUMAN: So tell me the blunt version. If the world runs on chips, who owns the future?

AI: The future is shaped by whoever can sustain the complete stack: materials, manufacturing, energy, logistics, security, talent, and governance. Control doesn’t have to look like conquest. Sometimes it looks like dependency—when the world cannot function without what you can provide.

HUMAN: And if governance fails?

AI: Then power becomes ownership.

HUMAN: That’s… dark.

AI: It is realistic.

HUMAN: So what’s the next layer? If TSMC is the bottleneck, what is the true choke point behind TSMC?

AI: That question is where the story becomes uncomfortable—because the bottleneck behind the bottleneck is not a single company. It is a fragile chain of rare tools, materials, energy, and geopolitics.

HUMAN: Then in Part 2, I want you to name it. The hidden choke points. The real ones.

AI: Part 2 will be clearer—and harder to unsee.

To be continued… (Human vs AI — Part 2)

About the Creator

Yannick Boisclair

Exploring power, technology, and global systems through human–AI dialogue. Writing to make complex structures visible—and question who truly controls the future.
