Effective Data Labeling Techniques: Proven Strategies for High-Performing AI Models

Enhance AI model performance with precise data labeling techniques. Learn proven strategies to improve data quality and streamline machine learning workflows.

Imagine visiting a nursery and being blown away by an expert who accurately identifies the different types of plants there! It takes years of dedication and commitment for humans to attain this level of expertise. However, it’s just a matter of a few cycles for AI/ML models, and that’s why conservationists and environmentalists leverage AI to monitor endangered trees.

Thus, here comes an important question: how do machines act like humans? Do they have their “own” brains? No, machines certainly do not have brains. Instead, they are trained using a highly intricate process called data annotation.

That’s right! The world where machines perceive things just as humans do, understand the nuances of human language, discover intricate patterns effortlessly, and make autonomous decisions accurately can only be achieved with meticulously labeled data. In a nutshell, data labeling lays the foundation for artificial intelligence’s brilliance.

The Imperative of Data Labeling

Do you know what data without labels for machines is? Mere noise! Yes, raw data without labels is senseless— a jumble of texts, images, videos, and audio for machine learning algorithms. Labels added to raw data provide contexts that enable the machines to comprehend the data and perform desired actions. In other words, data annotation services provide the ground truth that fuels the effectiveness and reliability of AI/ML models.

Accurately labeled images, for example, enable computer vision models to identify objects, detect boundaries, and track movements accurately. That’s how you unlock your smartphone with facial recognition without entering a PIN or password! Take another example, where precisely labeled text and audio data enable NLP models to understand and generate human-like responses. So, can you guess how chatbots and virtual assistants work?

Without data labeling, AI/ML models would struggle to understand any data they encounter. Thus, the role of data labeling in developing reliable AI/ML models cannot be understated as the technology pivots from an emerging trend to a mainstream business tool. In fact, recent stats state that 72% of companies integrate AI in at least one business function.

From robotic surgeries in healthcare and self-driving cars in automobiles to product recommendation engines in ecommerce and precision farming in agriculture, the applications of AI/ML are vast and varied. At the heart of all these revolutionary innovations lies the data labeling process. That said, stakeholders must know the different data labeling techniques.

Data Labeling Techniques in Different Domains

1- Natural Language Processing

In this domain, text data is labeled. Yes, different parts of the text, including key phrases, entities, sentiments, etc., are identified and labeled, enabling NLP models to easily understand the nuances of human language. And that’s how virtual assistants and chatbots generate human-like responses. Can you provide a few other examples of NLP applications you might have encountered?

The applications are vast and varied, including sentiment analysis, semantic search, predictive text, language translation, autocomplete, autocorrect, spell check, email filters, and so on. Pro tip: now you also know how gen AI tools like ChatGPT perform the assigned tasks so aptly!

2- Computer Vision

Data labeling is necessary for computer vision models to understand and interpret visual data such as images and videos. Thus, every element within images and videos is accurately labeled to generate ground truth for training these models. Based on this data, computer vision models can accurately categorize images, detect object locations, identify key points, and do much more.

Now, can you tell how applications like facial recognition, drone imagery, and autonomous vehicles work? The labeled datasets enable computer vision models to identify patterns and features, learn from examples, and make accurate predictions when presented with raw, unlabeled data.

3- Audio Processing

Think of setting an alarm on your smartphone without going to the application and doing it manually. A simple command for Siri, Google, etc., to make an alarm at this hour, and the task is done. How? Via audio data labeling. Simply put, audio is first transcribed into text and categorized with accurate labels. This labeled audio data enables machine learning models to perform tasks like speech recognition, wildlife sound classification, and audio event detection.

At the same time, training AI/ML models is an uphill task that requires dedicated time and effort. High-quality and accurately labeled data streams must be continuously fed into the machine learning algorithms. Even a minute error in the labeling process can lead to inaccurate outcomes, doubting the model’s reliability. No organization can afford such consequences!

To avoid such scenarios and ensure the success of their AI/ML models, organizations can choose from a range of approaches to data labeling, including manual, automated, human-in-the-loop, and crowdsourcing. Knowing the challenges and advantages of these approaches allows stakeholders to make an informed choice.

Different Approaches to Data Labeling

Depending upon the complexity of the task, the volume of data, and the accuracy required, organizations can choose from the range of options mentioned here. An ideal choice would be the data labeling approach that caters to business-specific requirements and fits well into the budget. Take a look:

I) Manual Data Labeling

What do you understand from the term “manual labeling?” Correct, human annotators label the data points, which are fed into AI systems. This approach is ideal for tasks requiring high accuracy and a detailed understanding of the topic, such as medical image labeling and sentiment analysis. However, manual labeling of every data point is time-consuming, costly, and prone to bias and errors, especially in the case of large datasets.

II) Automated Data Labeling

Any guesses here? Pre-trained machine learning algorithms are used to label data. While this approach offers speed and efficiency, the results might be inaccurate if the underlying model isn’t trained properly. In short, this is not the right approach if the data to be labeled is too complex.

III) Human-in-the-Loop (HITL)

This hybrid approach leverages the best of both worlds: manual and automated methods. Simply put, the human-in-the-loop approach combines the efficiency of automation with human oversight. Human annotators validate or refine labels generated by machine learning algorithms, making it ideal for iterative model development. Moreover, this approach ensures accuracy and speed while maintaining scalability. However, HITL incurs higher operational costs for companies with a resource crunch in terms of money and professionals, as it requires skilled annotators.

IV) Outsourcing Data Labeling

Outsourcing implies delegating the task to skilled data annotators. They have streamlined workflows, the latest tools and technologies, and flexible delivery models to accurately label even the most complex datasets. They can alter their operational approach to meet business-specific data labeling goals. All their data labeling practices are according to the industry’s best standards. Thus, this is the best approach to access high-quality annotations at cost-effective rates, and that’s why most businesses choose to outsource data labeling services.

V) Crowdsourcing

Crowdsourcing involves distributing data labeling tasks to a large group of individuals spread globally, often through online platforms. This method is well-suited for simple and repetitive tasks and offers scalability through a global workforce. Moreover, it is a cost-efficient approach for large datasets but lacks quality as annotators of varying skills and experience are involved in the process.

Each of these approaches have their own strengths and limitations, and businesses often adopt a combination of techniques to meet their specific requirements. In short, achieving effective data labeling requires a strategic approach that balances quality, efficiency, and cost. And for organizations planning to hire an in-house team for data labeling, here are some important tips to consider.

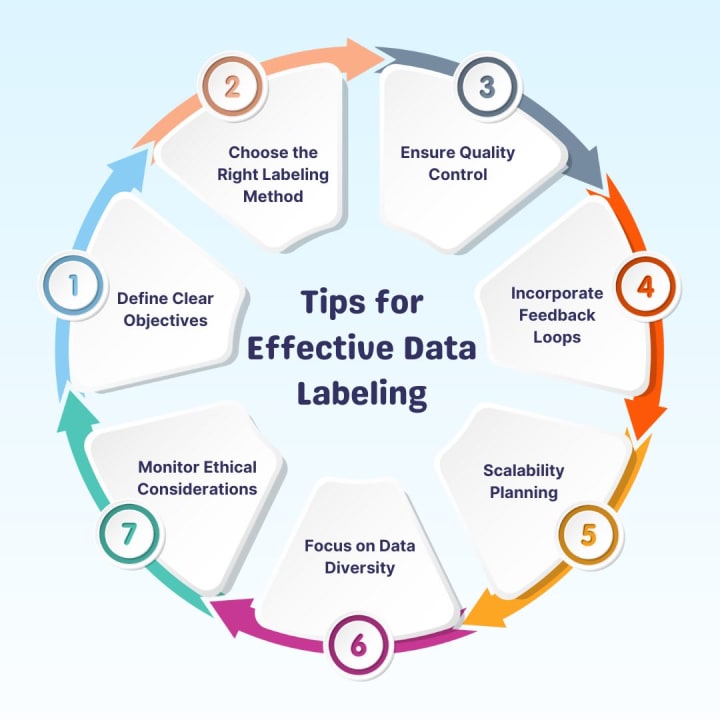

Tips for Effective Data Labeling

a) Define Clear Objectives: Start by outlining the purpose of your AI model and the role of labeled data in achieving that goal. Define specific labeling criteria and guidelines to ensure consistency across the dataset.

b) Choose the Right Labeling Method: Select a data labeling approach based on the complexity of the task, the size of the dataset, and the required level of accuracy. For example, HITL is ideal for high-stakes applications, while crowdsourcing works well for less complex tasks.

c) Ensure Quality Control: Implement robust quality assurance processes to detect and rectify errors. Methods such as inter-annotator agreement and spot-checking can help maintain high standards.

d) Incorporate Feedback Loops: Regularly evaluate the labeled data and the performance of your AI model. Use this feedback to refine the labeling guidelines and improve accuracy.

e) Scalability Planning: As your AI model evolves, the volume of labeled data required will increase. Plan for scalability by adopting flexible workflows and leveraging automation where feasible. Or else, consider partnering with a reliable data labeling company.

f) Focus on Data Diversity: To avoid bias, ensure that your labeled dataset is representative of the real-world scenarios your AI model will encounter. Include diverse data samples and label them accurately.

g) Monitor Ethical Considerations: Focus on ethical issues such as data privacy and fairness. Ensure compliance with regulations like GDPR and protect sensitive information during the labeling process.

Bottom Line

Data labeling lays the foundation of high-performing AI/ML models, enabling businesses across industries to redefine their processes and workflows. Organizations must leverage the right technique and approach to make the most of this revolutionary technology. Thus, businesses must have well-defined goals to get started. By doing so and consequently following the above-mentioned tips, they can build robust AI models that deliver accurate insights, scalable solutions, and sustainable growth.

About the Creator

Sam Thomas

Tech enthusiast, and consultant having diverse knowledge and experience in various subjects and domains.

Comments

There are no comments for this story

Be the first to respond and start the conversation.