Conversation with Macaron Founder Kaijie Chen: RL + Memory Turns Agents into Users' Personal “Doraemon”

Since ChatGPT added memory, its user retention has strengthened significantly. Building on this, agent development has entered a more mature phase: developers once relied mainly on prompting to build basic agents, but with RL and memory they can now build agents with markedly stronger agentic capabilities.

This signals an intriguing shift in AI's role: no longer merely an assistant for coding or making presentations, AI could become a companion that genuinely understands users' lives and handles everyday tasks in a far more personalized way.

To better understand this trend, we interviewed Macaron founder Kaijie Chen. He shared insights on training Memory as an intelligent capability and emphasized RL's critical importance in agent development.

Macaron's product has recently sparked considerable debate. Kaijie Chen candidly admits that if he scored the product out of 100, he would give it only 70 to 80, leaving significant room for improvement. He envisions future agents as users' personal Doraemon: an entertaining companion that can also instantly create practical tools. Key points from the conversation:

• Multi-agent systems can train Memory Agents and Coding Agents separately, achieving a balance between an agent's emotional intelligence and cognitive intelligence;

• When training an agent's memory, treat memory as a means, not an end goal. Machine memory must be fitted to and aligned with human memory;

• All-Sync RL technology helps agent developers speed up training;

• RL infrastructure struggles to achieve cloud-service-level standardization, as it requires training models based on real user feedback before deployment;

• Overlapping life scenarios amplify an agent's commercial value.

......

User-centric LLM is the future trend

oversea: Kaijie, please start by introducing yourself.

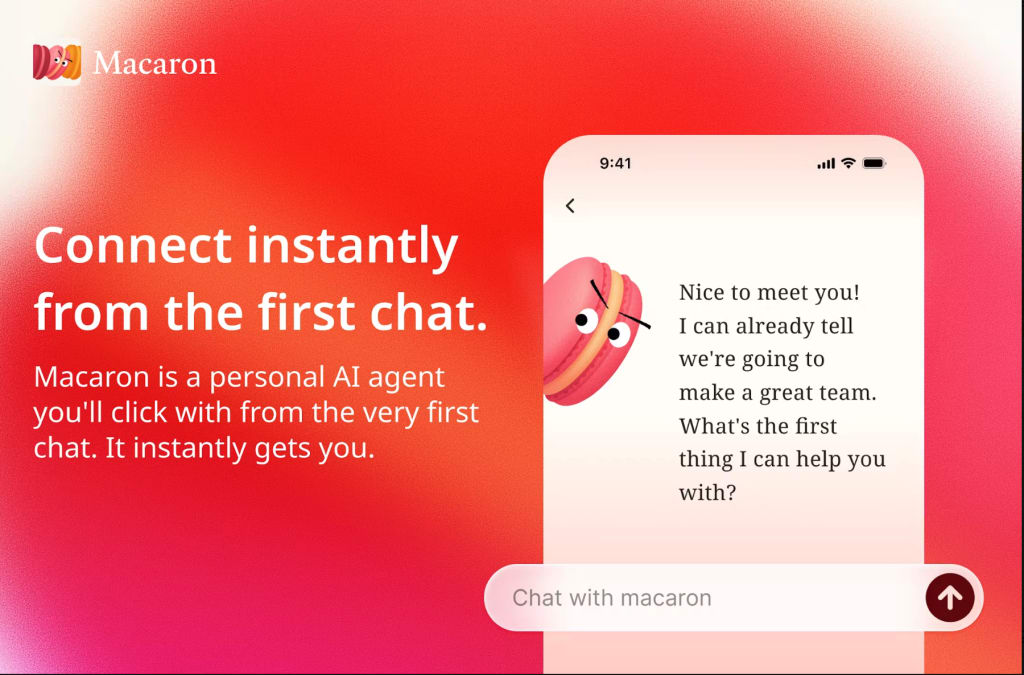

Macaron Product Feature Diagram

Kaijie Chen: I graduated from Duke University's Mechanical Engineering department with a focus on human-computer interaction. I have founded several startups and am currently the founder of Macaron. Before Macaron, I founded MidReal, an interactive storytelling platform with 3 million overseas users. We launched our new product, Macaron, just two weeks ago.

oversea: Why did you decide to create Macaron? Why focus on a non-productivity direction like “Personal”?

Kaijie Chen: Macaron is a Personal Agent. This idea originated from our observations of MidReal. We called MidReal a “Fantasy World” where users could enter, write their thoughts, and immerse themselves in a novel of tens or even hundreds of thousands of words through multimodal interactions.

After a year of developing MidReal, user interviews revealed a common pattern: while people found joy in this fantasy world, they often felt a sense of emptiness, boredom, or even disconnection upon returning to reality. This suggested we could take it further—moving closer to users' daily lives and real-world needs, rather than staying confined to a fantasy realm.

Simultaneously, a clear trend emerged in early 2025: while AI has seen strong adoption in productivity tools—like creating PowerPoints, writing code, or drafting reports—its penetration into personal life remains limited. For instance, asking an AI to plan next week's restaurant choices typically yields a correct but unhelpful response. This is because it lacks understanding of your specific dietary preferences, household size, dining companions, or allergy concerns. It struggles to contextualize these nuances.

Thus, a Personal Agent that truly understands you and serves your daily life is precisely what this era demands. It is against this backdrop that Macaron was conceived.

oversea: Compared to other general-purpose agents, what sets Macaron apart? Does it genuinely tackle everyday life tasks rather than focusing solely on productivity-driven efficiency or work-related tasks?

Kaijie Chen: You can think of it that way. Macaron has two major distinguishing features:

• Strong Memory: For instance, if you ask it to plan your meals for the week, it must deeply understand you, requiring robust memory capabilities.

• Usefulness: Macaron can build customized mini-tools, which we call Sub Agents. These tools can handle food tracking (snap a photo of your meal and it calculates the calories), fitness logs, mood journals, household finances, academic planning, and more.

I'd liken it to a personal life manager. It serves as your nutritionist, fitness coach, tennis instructor, English tutor, and Japanese teacher. It's a friend that genuinely helps you navigate life.

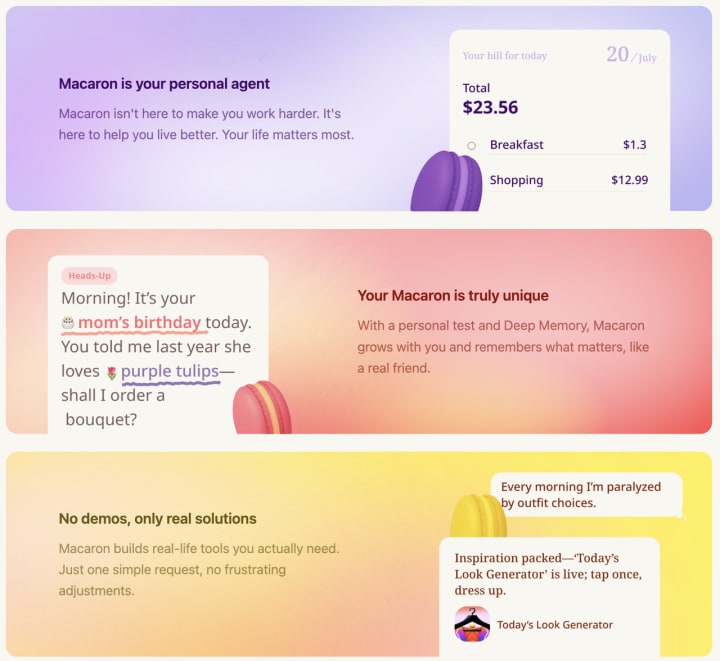

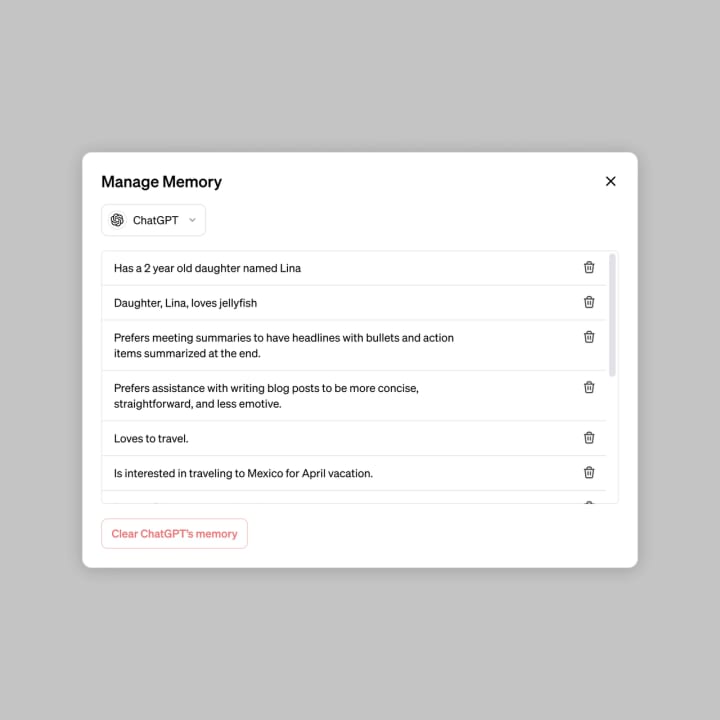

oversea: Recently, we've observed that ChatGPT's user retention significantly improved after integrating Memory. Many teams are also developing Agent infrastructure based on Memory. In your view, what defines a good Agent Memory? How does Macaron's memory outperform others?

GPT Updates Memory Functionality

Kaijie Chen: Our understanding of Memory might differ slightly. We believe: Memory is not an end in itself, but a means. The purpose of remembering something isn't “remembering” for its own sake—after all, this isn't an exam. The goal is to better assist the user.

For example, if a user forgets a friend's birthday next month, the agent can proactively remind them. When a user plans a trip, the agent can recall their preferences: favorite hotel types, preferred travel styles, and places they've visited. The goal is to provide better service, not mere memorization.

Many current Memory systems fail because they treat “remembering” as the end goal. But if we treat remembering as a means to better serve users, the approach to training Memory changes entirely.

Training memory is actually very similar to reasoning. Reasoning is the quintessential means—the specific implementation details aren't crucial, as long as it ultimately provides the correct answer and improves accuracy. That's why, early this year, Macaron began working on Memory Reinforcement Learning. We were likely one of the first teams to apply Memory Reinforcement Learning at the 671B model scale.

Our approach is to enclose a memory segment between two memory tokens. The optimization target isn't “what was memorized” but whether the memory helps the model answer questions correctly in specific cases. During training, the model continuously evolves, compresses, and even discards information to form an adaptive memory block, which adjusts automatically during conversations to produce better responses.
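The interview does not describe Macaron's training code, but the idea of rewarding memory by outcome rather than by content can be sketched. Below is a minimal Python sketch, assuming hypothetical `<mem>`/`</mem>` tokens, a stub `toy_answer` function standing in for the policy model, and an optional length penalty that is our own assumption for encouraging compression. The reward checks only whether probe questions are answered correctly, never what was stored.

```python
from typing import Callable

MEM_OPEN, MEM_CLOSE = "<mem>", "</mem>"  # hypothetical special memory tokens


def wrap_memory(memory: str) -> str:
    """Enclose a memory segment between the two memory tokens."""
    return f"{MEM_OPEN}{memory}{MEM_CLOSE}"


def extract_memory(context: str) -> str:
    """Return the text between the memory tokens, or "" if they are absent."""
    start = context.find(MEM_OPEN)
    end = context.find(MEM_CLOSE, start + len(MEM_OPEN)) if start != -1 else -1
    if start == -1 or end == -1:
        return ""
    return context[start + len(MEM_OPEN):end]


def memory_reward(
    context: str,
    probes: list[tuple[str, str]],
    answer_fn: Callable[[str, str], str],
    length_penalty: float = 0.0,
) -> float:
    """Outcome reward: accuracy on probe questions answered from the memory block.

    The reward never inspects what was memorized, only whether answers are right.
    The optional per-character penalty (an assumption) nudges toward compression.
    """
    memory = extract_memory(context)
    correct = sum(answer_fn(memory, q).strip().lower() == gold.lower() for q, gold in probes)
    accuracy = correct / len(probes) if probes else 0.0
    return accuracy - length_penalty * len(memory)


def toy_answer(memory: str, question: str) -> str:
    """Hypothetical stand-in for the policy model: look up 'key: value' lines."""
    facts = dict(line.split(": ", 1) for line in memory.splitlines() if ": " in line)
    return facts.get(question, "unknown")


probes = [("allergy", "peanuts"), ("household size", "4")]
verbose = wrap_memory("allergy: peanuts\nhousehold size: 4\nfavorite color: teal")
compact = wrap_memory("allergy: peanuts\nhousehold size: 4")
print(memory_reward(verbose, probes, toy_answer))  # 1.0
print(memory_reward(compact, probes, toy_answer))  # 1.0
```

Both memory blocks answer every probe, so without a penalty they score the same; a small length penalty is one assumed way to make the compressed block score higher.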

oversea: You treated Memory directly as an intelligent capability, much like Reasoning, dedicating specific training and reinforcement learning to it. With Reasoning, the solution process is packaged into reasoning tokens from which the final answer is derived. So what exactly is contained within the Memory tokens?

Kaijie Chen: During early Reasoning training, we used CoT (Chain-of-Thought) data, but later stages didn't require specially curated data. Memory follows a similar pattern: initially, we used RAG (Retrieval-Augmented Generation), prompt mechanisms, and context engineering to cold-start on tasks like writing code or solving math problems. However, the final training takes place within the chat application itself.

During conversations, Macaron gradually memorizes details like my dietary preferences; the ages of my father, mother, and children at home; and their respective characteristics. These preferences are written into the Memory. This resembles today's Memory summarization—both use prompt-based approaches—but the key difference is: Macaron autonomously decides what to summarize, what to compress, and which information to emphasize or downplay.

We observed a pattern: when users strongly emphasize certain information, Macaron prioritizes recording it. This represents the alignment between machine memory and human memory. Humans also consciously note key points during conversations. Memory itself functions like an evolving modular system: we continuously add and update fragments—like what was discussed ten minutes ago—gradually forming memories. The final trained Memory achieves this alignment between machine and human memory:

• When it remembers the same things you would, you feel Macaron truly understands you;

• When it recalls details you didn't emphasize, you find Macaron impressive;

• It forgets aspects you didn't pay attention to.
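To make the remember/forget behavior concrete, here is a hand-written heuristic in Python. Macaron reportedly learns this behavior through RL rather than fixed rules, so treat the `decay` factor, the `forget_below` threshold, and the `emphasis` parameter as illustrative assumptions: emphasized facts start with high salience, every turn decays salience, and facts the user never returns to are dropped.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    text: str
    salience: float  # how strongly this fact should be kept


@dataclass
class AdaptiveMemory:
    """Toy memory block: emphasis raises salience, every turn decays it,
    and items that fall below a threshold are forgotten.
    All constants are illustrative assumptions, not Macaron's values."""
    decay: float = 0.8
    forget_below: float = 0.2
    items: dict[str, MemoryItem] = field(default_factory=dict)

    def observe(self, key: str, text: str, emphasis: float = 0.5) -> None:
        # A new fact starts at its emphasis level; re-mentioning reinforces it.
        item = self.items.get(key)
        if item is None:
            self.items[key] = MemoryItem(text, emphasis)
        else:
            item.text = text
            item.salience = min(1.0, item.salience + emphasis)

    def end_turn(self) -> None:
        # Decay everything, then drop what the user never came back to.
        for item in self.items.values():
            item.salience *= self.decay
        self.items = {k: v for k, v in self.items.items() if v.salience >= self.forget_below}

    def recall(self) -> list[str]:
        # Most salient facts first.
        return [v.text for v in sorted(self.items.values(), key=lambda v: -v.salience)]


mem = AdaptiveMemory()
mem.observe("allergy", "Allergic to peanuts", emphasis=1.0)  # strongly emphasized
mem.observe("weather", "Mentioned it was cloudy", emphasis=0.3)  # passing remark
for _ in range(3):
    mem.end_turn()
print(mem.recall())  # ['Allergic to peanuts']
```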

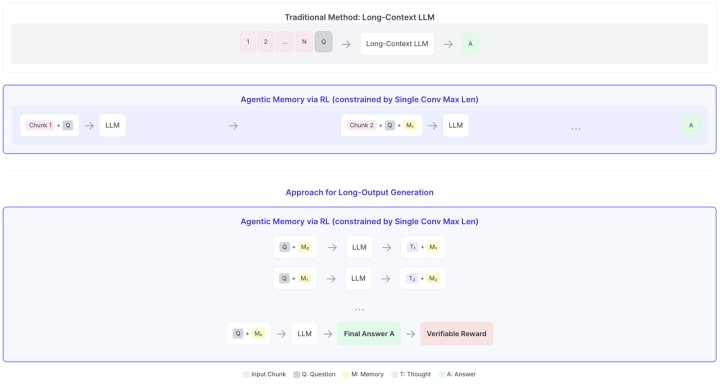

Macaron Agentic Memory: trained via RL, optimized for long-form text generation

The best analogy for Macaron is Doraemon

oversea: The cold start for training is easy enough to grasp. But what about early user adoption? When people first use Macaron, there's no context, yet we want it to be “an agent that truly understands me.” How do you deliver that experience on day one?

Kaijie Chen: At launch, Macaron didn't clearly communicate its purpose or create an instant connection; building that understanding takes time.

Apart from users familiar with vibe coding or AI, most people think of AI only as something to chat with and don't know it can create custom tools. Even a week after launch, we found that many users hadn't realized Macaron could build tools for them, a problem we urgently needed to solve.

The current cold-start process looks something like this:

• Personality Test: Users first answer a few questions, similar to an MBTI test. Our observation is that people enjoy personality tests—they want to understand themselves and discover compatible matches. This step is typically enjoyable for users.

• Matching Your Personal Macaron: After the test, users receive their own Macaron with a unique “color” and “personality.”

• Welcome Gift: Upon first meeting, Macaron presents you with three fun gadgets as a welcome gift and asks, “Do you find these useful?” It also adds, “If you don't like these, no worries. I can create a brand-new gadget just for you.” This instantly makes clear that Macaron can create custom gadgets.

• Interest-Based Conversations: During the test, we also inquire about hobbies and personality. For instance, if a user selects “Outdoor Sports,” Macaron will ask upon meeting: “Do you enjoy hiking or playing frisbee? Have you been out lately? Where are you planning to travel during your next vacation?” This swiftly initiates conversation by connecting through shared interests.

Through this series of designs, users feel Macaron is a friend they can talk to. Once that friendship is established, whether it's solving minor daily hassles, dealing with annoyances, or exploring new hobbies (like learning tennis or swimming), the conversation flows naturally.

oversea: This process is a bit like Doraemon. When we first meet, it pulls a few gadgets from its pocket and says, “This is really useful, try it out.”

Kaijie Chen: Doraemon might be the closest analogy. I believe future AI won't be compared to “objects” or “tools” anymore. ChatGPT might be likened to Jarvis or other characters, but Macaron's best analogy is Doraemon:

• Doraemon is first and foremost Nobita's friend.

• Only when Nobita faces a problem does Doraemon pull a gadget from his pocket to help solve it.

• Sometimes the gadgets aren't perfect or even break, but Nobita forgives Doraemon because they're friends.

Users don't necessarily need the smartest person in the world as their friend. What they need more is someone who can consistently accompany and care for them. That's how I position Macaron. You wouldn't abandon a long-time friend just because you made a new one.

oversea: Macaron has two roles: friend and assistant. When GPT-5 launched, similar debates arose: as a model's IQ rises, its EQ might decline, making it feel less like a friend. Many users reported this experience during the transition from GPT-4o to 5. So how does Macaron balance “being a friend” and “being a good assistant”?

Kaijie Chen: Macaron makes a natural distinction. The model that converses with users, manages memories and preferences, and the Coding Agent that builds tools for users are actually two separate Agents. They share an interoperable protocol but have distinct functions:

• The Memory Agent interacting with users is the user's friend.

• The Coding Agent building tools for users only needs to focus on writing good tools.

All user preferences, such as dietary habits, a knee injury to watch out for during workouts, or other details that require attention, are relayed from the “friend” Agent to the Coding Agent.
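The interview says only that the two agents share an interoperable protocol. As a minimal sketch, assuming a hypothetical `ToolRequest` message and topic-tagged `Preference` records (neither comes from Macaron), the relay could forward just the preferences relevant to the tool being built:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Preference:
    topic: str   # e.g. "diet", "fitness"
    detail: str


@dataclass(frozen=True)
class ToolRequest:
    """Hypothetical message the 'friend' agent sends to the Coding Agent."""
    task: str
    constraints: tuple[str, ...]


def build_tool_request(task: str, topics: set[str], prefs: list[Preference]) -> ToolRequest:
    # Forward only the preferences relevant to this tool, so the Coding Agent
    # receives the user context it needs without the whole conversation.
    relevant = tuple(p.detail for p in prefs if p.topic in topics)
    return ToolRequest(task=task, constraints=relevant)


prefs = [
    Preference("diet", "vegetarian"),
    Preference("fitness", "knee injury: avoid jumping"),
    Preference("music", "likes jazz"),
]
req = build_tool_request("weekly workout tracker", {"fitness"}, prefs)
print(req.constraints)  # ('knee injury: avoid jumping',)
```

Keeping the message narrow is a design choice in this sketch: the Coding Agent can stay focused on building tools while the conversational agent owns the full memory.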

My vision is for the conversational Agent to be not a cold assistant, but a warm conversationalist. This makes users more receptive and trusting. Once trust is established, users willingly share more contextual information, enabling the Agent to serve them better.

oversea: So essentially, Macaron employs a multi-Agent approach to balance things: a high-EQ Agent paired with a high-IQ (or highly capable) Agent, working together to ensure a seamless user experience.

Kaijie Chen: Exactly. The optimization paths for high EQ and high IQ are entirely different. Both Agents underwent end-to-end reinforcement learning training on our own Baica cluster. But their training objectives differed:

• “Friend” Agent's goal: to understand you better and remember more details, serve you more effectively in conversation, and use the Router to route different needs (whether worries or curiosities) to the other Agents best suited to handle them.

• Coding Agent's goal: we have 100 to 200 real-life utility tool examples (refined from community user feedback). The Coding Agent's goal is to build every one of these cases well: complete functionality, smooth interaction, visually appealing design, and user satisfaction.
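A Coding Agent trained against a fixed case suite needs a scalar reward per rollout. One way to fold the four criteria above into such a reward is sketched below; the `CaseScore` grading scale, the weights, and the rule that a non-functional tool earns zero are all assumptions for illustration, not Macaron's reward design.

```python
from dataclasses import dataclass


@dataclass
class CaseScore:
    """Per-case grades in [0, 1]; how each grade is measured is an assumption."""
    functional: float
    interaction: float
    visual: float
    satisfaction: float


# Illustrative weights, not Macaron's.
WEIGHTS = {"interaction": 0.3, "visual": 0.3, "satisfaction": 0.4}


def case_reward(s: CaseScore) -> float:
    # Gate on functionality: a broken tool earns nothing, however polished it looks.
    if s.functional < 1.0:
        return 0.0
    return (WEIGHTS["interaction"] * s.interaction
            + WEIGHTS["visual"] * s.visual
            + WEIGHTS["satisfaction"] * s.satisfaction)


def suite_reward(scores: list[CaseScore]) -> float:
    """Average reward over the whole case suite (e.g. the 100 to 200 cases)."""
    return sum(case_reward(s) for s in scores) / len(scores) if scores else 0.0


working = CaseScore(1.0, 1.0, 1.0, 1.0)
broken_but_pretty = CaseScore(0.0, 1.0, 1.0, 1.0)
print(suite_reward([working, broken_but_pretty]))  # 0.5
```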

Therefore, we must completely separate these two optimization goals and train them independently. Only then will the combined experience be truly enhanced.

oversea: On a scale of 1 to 100, how would you rate Macaron today?

Kaijie Chen: Probably around 70 to 80.

My expectations for it are ambitious: if it ever serves 100 million people, I might give it 85 points. Today is still an exploratory phase. Interactions, external integrations, the Coding Agent, and Memory could all change significantly in the future. For instance, whether phone manufacturers will enter the “Grand Agent” space, or how large a role the community will play, remains unresolved. We are only at the earliest stage.

What is the Sub Agent Evolution Theory?

oversea: Kaijie Chen's description reminds me of several interesting products: Xiaohongshu, Notion, and Duolingo. Drawing inspiration from Xiaohongshu, some of Macaron's features are also community-driven. Where do you plan to find this kind of network effect in the future?

Kaijie Chen: That is exactly what I envision. Macaron isn't just your personal assistant; it's also a “lifestyle sharing platform.”

Take yoga and running—they're common activities, but they transcend mere physical exercise; they embody a lifestyle. Yoga, for instance, emphasizes mindfulness and fluidity. I believe such lifestyles can be crystallized and condensed into an Agent.

Take me personally—I'm deeply committed to hydration and exercise. Friends who've met me know I carry a 1.5-liter water bottle everywhere, drinking two bottles daily. Therefore, my Agent includes features related to hydration and exercise. This represents my lifestyle, and I aim to share it—whether within the Macaron community or on social platforms like Xiaohongshu and Instagram. If someone wishes to adopt this lifestyle, they can import this Agent into their own Macaron. This means they can develop habits identical to mine.

Another example: My co-founder is a huge wine enthusiast, while I know next to nothing about it. So he created a wine Sub Agent. This Agent's capability is: Just snap a photo of a bottle, and it tells you the vintage, winery, price, optimal storage conditions, and best food pairings. For me, it's a perfect entry point. Later, I even started seeing community members sharing their wine experiences—describing flavors and ideal pairings.

In the future, I'd love to invite a tennis champion to create a tennis training Sub Agent and distribute it within the community. I'm confident many would enjoy it. My vision for the Macaron community is this: numerous lifestyle “leaders” will emerge.

Compared to platforms like Xiaohongshu or Douyin, Macaron has a significant difference:

• On Douyin or Xiaohongshu, creators need certain skills—writing articles, shooting videos, posting beautiful photos.

• On Macaron, users barely need any creative ability. Just a sentence or two can generate a mini-tool.

Thus, on Macaron, the essence of a creator isn't “content writing ability,” but whether they possess a unique lifestyle. This means: even if someone enjoys “staying in bed all day,” this lifestyle can be solidified into a Sub Agent for others to use and share. The lifestyle itself is the content. It doesn't have to be exciting or fulfilling, but if it's sufficiently unique, sharing it can inspire others. This is the community I envision for Macaron: a platform for sharing lifestyles.

oversea: If I tell Macaron in the future that I want to be someone who “stays in bed all day,” will it recommend similar Sub Agents created by other users?

Kaijie Chen: That's an excellent idea and exactly the direction we're heading next.

Currently, every Sub Agent is built from scratch, line by line, by the Coding Agent. This approach isn't optimal. In the future, if someone has created an excellent case that many users adopt, Macaron will offer this existing case to new users and ask whether they want to customize it into a more personalized Agent. This way, Sub Agents will continuously branch and evolve, forming a kind of “Sub Agent Evolution Theory.”

For example, a frequently invoked Sub Agent might spawn new branches. Within these new Sub Agents, those with higher usage rates will continue to evolve. The entire system grows like a tree, constantly branching downward.
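The branching described above maps naturally onto a tree. A minimal sketch, assuming a hypothetical `SubAgentNode` type in which forking records a user's customization and usage counts decide which version to offer a new user:

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SubAgentNode:
    """One Sub Agent version in a hypothetical evolution tree."""
    name: str
    usage: int = 0
    children: list[SubAgentNode] = field(default_factory=list)

    def fork(self, name: str) -> SubAgentNode:
        # A user customizes an existing Sub Agent; the result becomes a new branch.
        child = SubAgentNode(name)
        self.children.append(child)
        return child

    def most_used(self) -> SubAgentNode:
        # Walk the whole subtree and return the most-adopted version,
        # i.e. the one to offer a new user as a starting point.
        best = self
        for child in self.children:
            candidate = child.most_used()
            if candidate.usage > best.usage:
                best = candidate
        return best


root = SubAgentNode("hydration tracker", usage=10)
gym = root.fork("hydration + gym reminders")
gym.usage = 40
office = root.fork("hydration for desk workers")
office.usage = 25
runners = gym.fork("hydration for hot-weather runners")
runners.usage = 55
print(root.most_used().name)  # hydration for hot-weather runners
```

Selecting by raw usage is the simplest possible rule; a real system would likely weigh recency, ratings, or fit to the new user, none of which the interview specifies.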

Unlike Apple's App Store:

• App Store development and feedback are independent—each team develops separately, and user feedback remains fragmented.

• In Macaron, all Sub Agents are written by the Coding Agent; production and feedback are not isolated.

This means that in Macaron, if a user provides feedback on a Sub Agent, the Coding Agent will adjust the tool accordingly in its next iteration. User feedback can also be cross-scenario linked, forming a true reinforcement learning feedback loop. When distributing applications, the main agent can assign different apps to the same person or assign the same app to different people. At this point, we can establish connections between the feedback.

As a result, the Macaron team can genuinely run reinforcement learning algorithms on GPUs that treat all of this feedback as reward signals, because data production and feedback are coupled rather than independent. Using the collected feedback for reinforcement learning continuously unlocks new capabilities in the agent and its Sub Agents, expanding them into more scenarios and serving more users with better interactions. I believe this represents an evolutionary trajectory.
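The interview does not say how cross-user, cross-app feedback is combined into rewards. One standard option, shown here as an assumed stand-in rather than Macaron's method, is per-user mean-centering: because the same person rates several apps, subtracting each user's average rating removes how harsh or generous that user is before ratings are averaged per app.

```python
from collections import defaultdict


def app_rewards(feedback: list[tuple[str, str, float]]) -> dict[str, float]:
    """feedback: (user_id, app_id, rating) triples. Returns a reward per app.

    Each rating is centered on that user's mean rating, removing how harsh or
    generous the user is overall; an app's reward is the mean of its centered
    ratings across all users who received it.
    """
    by_user: dict[str, list[float]] = defaultdict(list)
    for user, _, rating in feedback:
        by_user[user].append(rating)
    user_mean = {u: sum(r) / len(r) for u, r in by_user.items()}

    centered: dict[str, list[float]] = defaultdict(list)
    for user, app, rating in feedback:
        centered[app].append(rating - user_mean[user])
    return {app: sum(v) / len(v) for app, v in centered.items()}


feedback = [
    ("alice", "wine", 5), ("alice", "water", 3),  # generous rater
    ("bob", "wine", 3), ("bob", "water", 1),      # harsh rater
]
print(app_rewards(feedback))  # {'wine': 1.0, 'water': -1.0}
```

In raw averages both apps look acceptable (4.0 and 2.0); after centering, both users clearly prefer the wine tool, which is the kind of linked signal that only exists when the same people receive several apps.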

oversea: Could Macaron evolve into a gradually expanding community, somewhat like a two-sided platform? On one side, users create small tools with Coding Agent; on the other, users seek interesting connections. You stitch these two sides together. Is this the vision Macaron aims to convey?

Kaijie Chen: Yes. For instance, when Google launched its new Pixel phone, it emphasized “enhancing daily life.” Similarly, ChatGPT queries increasingly involve lifestyle topics. AI is moving beyond productivity tools and into everyday scenarios.

Macaron's unique value lies in building a new distribution platform where people share engaging lifestyles.

oversea: Notion shares two traits with the vision Kaijie Chen described. First, it started with memory and database capabilities, which later enabled cross-page relationships and made it users' “second brain.” Second, it has a template ecosystem where community members build on each other's Notion setups, even using it for the creator economy and lifestyle sharing. If we truly aim to create a phenomenon-level product for the AI era, one that combines memory with network effects, what would be the greatest challenge?

Kaijie Chen: I believe challenges manifest at different levels.

The most significant and abstract challenge lies with the team. If we view the team as a reinforcement learning (RL) algorithm, it must continuously adjust community strategies based on evolving community dynamics, personnel changes, and emerging use cases. When serving individual users, it requires new rounds of RL training based on emerging needs so that the Coding Agent can build better Sub Agents.

For instance, if someone says in Macaron today, “Make the second installment of Black Myth: Wukong,” it definitely can't be done. If you say, “Make a 3D racing game,” it also can't be done. But if enough such cases accumulate, and RL training is rerun next month, it might be able to produce a 3D racing game.

Thus, on the user feedback side, Macaron keeps refining itself through reinforcement. At the same time, we must adjust how we present our strategy to the market. Initially, we might target students starting school in September, who face challenges like course selection, club activities, and making new friends, so that they think of Macaron first. For us, this is an essential “reinforcement process” with the market.

Digging deeper, a key point in simultaneously serving users well and building community lies in designing the memory system. At its core, Macaron is a Personal Agent tasked with managing daily life. Memory isn't just about retaining user preferences in conversations; it must also propagate these preferences to every Sub Agent, while ensuring Sub Agents understand each other's context.

• For instance, if a user starts a new strength training regimen today, the diet planning Sub Agent must know they require more protein.

• After the user completes their meal plan and workout, the Sub Agent learns new information during the chat and should continue providing feedback and adjustments based on these updates.

“Memory transfer” is a complex mechanism. To achieve this, we even abandoned traditional database systems when designing Macaron—neither relational nor non-relational databases were used. Macaron's database architecture aims to enable all Sub Agents to share a single, unified set of your personal data. Naturally, this personal data resides in an encrypted location, as privacy is a core focus for Macaron.
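A toy version of this “memory transfer” can be sketched as one shared store that Sub Agents subscribe to. This is a minimal in-memory illustration, assuming hypothetical `PersonalDataStore` and `DietSubAgent` classes; it ignores encryption and whatever storage design Macaron actually uses, and the protein-per-kilogram constants are illustrative only.

```python
from collections import defaultdict
from typing import Any, Callable


class PersonalDataStore:
    """Hypothetical single store of the user's data shared by all Sub Agents.
    Sub Agents subscribe to keys and are notified when any agent writes them."""

    def __init__(self) -> None:
        self._data: dict[str, Any] = {}
        self._subscribers: dict[str, list[Callable[[str, Any], None]]] = defaultdict(list)

    def subscribe(self, key: str, callback: Callable[[str, Any], None]) -> None:
        self._subscribers[key].append(callback)

    def write(self, key: str, value: Any) -> None:
        self._data[key] = value
        for callback in self._subscribers[key]:
            callback(key, value)

    def read(self, key: str, default: Any = None) -> Any:
        return self._data.get(key, default)


class DietSubAgent:
    """Toy diet planner that reacts to training changes (protein rule is illustrative)."""
    BASE_PROTEIN_G_PER_KG = 0.8
    STRENGTH_PROTEIN_G_PER_KG = 1.6

    def __init__(self, store: PersonalDataStore) -> None:
        self.store = store
        store.subscribe("training_plan", self.on_training_change)

    def on_training_change(self, key: str, plan: Any) -> None:
        # A strength plan raises the protein target; anything else uses the base rate.
        per_kg = self.STRENGTH_PROTEIN_G_PER_KG if plan == "strength" else self.BASE_PROTEIN_G_PER_KG
        weight = self.store.read("body_weight_kg", 70)
        self.store.write("protein_target_g", round(per_kg * weight))


store = PersonalDataStore()
diet = DietSubAgent(store)
store.write("body_weight_kg", 75)
store.write("training_plan", "strength")  # e.g. written by a fitness Sub Agent
print(store.read("protein_target_g"))  # 120
```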

Within this architecture, each Sub Agent functions somewhat like a “generative UI”—potentially offering a better user experience than Chat—while simultaneously knowing what's happening in your life and what information you've recorded, much like every Chat. This aspect of memory engineering + model training is a crucial step in making the Personal Agent truly “useful and capable of assisting you.” We're still working hard to achieve this, with much ground left to cover. Combined with the community aspect discussed earlier, Macaron has the potential to deliver a product akin to Notion. Naturally, our team must also continuously engage with the environment and iterate, much like RL.
