NVIDIA GTC 2025: Blackwell Ultra GPU, AI Inference, and the Future of Computing

Key takeaways from GTC 2025

On March 18 (U.S. time), NVIDIA hosted its annual GPU Technology Conference (GTC) in San Jose, California. As one of the most highly anticipated tech events, this year’s GTC attracted approximately 25,000 in-person attendees, with an additional 300,000 tuning in online.

NVIDIA’s CEO, Jensen Huang, opened the keynote by stating, “As AI technology continues to surge, the scale of GTC expands every year. Last year, they called GTC the ‘Woodstock of AI.’ This year, we’ve moved into a stadium—GTC has become the ‘Super Bowl of AI.’”

Key Announcements: Blackwell Ultra GPU & AI Inference

At this year’s GTC, NVIDIA unveiled a series of new products, including the Blackwell Ultra GPU, silicon photonics-based network switches, and robotics foundation models. A key message throughout Huang’s presentation was that the AI industry’s focus is shifting from large-scale model training to AI inference, a trend highlighted by DeepSeek’s advances in efficient reasoning models.

Despite the excitement, NVIDIA’s stock closed down over 3.4% at $115.43 per share, with an additional 0.56% drop in after-hours trading.

Blackwell Ultra GPU: The “Powerhouse” for AI Inference

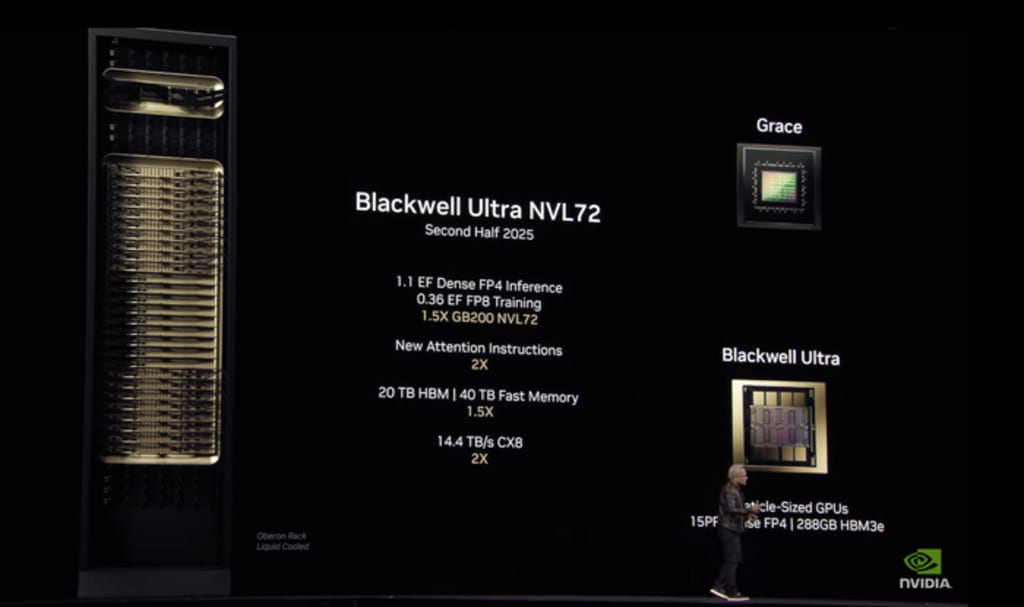

The highlight of GTC 2025 was the introduction of the NVIDIA Blackwell Ultra GPU, the next-generation AI GPU for data centers. Market speculation had suggested that NVIDIA would brand the chip as the B300. The company kept the Blackwell Ultra name for the GPU, although the B300 designation still appears in system names such as the GB300 NVL72 and HGX B300 NVL16.

Compared to its predecessor, the B200 GPU, Blackwell Ultra delivers a 50% increase in FP4 performance, reaching approximately 15 PFLOPS. It also uses higher-capacity HBM3E memory, raising capacity from 192GB to 288GB per GPU.
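The headline figures can be cross-checked with simple arithmetic. The sketch below assumes the B200 baseline is the FP4 figure implied by the stated 50% uplift (15 / 1.5); that baseline is derived here rather than quoted from an NVIDIA spec sheet.

```python
# Cross-check of the Blackwell Ultra vs. B200 figures quoted above.
# Assumption: the B200 FP4 baseline is derived from the stated 50% uplift,
# not taken from an official specification.

BLACKWELL_ULTRA_FP4_PFLOPS = 15.0  # approximate, from the article
PERF_UPLIFT = 1.5                  # "50% increase in FP4 performance"
B200_HBM_GB = 192
BLACKWELL_ULTRA_HBM_GB = 288

def implied_baseline(new_value: float, uplift: float) -> float:
    """Return the predecessor's value implied by a multiplicative uplift."""
    return new_value / uplift

b200_fp4 = implied_baseline(BLACKWELL_ULTRA_FP4_PFLOPS, PERF_UPLIFT)
memory_ratio = BLACKWELL_ULTRA_HBM_GB / B200_HBM_GB

print(f"Implied B200 FP4 throughput: {b200_fp4:.1f} PFLOPS")
print(f"HBM capacity ratio: {memory_ratio:.2f}x")
```

Memory capacity grows by the same 1.5x factor as FP4 throughput, so on these rounded numbers the memory available per PFLOPS stays about the same from one generation to the next.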

To support cloud providers and enterprise customers, NVIDIA introduced two integrated AI computing systems:

- GB300 NVL72 (Blackwell Ultra NVL72): A rack-scale system that connects 72 Blackwell Ultra GPUs and 36 Arm-based Grace CPUs in a single data center rack.

- HGX B300 NVL16: A server system that links 8 Blackwell Ultra GPUs (16 GPU dies, which the "NVL16" name counts) via NVLink for high-speed AI computing.
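Rack-level totals follow from multiplying the per-GPU figures above by the GPU count. The sketch below is a back-of-the-envelope estimate that assumes every GPU contributes the approximate 15 PFLOPS FP4 peak and the full 288GB of HBM3E, with no overhead; it is not an official system specification.

```python
# Back-of-the-envelope totals for a 72-GPU Blackwell Ultra rack.
# Assumptions: per-GPU peak figures from the article, perfect aggregation.

GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
FP4_PFLOPS_PER_GPU = 15.0
HBM_GB_PER_GPU = 288

rack_fp4_eflops = GPUS_PER_RACK * FP4_PFLOPS_PER_GPU / 1000  # 1 EFLOPS = 1000 PFLOPS
rack_hbm_tb = GPUS_PER_RACK * HBM_GB_PER_GPU / 1000          # decimal terabytes
gpus_per_cpu = GPUS_PER_RACK // CPUS_PER_RACK                # GPUs paired with each Grace CPU

print(f"Peak FP4 per rack: {rack_fp4_eflops:.2f} EFLOPS")
print(f"HBM per rack: {rack_hbm_tb:.1f} TB")
print(f"GPUs per Grace CPU: {gpus_per_cpu}")
```

Real workloads will not reach the aggregate peak, but the estimate shows the scale: roughly an exaflop of FP4 compute and about 20TB of GPU memory in one rack.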

Unlike previous AI GPUs such as the A100 and H100, which were primarily used to pre-train AI models, Blackwell Ultra is positioned by NVIDIA as an inference-optimized platform. While still capable of training, the GB300 NVL72 and HGX B300 NVL16 systems are engineered for demanding inference workloads such as reasoning models. According to NVIDIA, the HGX B300 NVL16 delivers 11x faster inference on large language models than the previous Hopper generation.

The shift toward inference has been accelerated by DeepSeek, which demonstrated that capable AI models can be developed at lower computing cost. Some had speculated that this would reduce demand for NVIDIA’s GPUs, but Huang argued that inference workloads still require high-performance GPUs and advanced networking. Because reasoning models generate far more tokens per query than earlier models, he said, AI inference is poised to drive even greater computing demand, countering concerns about declining GPU sales.

Next-Gen GPUs: Rubin and Feynman Architectures

In line with NVIDIA’s annual product cadence, Huang previewed the upcoming Rubin GPU architecture, expected in 2026:

- Rubin GPU: Achieves 50 PFLOPS (FP4), 3.3x the performance of Blackwell Ultra.

- Rubin Ultra GPU: Pushes performance to 100 PFLOPS (FP4).

- Memory Upgrade: Rubin will use HBM4 memory, and Rubin Ultra will move to HBM4E.
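The 3.3x figure can be reproduced by dividing Rubin's FP4 number by Blackwell Ultra's approximate 15 PFLOPS. The sketch below applies the same division to Rubin Ultra; both ratios are derived from the article's rounded per-GPU numbers, not quoted by NVIDIA.

```python
# Generational FP4 ratios derived from the per-GPU figures in the article.
FP4_PFLOPS = {
    "Blackwell Ultra": 15.0,  # approximate
    "Rubin": 50.0,
    "Rubin Ultra": 100.0,
}

baseline = FP4_PFLOPS["Blackwell Ultra"]
for name in ("Rubin", "Rubin Ultra"):
    # Ratio relative to Blackwell Ultra, rounded to one decimal for display.
    ratio = FP4_PFLOPS[name] / baseline
    print(f"{name}: {ratio:.1f}x Blackwell Ultra")
```

Because the 15 PFLOPS baseline is itself approximate, the Rubin Ultra ratio (about 6.7x) should be read as a rough indication only.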

Product Timeline:

- Vera Rubin NVL144 (144 GPU dies in 72 packages) – Expected H2 2026.

- Rubin Ultra NVL576 (576 GPU dies in 144 packages) – Expected H2 2027.
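At GTC 2025, Huang explained that the number in NVIDIA's NVL rack names now counts GPU dies rather than GPU packages. Assuming two dies per Rubin package and four per Rubin Ultra package, as described at the keynote, the package counts work out as below; the function name is a hypothetical helper for illustration.

```python
# Convert an NVL rack name's die count into a GPU package count.
# Assumption: dies per package as described at GTC 2025
# (2 for Rubin, 4 for Rubin Ultra). Hypothetical helper, not an NVIDIA tool.

def packages_in_rack(nvl_die_count: int, dies_per_package: int) -> int:
    """Return the number of GPU packages implied by an NVL die count."""
    if nvl_die_count % dies_per_package != 0:
        raise ValueError("die count must be a multiple of dies per package")
    return nvl_die_count // dies_per_package

print(packages_in_rack(144, 2))  # Vera Rubin NVL144
print(packages_in_rack(576, 4))  # Rubin Ultra NVL576
```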

Beyond Rubin, Huang also revealed the Feynman GPU architecture, named after physicist Richard Feynman, which is slated for release in 2028.

AI Agents and the Need for More Chips

This year’s GTC focused less on product announcements and more on educating the audience about Agentic AI—a key transition from Generative AI to AI agents that can autonomously plan, reason, and execute complex tasks.

Huang explained that while Generative AI (such as ChatGPT and image generation models) focuses on creating content, Agentic AI represents a more advanced stage where AI can understand tasks, make decisions, and execute multi-step operations.

Because agents reason through multiple steps before acting, they generate far more tokens per task, which increases inference compute requirements. According to NVIDIA, for example, a GB300 NVL72 system can answer a DeepSeek-R1 671B query in about 10 seconds, compared with about 90 seconds on the previous-generation H100.

To address this demand, NVIDIA also launched Dynamo, open-source inference-serving software that coordinates GPU communication and workload distribution to improve efficiency across clusters of thousands of GPUs.

Huang predicted that by 2028, investments in AI data centers will reach $1 trillion, underscoring the exponential growth of computing needs.

Silicon Photonics, Robotics, and Quantum Computing

NVIDIA also made significant announcements in networking, robotics, and quantum computing:

Silicon Photonics: The new NVIDIA Spectrum-X Photonics (Ethernet-based) and Quantum-X Photonics (InfiniBand-based) switches use co-packaged optics (CPO). Unlike previous designs that rely on pluggable optical transceiver modules, CPO integrates the optical components into the same package as the switch chip, which NVIDIA says lowers power consumption and improves resiliency in massive AI clusters.

- Partners include TSMC, Coherent, Corning, Foxconn, Lumentum, and SENKO.

- Availability: Quantum-X launches later this year; Spectrum-X in 2026.

Robotics & AI Agents: NVIDIA highlighted Cosmos (world foundation models that generate synthetic data for training physical AI), Isaac GR00T N1 (a foundation model for humanoid robots), and Omniverse (its real-time 3D simulation platform).

- GR00T N1 is openly available and uses a dual-system architecture: a slower reasoning system plans actions, and a faster system translates those plans into precise physical movements.
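The dual-system idea can be illustrated with a toy control loop: a slow planner (standing in for the reasoning system) picks a target only every few steps, while a fast controller (standing in for the motor system) runs every step and moves toward the latest target. Everything below, including the function names, gain, and update rates, is a hypothetical sketch of the general pattern, not GR00T N1's model or API.

```python
# Toy two-rate control loop illustrating a "dual-system" robot policy.
# Hypothetical: a slow planner updates the goal occasionally, and a fast
# controller tracks it every tick. Not GR00T N1's actual implementation.

PLAN_EVERY = 10  # slow system runs once per 10 control ticks
GAIN = 0.5       # fraction of the remaining error corrected per tick

def slow_planner(tick: int) -> float:
    """Deliberate step: choose a new target position (here, a fixed schedule)."""
    return 1.0 if tick < 20 else -1.0

def fast_controller(position: float, target: float) -> float:
    """Reactive step: proportional move toward the current target."""
    return position + GAIN * (target - position)

def run(ticks: int) -> list[float]:
    position, target = 0.0, 0.0
    trajectory = []
    for tick in range(ticks):
        if tick % PLAN_EVERY == 0:
            target = slow_planner(tick)  # replan only on slow ticks
        position = fast_controller(position, target)
        trajectory.append(position)
    return trajectory

traj = run(40)
print(f"after 20 ticks: {traj[19]:.3f}, after 40 ticks: {traj[39]:.3f}")
```

The point of the split is that the expensive planning step can run at a low rate while the cheap tracking step keeps the motion smooth between plans.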

Quantum Computing: NVIDIA will establish the NVIDIA Accelerated Quantum Research Center (NVAQC) in Boston to drive innovation in quantum computing architectures and algorithms.

- Despite market hype, Huang has tempered expectations: in January 2025 he suggested that very useful quantum computers were still 15 to 30 years away, and NVIDIA positions quantum computing as a complement to AI supercomputers rather than a replacement.

Summary

NVIDIA’s GTC 2025 showcased a paradigm shift in AI computing, emphasizing the transition from training-centric AI to inference-driven applications. The Blackwell Ultra GPU, next-gen Rubin and Feynman architectures, silicon photonics, and robotics advancements underscore NVIDIA’s long-term vision of a world driven by AI agents and large-scale inference workloads.

While DeepSeek and efficient AI inference methods may reshape computing trends, NVIDIA remains confident that AI inference workloads will fuel sustained demand for powerful GPUs. With AI data center investments projected to hit $1 trillion by 2028, the era of AI-driven computing has only just begun.

GPUs continue to be in high demand. If you have surplus high-end GPUs, don’t let them sit idle: selling them can reduce your business costs and maximize returns.

#GTC2025 #Nvidia #GPU #AI

About the Creator

Jeff BSR

Computer Hardware Engineer
