Chaos Engineering Principles: A Practical Guide for Resilient Systems

Chaos Engineering Principles

Chaos engineering is the disciplined practice of injecting controlled failure into a system to reveal weaknesses before they bite you in production. Rather than waiting for the next outage to teach a painful lesson, teams form a hypothesis about how the system should behave, run an experiment that perturbs reality, and observe whether the hypothesis holds. It’s science for reliability.

This approach isn’t reckless; it’s proactive. By exploring how services handle dependency timeouts, thundering herds, or noisy neighbors, you cultivate antifragility – systems and teams that don’t just survive stress but improve from it. The result is fewer surprises, faster recovery, and confidence that SLAs will be met when the stakes are highest.

The Mindset Behind Chaos Engineering Principles

The first principle is to define a “steady state.” If you can’t measure normal, you can’t detect abnormal. Choose user-centric signals – orders per minute, successful logins, median latency – rather than machine-centric metrics alone. These indicators become your compass during every experiment.

Next comes falsifiable hypotheses. “If service X loses 20% of its cache, checkout throughput should remain within 5% of baseline.” Precise statements like this give you a clear pass/fail and prevent vague conclusions. When experiments fail, they illuminate exactly where architecture, runbooks, or assumptions must evolve.

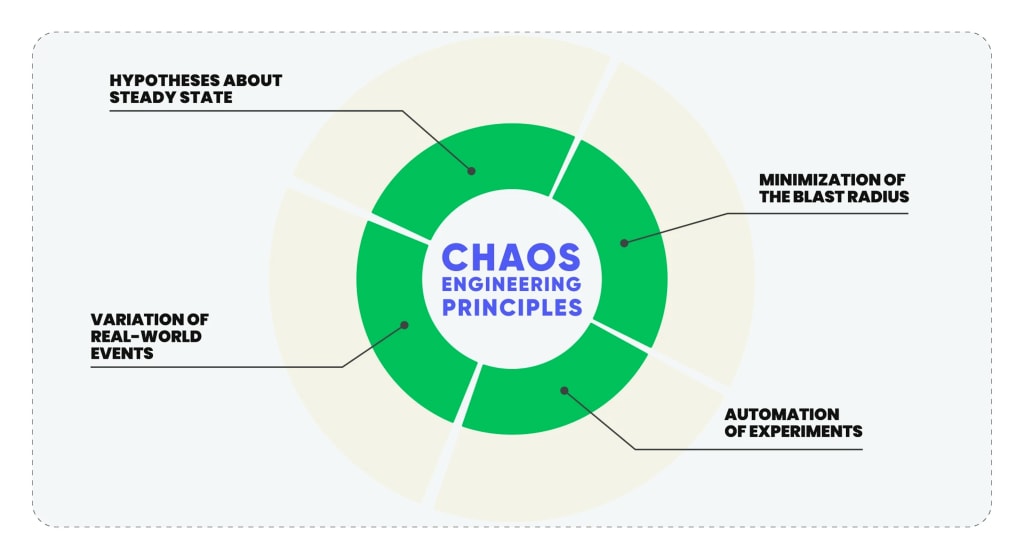

Core Principles at a Glance

- Start from a clear steady state defined by customer outcomes

- Introduce realistic faults that mirror production conditions

- Limit blast radius to protect users while learning quickly

- Form testable hypotheses and declare success criteria upfront

- Automate experiments and run them regularly, not just once

- Improve observability, playbooks, and architecture based on findings

Designing High-Quality Experiments

Great chaos tests feel surprisingly modest because they’re narrowly scoped. Begin with a single dependency and a small, well-understood failure mode: add 200 ms latency to a database call, drop 5% of messages on a queue, or throttle a third-party API response. Small shocks uncover big truths without risking a customer-facing incident.

Each experiment should include guardrails. Limit the duration, define a safe rollback, and pre-approve abort conditions. Keep stakeholders in the loop: product, support, and incident command should know what’s running and when. Treat experiments like change requests, because that’s exactly what they are – intentional, auditable changes to production reality.

Observability is your co-pilot. Tracing should reveal propagation of latency and retries; logs should annotate experiment start/stop; dashboards should show steady-state deviations clearly. If you can’t see it, you can’t improve it.

From Principles to Practice: Examples

The table below maps common chaos engineering principles to practical scenarios, helping you translate theory into action without hand-waving.

Measuring Success: SLOs, Error Budgets, and Blast Radius

Chaos engineering without service level objectives is just chaos. Tie experiments to SLOs so you can reason in business terms: did this failure keep us within 99.9% availability and a 250 ms p95? If not, which control – timeouts, bulkheads, circuit breakers – must be tuned? Error budgets provide the political capital to run tests responsibly and the clarity to stop when budget is at risk.

Blast radius management is the art of learning fast while staying safe. Use canaries, feature flags, and traffic shadowing to contain risk. Start with low-impact components or off-peak windows. As confidence grows, expand scope to multi-region failovers and dependency blackholes that mirror real-world incidents.

People, Process, and Tooling

Resilience is a team sport. Share experiment readouts in engineering reviews, invite product to “game days,” and bake learnings into runbooks. When an experiment uncovers a brittle retry storm or a hidden cross-region dependency, track the fix like any other roadmap item. Reliability work competes for time; make its value visible with concrete metrics: fewer pages, quicker MTTR, happier customers.

Operational hygiene matters. Version your experiment definitions, tag observability artifacts with experiment IDs, and store hypotheses alongside success criteria. Integrate approvals with change management so audits are effortless and stakeholders trust the program. Align incident response with experiment outcomes: if chaos reveals a blind spot, update playbooks and rehearse the new procedure.

A Pragmatic Path to Getting Started

Begin where the risk is real but the scope is small. Pick one revenue-critical workflow, define its steady state, and design a single, reversible experiment. Run it in a pre-production environment that mirrors production load, then promote a canary in production during a calm period. Document what you learn, fix what you find, and schedule the next iteration. Reliability grows through repetition, not heroics.

As your practice matures, automate experiments in CI, schedule regional failover rehearsals, and continuously validate graceful degradation. Tie the whole loop into your service management and incident workflows so improvements stick. If your organization already uses platforms for change control and knowledge management, integrating experiments alongside requests, approvals, and post-mortems is straightforward – solutions like Alloy Software can help centralize that operational rigor across teams.

Consistency beats intensity. Ten small, well-observed experiments deliver more resilience than one dramatic “chaos day.” Keep hypotheses sharp, guardrails firm, and feedback loops short. Over time, chaos engineering principles stop being special events and become part of how you ship – confidently, predictably, and with systems that stand tall when the real storms roll in.

About the Creator

SEO HUB

I’m a professional Content & SEO Specialist with 3+ years of experience. I provide placements on top-tier USA publications, sites like USAToday.com, ensuring real exposure and SEO value.

WhatsApp: +92 311 6772455

Comments

There are no comments for this story

Be the first to respond and start the conversation.