Blueprints of the Digital Age: The Story of Computer Discovery

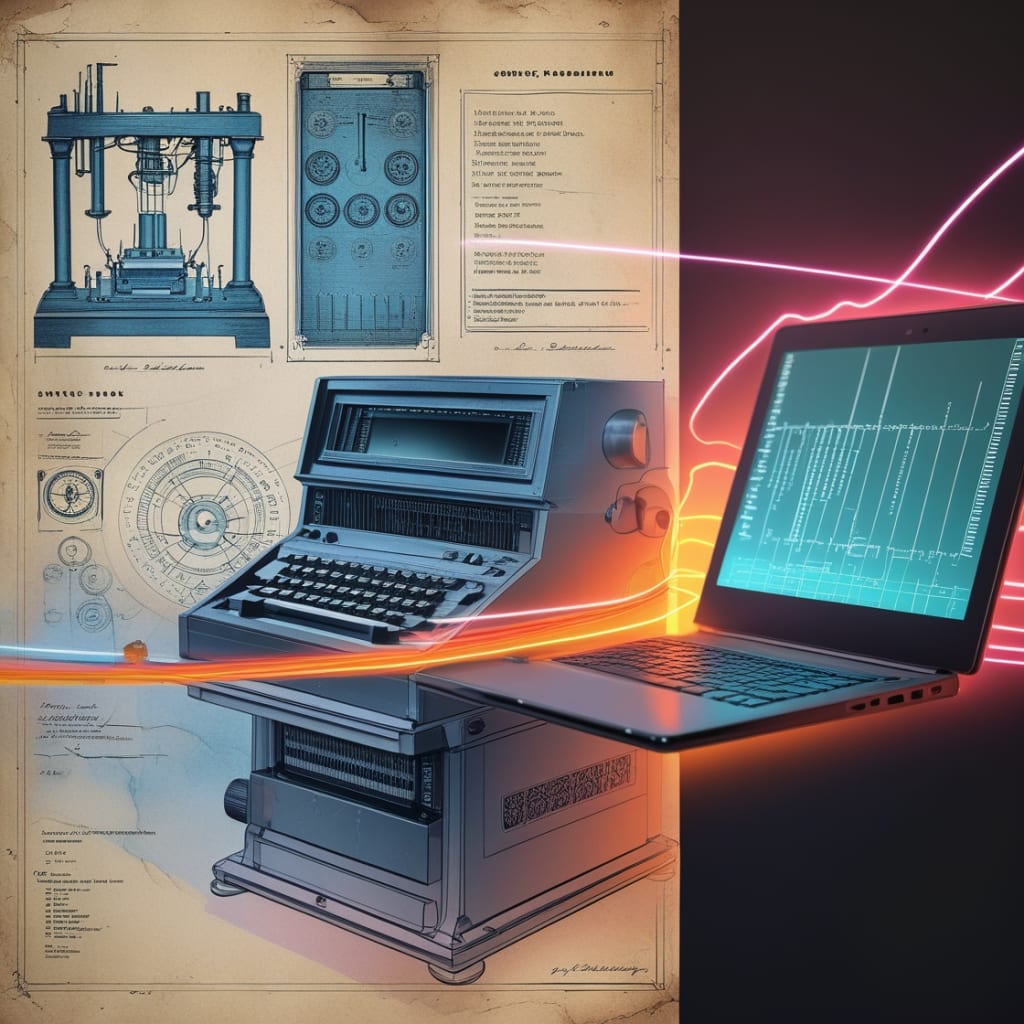

How brilliant minds and bold ideas built the foundation of modern computing

Today, nearly every task, from sending a message across the globe to analyzing complex medical data, relies on a device that fits in the palm of your hand or rests quietly on a desk: the computer. But this seemingly ordinary machine has a long, rich history that spans centuries and continents. The story of the computer isn't the tale of a single genius or a single moment. It’s a story of blueprints (sketches, ideas, experiments, and breakthroughs) contributed by brilliant minds who dared to imagine a different future.

In the flickering candlelight of a small London study in 1837, Charles Babbage hunched over a table scattered with gears, rods, and sheets of hand-drawn diagrams. As the wind howled outside, he envisioned something the world had never seen before: a machine capable of performing calculations automatically, without human error or fatigue.

Babbage called it the Analytical Engine. It was, by all accounts, the first true concept of a programmable computer — a vision so ahead of its time that even his closest colleagues struggled to comprehend it fully. His friend Ada Lovelace, a gifted mathematician and daughter of the poet Lord Byron, understood. She wrote detailed notes and theoretical programs for the machine, which would later earn her the title of the world's first computer programmer.

Though the Analytical Engine was never built during Babbage’s lifetime, it laid the foundation for something that would transform human civilization forever.

Mechanical Dreams and Early Sparks

Even before Babbage, humans longed for ways to automate calculations. The 17th-century French mathematician Blaise Pascal invented a mechanical calculator known as the Pascaline, designed to help his father with tax computations. Gottfried Wilhelm Leibniz later improved on these ideas with his Stepped Reckoner. These early machines were limited but demonstrated a powerful idea: machines could relieve humans of repetitive mental labor.

Fast forward to the late 19th century, when Herman Hollerith introduced the punched card tabulating machine to process the 1890 United States census. His innovation reduced a tabulation that had taken most of a decade to just a few years. Hollerith's company merged with others in 1911 to form the firm that was renamed IBM in 1924, a name that would define an era of computing.

The Dawn of Electronic Brains

While mechanical and electromechanical machines brought progress, the real revolution began with electricity. In the 1930s and 40s, scientists started experimenting with vacuum tubes and electronic circuits to perform calculations at unprecedented speed.

In Germany, engineer Konrad Zuse developed the Z3 in 1941, a relay-based machine often described as the first programmable, fully automatic digital computer. Meanwhile, across the Atlantic, engineers and mathematicians at the University of Pennsylvania completed ENIAC in 1945 and unveiled it to the public in February 1946. It was a gigantic machine that filled a room with rows of vacuum tubes and cables. Originally built to calculate artillery trajectories, ENIAC marked a leap forward in speed and complexity.

During this time, British mathematician Alan Turing played a pivotal role. Working at Bletchley Park during World War II, Turing and his colleagues developed the Bombe machine, which helped crack the German Enigma ciphers. Before the war, in 1936, Turing had also proposed the theoretical "Universal Machine," the conceptual ancestor of all modern computers.

The seeds of the digital age had been sown.

From Giant Machines to Personal Desks

Following World War II, computer development accelerated rapidly. Vacuum tubes gave way to transistors — smaller, more reliable, and far more energy-efficient. The 1950s and 60s saw the birth of mainframes, used by governments, research labs, and large corporations. These machines, like IBM’s System/360, were still enormous and expensive, often requiring entire rooms to house them.

But progress did not stop. In 1971, Intel introduced the first commercial microprocessor, the 4004. This tiny chip contained about 2,300 transistors and could be mass-produced cheaply. Suddenly, computing power that once filled rooms could fit on a single board.

The world was about to change forever.

In 1975, two young hobbyists — Bill Gates and Paul Allen — saw the potential in a small kit computer called the Altair 8800. They offered to write software for it, eventually founding Microsoft. Around the same time, Steve Jobs and Steve Wozniak built the first Apple computer in a California garage, laying the foundation for Apple Computer, Inc.

Personal computers began appearing in offices and homes, no longer the exclusive domain of scientists and governments. The IBM PC, launched in 1981, solidified the personal computer market and helped create an entire ecosystem of software and peripherals.

Connecting the World

As personal computers became common, another quiet revolution was brewing: the internet. Originally a U.S. military research project called ARPANET in the late 1960s, it grew into a vast network connecting universities and research labs.

In the 1990s, the World Wide Web spread across this network and opened it to the general public. The Web was invented by Tim Berners-Lee, who also wrote the first web browser. Suddenly, computers weren’t just personal; they were global. Information, ideas, and cultures flowed across continents in seconds.

The computer was no longer a simple calculator or a static tool. It had become a living, breathing gateway to human knowledge and connection.

The Mobile and AI Era

As the 21st century unfolded, computers grew smaller, faster, and smarter. The rise of smartphones placed powerful computers into billions of pockets. Laptops became thinner and more powerful, tablets replaced notepads, and wearable devices began monitoring our health and connecting to the internet.

Artificial intelligence, once science fiction, has become integrated into daily life. Voice assistants like Siri and Alexa, self-driving cars, and advanced medical diagnostics all rely on computers that "think" and learn. Quantum computing, once a theoretical dream, is now emerging, promising to solve problems far beyond the reach of traditional machines.

About the Creator

FAROOQ HASSAN

Expert in "content writing" in every language; 100% human-written
