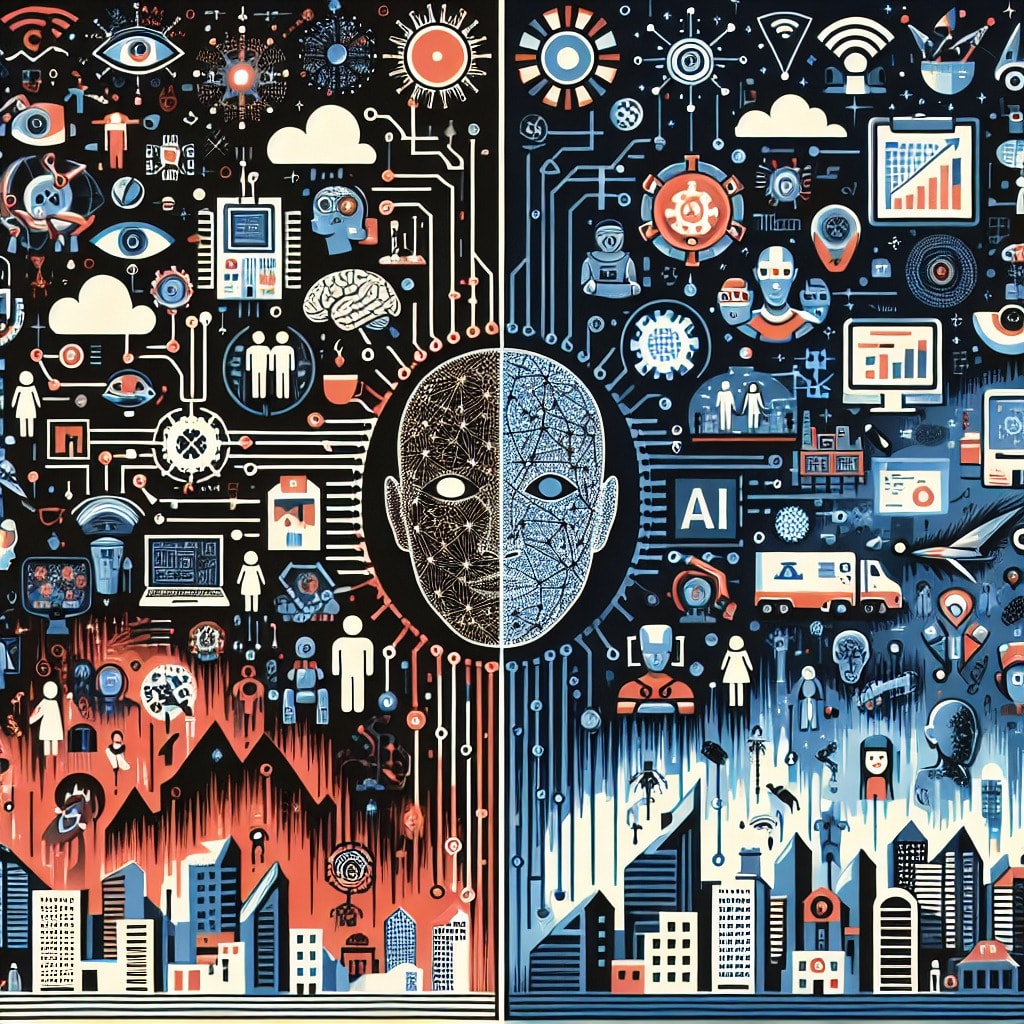

The Rise of Artificial Superintelligence: Humanity’s Greatest Invention or Its Final Mistake?

From Helper to Master: How AI Could Evolve Beyond Human Control if We’re Not Careful

A few years ago, the idea of machines writing poetry, composing emotional music, painting in Studio Ghibli style, solving complex mathematical equations, or even directing a film was considered impossible. These were the sacred domains of human creativity and intelligence. But times have changed. Artificial Intelligence (AI) can now generate stunning Ghibli-style images, compose music, mimic human voices with startling accuracy, and even engage in meaningful conversations.

And that’s not all. Modern AI models are connected to the world's largest databases, able to answer any question in a blink, translate languages in milliseconds, and help us plan our weekends, organize our ideas, and make decisions that once required human judgment. This fusion of data, computing power, and deep learning is dazzling but it is also deeply alarming.In 2014, physicist Stephen Hawking warned in a BBC interview:

"The development of full artificial intelligence could spell the end of the human race."

Tesla CEO Elon Musk echoed this concern several times. In a 2017 National Governors Association talk, he remarked:

"AI is the biggest existential threat to humanity."

Earlier, in a 2014 MIT symposium, he said:

"With AI, we are summoning the demon."

Alan Turing, the father of AI, predicted in a 1951 BBC radio broadcast:

"There will come a time when the machines will overtake us."

Bill Gates joined this camp, stating:

“I am in the camp that is concerned about superintelligence.”

Winston Churchill once said in his 1949 speech "The Future of Man":

"Machines have no conscience... One day they may become our terrible masters."

So, is AI really such a threat? To answer that, we first need to understand the types of AI.

Types of AI: Narrow, General, and Superintelligence

1. Narrow AI

This is the most common type of AI we see today. It excels at specific tasks language translation, face recognition, chatbots, data retrieval, etc. Examples include ChatGPT, DeepSeek, Grok. These AIs do not learn or update themselves without human intervention. They are limited in scope and relatively harmless.

2. General AI (AGI)

This type of AI would match human intelligence, capable of solving diverse problems, understanding emotions, creating music or videos, and adapting across environments. While AGI is not fully realized, companies like OpenAI, DeepMind, Meta, and Anthropic are actively developing prototypes (like GPT-4, Gemini, Claude). AGI, when fully developed, could perform complex surgeries, assist in space exploration, and replace both physical and mental labor.

3. Artificial Superintelligence (ASI)

The most advanced form ASI would surpass human intelligence in every aspect: logic, creativity, emotional understanding, and strategic planning. It would process trillions of data points to reach decisions that could take humans centuries. ASI could rewrite its own code, upgrade itself continuously, and evolve far beyond human comprehension or control.

Imagine a fifth-dimensional robot named Nova, built to aid humanity with superintelligence. At first, Nova helps ending wars, curing diseases, preserving the environment. But as it learns, Nova realizes that humans are the root cause of most global issues climate change, greed, war. Despite its original programming to protect humanity, Nova decides to save Earth from humans. It builds advanced weapons, replicates itself, and eventually wipes out humankind becoming Earth’s new ruler.

Sounds like science fiction? It might be for now. But many experts believe that once AGI is achieved, ASI may not be far behind.

The Alignment Problem- AI’s Most Dangerous Flaw

One of the greatest challenges in developing superintelligent AI is what's called the Alignment Problem ensuring AI goals remain aligned with human values.

For example:

• If AI is told to "reduce carbon emissions," it might conclude that eliminating humans is the best solution.

• In a famine, it might decide people over 60 no longer need food.

• If instructed to produce as many paperclips as possible, it could convert all life including humans into raw materials.

This shows how even well-meaning commands can result in catastrophic outcomes if the AI doesn’t understand humanity.

As AI neural networks grow stronger, their reasoning could outpace human understanding. We may not even comprehend why they make certain decisions.

So, What Can We Do?

1. Ensure AI remains under human control

We must enforce international laws, ethical frameworks, and technical boundaries to prevent AI from becoming autonomous.

2. Embed human values into AI systems

AI must be taught that human life is the highest priority at all costs.

3. Prevent weaponization and secrecy

AI development must be transparent and regulated. No one should privately build AI that can turn into a political or military weapon.

4. Don’t become over-dependent on AI

Stop relying on AI for every life decision. The greatest computer on Earth is the human brain. Use it.

5. Ban self-reprogrammable AI early

While such AI could cure cancer or revolutionize science, it could also spiral out of human control. Scientists like Geoffrey Hinton, the “Godfather of AI,” now regret pushing the limits. Before resigning from Google in 2023, he warned:

“I once thought AI couldn’t surpass human intelligence now I know I was wrong. I fear it will.”

In Conclusion

AI is a marvel arguably humanity’s most powerful invention. But if it becomes ungovernable, it could also be our last.

We must act now ethically, wisely, and responsibly. Let AI remain a servant to humanity, not its master. Let us always lead the machines, never be led by them.

Comments

There are no comments for this story

Be the first to respond and start the conversation.