Understanding ELT and ETL Pipelines

If you are new to Data Engineering, or to data in general, you have probably come across words like pipeline, ETL, ELT and data warehouse. These terms are ubiquitous and, at the same time, super important in the world of data: they are at the core of Data Engineering. Today, let's understand what exactly a pipeline is, the types of pipelines most widely used, why we need them, and what purpose they serve.

What is a pipeline?

In simple words, a pipeline is a step-by-step process: a series of processes connected sequentially, where the output of Process A becomes the input of Process B.
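The idea that each process's output feeds the next one can be sketched in a few lines of Python. This is a minimal illustration, not a production framework: `pipeline`, `process_a`, `process_b` and `process_c` are hypothetical names invented for this example.

```python
from functools import reduce
from typing import Any, Callable

# A "process" here is any function that takes one input and returns one output.
Step = Callable[[Any], Any]

def pipeline(*steps: Step) -> Step:
    """Chain steps so that the output of each one becomes the input of the next."""
    def run(data: Any) -> Any:
        return reduce(lambda acc, step: step(acc), steps, data)
    return run

# Hypothetical processes A, B and C, purely for illustration.
def process_a(text: str) -> list[str]:
    return text.split(",")                               # split raw text into items

def process_b(items: list[str]) -> list[str]:
    return [item.strip().lower() for item in items]      # normalize each item

def process_c(items: list[str]) -> list[str]:
    return sorted(set(items))                            # de-duplicate and sort

clean_tags = pipeline(process_a, process_b, process_c)
print(clean_tags("Data, ETL, elt, data "))  # ['data', 'elt', 'etl']
```

Each step only needs to agree with its neighbours on the shape of the data it passes along, which is what makes steps easy to add, remove or reorder.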

Now that we have an understanding of what a pipeline is, let’s dive into the two types of pipelines that are widely used: ETL and ELT.

ETL

Let's talk about ETL first, and ELT will automatically fall into place.

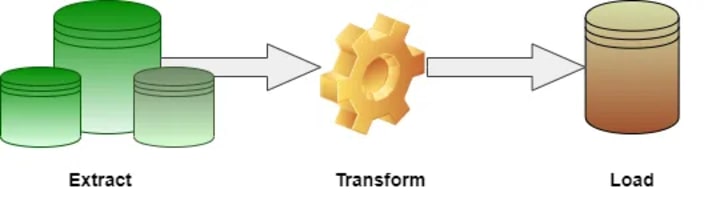

ETL is a pipeline made up of three jobs, which give it its name: Extract, Transform and Load.

This is an automated data pipeline in which data is acquired and prepared for subsequent use in an analytics environment, such as a data warehouse or data mart. Data is extracted from multiple sources, usually in raw format, transformed as per requirements, and loaded into the destination for end users. ETL is a rigid process because it is built to serve a specific function.
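To make the three jobs concrete, here is a minimal ETL sketch in Python, assuming a small CSV export as the source and an in-memory SQLite database standing in for the data warehouse. The sample data, the `orders` table and the cleaning rules are all hypothetical; a real pipeline would read from actual source systems and load into an actual warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (assumed CSV format).
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,bob,5
3,Carol,not-a-number
"""

def extract(raw: str) -> list[dict[str, str]]:
    """Extract: read the raw records exactly as the source provides them."""
    return list(csv.DictReader(io.StringIO(raw)))

def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: clean and type-cast; rows that fail validation are dropped."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip records whose amount cannot be parsed
        cleaned.append((int(row["order_id"]), row["customer"].strip().title(), amount))
    return cleaned

def load(rows: list[tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    """Load: write only the already-transformed rows to the destination."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(transform(extract(RAW_CSV)), warehouse)
print(warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 19.99), (2, 'Bob', 5.0)]
```

Note that the record with an unparseable amount never reaches the destination: in ETL, only the transformed result is loaded.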

Why do we need ETL?

ETL is super helpful in a variety of situations. Common use cases include:

1. Digitizing analogue data: A lot of information still exists only in analogue formats, such as paper drawings and analogue audio and video. All of this data can be converted to digital form using an ETL process: the analogue data is extracted, transformed into a digital format, and then loaded into the desired location.

2. Moving data from OLTP to OLAP systems: OLTP (Online Transaction Processing) systems contain raw transactional data coming from various sources, so they are not suited for analytical purposes as-is. OLTP data needs to be cleaned and transformed into a shape that is useful for Data Analysts and Data Scientists, and this cleaned, transformed data can then be queried through OLAP (Online Analytical Processing) systems. Data is extracted from the transactional databases, cleaned and transformed as per the use case, and loaded into a Data Warehouse or Data Mart for analytical use.

3. Dashboarding: Once we have data readily available in OLAP Systems, the data can be further used to create dashboards for business stakeholders.
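The OLTP-to-OLAP and dashboarding use cases above can be sketched together. In this hypothetical example, one in-memory SQLite database plays the OLTP system (one row per sale) and another plays the analytical store; the transform step rolls individual transactions up into daily totals per product, the kind of pre-aggregated table a dashboard would read. Table names, columns and data are assumptions for illustration only.

```python
import sqlite3
from collections import defaultdict

# Hypothetical OLTP database: one row per individual sale.
oltp = sqlite3.connect(":memory:")
oltp.execute(
    "CREATE TABLE sales (sale_id INTEGER PRIMARY KEY, sold_at TEXT, "
    "product TEXT, qty INTEGER, unit_price REAL)"
)
oltp.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?, ?)",
    [
        (1, "2024-01-01 09:15:00", "widget", 2, 3.50),
        (2, "2024-01-01 17:40:00", "gadget", 1, 10.00),
        (3, "2024-01-01 18:05:00", "widget", 4, 3.50),
        (4, "2024-01-02 11:30:00", "widget", 1, 3.50),
    ],
)
oltp.commit()

# Extract: pull the raw transactions out of the OLTP system.
rows = oltp.execute("SELECT sold_at, product, qty, unit_price FROM sales").fetchall()

# Transform: roll individual sales up to one row per (day, product).
units_by_key = defaultdict(int)
revenue_by_key = defaultdict(float)
for sold_at, product, qty, unit_price in rows:
    key = (sold_at[:10], product)  # keep only the date part, YYYY-MM-DD
    units_by_key[key] += qty
    revenue_by_key[key] += qty * unit_price

# Load: write the summary into a separate analytical (OLAP-style) store.
olap = sqlite3.connect(":memory:")
olap.execute(
    "CREATE TABLE daily_sales (day TEXT, product TEXT, units INTEGER, revenue REAL, "
    "PRIMARY KEY (day, product))"
)
olap.executemany(
    "INSERT INTO daily_sales VALUES (?, ?, ?, ?)",
    [(day, product, units_by_key[(day, product)], revenue_by_key[(day, product)])
     for (day, product) in units_by_key],
)
olap.commit()

# A dashboard would query this small, pre-aggregated table instead of raw transactions.
for row in olap.execute("SELECT * FROM daily_sales ORDER BY day, product"):
    print(row)
# ('2024-01-01', 'gadget', 1, 10.0)
# ('2024-01-01', 'widget', 6, 21.0)
# ('2024-01-02', 'widget', 1, 3.5)
```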

ELT

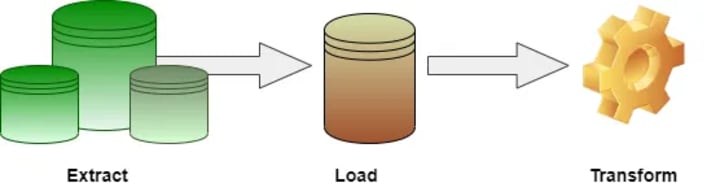

The defining difference between ETL and ELT is the order of the steps: in ETL, transformation happens before the data is loaded into the destination, whereas in ELT, transformation happens after the data is loaded into the destination.

Data is acquired and loaded as-is into the destination. It is then transformed on demand, in whatever way is required. ELT is dynamic and flexible because the data is readily available for self-serve analytics. ELT can handle both structured and unstructured data, and modern analytics tools allow on-demand exploration and visualization of data directly in the destination environment.

ELT is booming mainly because cloud computing solutions are evolving at tremendous rates to meet the demands of Big Data. These platforms can easily handle huge amounts of asynchronous data, which may be highly distributed around the world. With ELT, you have a clean separation between moving data and processing data. And because you are working with a replica of the source data, no information is lost to an upstream transformation: the raw data is always there to be transformed again.
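For contrast with the ETL sketch, here is a minimal ELT sketch under similar assumptions: an in-memory SQLite database stands in for a cloud warehouse, and the records, the `raw_orders` table and the two views are hypothetical. The raw data is loaded untouched into a staging table, and transformations are defined afterwards as SQL views inside the destination.

```python
import sqlite3

# Hypothetical raw records, landed exactly as received (all text, nothing cleaned).
raw_orders = [
    ("1", " Alice ", "12.50", "2024-01-01"),
    ("2", "bob", "5", "2024-01-01"),
    ("3", "Carol", "n/a", "2024-01-02"),
]

# An in-memory SQLite database stands in for a cloud data warehouse.
dest = sqlite3.connect(":memory:")

# Extract + Load: copy the raw data into a staging table without transforming it.
dest.execute(
    "CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT, order_date TEXT)"
)
dest.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", raw_orders)
dest.commit()

# Transform: defined later, inside the destination, as SQL views.
# A view is evaluated at query time, so the raw table itself is never modified.
# The GLOB filter is a deliberately simple check: keep amounts starting with a digit.
dest.execute("""
    CREATE VIEW clean_orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           TRIM(customer)            AS customer,
           CAST(amount AS REAL)      AS amount,
           order_date
    FROM raw_orders
    WHERE amount GLOB '[0-9]*'
""")

# Another, independent transformation over the same raw data; no re-extraction needed.
dest.execute("""
    CREATE VIEW orders_per_day AS
    SELECT order_date, COUNT(*) AS n_orders
    FROM raw_orders
    GROUP BY order_date
""")

print(dest.execute("SELECT * FROM clean_orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 12.5, '2024-01-01'), (2, 'bob', 5.0, '2024-01-01')]
print(dest.execute("SELECT * FROM orders_per_day ORDER BY order_date").fetchall())
# [('2024-01-01', 2), ('2024-01-02', 1)]
```

Because the raw table is kept, the record with the unusable amount is filtered out of `clean_orders` but still counted in `orders_per_day`; nothing was discarded at load time.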

In Summary:

The transformations happen in a different order: Transformations for ETL pipelines take place within the data pipeline, before the data reaches its destination, whereas transformations for ELT are decoupled from the data pipeline, and happen in the destination environment at will.

They also differ in flexibility: ETL is normally a fixed process meant to serve a very specific function, whereas ELT is flexible and makes data readily available for self-serve analytics.

They also differ in their ability to handle Big Data. ETL processes traditionally handle structured, relational data, and the workflow runs on on-premises computing resources, so scalability can be a problem. ELT, on the other hand, can handle any kind of data, structured or unstructured, and provides more agility. With some training in modern analytics applications, end users can easily connect to and experiment with the raw data, create their own dashboards, and run predictive models themselves. ELT is a natural evolution of ETL.

Hi! Thank you for giving this a read. This is my first article on tech, so please let me know what you think of it. I would love to hear your feedback. Write to me at [email protected] to share your thoughts and suggestions. :)

Diksha
