Regular Expressions in Compiler Design

Harnessing the Power of Regular Expressions for Efficient Lexical Analysis in Compiler Design

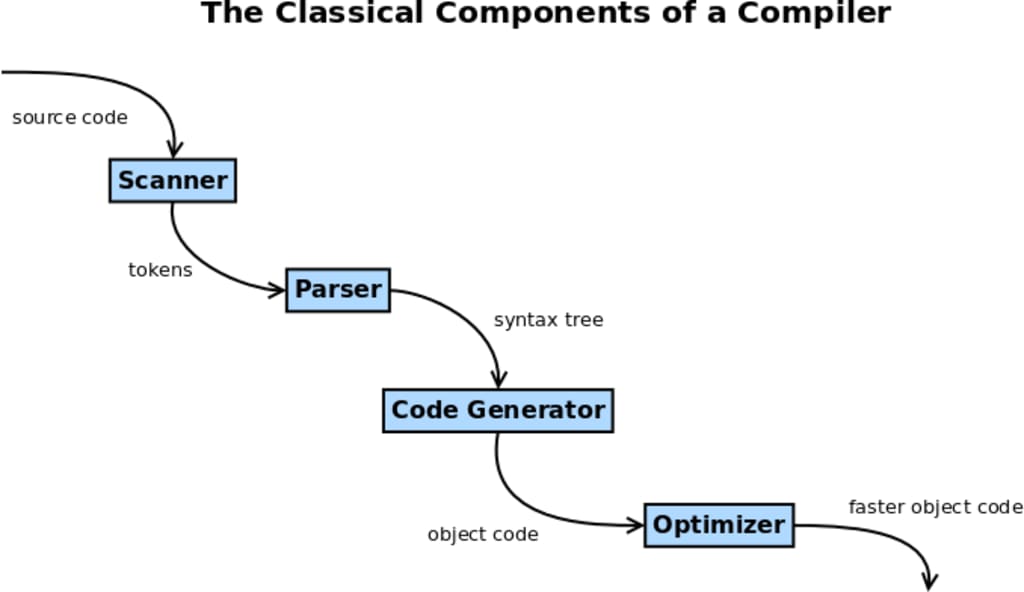

Regular expressions are a fundamental concept in compiler design. They give a compact, formal way to describe the character patterns that make up a language's tokens, which is the first thing a compiler has to recognize in raw source text. This post looks at how regular expressions are used in compiler design, their role in lexical analysis, and how they interact with other components of the compiler.

What Are Regular Expressions?

Regular expressions (regex) are sequences of characters that define search patterns. They are widely used for string matching and manipulation tasks. In compiler design, regular expressions are used to describe the lexical structure of programming languages. They help in defining tokens, which are the building blocks of source code.

The Role of Regular Expressions in Compiler Design

Lexical Analysis:

The first phase of compilation is lexical analysis, where the source code is broken down into tokens. Tokens are the smallest units of meaning, such as keywords, identifiers, literals, and operators. Regular expressions are used to define these tokens. For example, a regular expression for an integer token might be \d+, which matches one or more digits.
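As a minimal sketch of the integer example, the snippet below matches an integer lexeme with Python's `re` module. The helper name `match_integer` is hypothetical, and the pattern is written as `[0-9]+` rather than `\d+` because Python's `\d` also matches non-ASCII Unicode digits in string patterns.

```python
import re

# Pattern for an integer literal: one or more ASCII digits.
INTEGER = re.compile(r"[0-9]+")

def match_integer(text, pos=0):
    """Return the integer lexeme that starts at pos, or None if there is none."""
    m = INTEGER.match(text, pos)
    return m.group() if m else None
```

For instance, `match_integer("123abc")` returns `"123"`, while `match_integer("abc")` returns `None`.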

Finite Automata:

Regular expressions are converted into finite automata, which are the machines that actually recognize the patterns. The usual route is to build a non-deterministic finite automaton (NFA) from the regex, for example with Thompson's construction, and then turn that NFA into a deterministic finite automaton (DFA) with the subset construction. This conversion is a key step in lexical analysis because it enables efficient pattern matching and token recognition.

NFA: An NFA is usually the first automaton built from a regex, because the construction follows the structure of the expression directly. A state can have several transitions on the same input symbol, as well as epsilon (empty) transitions, so the automaton can be in several states at once.

DFA: A DFA, on the other hand, has exactly one transition per state for each input symbol and no epsilon (empty) transitions. It is more efficient for pattern matching because it is always in a single state and never needs to backtrack or track alternatives.
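The difference between the two automata can be sketched in a few lines. The code below is an illustration under stated assumptions, not a production implementation: the NFA for the regex `(a|b)*ab` is written out by hand (its state numbering is hypothetical), `nfa_accepts` simulates it by tracking a set of active states, and `subset_construction` builds the equivalent DFA whose states are sets of NFA states.

```python
from collections import deque

EPS = None  # label used here for epsilon (empty) transitions

# Hand-written NFA for (a|b)*ab over the alphabet {a, b}.
# State 0 is the start state; state 3 is the only accepting state.
NFA = {
    (0, EPS): {1},
    (1, "a"): {1, 2},   # two choices on the same symbol: non-determinism
    (1, "b"): {1},
    (2, "b"): {3},
}
NFA_START, NFA_ACCEPT = 0, {3}
ALPHABET = ("a", "b")

def eps_closure(states):
    """All states reachable from `states` using only epsilon transitions."""
    stack, closure = list(states), set(states)
    while stack:
        s = stack.pop()
        for t in NFA.get((s, EPS), ()):
            if t not in closure:
                closure.add(t)
                stack.append(t)
    return frozenset(closure)

def move(states, symbol):
    """States reachable from `states` by one transition on `symbol`."""
    return {t for s in states for t in NFA.get((s, symbol), ())}

def nfa_accepts(text):
    """Simulate the NFA by tracking the whole set of active states."""
    current = eps_closure({NFA_START})
    for ch in text:
        current = eps_closure(move(current, ch))
    return bool(current & NFA_ACCEPT)

def subset_construction():
    """Build a DFA (start, table, accepting) whose states are sets of NFA states."""
    start = eps_closure({NFA_START})
    table, accepting = {}, set()
    queue, seen = deque([start]), {start}
    while queue:
        state = queue.popleft()
        if state & NFA_ACCEPT:
            accepting.add(state)
        for sym in ALPHABET:
            target = eps_closure(move(state, sym))
            table[(state, sym)] = target
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return start, table, accepting

def dfa_accepts(text, dfa):
    """Run the DFA: exactly one table lookup per input character."""
    state, table, accepting = dfa
    for ch in text:
        state = table[(state, ch)]
    return state in accepting
```

Both simulations agree on every input over `{a, b}`; the DFA simply does the set bookkeeping once, ahead of time, instead of on every character. Note that `dfa_accepts` in this sketch assumes the input contains only symbols from `ALPHABET`.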

Pattern Matching:

Once the regex is converted to a finite automaton, the lexical analyzer (also known as the lexer or scanner) uses it to scan the input source code. As the lexer reads the input, it uses the automaton to match sequences of characters against the patterns defined by regular expressions.
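One common way to drive a scanner from an automaton is to keep running the DFA for as long as it has a transition and remember the last accepting position, often called the longest-match or "maximal munch" rule. The sketch below assumes a small hand-built DFA for identifiers and integers; the state names, `char_class`, and `longest_match` are hypothetical names chosen for this illustration.

```python
# Accepting DFA states and the token kind each one recognizes.
ACCEPTING = {"ident": "IDENTIFIER", "int": "INTEGER"}

# Transition table keyed by (state, character class).
TRANSITIONS = {
    ("start", "letter"): "ident",
    ("start", "digit"): "int",
    ("ident", "letter"): "ident",
    ("ident", "digit"): "ident",
    ("int", "digit"): "int",
}

def char_class(ch):
    """Map a character to the input class used by the transition table."""
    if ch.isascii() and ch.isalpha():
        return "letter"
    if ch.isascii() and ch.isdigit():
        return "digit"
    return "other"

def longest_match(text, pos):
    """Run the DFA from pos and return (token_kind, lexeme) for the longest
    accepted prefix, or None if no prefix is accepted."""
    state, last = "start", None
    i = pos
    while i < len(text):
        state = TRANSITIONS.get((state, char_class(text[i])))
        if state is None:      # no transition: the DFA is stuck
            break
        i += 1
        if state in ACCEPTING:  # remember the most recent accepting position
            last = (ACCEPTING[state], text[pos:i])
    return last
```

Calling `longest_match("count1 = 42", 0)` returns `("IDENTIFIER", "count1")`: the DFA keeps consuming letters and digits and stops only when it reaches the space.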

Token Generation:

As the lexer identifies tokens based on regex patterns, it generates a stream of tokens that are then passed to the parser. This stream of tokens is essential for the syntax analysis phase, where the structure of the code is analyzed based on grammatical rules.
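The handoff from lexer to parser can be illustrated with a toy consumer. In this sketch the token stream is written out by hand in place of a real lexer, and `Token` and `parse_sum` are hypothetical names; the parser only sees token kinds and lexemes, never raw characters.

```python
from typing import Iterator, NamedTuple

class Token(NamedTuple):
    kind: str   # token class, e.g. "INT" or "PLUS"
    text: str   # the matched lexeme

def parse_sum(stream: Iterator[Token]) -> int:
    """Toy parser for the grammar: sum -> INT (PLUS INT)*.

    It pulls tokens from the stream one at a time and returns the total.
    """
    first = next(stream, None)
    if first is None or first.kind != "INT":
        raise SyntaxError("expected an integer")
    total = int(first.text)
    for op in stream:
        operand = next(stream, None)
        if op.kind != "PLUS" or operand is None or operand.kind != "INT":
            raise SyntaxError("expected '+' followed by an integer")
        total += int(operand.text)
    return total

# Tokens a lexer might produce for the source text "1 + 20 + 3".
stream = iter([
    Token("INT", "1"), Token("PLUS", "+"),
    Token("INT", "20"), Token("PLUS", "+"),
    Token("INT", "3"),
])
result = parse_sum(stream)  # 24
```

Because the parser works on an iterator, the lexer can produce tokens lazily instead of tokenizing the whole file up front.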

Example of Regular Expressions in Compiler Design

Let’s consider a simple example. Suppose we are designing a compiler for a small programming language with the following tokens:

- Keywords: if, else, while

- Identifiers: Sequences of letters and digits, starting with a letter

- Integer literals: Sequences of digits

- Operators: +, -, *, /

We can define regular expressions for these tokens as follows:

- Keywords: if|else|while

- Identifiers: [a-zA-Z][a-zA-Z0-9]*

- Integer literals: \d+

- Operators: [+\-*/]

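These regular expressions can be converted into NFAs and then combined into a single DFA that recognizes all four token classes in one pass over the source code. Because the identifier pattern also matches if, else, and while, the lexer needs a tie-breaking rule: it takes the longest match and, when two patterns match the same text, prefers the pattern listed first, which here is the keyword pattern.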
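The four patterns above can be tried out directly with Python's `re` module. This is a sketch under assumptions rather than a DFA-based lexer: Python's engine is a backtracking matcher, and its alternation picks the first alternative that matches rather than the longest one, so the keyword pattern carries a negative lookahead to keep `if` from matching the start of a longer identifier such as `iffy`. The names `TOKEN_SPEC`, `MASTER`, and `tokenize`, and the whitespace-skipping `SKIP` rule, are additions for this example.

```python
import re

# One alternative per token class. Order matters: KEYWORD comes before
# IDENTIFIER, and the lookahead rejects a keyword followed by a letter or digit.
TOKEN_SPEC = [
    ("KEYWORD",    r"(?:if|else|while)(?![a-zA-Z0-9])"),
    ("IDENTIFIER", r"[a-zA-Z][a-zA-Z0-9]*"),
    ("INTEGER",    r"[0-9]+"),
    ("OPERATOR",   r"[+\-*/]"),
    ("SKIP",       r"\s+"),   # whitespace separates tokens but is not one
]
MASTER = re.compile("|".join(f"(?P<{name}>{pat})" for name, pat in TOKEN_SPEC))

def tokenize(source):
    """Return a list of (kind, lexeme) pairs for the small example language."""
    pos, out = 0, []
    while pos < len(source):
        m = MASTER.match(source, pos)
        if m is None:
            raise SyntaxError(f"unexpected character {source[pos]!r} at offset {pos}")
        if m.lastgroup != "SKIP":
            out.append((m.lastgroup, m.group()))
        pos = m.end()
    return out
```

For example, `tokenize("if x1 + 42")` returns `[("KEYWORD", "if"), ("IDENTIFIER", "x1"), ("OPERATOR", "+"), ("INTEGER", "42")]`, and `tokenize("iffy")` returns a single identifier token.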

Challenges and Considerations

Complex Patterns:

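While regular expressions are powerful, they have limitations. They cannot describe arbitrarily nested structures such as balanced parentheses or nested blocks, because a finite automaton has only a fixed number of states and so cannot count an unbounded nesting depth. In such cases, more advanced parsing techniques are needed, typically a parser driven by a context-free grammar.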
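A short sketch shows what the extra power looks like in practice. Checking balanced parentheses needs a counter that can grow without bound, which is exactly what a finite automaton lacks. The function name `balanced` is hypothetical.

```python
def balanced(text):
    """Return True if every '(' in text is closed by a later ')'."""
    depth = 0                 # unbounded counter: not expressible as a finite state
    for ch in text:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:     # a ')' appeared with no open '('
                return False
    return depth == 0
```

Here `balanced("((a)(b))")` is `True` and `balanced("(()")` is `False`. A regular expression can only check nesting up to some fixed depth chosen in advance.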

Efficiency:

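The efficiency of the lexer depends on the efficiency of the DFA. A DFA matches in time proportional to the length of the input, but building it can be expensive: in the worst case, the subset construction produces a DFA whose number of states is exponential in the number of NFA states, so large regular expressions can lead to large tables.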
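The cost of the conversion can be made concrete with a standard textbook example, the language "the n-th symbol from the end is a" over the alphabet {a, b}. An NFA for it needs only n + 1 states, but the DFA produced by the subset construction has 2^n. The sketch below counts the reachable DFA states; `dfa_state_count` is a hypothetical helper written for this illustration.

```python
def dfa_state_count(n):
    """Count the DFA states that the subset construction produces for the
    language 'the n-th symbol from the end is a' over {a, b}.

    NFA: state 0 loops on a and b and also moves to state 1 on a;
    states 1..n-1 advance on either symbol; state n is accepting.
    """
    def step(states, symbol):
        nxt = set()
        for s in states:
            if s == 0:
                nxt.add(0)
                if symbol == "a":
                    nxt.add(1)
            elif s < n:
                nxt.add(s + 1)
        return frozenset(nxt)

    start = frozenset({0})
    seen, todo = {start}, [start]
    while todo:
        state = todo.pop()
        for symbol in "ab":
            target = step(state, symbol)
            if target not in seen:
                seen.add(target)
                todo.append(target)
    return len(seen)

counts = [dfa_state_count(n) for n in (1, 2, 3, 10)]  # [2, 4, 8, 1024]
```

Token patterns in real languages rarely hit this worst case, but it explains why lexer generators often include a DFA minimization step.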

Error Handling:

Lexical analyzers must handle errors gracefully. For instance, they should be able to identify and report unrecognized tokens or invalid patterns.
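One simple recovery strategy is to record the position of the offending character, skip it, and keep scanning so that several errors can be reported in one run. The sketch below assumes that strategy; `tokenize_with_errors` and the `line:column` message format are choices made for this example, not a standard.

```python
import re

TOKEN_RE = re.compile(
    r"(?P<INTEGER>[0-9]+)"
    r"|(?P<IDENTIFIER>[a-zA-Z][a-zA-Z0-9]*)"
    r"|(?P<OPERATOR>[+\-*/])"
    r"|(?P<NEWLINE>\n)"
    r"|(?P<SKIP>[ \t]+)"
)

def tokenize_with_errors(source):
    """Return (tokens, errors); unrecognized characters are reported and skipped."""
    tokens, errors = [], []
    pos, line, line_start = 0, 1, 0
    while pos < len(source):
        m = TOKEN_RE.match(source, pos)
        if m is None:
            col = pos - line_start + 1
            errors.append(f"{line}:{col}: unrecognized character {source[pos]!r}")
            pos += 1                    # recovery: drop one character and go on
            continue
        kind = m.lastgroup
        if kind == "NEWLINE":           # track line numbers for error messages
            line += 1
            line_start = m.end()
        elif kind != "SKIP":
            tokens.append((kind, m.group()))
        pos = m.end()
    return tokens, errors

tokens, errors = tokenize_with_errors("x + 1\ny @ 2")
# errors == ["2:3: unrecognized character '@'"]
```

The valid tokens on both lines are still returned, so later phases can continue and report further problems.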

Conclusion

Regular expressions are a vital component of compiler design, especially in the lexical analysis phase. They provide a concise and expressive way to define patterns for tokens, which are crucial for interpreting and processing source code. Understanding how regular expressions work and their role in compiler construction is essential for anyone interested in compiler design or text processing.

About the Creator

Pushpendra Sharma

I am currently working as a Digital Marketing Executive at Tutorials and Examples.
