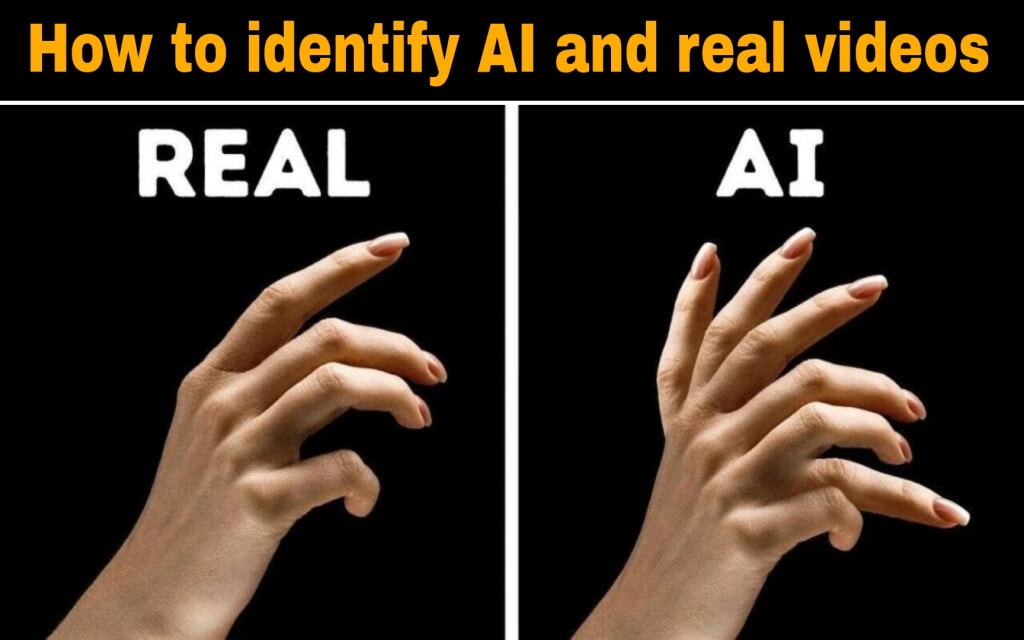

How to identify fake AI videos on social media?

Social media is flooded with AI-generated videos that look real but are completely fake. Learn how to identify them by spotting signs like low quality, short length, and unrealistic details before believing what you see online.

Social media is now full of videos created with artificial intelligence (AI), and people are often fooled by them. The important question is how to tell which videos or other content on social media were made with AI.

Over the past few months, some high-profile AI videos have fooled a large number of people. They all had something in common. A fake but cheerful video of wild rabbits jumping on a trampoline received more than 240 million likes on TikTok.

Millions of people liked a clip of two people falling in love on the New York subway, only to be disappointed when it turned out to be fake.

I personally fell for a viral video of an American pastor in a conservative church who was preaching in a surprisingly left-wing manner: ‘Billionaires are the only minority we should be afraid of.’

I was stunned. But it was just more AI.

The tools for creating AI videos have also improved a lot in the last six months, and you may be fooled again and again unless you start looking at everything with a skeptical eye.

Welcome to the future. But there are some signs to look for, and one is very obvious.

If you see a video of poor quality, with grainy or blurry footage, alarm bells should ring that you may be watching an AI video.

“That’s the first thing we look at,” says Hany Farid, a professor of computer science at the University of California, Berkeley.

The sad truth is that AI video tools will keep getting better, and this advice will soon be useless.

But bear with me for a minute, because for now this advice may help you to some extent.

“If you see something that is really low quality, it doesn’t mean it’s fake,” says Matthew Stamm, professor and head of the Multimedia and Information Security Lab at Drexel University in the US.

It’s a sign that you should take a closer look at what you’re seeing.

“Popular text-to-video generators like Google’s Veo and OpenAI’s Sora still produce small discrepancies,” says Farid. “But it’s not going to be six fingers or garbled text. It’s much more subtle than that.”

Even today’s most advanced models often produce issues like abnormally smooth skin textures, strange or changing patterns in hair and clothing, or small background objects moving in unrealistic or impossible ways.

These can be easy to overlook, but the clearer the image, the more likely you are to spot the things that tell you it’s AI.

This is exactly why low-quality video appeals to anyone trying to pass off a fake.

When you ask AI to make something that looks like it was made with an old phone or security camera, it can hide artifacts that would normally be suspicious.

“There are three things to look for: resolution, quality and length,” says Farid.

Length is the easiest to see. “Most AI videos are very short, even shorter than the typical videos we see on TikTok or Instagram, which are around 30 to 60 seconds,” says Farid. “The majority of the videos I’m asked to verify are six, eight or 10 seconds long.”

That’s because AI videos are expensive to produce, so most tools create short clips.

Also, the longer the video, the more likely the AI is to mess up.

You can stitch multiple AI videos together, but you’ll see cuts every eight seconds or so.
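The length clues above can be written down as a rough checklist. The sketch below is only an illustration, not a detector: the `ClipInfo` type, the function name and the thresholds (10 seconds for a "short" clip, cuts roughly every 8 seconds) are assumptions chosen to match the figures quoted above, and a match means "look closer", not "this is fake".

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClipInfo:
    """Hypothetical summary of a video: its length and where the hard cuts fall."""
    duration_s: float          # total length in seconds
    cut_times_s: List[float]   # timestamps of hard cuts, in seconds


def length_warning_signs(clip: ClipInfo,
                         short_clip_s: float = 10.0,
                         segment_s: float = 8.0,
                         tolerance_s: float = 1.0) -> List[str]:
    """Return the length-related warning signs that apply to this clip."""
    signs = []

    # Sign 1: the whole video is only a few seconds long.
    if clip.duration_s <= short_clip_s:
        signs.append("very short clip")

    # Sign 2: the video is stitched from segments of roughly equal length.
    boundaries = [0.0] + sorted(clip.cut_times_s) + [clip.duration_s]
    segments = [end - start for start, end in zip(boundaries, boundaries[1:])]
    if clip.cut_times_s and all(abs(s - segment_s) <= tolerance_s for s in segments):
        signs.append("cuts at regular intervals")

    return signs


# An 8-second clip with no cuts, and a 24-second clip cut every 8 seconds.
print(length_warning_signs(ClipInfo(8.0, [])))
print(length_warning_signs(ClipInfo(24.0, [8.0, 16.0])))
```

Plenty of genuine videos are short or evenly edited, so these checks only make sense alongside the other signs.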

The other two factors, resolution and quality, are related but different.

Resolution refers to the number or size of pixels in an image. Quality here largely comes down to compression, a process that reduces the size of a video file by discarding detail, which often results in blocky patterns and blurred edges.
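The difference is easier to see on a tiny example. The sketch below treats an image as a grid of brightness values from 0 to 255. `downscale` lowers the resolution by averaging blocks of pixels, while `quantize` is only a crude stand-in for compression that snaps values to coarse steps. Both function names are made up for this illustration, and real video codecs are far more sophisticated than this.

```python
def downscale(image, factor):
    """Lower the resolution: average each factor x factor block into one pixel."""
    height, width = len(image), len(image[0])
    out = []
    for y in range(0, height - height % factor, factor):
        row = []
        for x in range(0, width - width % factor, factor):
            block = [image[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) // len(block))
        out.append(row)
    return out


def quantize(image, step):
    """Crude stand-in for lossy compression: snap each value down to a multiple of step."""
    return [[(p // step) * step for p in row] for row in image]


image = [
    [10, 12, 200, 202],
    [14, 16, 204, 206],
    [90, 94, 50, 54],
    [98, 102, 58, 62],
]

print(downscale(image, 2))   # fewer pixels: a 2 x 2 grid
print(quantize(image, 32))   # same pixel count, but fine variation is flattened
```

Downscaling leaves fewer pixels to inspect, while the quantized version keeps every pixel but wipes out the small differences between neighbors. Either way, the fine detail that might give a fake away is gone.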

“What if I’m trying to fool people?” says Farid. “I create my fake video, then I reduce the resolution so that you can still see it, and then I add compression that further obscures any possible artifacts. It’s a common technique.”

The problem is that even as you read this, big tech companies are spending billions of dollars to make AI video even more realistic.

“I have some bad news,” says Stamm. “The visual signs you can find in videos today won’t be there for long.”

“I expect these signs to disappear from videos within two years. At least the ones that are very obvious because they have already disappeared from AI-generated images. You can’t even trust your eyes anymore.”

That doesn’t mean that truth has been lost. When researchers like Farid and Stamm verify a piece of content, they have more sophisticated techniques at their disposal.

“When you create or edit a video, it leaves behind very small digital traces that our eyes can’t see, like fingerprints at a crime scene,” Stamm says.

“We’re seeing techniques emerging that can help find and expose these fingerprints.”

For example, the distribution of pixel values in a fake video can sometimes differ from that of a real video. But such indicators are not foolproof.
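To make "distribution of pixels" concrete, here is a minimal sketch that compares the brightness histograms of two sets of pixel values. It is not how forensic tools actually work (the article does not describe their methods). The function names, the bin count and the choice of distance measure are all assumptions made for illustration.

```python
def histogram(pixels, bins=8, max_value=256):
    """Return the share of pixels that falls into each brightness bin."""
    if not pixels:
        raise ValueError("pixels must not be empty")
    counts = [0] * bins
    width = max_value / bins
    for p in pixels:
        # Clamp so that the brightest value lands in the last bin.
        counts[min(int(p // width), bins - 1)] += 1
    return [c / len(pixels) for c in counts]


def histogram_distance(a, b):
    """Total variation distance: 0.0 for identical histograms, 1.0 for no overlap."""
    return 0.5 * sum(abs(x - y) for x, y in zip(a, b))


# Two made-up pixel samples: one spread across the range, one bunched in the middle.
reference = [0, 40, 80, 120, 160, 200, 240, 255]
suspect = [100, 110, 120, 125, 130, 135, 140, 150]

distance = histogram_distance(histogram(reference), histogram(suspect))
print(f"distance = {distance:.2f}")
```

A large distance only says the two samples look statistically different. As noted above, such measures are not foolproof, and an ordinary change in lighting or camera would shift them too.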

Technology companies are also working on new standards for verifying digital information.

Essentially, cameras can add information to the file when they take a picture to help prove it’s real.

By the same token, AI tools can automatically add similar details to their videos and photos to show they were AI-generated.

Stamm and others say these efforts could help.

The real solution, according to digital expert Mike Caulfield, is for us all to start thinking differently about what we see online.

“Video is going to become more like text in the long run, where the source is what matters most, not the features, and we can be prepared for that,” says Caulfield.

Videos and photos used to be different from text because they were harder to fake and alter. That’s gone now.

The only thing that matters now is where the content came from, who posted it, what the context is, and whether it’s been verified by a reliable source.

The question is when we will all come to terms with this reality.

“I think this is the biggest information security challenge of the 21st century, but the problem is only a few years old,” says Stamm. “The number of people working to tackle it is relatively small but growing rapidly. We will need a combination of solutions: education, smart policies and technical approaches that all work together. I am not optimistic.”

Thomas Germain is a senior technology journalist at the BBC. He has covered AI, privacy and internet culture for a decade.

About the Creator

Shoaib Khan

Did You Know? 🤔
