AI in warfare

The Ethics of Artificial Intelligence in Warfare

Introduction:

The rapid development of artificial intelligence (AI) has opened new possibilities in many fields, including warfare. As AI technologies advance, the integration of autonomous weapons and intelligent systems into military operations raises significant ethical concerns. This essay explores the ethical implications of using AI in warfare, focusing on the risks, the question of accountability, and the potential consequences of deploying autonomous weapons.

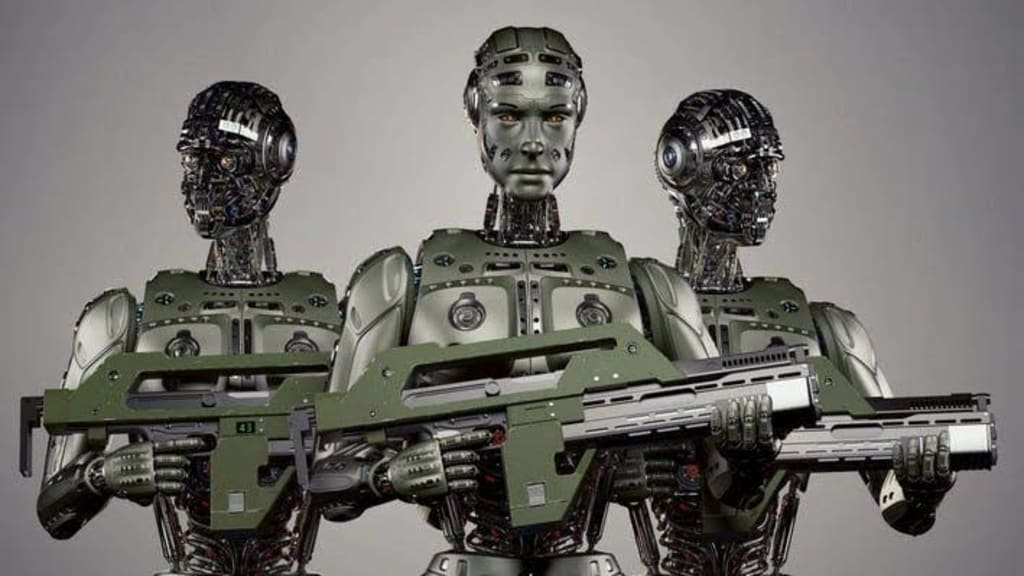

The Role of Autonomous Weapons:

Autonomous weapons, sometimes called "killer robots," are AI-powered systems capable of selecting and engaging targets without human intervention. Proponents argue that such weapons can reduce human casualties and make warfare more efficient, but delegating lethal decisions to machines raises serious ethical concerns. Because these systems lack human judgment and empathy, it is doubtful whether they can respect proportionality, distinction, and the inherent value of human life.

Accountability and Responsibility:

One of the key ethical dilemmas in AI warfare is accountability. Who should be held responsible when an autonomous weapon causes harm or behaves in ways its operators did not intend? Human soldiers can be held accountable for their actions, but an AI system cannot, and it is far from clear where responsibility should fall instead. This accountability gap raises concerns about transparency, the adequacy of legal frameworks, and the ability to prevent or address unintended consequences in armed conflicts.

Risk of Unintended Consequences:

AI technologies, while promising, are not infallible. Autonomous weapons systems can make errors or be compromised by hacking, potentially causing unintended harm or escalating a conflict. Loss of control over such systems, or unpredictable behavior on their part, could lead to accidents and miscalculations with severe humanitarian and strategic implications.

Ethical Concerns and Just War Theory:

The use of AI in warfare challenges the principles of just war theory, which seeks to define ethical conduct during armed conflict. Proportionality, discrimination (also called distinction), and the minimization of harm to civilians are central to that theory. AI technologies may compromise these principles, because autonomous weapons may have difficulty accurately distinguishing combatants from non-combatants or assessing whether an attack is proportionate.

Long-term Implications and Arms Race:

The integration of AI into warfare has implications beyond these immediate ethical concerns. The development and deployment of autonomous weapons could trigger an arms race, with nations competing for strategic advantage. Such a race could lead to greater instability, the proliferation of weapons, and the erosion of international norms and agreements. Its long-term consequences are difficult to predict and could have far-reaching effects on global security.

Conclusion:

The use of artificial intelligence in warfare poses profound moral and practical challenges. Autonomous weapons raise questions about accountability, unintended consequences, and adherence to ethical principles during armed conflict. As AI technologies continue to advance, policymakers, experts, and society at large need to engage in informed and inclusive discussion and establish robust frameworks for the responsible and ethical deployment of AI in warfare. Balancing technological progress with humanitarian considerations is essential to prevent the erosion of fundamental ethical values and to safeguard the welfare of both combatants and civilians in an increasingly AI-driven world.
