Nvidia’s Project DIGITS: Your Personal AI Supercomputer

Revolutionizing AI development with desktop-sized power.

What to Know About Nvidia's Project DIGITS: The Personal AI Supercomputer Revolution

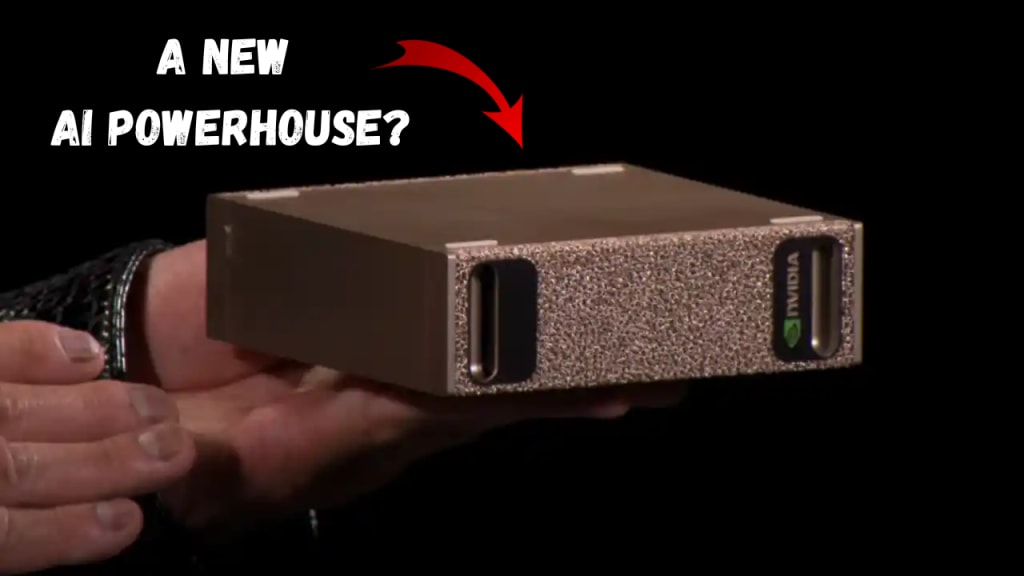

With Project DIGITS, Nvidia, a long-time pioneer in the computer industry, has the potential to drastically change how people work with artificial intelligence. Imagine being able to run and fine-tune sophisticated AI models, analyse enormous datasets, and spur innovation from your home office or lab, with computational capacity once reserved for a data centre compressed into a stylish desktop device. The unveiling of Project DIGITS at CES 2025 marks a significant step in the democratisation of AI. Whether you are a developer, researcher, student, or tech enthusiast, this article covers what you need to know about Nvidia's announcement.

________________________________________

What Is Project DIGITS? The Desktop Powerhouse

Project DIGITS is Nvidia’s answer to the growing demand for accessible, high-performance AI computing. Think of it as a personal AI supercomputer—a compact, energy-efficient machine that packs the muscle of a cloud server into a device smaller than a gaming PC. Starting at $3,000, it’s designed to empower users to train complex AI models locally, bypassing the need for expensive cloud subscriptions or corporate-grade infrastructure.

At its core, Project DIGITS is built to democratize AI development. Historically, only well-funded organizations could afford the computing power required for cutting-edge AI research. With Project DIGITS, Nvidia aims to level the playing field, enabling indie developers, universities, and startups to experiment with large language models (LLMs), robotics, healthcare analytics, and more—all from their desks.

________________________________________

Why Project DIGITS Matters: Bridging the AI Divide

To understand why Project DIGITS is revolutionary, consider the challenges of today’s AI landscape:

• Cloud Dependency: Training AI models often requires renting cloud servers, which can cost thousands of dollars monthly.

• Latency Issues: Uploading data to the cloud and waiting for results slows down experimentation.

• Resource Barriers: Small teams lack access to the hardware needed for ambitious projects.

Project DIGITS tackles these pain points head-on by offering:

• Localized Power: Run models on your own desk, with no upload step and no cloud queue.

• One-Time Cost: A $3,000 investment replaces recurring cloud fees.

• Energy Efficiency: Runs from a standard electrical outlet rather than data-center power infrastructure.

Nvidia isn’t just selling hardware—it’s fostering a new wave of innovation by putting industrial-strength tools in the hands of everyday creators.

________________________________________

Under the Hood: The Hardware Powering Project DIGITS

What makes Project DIGITS so powerful? Let’s dissect its groundbreaking components:

1. The GB10 Grace Blackwell Superchip: A Beast in a Box

The heart of Project DIGITS is the GB10 Grace Blackwell Superchip, a custom system-on-a-chip (SoC) co-designed with MediaTek. This hybrid marvel combines:

• Blackwell GPU Architecture: Nvidia’s latest GPU tech, optimized for AI workloads.

• 20-Core Arm-Based Grace CPU: Delivers raw processing power for multitasking.

• 1 Petaflop Performance: That’s up to 1 quadrillion operations per second at FP4 precision, which Nvidia says is enough to run models with up to 200 billion parameters.

To put this in perspective, a petaflop was supercomputer territory not long ago, though those machines were rated at 64-bit precision rather than FP4, so the comparison is a loose one. The GB10 reaches its figure while sipping power like a high-end desktop.

2. Unified Memory: No More Data Traffic Jams

Project DIGITS features 128GB of unified memory, shared seamlessly between the CPU and GPU. Traditional systems force data to shuffle between separate memory pools, creating bottlenecks. With unified memory, tasks like training neural networks or rendering 3D simulations happen faster because the hardware acts as a cohesive unit.

3. Storage and Scalability: Grow as You Go

• Up to 4TB NVMe SSD Storage: Store massive datasets (like medical imaging libraries or video archives) locally for rapid access.

• Scalable Design: Link two DIGITS units via Nvidia’s ConnectX networking to handle models with up to 405 billion parameters—a feature previously reserved for server farms.
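
The 200-billion and 405-billion parameter figures line up with simple memory arithmetic. The sketch below is a rough estimate, not Nvidia's own sizing method: it counts model weights only, treats 1 GB as 10^9 bytes, and ignores the KV cache, activations, and the operating system, so real headroom will be smaller. The function names are illustrative.

```python
# Back-of-the-envelope check: do a model's weights fit in unified memory?
# Assumptions (not from Nvidia): weights only, 1 GB = 10**9 bytes,
# no allowance for KV cache, activations, or the operating system.

BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}
UNIT_MEMORY_GB = 128  # unified memory per DIGITS unit, as stated in the article


def weights_gb(params_billions: float, precision: str) -> float:
    """Approximate size of the model weights in GB."""
    bytes_per_param = BITS_PER_PARAM[precision] / 8
    # billions of parameters * bytes per parameter = gigabytes
    return params_billions * bytes_per_param


def fits(params_billions: float, precision: str, units: int = 1) -> bool:
    """True if the weights alone fit in the combined memory of `units` machines."""
    return weights_gb(params_billions, precision) <= units * UNIT_MEMORY_GB


if __name__ == "__main__":
    for params, units in [(200, 1), (405, 1), (405, 2)]:
        for precision in BITS_PER_PARAM:
            size = weights_gb(params, precision)
            verdict = "fits" if fits(params, precision, units) else "does not fit"
            print(f"{params}B @ {precision}: {size:.1f} GB, {verdict} in {units} unit(s)")
```

Under these assumptions, a 200-billion-parameter model needs about 100 GB at FP4, which fits in one unit's 128GB, while a 405-billion-parameter model needs about 202.5 GB and only fits once two units are linked. At FP16 neither model fits, which is why the low-precision FP4 format matters so much here.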

________________________________________

Software: The Brains Behind the Brawn

Hardware is only half the story. Project DIGITS ships with a curated AI software ecosystem to kickstart productivity:

1. Nvidia DGX OS: Linux, Optimized for AI

The machine runs Nvidia DGX OS, an Ubuntu-based Linux distribution tailored for AI workflows. It supports popular tools like Docker and Kubernetes, making it easy to deploy containerized applications.

2. Pre-Installed Frameworks and Libraries

• PyTorch and TensorFlow: Build and train models using industry-standard frameworks.

• Jupyter Notebooks: Prototype ideas in an interactive coding environment.

• Nvidia NeMo: Fine-tune open-weight LLMs such as Llama, or create custom chatbots.

• RAPIDS: Accelerate data science tasks with GPU libraries such as cuDF and cuML, which mirror the pandas and scikit-learn APIs.
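
A common first step with any of these frameworks is confirming that the GPU is visible. The snippet below is a minimal sketch rather than anything DIGITS-specific: `pick_device` is a hypothetical helper name, and it falls back to the CPU when PyTorch is not installed, so it runs on any machine.

```python
def pick_device() -> str:
    """Return "cuda" when PyTorch can see a GPU, otherwise "cpu"."""
    try:
        import torch  # optional dependency; not part of the standard library
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print(f"Training would run on: {pick_device()}")
```

The returned string can be passed to calls such as `model.to(device)` in PyTorch, so the same script works on a laptop and on a GPU machine.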

3. Nvidia AI Enterprise Suite

This package includes tools for model deployment, monitoring, and optimization. Think of it as an “AI developer’s toolbox” with everything from data labeling utilities to security features. Nvidia AI Enterprise is normally a licensed product, so check what the purchase price covers.

________________________________________

Real-World Applications: Who Could Use Project DIGITS?

Project DIGITS is aimed at practical workloads across several industries:

1. Healthcare: Revolutionizing Diagnostics

Hospitals could use DIGITS to analyze 3D medical scans on site, helping detect tumors or anomalies sooner. Researchers could also process genomic data to identify disease markers, accelerating drug discovery.

2. Robotics: Training Smarter Machines

Startups could train models for autonomous drones and factory robots on DIGITS. By simulating environments and iterating models locally, developers could shorten each training cycle considerably.

3. Creative Industries: AI-Driven Art and Design

Animators and game studios could use DIGITS to generate assets via tools like Stable Diffusion or to speed up CGI work. A solo developer could prototype a polished game trailer without a render farm.

4. Academia: Teaching the Next Generation

Universities could integrate DIGITS into curricula, letting students experiment with LLMs and robotics, skills once limited to PhD candidates with server access.

________________________________________

Benefits vs. Challenges: Is Project DIGITS Right for You?

Pros

• Cost Savings: A one-time $3,000 purchase vs. $10,000+ annually on cloud credits.

• Speed: No network round trips to the cloud, so you can test ideas quickly.

• Privacy: Sensitive data stays offline.

Cons

• Learning Curve: Requires basic AI/ML knowledge.

• Upfront Cost: Still pricey for hobbyists (though cheaper than cloud long-term).
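
The "cheaper than cloud long-term" claim can be checked with a quick break-even calculation. This is a rough sketch using the article's own figures ($3,000 for the hardware, $10,000 a year for cloud credits). It assumes cloud spending is spread evenly across the year, and the optional `running_cost_per_month` parameter (electricity, for example) is a hypothetical addition, not a figure from Nvidia.

```python
def break_even_months(
    hardware_cost: float,
    cloud_cost_per_year: float,
    running_cost_per_month: float = 0.0,  # hypothetical, e.g. electricity
) -> float:
    """Months until a one-time purchase costs less than continued cloud spending."""
    monthly_saving = cloud_cost_per_year / 12 - running_cost_per_month
    if monthly_saving <= 0:
        return float("inf")  # the purchase never pays for itself
    return hardware_cost / monthly_saving


if __name__ == "__main__":
    months = break_even_months(3000, 10000)
    print(f"Break-even after about {months:.1f} months")
```

With the article's numbers, the purchase pays for itself in under four months. The answer stretches quickly if your actual cloud bill is smaller, which is why hobbyists with light workloads may still find the upfront cost hard to justify.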

________________________________________

The Future of Project DIGITS: What’s Next?

Nvidia plans to release DIGITS Nano, a scaled-down $1,500 version for educators and hobbyists, by 2026. They’re also expanding partnerships with open-source communities to build a library of plug-and-play AI models.

________________________________________

Final Thoughts: A New Era of Accessible AI

Project DIGITS isn’t just a product—it’s a movement. By placing supercomputing power on desktops worldwide, Nvidia is fueling a grassroots AI revolution. Whether you’re building the next ChatGPT competitor or analyzing climate data, Project DIGITS turns “what if” into “what’s next.” Ready to join the future?

________________________________________

FAQs About Nvidia’s Project DIGITS

1. What is Project DIGITS?

It’s a personal AI supercomputer by Nvidia, designed for developers, researchers, and students to run and train AI models locally.

2. How much does it cost?

Starting at $3,000, with storage configurations of up to 4TB.

3. What can it do?

It can run large AI models (up to 200 billion parameters on a single unit) and prototype or fine-tune smaller ones, for tasks like language processing, robotics, and data analysis.

4. Do I need coding skills?

Basic AI/ML knowledge helps, but it comes with pre-installed tools like PyTorch and TensorFlow, plus tutorials for beginners.

5. Can I connect multiple units?

Yes! Two units can be linked for even more power, handling models with up to 405 billion parameters.

________________________________________

About the Creator

Valuable Knowledge

Follow the world's growing technology. Subscribe to my profile to stay connected to the world's tech.
