How Feature Engineering Drives Success in Machine Learning

This blog explains how feature engineering drives success in machine learning.

The performance of any machine learning model depends critically on the quality of the data that goes into it. Model and algorithm selection matter, but feature engineering is the unsung hero that can make or break a machine learning project. If you are thinking about enrolling in a data science course, you need to learn feature engineering in order to become good at machine learning.

What is Feature Engineering?

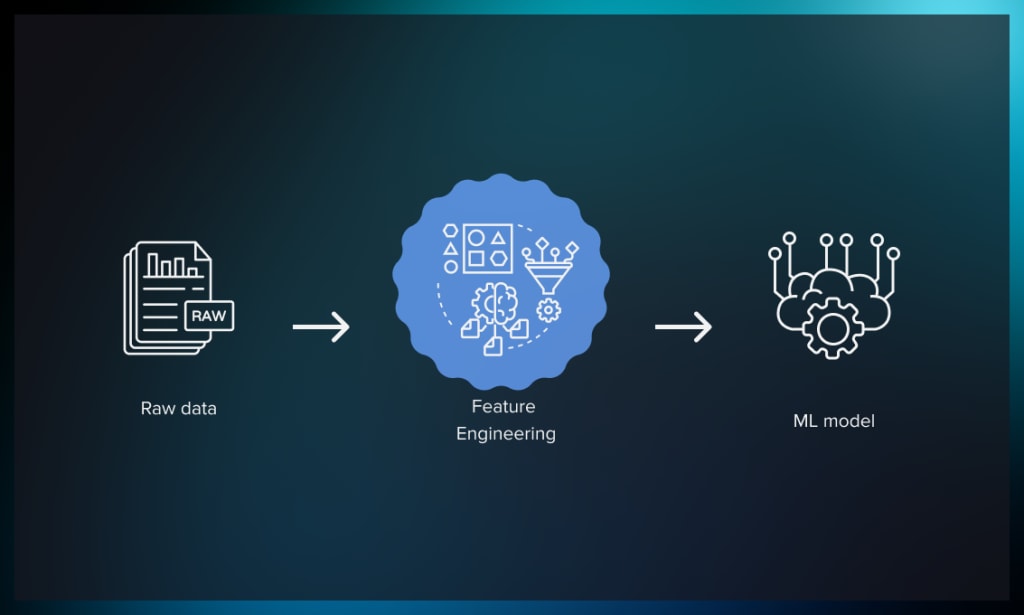

Feature engineering is the process of selecting, modifying, or creating new input variables (called features) that help a machine learning model perform better. It is arguably the most important step in building an effective predictive model, because it bridges the gap between raw data and the model.

Features are the attributes or properties of the data that a model uses to make its predictions, so in machine learning, more informative features generally translate into better model performance. Feature engineering draws on creativity, domain experience, and technical skill to clean, organize, and extract meaningful features from raw data before they are fed to an ML model.

Why is Feature Engineering Important?

A model's quality depends heavily on the relevance of its features. Relevant features determine how well the model can learn patterns, and therefore how accurately it can predict. Even the most sophisticated algorithm can only do so much with bad or irrelevant features. That's why feature engineering is so important.

1. Improves Model Accuracy: Well-engineered features can significantly improve a model's accuracy. They emphasize the key information in the data, which lets the model focus on the signals that matter and make correct predictions.

2. Reduces Overfitting: Overfitting happens when a model learns the noise in its training data rather than the underlying pattern, so it performs well on the training data but poorly on new data. Feature engineering helps create features that generalize well beyond the training set, which can help avoid overfitting.

3. Reduces Model Complexity: Converting raw data into more informative features can reduce how complex a model needs to be. A simpler model is easier to interpret and uses fewer computational resources, while still performing well.

4. Supports Different Algorithms: Algorithms such as linear regression and support vector machines each work better with certain kinds of features. Feature engineering lets you tailor your data to the strengths of the algorithm you are using.

5. Improves Interpretability: In many areas, especially healthcare and finance, accurate predictions alone are not enough; the model also has to be interpretable. Well-designed features can make the complex decision-making of a learning algorithm easier to understand.

Steps Involved in the Process of Feature Engineering

Feature engineering involves a lot of iterative and creative work. These are some of the key steps involved:

1. Feature Selection:

- Definition: Feature selection identifies the most relevant features in your dataset. This step removes redundant or irrelevant data, which reduces noise and can increase model accuracy.

- Techniques: Typical techniques include correlation analysis, ranking features by importance (for instance, using tree-based models), and recursive feature elimination.
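As a minimal sketch of the correlation-analysis technique, the pure-Python code below keeps only the features whose absolute Pearson correlation with the target reaches a threshold. The feature names, toy values, and the 0.5 cutoff are hypothetical assumptions chosen for illustration. Tree-based importance ranking and recursive feature elimination are not shown; in scikit-learn they are typically done with a fitted tree model's `feature_importances_` attribute and with `sklearn.feature_selection.RFE`.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def select_by_correlation(features, target, threshold=0.5):
    """Keep feature names whose |correlation| with the target is >= threshold.

    The default threshold is an illustrative assumption, not a standard value.
    """
    return [
        name
        for name, values in features.items()
        if abs(pearson(values, target)) >= threshold
    ]


# Hypothetical toy data: house size tracks price; an arbitrary ID column does not.
features = {
    "size_sqft": [800, 1200, 1500, 2000, 2600],
    "listing_id": [5, 3, 9, 1, 7],
}
price = [150, 210, 260, 330, 420]

print(select_by_correlation(features, price))  # ['size_sqft']
```

Correlation only measures a linear relationship between one feature and the target, so a feature that matters only in combination with others can be dropped by mistake; that is one reason model-based methods are also used.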

2. Feature Creation:

- Definition: Feature creation generates new features from existing ones, through mathematical transformations, aggregation, or by combining multiple features into one.

- Examples: You can create interaction terms (for example, the product of two features), bin continuous variables into categorical ones, or generate polynomial features.
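The three feature-creation examples can be sketched in plain Python as follows. The column names, bin edges, and labels are hypothetical; libraries such as scikit-learn provide equivalents (`PolynomialFeatures` and `KBinsDiscretizer` in `sklearn.preprocessing`) that also handle multi-column data.

```python
from bisect import bisect_right


def interaction(a, b):
    """Interaction term: element-wise product of two feature columns."""
    return [x * y for x, y in zip(a, b)]


def bin_values(values, edges, labels):
    """Bin a continuous feature into categories.

    `edges` must be sorted and len(labels) == len(edges) + 1.
    A value equal to an edge falls into the higher bin.
    """
    return [labels[bisect_right(edges, v)] for v in values]


def polynomial(values, degree):
    """Polynomial features x, x**2, ..., x**degree for one column."""
    return {f"x^{d}": [v ** d for v in values] for d in range(1, degree + 1)}


# Hypothetical house data used only for illustration.
length_m = [10, 12, 15]
width_m = [8, 10, 12]
age_years = [3, 25, 60]

print(interaction(length_m, width_m))                               # [80, 120, 180]
print(bin_values(age_years, [10, 40], ["new", "mid-age", "old"]))  # ['new', 'mid-age', 'old']
print(polynomial([1, 2, 3], 2))  # {'x^1': [1, 2, 3], 'x^2': [1, 4, 9]}
```

Here the product of length and width acts as a derived "area" feature, which a model may find more directly useful than either input alone.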

3. Feature Transformation:

- Definition: Feature transformation adjusts existing features so they contribute better to the model, for example through scaling, normalization, or encoding of categorical variables. Common techniques include:

- Standardization and Normalization: Standardization rescales a feature to a mean of zero and a variance of one, while normalization usually rescales values into a fixed range such as 0 to 1. Both matter most for scale-sensitive algorithms such as SVMs and neural networks.

- Logarithmic Transformation: Taking the logarithm of a skewed feature compresses its large values, which reduces skew and can bring the distribution closer to normal.
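A minimal pure-Python sketch of standardization, min-max normalization, and a log transform follows. Using `math.log1p` (the logarithm of 1 + x) instead of a plain logarithm is an assumption of this sketch so that zero values stay defined; the income values are hypothetical.

```python
import math
from statistics import mean, pstdev


def standardize(values):
    """Standardization: z = (x - mean) / std, giving mean 0 and variance 1."""
    mu, sigma = mean(values), pstdev(values)  # assumes sigma != 0
    return [(v - mu) / sigma for v in values]


def min_max_normalize(values):
    """Min-max normalization into [0, 1]; assumes max != min."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def log_transform(values):
    """log(1 + x): shrinks large values; log1p keeps x = 0 defined."""
    return [math.log1p(v) for v in values]


# Hypothetical right-skewed feature: one very large value dominates.
incomes = [20_000, 35_000, 50_000, 1_000_000]

print([round(z, 2) for z in standardize(incomes)])
print(min_max_normalize([2, 4, 6]))  # [0.0, 0.5, 1.0]
print([round(x, 2) for x in log_transform(incomes)])
```

In a real project the mean, standard deviation, minimum, and maximum should be computed on the training data only and then reused on validation and test data; scikit-learn's `StandardScaler` and `MinMaxScaler` follow this pattern with separate `fit` and `transform` steps.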

4. Missing Data Treatment:

- Description: Handling missing data is a very important part of feature engineering. Missing values can cause serious problems and lead to incorrect inferences.

- Techniques: The most common methods are imputation (filling in missing values with the mean, median, or mode), removing data points with missing values, and using algorithms that can handle missing values natively.
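Imputation and row removal can be sketched in plain Python, with `None` standing in for a missing value (an assumption of this example; pandas, for instance, represents missing numbers as `NaN`). The column values are hypothetical. scikit-learn's `SimpleImputer` implements the same idea with strategies such as `"mean"`, `"median"`, and `"most_frequent"`.

```python
from statistics import mean, median, mode

# Map strategy names to the statistic used as the fill value.
STRATEGIES = {"mean": mean, "median": median, "mode": mode}


def impute(values, strategy="median"):
    """Fill None entries with a statistic computed from the observed values."""
    observed = [v for v in values if v is not None]
    fill = STRATEGIES[strategy](observed)
    return [fill if v is None else v for v in values]


def drop_missing(rows):
    """Drop any row (a dict) that contains a None value."""
    return [row for row in rows if all(v is not None for v in row.values())]


# Hypothetical column with one missing entry (None marks a missing value).
# The observed values 1, 2, 2, 7 have mean 3, median 2, and mode 2.
bedrooms = [1, None, 2, 2, 7]

print(impute(bedrooms, "mean"))
print(impute(bedrooms, "median"))
print(drop_missing([{"size": 900, "bedrooms": None}, {"size": 1200, "bedrooms": 3}]))
```

The median is less affected than the mean by the outlier 7, which is why it is often preferred for skewed columns. As with scaling, the fill value should be learned from the training data only.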

5. Encoding Categorical Variables:

- Definition: Categorical variables need to be converted into a numerical format that can be processed by machine learning algorithms.

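- Techniques:

- One-Hot Encoding: Create one binary column per category, set to 1 when a row belongs to that category and 0 otherwise.

- Label Encoding: Assign each category a distinct integer. Because this implies an order between categories, it generally suits ordinal data or tree-based models better than linear models.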
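Both encodings can be sketched in a few lines of plain Python; the color values are hypothetical, and sorting the categories is a choice made here so the output order is deterministic. In practice, pandas' `get_dummies` and scikit-learn's `OneHotEncoder` and `OrdinalEncoder` cover these cases; scikit-learn's `LabelEncoder` is intended for target labels rather than input features.

```python
def one_hot(values):
    """One binary column per category; categories are sorted for a stable order."""
    categories = sorted(set(values))
    return {f"is_{c}": [1 if v == c else 0 for v in values] for c in categories}


def label_encode(values):
    """Give each category a distinct integer, assigned in sorted order."""
    mapping = {c: i for i, c in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping


# Hypothetical categorical feature.
colors = ["red", "green", "red", "blue"]

print(one_hot(colors))
# {'is_blue': [0, 0, 0, 1], 'is_green': [0, 1, 0, 0], 'is_red': [1, 0, 1, 0]}
print(label_encode(colors))
# ([2, 1, 2, 0], {'blue': 0, 'green': 1, 'red': 2})
```

Note that the label encoding makes "red" (2) look larger than "blue" (0), an ordering that has no meaning for colors; one-hot encoding avoids that at the cost of one extra column per category.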

Feature Engineering in Action: A Practical Example

For example, suppose you are developing a machine learning model to predict house prices, and your raw data includes features such as house size, number of bedrooms, neighborhood, and year built. Feature engineering in this scenario may include the following:

- Transform the size feature: House sizes tend to have a skewed distribution, so convert them to logarithmic form.

- Encode the neighborhood: One-hot encode the neighborhood so that it is represented numerically.

Engineered features like these add a lot of value, giving the model better inputs from which to make meaningful predictions.
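The two house-price steps can be sketched together in plain Python. The listing values and column names (`size_sqft`, `bedrooms`, `neighborhood`) are hypothetical, the helper `engineer` is an illustrative name, and a natural logarithm is assumed for the size transform.

```python
import math

# Hypothetical raw listings; the column names are illustrative assumptions.
houses = [
    {"size_sqft": 850, "bedrooms": 2, "neighborhood": "Riverside"},
    {"size_sqft": 1400, "bedrooms": 3, "neighborhood": "Hilltop"},
    {"size_sqft": 5200, "bedrooms": 5, "neighborhood": "Riverside"},
]


def engineer(rows):
    """Return feature rows with log(size) and one-hot neighborhood columns."""
    # Neighborhood columns are taken from the rows passed in (see note below).
    neighborhoods = sorted({r["neighborhood"] for r in rows})
    out = []
    for r in rows:
        features = {
            "log_size": math.log(r["size_sqft"]),  # sizes are positive, so log is defined
            "bedrooms": r["bedrooms"],
        }
        for n in neighborhoods:
            features[f"nbhd_{n}"] = 1 if r["neighborhood"] == n else 0
        out.append(features)
    return out


for row in engineer(houses):
    print(row)
```

In a real pipeline the list of neighborhoods should be taken from the training data and reused at prediction time, so that every row gets the same columns even when a neighborhood is rare or new.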

Why You Need a Data Science Course

Expertise in feature engineering is one of the most important ingredients for success in data science and machine learning. A data science course will not only teach you the theoretical aspects of machine learning but also give you the hands-on experience in feature engineering you need. Understanding how to effectively select, create, and transform features will put you in a much better position to build powerful, high-impact models.

Feature engineering is one of the critical steps in machine learning, and it often decides whether a model succeeds or fails. By carefully selecting and crafting features, data scientists can unlock the real potential of their models to deliver accurate predictions and insights. If you are aiming for a professional career in data science, investing in a data science course early on is essential.

