Generative AI: Introduction to All Types of Gen-AI Models 2025

Generative AI in 2025: An Exploration of All Gen-AI Models

AI offers capabilities that extend beyond what we once thought possible. Today, Gen-AI is creating life-like images and writing human-like text. Generative AI models are becoming indispensable across various industries. This blog will explore the different types of generative AI models, how they work, and their multiple applications.

Let’s jump into the core elements of Generative AI, its use cases, and potential challenges.

What is Generative AI?

Generative AI relies on sophisticated machine learning models called deep learning models. Deep learning models are algorithms that loosely simulate the learning and decision-making processes of the human brain. These models work by identifying and encoding the patterns and relationships in huge amounts of data.

AI has been one of the hottest technologies and topics of the past few years, but generative AI truly broke into the mainstream with the release of ChatGPT in 2022. Generative AI offers many productivity benefits for individuals and organizations alike. While the technology brings real challenges and risks, businesses are still moving ahead and exploring how it can improve their internal workflows.

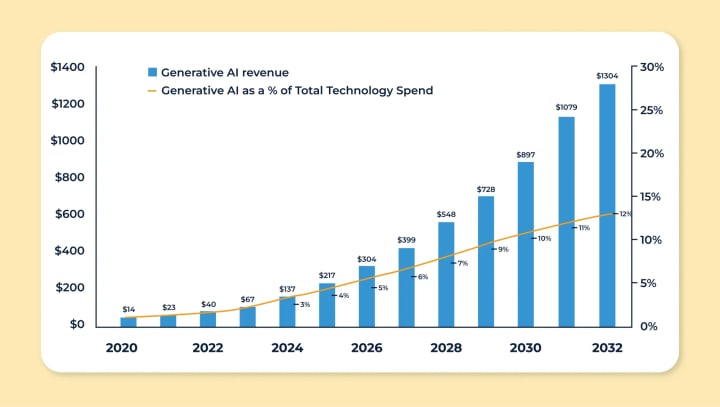

According to research by the management consulting firm McKinsey, one-third of organizations are already using generative AI regularly in at least one business function. Industry analyst Gartner predicts that by 2026, more than 80% of enterprises will have used generative AI APIs or deployed generative AI-enabled applications.

How Do Generative AI Models Work?

At the heart of generative AI are algorithms designed to mimic human creative processes and solve complex problems. Here's a step-by-step breakdown of how these models function.

For the most part, generative AI operates in three phases:

- Training, to create a foundation model that can serve as the basis of multiple gen AI applications.

- Tuning, to tailor the foundation model to a specific gen AI application.

- Generation, evaluation, and retuning, to assess the gen AI application's output and continually improve its performance and accuracy.

Training Gen AI

Generative AI begins with a foundation model: a deep learning model that serves as the basis for multiple different generative AI applications. The most common foundation models today are large language models (LLMs), which were created for text generation applications. There are also foundation models for image generation, video generation, and sound and music generation, as well as multimodal foundation models that can support several kinds of content generation. To create a foundation model, a deep learning algorithm is trained on huge volumes of raw, mostly unlabeled data, such as large collections of text or images.

The result of this training is a neural network of parameters (encoded representations of the entities, patterns, and relationships in the data) that can generate content autonomously in response to inputs, or prompts.
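To make "training produces parameters that generate content" concrete, here is a deliberately tiny sketch: a character-level bigram model whose only "parameters" are counts of which character follows which, learned from a made-up toy corpus and then used to extend a prompt. Real foundation models learn billions of parameters with gradient descent; the corpus and the function names `train_bigram` and `generate_text` are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigram(corpus):
    """'Training': count how often each character follows each other character."""
    params = defaultdict(Counter)
    for prev, nxt in zip(corpus, corpus[1:]):
        params[prev][nxt] += 1
    return dict(params)


def generate_text(params, prompt, length, seed=0):
    """'Generation': extend a non-empty prompt by sampling from the learned counts."""
    rng = random.Random(seed)
    out = list(prompt)
    for _ in range(length):
        counts = params.get(out[-1])
        if not counts:  # this character never had a successor in the corpus
            break
        chars, weights = zip(*counts.items())
        out.append(rng.choices(chars, weights=weights)[0])
    return "".join(out)


corpus = "the cat sat on the mat. the dog sat on the log."
params = train_bigram(corpus)
print(params["t"])                       # what the model "learned" about 't'
print(generate_text(params, "th", 30))   # a prompt continued by the model
```

The output is gibberish that still "looks like" the corpus, which is the point: the model only reproduces patterns encoded in its parameters.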

Tuning Gen AI

Fine-tuning involves feeding the model labeled data specific to the content generation application, such as questions or prompts the application is likely to receive from users, paired with the correct answers. For example, if a development team is trying to create a customer service chatbot, it would create hundreds or thousands of documents containing labeled customer service questions and correct answers, and then feed those documents to the model.

Fine-tuning is labor-intensive. Developers often outsource the task to companies with large data-labeling workforces.
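Labeled tuning data like the chatbot example above is often stored as JSON Lines (one JSON record per line). The sketch below turns question/answer pairs into such a file. The chat-style `messages` schema resembles formats used by some hosted fine-tuning services, but it is an assumption here, so check your provider's or framework's documentation for the exact fields; the example questions, answers, and system prompt are made up.

```python
import json

# Hypothetical labeled examples for a customer service chatbot.
labeled_examples = [
    {"question": "How do I reset my password?",
     "answer": "Open Settings, choose Security, then select 'Reset password'."},
    {"question": "Can I change my delivery address?",
     "answer": "Yes, until the order ships. Open the order and select 'Edit address'."},
]


def to_jsonl(examples, system_prompt):
    """Serialize Q&A pairs as one JSON object per line (assumed chat-style schema)."""
    lines = []
    for ex in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": ex["question"]},
                {"role": "assistant", "content": ex["answer"]},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


jsonl = to_jsonl(labeled_examples, "You are a helpful support agent.")
print(jsonl)
```

In practice the file would be written to disk and uploaded to whatever tuning pipeline you use.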

Reinforcement Learning from Human Feedback (RLHF)

In RLHF, human users evaluate the content generated by the model, and their feedback is used to update the model for better accuracy and relevance. Often, RLHF involves people scoring or ranking different outputs in response to the same prompt.
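In many RLHF pipelines, those human scores are first used to train a reward model that predicts which output people prefer; the generator is then optimized against that reward (that reinforcement learning step is omitted here). A minimal sketch, assuming each output is summarized by a hypothetical hand-made feature vector and the reward model is linear: scores are turned into (chosen, rejected) pairs, and the weights are fit by minimizing the pairwise loss -log(sigmoid(r_chosen - r_rejected)). Real reward models are neural networks that read the full text.

```python
import math
from itertools import combinations


def reward(w, features):
    """Linear reward model: r(x) = w . features(x)."""
    return sum(wi * fi for wi, fi in zip(w, features))


def scores_to_pairs(scored):
    """Turn human scores for outputs to the same prompt into (chosen, rejected) pairs."""
    pairs = []
    for (fa, sa), (fb, sb) in combinations(scored, 2):
        if sa > sb:
            pairs.append((fa, fb))
        elif sb > sa:
            pairs.append((fb, fa))
    return pairs  # ties carry no preference and are skipped


def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    """Minimize -log(sigmoid(r(chosen) - r(rejected))) with per-pair gradient steps."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            g = 1.0 / (1.0 + math.exp(margin))  # equals 1 - sigmoid(margin)
            for i in range(dim):
                w[i] += lr * g * (chosen[i] - rejected[i])
    return w


# Hypothetical features per output: [answers_question, is_polite, is_rambling]
scored_outputs = [
    ([1, 1, 0], 5),  # helpful and polite
    ([1, 0, 1], 3),  # helpful but rambling
    ([0, 1, 0], 1),  # polite but off-topic
]
pairs = scores_to_pairs(scored_outputs)
w = train_reward_model(pairs, dim=3)
print(w)
```

After training, the learned reward ranks every human-preferred output above the one it beat.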

Generation, Evaluation, and Fine-Tuning

This is the stage where developers and users continually assess the output of their generative AI apps and then tune them further if needed. Another option for improving a gen AI app is retrieval-augmented generation (RAG). RAG extends a foundation model by retrieving relevant sources outside of its training data at query time and adding them to the prompt, so the model can ground its answers in that material without changing its parameters. As long as those sources are kept up to date, RAG can give a generative AI app access to current information.
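The retrieve-then-prompt idea behind RAG can be sketched in a few lines. This is a minimal illustration under stated assumptions: relevance is scored with simple bag-of-words cosine similarity (production systems usually use embedding vectors and a vector database), the documents are made up, and the final call to an actual language model is left out, so the function only builds the augmented prompt.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[tok] for tok, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query, documents, k=2):
    """Augment the user's question with retrieved context before sending it to a model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}\nAnswer:"


docs = [  # hypothetical knowledge base, updated independently of the model
    "Refund policy: customers can request a refund within 30 days of purchase.",
    "Shipping usually takes 3 to 5 business days.",
    "Our support team is available Monday to Friday.",
]
print(build_prompt("How many days do I have to request a refund?", docs, k=1))
```

Updating `docs` changes what the app can answer without retraining anything, which is why RAG helps keep answers current.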


The Core Types of Generative AI Models

There are several types of models, each with its own characteristics and applications. Let's break down the most widely used ones.

1. Generative Adversarial Networks (GANs): Evolution and Application

Generative Adversarial Networks (GANs) consist of two neural networks, the generator and the discriminator, that are trained against each other. The generator creates new data, while the discriminator judges whether a sample is real (from the training data) or fake (from the generator). As each network tries to beat the other, the generator learns to produce increasingly realistic, high-quality results.
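The adversarial loop can be sketched in plain Python. This is a deliberately tiny, hypothetical setup: the "real data" is a 1-D Gaussian centered at 4, the generator can only learn a shift `b` (G(z) = z + b), and the discriminator is logistic regression D(x) = sigmoid(w·x + c). It uses the standard discriminator loss and the common "non-saturating" generator loss, with the gradients written out by hand; real GANs use deep networks and a framework that computes gradients automatically.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(real_mean=4.0, steps=4000, batch=64, lr=0.1, seed=0):
    """Alternate discriminator and generator updates on a 1-D toy problem."""
    rng = random.Random(seed)
    b = 0.0          # generator G(z) = z + b: it can only learn a shift
    w, c = 0.0, 0.0  # discriminator D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x
            gc -= 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step (non-saturating loss): minimize -log D(G(z)).
        gb = 0.0
        for x in fake:
            gb -= (1.0 - sigmoid(w * x + c)) * w
        b -= lr * gb / batch
    return b, w, c


b, w, c = train_toy_gan()
print(f"learned shift b = {b:.2f} (real data mean is 4.0)")
```

Because this discriminator is linear, it can only tell the two distributions apart by their means, so moving `b` close to 4 is all the toy generator can learn; deeper networks let GANs match far richer distributions such as images.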

- Task-Specific GANs: Image and Video Improvement

Task-specific GANs are particularly useful for improving the quality of images and videos, from photo restoration to video upscaling. GANs help create sharper and more appealing content.

- Security and Privacy Use Cases of GANs

In addition to media improvement, GANs have security applications. They can create synthetic data for training models, allowing organizations to protect sensitive information by training on data that mimics the patterns of the real thing instead of on the real records.

2. Transformer-Based Models: Driving Modern AI

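Transformer-based models have brought generative AI into the mainstream. They rely on a mechanism called self-attention, which weighs how strongly each token (roughly, a word or word piece) in an input relates to every other token. This lets them understand context, making them ideal for tasks like language processing and content generation.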
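Self-attention boils down to the scaled dot-product formula softmax(Q·Kᵀ / √d_k)·V. The sketch below computes it with plain lists for three made-up 2-dimensional token vectors. As a simplifying assumption it uses the token vectors directly as queries, keys, and values; real transformers first multiply them by learned projection matrices and run many attention heads in parallel.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # How relevant is every token (key) to this token (query)?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted mix of all value vectors.
        outputs.append([sum(wj * v[col] for wj, v in zip(w, V)) for col in range(len(V[0]))])
    return outputs, weights


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical embeddings for 3 tokens
out, attn = self_attention(X, X, X)
for row in attn:
    print([round(a, 3) for a in row])
```

Each row of attention weights sums to 1, so every token's new representation is a context-dependent blend of the whole input.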

- GPT Series and Meta's LLaMA

The GPT (Generative Pre-trained Transformer) series represents some of the most powerful transformer-based models. GPT-4 has garnered attention for its human-like text generation, while Meta's LLaMA, a separate family of transformer models, has contributed significantly to the development of more efficient models.
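GPT-style models generate text autoregressively: they score every possible next token, pick one, append it, and repeat. The sketch below shows that loop with greedy decoding and temperature sampling. The five-word vocabulary and the `toy_logits` function are hypothetical stand-ins for a trained transformer, which would compute the scores from the entire token history.

```python
import math
import random

VOCAB = ["<end>", "generative", "models", "write", "text"]


def toy_logits(tokens):
    """Hypothetical stand-in for a trained model: next-token scores over VOCAB."""
    table = {
        "<start>":    [0.0, 3.0, 0.5, 0.1, 0.1],
        "generative": [0.0, 0.1, 3.0, 0.2, 0.2],
        "models":     [0.2, 0.1, 0.1, 3.0, 0.5],
        "write":      [0.1, 0.1, 0.1, 0.2, 3.0],
        "text":       [3.0, 0.1, 0.1, 0.1, 0.1],
    }
    return table[tokens[-1]]  # this toy only looks at the last token


def softmax(logits, temperature=1.0):
    scaled = [l / temperature for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def decode(temperature=0.0, seed=0, max_new_tokens=10):
    """temperature == 0 means greedy decoding; > 0 means sampling."""
    rng = random.Random(seed)
    tokens = ["<start>"]
    for _ in range(max_new_tokens):
        logits = toy_logits(tokens)
        if temperature == 0.0:
            next_id = max(range(len(logits)), key=logits.__getitem__)
        else:
            probs = softmax(logits, temperature)
            next_id = rng.choices(range(len(VOCAB)), weights=probs)[0]
        if VOCAB[next_id] == "<end>":
            break
        tokens.append(VOCAB[next_id])  # feed the output back in as input
    return tokens[1:]


print(decode())                  # greedy: always the top-scoring token
print(decode(temperature=1.5))   # sampling: higher temperature, more variety
```

Lower temperatures sharpen the probability distribution toward the top token; higher ones flatten it, which is why the same prompt can yield different answers.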

- BERT: Powering Natural Language Processing (NLP)

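BERT (Bidirectional Encoder Representations from Transformers) is another transformer model that excels in NLP tasks. Because it looks at the words on both sides of each position at once, rather than only reading left to right, it helps AI understand context and meaning in ways that earlier one-directional models could not.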
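BERT learns this two-sided context through masked language modeling: some input tokens are hidden, and the model must predict them from the words around them. The original BERT recipe selects about 15% of tokens; of those, 80% become `[MASK]`, 10% become a random token, and 10% stay unchanged. A minimal sketch of that data-preparation step on whitespace-split words (real BERT uses WordPiece subword tokens; the sentence is made up):

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """BERT-style masking. Returns (inputs, labels): labels hold the original
    token at selected positions (what the model must predict) and None elsewhere."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)               # 80%: hide the token
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: random replacement
            else:
                inputs.append(tok)                # 10%: keep it unchanged
        else:
            labels.append(None)                   # not part of the loss
            inputs.append(tok)
    return inputs, labels


tokens = "the model predicts the hidden words from both sides".split()
inputs, labels = mask_tokens(tokens, vocab=tokens, mask_prob=0.3, seed=1)
print(inputs)
print(labels)
```

The model is then trained to output `labels` at the selected positions, which it can only do well by using context from both directions.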

3. Diffusion Models: The Mechanism Behind Image Creation

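Diffusion models are a newer type of generative AI that excels in tasks like image generation. During training, noise is gradually added to images and the model learns to reverse that process; to generate, it starts from random noise and removes it step by step.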
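The "adding noise" half of a diffusion model has a convenient closed form in the widely used DDPM formulation: with a noise schedule β_t and ᾱ_t = ∏(1 − β_s), a clean sample can be noised straight to step t as x_t = √ᾱ_t · x₀ + √(1 − ᾱ_t) · ε. The sketch below uses the linear schedule from the DDPM paper (β from 1e-4 to 0.02 over 1,000 steps); the three-value "image" is made up, and other models use other schedules.

```python
import math
import random


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Noise added at each step, increasing linearly."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def alpha_bars(betas):
    """Cumulative product of (1 - beta): how much of the original signal survives."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(x0, t, abar, rng):
    """Jump directly to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    a = abar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


betas = linear_beta_schedule()
abar = alpha_bars(betas)
x0 = [0.5, -0.2, 0.9]  # hypothetical pixel values
rng = random.Random(0)
for t in (0, 250, 999):
    print(t, round(abar[t], 5), [round(v, 3) for v in q_sample(x0, t, abar, rng)])
```

By the last step almost none of the original signal remains, so the model's job at generation time is to walk this process backward from pure noise.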

- Stable Diffusion: A Deeper Look

Stable Diffusion has become very popular for its ability to create detailed and accurate images. It is a latent diffusion model: it generates images by gradually removing noise from an initial random input in a compressed latent space, then decoding the result into a clear and cohesive image.
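The "gradually removing noise" loop can be sketched with the standard DDPM reverse update, x_{t−1} = (x_t − β_t/√(1 − ᾱ_t) · ε̂) / √α_t + √β_t · z. To keep it runnable without a trained network, the noise predictor is a hypothetical oracle that already knows the one target sample, so the loop provably lands on it. Stable Diffusion itself runs this kind of loop in latent space with a trained, text-conditioned U-Net and often uses faster samplers; this only illustrates the mechanism.

```python
import math
import random


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def oracle_eps(x_t, t, abar, x0):
    """Hypothetical stand-in for a trained noise-prediction network."""
    return [(xt - math.sqrt(abar[t]) * x) / math.sqrt(1.0 - abar[t]) for xt, x in zip(x_t, x0)]


def ddpm_sample(x0_target, T=1000, seed=0):
    betas = linear_beta_schedule(T)
    abar, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        abar.append(prod)
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in x0_target]  # start from pure noise
    for t in reversed(range(T)):
        eps = oracle_eps(x, t, abar, x0_target)
        alpha = 1.0 - betas[t]
        # Remove the predicted noise to get the mean of the previous step.
        mean = [(xi - betas[t] / math.sqrt(1.0 - abar[t]) * ei) / math.sqrt(alpha)
                for xi, ei in zip(x, eps)]
        if t > 0:  # add a little fresh noise at every step except the last
            x = [m + math.sqrt(betas[t]) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x


target = [0.5, -0.2, 0.9]  # hypothetical "image" the oracle knows about
print([round(v, 4) for v in ddpm_sample(target)])
```

With a real model, ε̂ is only an estimate learned from many images, so the same loop produces new samples instead of copying one.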

- DALL-E: Text-to-Image Generation

OpenAI's DALL-E models turn text prompts into images, generating unique visuals from a written description. That makes them a revolutionary tool in creative fields.
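One technique text-to-image diffusion models commonly use to follow the prompt is classifier-free guidance: at each denoising step the network predicts the noise twice, once with the text condition and once without, and the result is pushed further in the direction the text suggests. A minimal sketch of just the combination step, with made-up prediction vectors; it illustrates the general technique and is not a claim about DALL-E's exact implementation.

```python
def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale):
    """eps = eps_uncond + s * (eps_cond - eps_uncond).
    s = 0 ignores the prompt, s = 1 uses the plain conditional prediction,
    s > 1 follows the prompt more strongly."""
    return [u + guidance_scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


eps_uncond = [0.1, -0.2]  # hypothetical prediction without the text prompt
eps_cond = [0.3, 0.0]     # hypothetical prediction with the text prompt
print(classifier_free_guidance(eps_uncond, eps_cond, 7.5))
```

Higher guidance scales usually make images match the prompt more closely at some cost to variety.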

To read the full blog, visit: https://www.thirdrocktechkno.com/blog/generative-ai-introduction-to-all-types-of-gen-ai-models-2025/
