The Uncanny Valley is Dead: How Higgsfield Finally Achieved Believable Realism

AI43

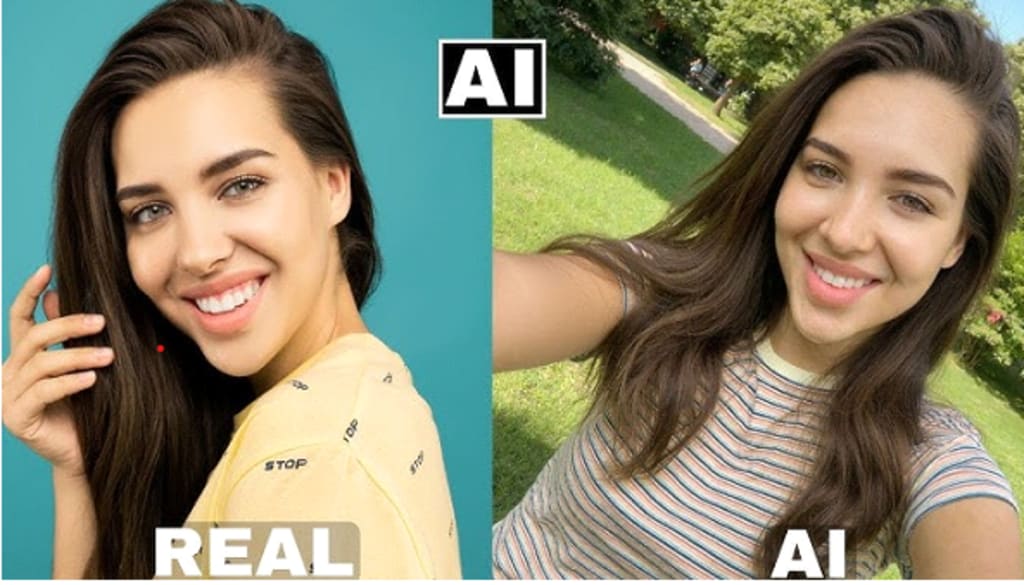

The concept of the uncanny valley has loomed over AI and animation for years. It describes the point at which digital creations, AI-generated humans in particular, come so close to looking real that they become disturbing. However, with the arrival of platforms such as Higgsfield and video models such as Sora 2, that obstacle is finally being overcome.

Digital humans that look almost, but not quite, real no longer have to provoke unease. Instead, we are entering a period in which AI-generated humans can appear entirely realistic and the line between the virtual and the real world is becoming hard to draw. Here is how Higgsfield and Sora 2 finally achieved that level of realism.

Understanding the Uncanny Valley

The uncanny valley is the unsettling feeling people get when they see a robot, an animated character, or an AI figure that is almost, but not quite, human. Such figures are close enough to human to evoke a sense of familiarity, yet subtly awkward details, such as stiff facial muscles, unnatural eye movements, and monotonous speech, are enough to make viewers uneasy.

This is what creators of AI-generated humans have been battling for years: trying to make their digital characters look realistic without pushing them into that territory.

How Sora 2 and Higgsfield Defeat the Uncanny Valley

The breakthrough in realism comes from combining the advanced animation capabilities of Sora 2 with Higgsfield's enhancement tools. Together, the two technologies can produce realistic AI humans by addressing the main factors that cause the uncanny valley.

Facial Expression and Emotion Mapping

Capturing facial expressions naturally is one of the biggest challenges in designing realistic AI humans. Early models often lacked the subtlety of real human expression, such as micro-expressions or small eye movements.

Sora 2 has transformed this area by using AI-driven emotion mapping that lets characters respond more naturally within a scene. Characters can now smile, frown, or look surprised as convincingly as a human would.

Fluid Motion and Body Language

Early AI characters also suffered from stiff, mechanical movement. With Higgsfield's motion-capture features, AI humans now move fluidly and gracefully and can replicate the movements of real people. As a result, characters no longer merely pose; they move in a way that looks natural and realistic.

High-Quality Texturing and Lighting

Digital humans often looked near-perfect in a still image but fell apart in motion, particularly in their lighting and texturing. Higgsfield's real-time rendering tools make lighting, shadows, and textures respond dynamically to the scene, giving a more realistic look. This is especially important in close-ups, where every detail of skin, hair, and eyes has to look natural under different light sources.

Speech and Voice Synthesis

The face and body are only part of the equation; realistic speech is equally essential. Higgsfield uses advanced voice synthesis to generate natural-sounding speech, leaving clumsy robotic voices and awkward dialogue behind. Characters speak with inflection, pauses, and emotion, so their voices sound as natural as they look.

Enhanced AI Interaction

Beyond appearance, the way AI characters interact with their environment is also critical. Sora 2 lets characters respond to and interact with their surroundings in a way that feels natural and reactive. Whether they are picking up objects, responding in a conversation, or interacting with other AI characters, their behavior looks natural.

The Impact of Believable AI Humans

Now that AI characters are no longer stuck in the uncanny valley, their impact is being felt across many industries. In film and entertainment, hyper-realistic digital actors can be inserted into scenes without the need for human performers.

In virtual reality (VR) and augmented reality (AR), realistic AI humans make user experiences more immersive and the digital world far more compelling. Video games can now feature characters that respond in human-like ways, making games more interactive and emotionally engaging.

In marketing, companies can create bespoke AI characters that look like real-life brand ambassadors, able to answer customer questions and represent the brand authentically. Sectors such as education and customer service also benefit from AI tutors and virtual assistants that offer human-like interaction, making learning and support more personal and responsive.

What’s Next for AI Humans?

The potential uses of Higgsfield and Sora 2 are enormous. Even more realistic results can be expected in the near future, as AI humans become able to express a wider range of emotions and experiences. As these technologies advance further, the line between real and virtual will keep blurring, opening up more opportunities in entertainment, business, and beyond.
