When Cross-Platform Apps Hit Native Bottlenecks

What I realized after shipping cross-platform apps long enough to watch clean abstractions slowly collide with native limits I couldn’t abstract away.

I remember the exact moment it happened.

The app was working. Users were active. Reviews were fine. Then one update rolled out and suddenly the complaints felt… different. Not crashes. Not bugs. Just a vague sense of friction. “Feels slower.” “Something’s off.” The kind of feedback that’s hard to pin down and harder to argue with.

That was the first time I realized a cross-platform app can be perfectly written and still slam into native limits it doesn’t control.

The Promise We All Bought Into

Cross-platform frameworks sell a comforting idea. Write once. Ship everywhere. Move fast. Save time.

And to be fair, they deliver on a lot of that.

Statista reported that over 46 percent of mobile developers now use cross-platform frameworks as their primary approach. That number keeps climbing. Teams are under pressure. Budgets are tight. Speed matters.

I’ve chosen cross-platform stacks myself more times than I can count.

But there’s a moment. There’s always a moment when abstraction stops helping and starts hiding the problem.

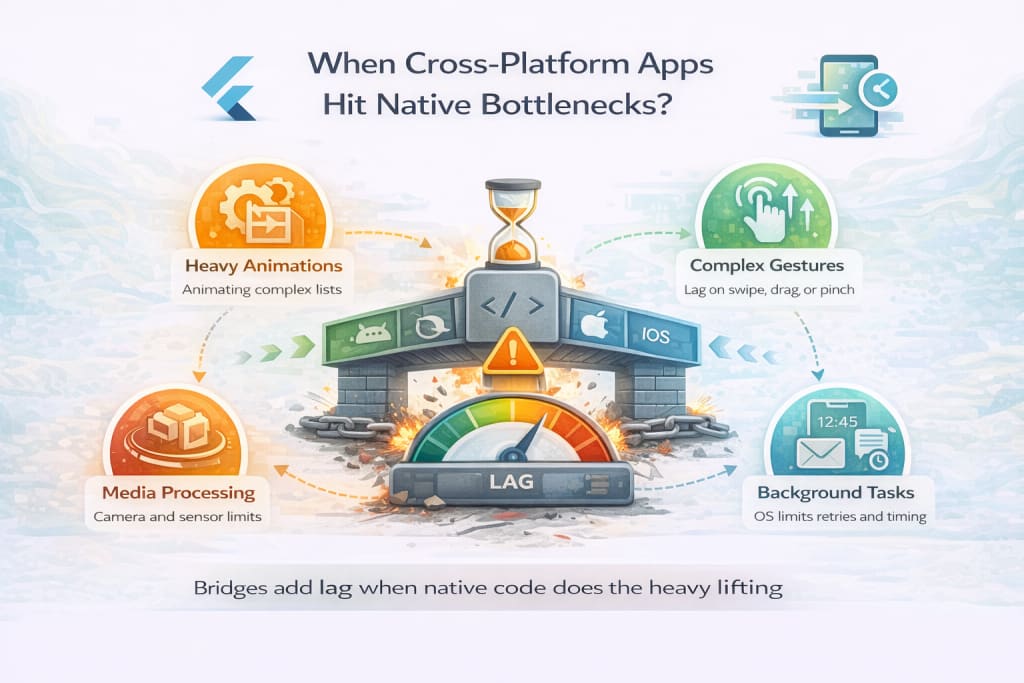

What Native Bottlenecks Actually Look Like

Native bottlenecks don’t announce themselves clearly.

They don’t show up as red errors. They surface as hesitation. Lag between intent and response. Animations that almost feel smooth.

Under the hood, it’s usually one of a few things.

- Heavy reliance on platform bridges

- Overworked main threads

- UI updates waiting on native callbacks

- Platform APIs doing more work than expected

Google’s Android documentation notes that to render at 60 frames per second, an app has roughly 16 milliseconds to produce each frame, so blocking the main thread for longer than that causes dropped frames. Cross-platform layers add just enough overhead that hitting that limit becomes easier than teams expect.
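To make that budget concrete, here is a small Python model. It is not framework code. The per-frame budget is 1000 divided by the refresh rate, and any frame whose main-thread work exceeds it misses its deadline. The per-frame work times and the 4 ms "bridge overhead" are hypothetical numbers chosen for illustration, not measurements from any framework.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to produce one frame at the given refresh rate."""
    return 1000.0 / refresh_hz


def dropped_frames(work_ms: list[float], refresh_hz: float, overhead_ms: float = 0.0) -> int:
    """Count frames whose main-thread work plus a fixed overhead exceeds the budget."""
    budget = frame_budget_ms(refresh_hz)
    return sum(1 for w in work_ms if w + overhead_ms > budget)


# Hypothetical main-thread work per frame during one scroll, in milliseconds.
scroll_work = [9.0, 12.0, 13.5, 10.0, 14.0, 8.0, 13.0, 11.0]

for hz in (60, 120):
    print(
        f"{hz} Hz: budget {frame_budget_ms(hz):.1f} ms, "
        f"dropped without overhead: {dropped_frames(scroll_work, hz)}, "
        f"dropped with 4 ms hypothetical overhead: {dropped_frames(scroll_work, hz, overhead_ms=4.0)}"
    )
```

With these made-up numbers, the 60 Hz case goes from zero dropped frames to three once the overhead is added. The same workload misses seven of eight frames at 120 Hz, where the budget shrinks to about 8.3 ms.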

That’s the trap.

When the Bridge Becomes the Bottleneck

Most cross-platform frameworks rely on a bridge between shared code and native APIs. That bridge works well… until it’s busy.

Harvard Business Review research on digital products found that users perceive delays above 100 milliseconds as system slowness, even if the app technically responds. Cross-platform bridges add latency in places developers don’t always see during early testing.

I once profiled an app where a simple UI update crossed the bridge multiple times per interaction. Nothing dramatic. Just enough delay to make the interface feel hesitant.
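A minimal cost model in Python shows why repeated crossings add up. It assumes every crossing pays a fixed cost (serialization, a thread hop, scheduling) plus a small per-item cost. `BridgeCost` and its numbers are hypothetical and are not taken from any real framework. Batching the updates into one crossing pays the fixed cost once instead of once per update.

```python
from dataclasses import dataclass


@dataclass
class BridgeCost:
    """Hypothetical cost model for one crossing between shared and native code."""
    per_crossing_ms: float  # fixed cost: serialization, thread hop, scheduling
    per_item_ms: float      # cost that scales with the number of updates carried


def sequential_latency(cost: BridgeCost, updates: int) -> float:
    """Each UI update crosses the bridge on its own and waits for the reply."""
    return updates * (cost.per_crossing_ms + cost.per_item_ms)


def batched_latency(cost: BridgeCost, updates: int) -> float:
    """All updates are collected and sent in a single crossing."""
    return cost.per_crossing_ms + updates * cost.per_item_ms


PERCEPTION_THRESHOLD_MS = 100.0  # the ~100 ms slowness threshold mentioned above

cost = BridgeCost(per_crossing_ms=6.0, per_item_ms=0.5)
for n in (4, 12, 20):
    seq = sequential_latency(cost, n)
    bat = batched_latency(cost, n)
    label = "feels slow" if seq > PERCEPTION_THRESHOLD_MS else "ok"
    print(f"{n} updates: sequential {seq:.1f} ms ({label}), batched {bat:.1f} ms")
```

Under these assumptions, 20 separate crossings add up to 130 ms and pass the 100 ms line, while the batched version stays at 16 ms. Real costs depend entirely on the framework and the device, so the model only shows the shape of the problem, not its size.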

Nobody noticed in code review.

Everyone noticed in production.

Real Scenarios Where Things Break Down

This is where theory meets reality.

I’ve seen cross-platform apps struggle most in areas like:

- Complex gesture handling

- Heavy animations tied to scroll

- Camera and media processing

- Bluetooth and sensor access

- Background tasks with strict OS limits

McKinsey research shows that apps with inconsistent interaction timing lose up to 20 percent of engaged users within the first week. Users don’t care why something feels slow. They just leave.

Native code has fewer layers to fight through. Cross-platform code has more places where timing can slip.

That difference matters under pressure.

The Emotional Side Teams Don’t Talk About

Here’s the part people skip.

When a cross-platform app hits a native wall, teams feel betrayed. They followed best practices. They chose popular tools. They shipped clean code.

CDC usability research shows that developer experience strongly affects product quality over time, especially under sustained pressure. When teams feel blocked by their tools, quality erodes quietly.

I’ve sat in meetings where no one wanted to say the obvious thing. “We might need native work.” It feels like admitting failure.

It isn’t. It’s just reality catching up.

A Quote That Stuck With Me

A mobile architect once said this during a postmortem.

“Cross-platform gets you to the wall faster. Native decides how hard you hit it.”

Mobile Architecture Lead

That sentence explained months of frustration in one breath.

Cross-platform frameworks accelerate development. They don’t remove physics.

Where Location-Based Teams Feel It Too

I’ve watched teams doing mobile app development in San Diego hit these same limits while scaling successful apps. Geography doesn’t matter. Device constraints do.

As usage grows, edge cases multiply. Platform quirks surface. Native APIs behave slightly differently than expected. The abstraction thins.

WHO digital product studies point out that scalability stress often exposes architectural tradeoffs made early, not late-stage mistakes. Cross-platform choices fall squarely into that category.

You don’t pay the cost upfront. You pay it when it hurts.

Knowing When Cross-Platform Is Still the Right Choice

This isn’t an argument against cross-platform development.

For many products, it’s the smartest decision. MVPs. Content-driven apps. Tools with limited native interaction.

Statista data shows that time-to-market remains the top reason teams choose cross-platform, even over performance concerns. That tradeoff is rational.

The mistake is pretending the tradeoff doesn’t exist.

What I Look For Now

After years of shipping and fixing these apps, I watch for early warning signs.

- UI logic tightly coupled to native responses

- Frequent bridge calls during animations

- Performance issues that appear only on specific devices

- Bugs that vanish when rewritten natively
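For frequent bridge calls during animations, it can help to count calls per frame. The following rough Python sketch assumes you can wrap whatever functions cross into native code. `BridgeTracer` records a timestamp per call, and `busy_frames` buckets those timestamps into 60 Hz frame windows and reports the ones over a limit. Both names, the `set_native_opacity` stand-in, and the default limit of two calls per frame are hypothetical choices, not part of any framework.

```python
import functools
import time
from collections import Counter
from typing import Any, Callable


class BridgeTracer:
    """Records a timestamp (ms since creation) each time a wrapped function runs.

    The wrapped functions stand in for calls that cross into native code.
    """

    def __init__(self) -> None:
        self._start = time.perf_counter()
        self.call_times_ms: list[float] = []

    def wrap(self, fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def traced(*args: Any, **kwargs: Any) -> Any:
            self.call_times_ms.append((time.perf_counter() - self._start) * 1000)
            return fn(*args, **kwargs)

        return traced


def busy_frames(call_times_ms: list[float], frame_ms: float = 1000 / 60, max_calls: int = 2) -> dict[int, int]:
    """Map frame index -> call count, keeping only frames with more than max_calls calls."""
    per_frame = Counter(int(t // frame_ms) for t in call_times_ms)
    return {frame: n for frame, n in sorted(per_frame.items()) if n > max_calls}


def set_native_opacity(value: float) -> None:
    """Hypothetical setter; in a real app this call would cross the bridge."""


tracer = BridgeTracer()
traced_set_opacity = tracer.wrap(set_native_opacity)
for step in range(10):
    traced_set_opacity(step / 10)  # ten calls in a tight loop, most likely inside one frame
print(busy_frames(tracer.call_times_ms))
```

In a real app, the timestamps would come from whatever tracing the framework or profiler already provides. The bucketing logic stays the same.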

When those patterns show up, I stop arguing with the framework.

I listen to it.

The Honest Ending

Cross-platform apps don’t fail because they’re cross-platform. They struggle when teams expect them to behave like native code under native pressure.

Abstractions are tools, not shields.

Once you accept that, decisions get easier. Some features stay shared. Some go native. The app gets calmer. Users feel it.

And that vague feedback stops showing up in your inbox.

Which, honestly, is the real performance win.

FAQs From My Experience With Cross-Platform Native Bottlenecks

What does a native bottleneck actually feel like in a real app?

It almost never feels dramatic.

There’s no obvious failure. No red screen. No crash logs screaming for attention.

Instead, the app hesitates. A button responds half a beat late. A scroll stutters once every few swipes. Animations feel slightly out of rhythm.

Pew Research has shown that users struggle to describe performance issues precisely. They rely on feelings. “Slow.” “Clunky.” “Off.” Those vague words usually point straight at a native bottleneck hiding behind a cross-platform layer.

That’s why these problems linger longer than they should.

Why don’t native bottlenecks show up during early testing?

Because early usage is forgiving.

Low data volume. Short sessions. Few edge cases.

Statista reports that most performance complaints emerge only after apps reach sustained daily usage. That’s when bridges get exercised constantly, sensors get accessed repeatedly, and background limits start biting.

I’ve shipped apps that felt flawless in staging and barely survived their first real traffic spike. Nothing changed in the code. Everything changed in how hard the platform was being pushed.

Are cross-platform frameworks themselves the problem?

No. And blaming them is lazy.

The issue is expectation.

Cross-platform frameworks simplify development. They don’t erase platform constraints. Harvard Business Review research shows that abstraction layers often delay visibility into system limits, not remove them.

When teams treat shared code as if it behaves like native code under stress, that’s when friction starts. The framework did its job. The assumption didn’t.

What are the most common native bottlenecks I’ve run into?

The same ones, over and over.

- UI updates waiting on main-thread native calls

- Animation timing dependent on bridge communication

- Camera, media, or sensor APIs under heavy load

- Background execution limits enforced by the OS

- Platform-specific behavior differences that only show up at scale

McKinsey research indicates that inconsistent interaction timing causes sharper drops in user trust than missing features. Native bottlenecks create exactly that inconsistency.

They don’t fail loudly. They fail repeatedly.

Why do performance issues often appear only on certain devices?

This used to confuse me.

Same code. Same framework. Different results.

WHO digital usability studies point out that device diversity magnifies architectural weaknesses. Lower-end hardware, aggressive OS scheduling, and vendor-specific changes expose timing assumptions baked into cross-platform designs.

If an app struggles on mid-range devices first, that’s usually a warning, not an anomaly.
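One way to check for that pattern is to group frame timings by device class and compare jank rates. Here, "jank" simply means a frame over the 60 Hz budget. The device labels, the sample data, and the 10 percent threshold in this minimal Python sketch are all hypothetical.

```python
from collections import defaultdict


def jank_rate_by_device(samples: list[tuple[str, float]], budget_ms: float = 1000 / 60) -> dict[str, float]:
    """Fraction of frames over budget, grouped by device label."""
    totals: dict[str, int] = defaultdict(int)
    janky: dict[str, int] = defaultdict(int)
    for device, frame_ms in samples:
        totals[device] += 1
        if frame_ms > budget_ms:
            janky[device] += 1
    return {device: janky[device] / totals[device] for device in sorted(totals)}


def flag_devices(rates: dict[str, float], threshold: float = 0.1) -> list[str]:
    """Device labels whose jank rate exceeds an (arbitrary) threshold."""
    return [device for device, rate in rates.items() if rate > threshold]


# Invented telemetry: (device class, frame time in ms).
samples = (
    [("flagship", 10.0)] * 20
    + [("mid_range", 14.0)] * 15 + [("mid_range", 21.0)] * 5
    + [("budget", 18.0)] * 12 + [("budget", 15.0)] * 8
)

rates = jank_rate_by_device(samples)
print(rates)
print(flag_devices(rates))
```

With this invented data, the flagship never misses a frame while the mid-range and budget classes do. That is the kind of split that is easy to dismiss as an anomaly.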

Can profiling tools always catch native bottlenecks?

They help. They don’t tell the whole story.

Profilers show spikes. Frame drops. CPU usage. What they don’t show is how users experience those spikes.

CDC usability research highlights that short, repeated delays feel worse to users than rare long ones. Profiling might show acceptable averages while users still complain.
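Here is a small example of how an average can hide the problem. The Python below is a sketch with invented frame times. It compares a session with a short hitch every sixth frame against one with a single long stall. Both averages sit under the 16.7 ms budget; only the 95th percentile and the count of over-budget frames reveal the repeated hitches. The nearest-rank percentile used here is one of several common definitions.

```python
import statistics


def frame_report(frame_times_ms: list[float], budget_ms: float = 1000 / 60) -> dict[str, float]:
    """Summarize a session: mean frame time, nearest-rank p95, and frames over budget."""
    ordered = sorted(frame_times_ms)
    rank = -(-95 * len(ordered) // 100)  # ceil(0.95 * n) using integer math
    return {
        "mean_ms": statistics.mean(ordered),
        "p95_ms": ordered[rank - 1],
        "janky_frames": sum(1 for t in ordered if t > budget_ms),
    }


# Invented sessions of 60 frames each.
repeated_hitches = ([12.0] * 5 + [22.0]) * 10  # a 22 ms frame every sixth frame
one_long_stall = [12.0] * 59 + [150.0]         # a single 150 ms freeze

for name, session in (("repeated", repeated_hitches), ("single", one_long_stall)):
    print(name, frame_report(session))
```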

I’ve learned to trust feedback that sounds emotional. That’s where bottlenecks usually hide.

When should a team move parts of an app to native code?

When workarounds start stacking.

If fixing one issue requires three compromises.

If performance patches feel fragile.

If debugging sessions keep ending with “the framework doesn’t like this.”

Those are signals.

A mobile architect once told me this, and it stayed with me.

“Shared code saves time until it starts costing attention.”

Mobile Systems Architect

Native code isn’t a failure. It’s a release valve.

Does going partially native defeat the purpose of cross-platform development?

Not in practice.

Most successful cross-platform apps I’ve worked on aren’t purely cross-platform anymore. Core flows stay shared. High-pressure features go native.
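The shape of that split can be sketched as a simple routing layer. Every feature has a shared implementation, and a few high-pressure ones get a native override behind the same call. This is a hypothetical Python illustration of the idea, not any framework's API. In a real app, the override would be a platform module written in Swift or Kotlin and exposed through the framework's own native-module mechanism; the lambdas here only stand in for it.

```python
from typing import Callable

Handler = Callable[[], str]


class FeatureRegistry:
    """Routes each feature to a native override if one exists, else to shared code."""

    def __init__(self) -> None:
        self._shared: dict[str, Handler] = {}
        self._native: dict[str, Handler] = {}

    def register_shared(self, name: str, handler: Handler) -> None:
        self._shared[name] = handler

    def register_native(self, name: str, handler: Handler) -> None:
        self._native[name] = handler

    def resolve(self, name: str) -> Handler:
        # Native overrides win; everything else stays on the shared path.
        if name in self._native:
            return self._native[name]
        return self._shared[name]  # raises KeyError for unknown features

    def native_features(self) -> list[str]:
        return sorted(self._native)


registry = FeatureRegistry()
registry.register_shared("feed", lambda: "shared feed")
registry.register_shared("camera", lambda: "shared camera")
registry.register_native("camera", lambda: "native camera")  # hypothetical native module

print(registry.resolve("feed")())    # shared path
print(registry.resolve("camera")())  # native override
```

The point of this design is that the call site stays identical. Moving a feature to native code later changes one registration, not every screen that uses it.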

Statista data shows that hybrid approaches are becoming more common as apps mature. Teams learn where abstraction helps and where it hurts.

Purity looks nice on diagrams. Users never see diagrams.

How do native bottlenecks affect teams emotionally?

More than anyone admits.

When developers feel blocked by tools, frustration leaks into decisions. Shortcuts appear. Quality slips quietly.

McKinsey research on developer productivity links sustained friction with long-term product instability. Native bottlenecks don’t just slow apps. They slow teams.

I’ve felt that weight in sprint planning meetings where no option felt good.

What mindset helped me deal with native bottlenecks best?

Acceptance.

Not surrender. Acceptance.

Cross-platform development is a trade. Speed for control. Reach for precision. Once I stopped pretending otherwise, decisions became calmer.

Some code stayed shared. Some went native. The app stabilized. Users noticed.

And the inbox got quieter.

Which, honestly, is how you know you made the right call.
