The Future of AI Isn’t Just Smarter Models. It’s Smarter Verification

Why the Future of AI Depends on Verification, Not Just Generation.

The question was asked in a quiet conference room.

Three letters on the frosted glass door: S-E-C.

The room fell still.

Not because the question was too complex. But because everyone already knew the answer, even if no one wanted to say it out loud.

It wasn’t about better prompts.

It wasn’t about bigger datasets.

It wasn’t about another record-breaking model.

The next breakthrough in artificial intelligence wasn’t about more. It was about different.

Not in the way most of LinkedIn imagines. Not in the way VC-funded AI labs burn billions trying to stack GPUs and scale up language models like a game of digital Jenga.

Not in the swarm of prompt engineering hacks flooding newsletters and courses every week.

The real shift came from a quieter place.

A realization so obvious, yet so overlooked.

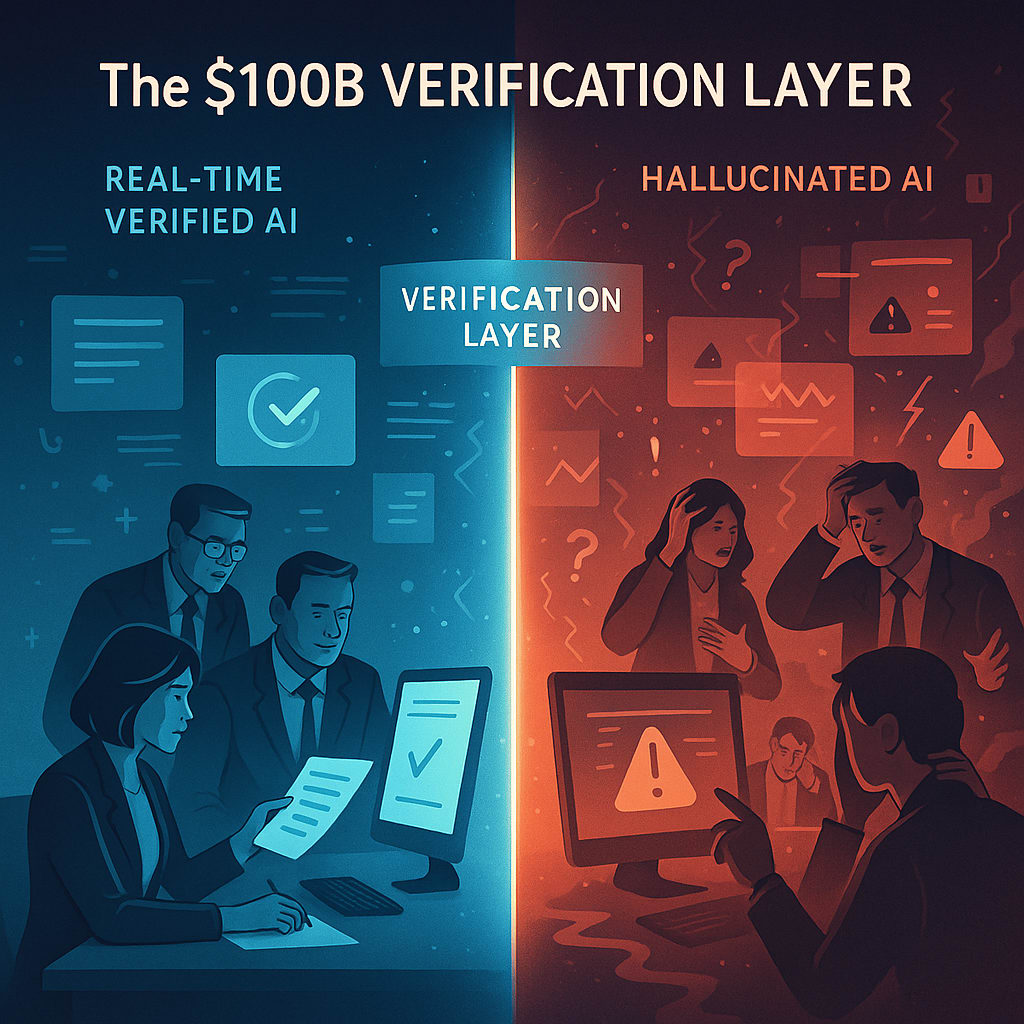

The magic isn’t in generation. It’s in verification.

Why Generation Alone Is Broken

If you’ve used any generative AI lately (ChatGPT, Gemini, Claude, whatever), you’ve seen the problem firsthand.

It can sound smart. Until it makes something up.

A fake study. A quote that doesn’t exist. A news story that never happened. A revenue projection without any backing.

We’ve normalized this, calling it “hallucination.” But in any serious context (law, finance, healthcare, journalism), we wouldn’t call that a bug.

We’d call it a liability.

And yet, most companies continue to chase the next wave of better generation. The cleaner prompt. The larger model. The faster inference.

Meanwhile, something more powerful is quietly taking shape beneath the surface.

A shift from artificial intelligence that just creates, to AI that can verify what it says as it says it.

The Three Principles Behind Professional-Grade AI

Here’s what the top thinkers, builders, and platforms are doing differently.

They’ve realized that professional intelligence doesn’t come from sounding right.

It comes from being provably right.

And that requires building verification into the DNA of every response.

1. Real-Time Fact-Checking During Creation

Think of it like a co-pilot, not a cleanup crew.

Instead of writing a full paragraph and then manually auditing it (which almost no one actually does), modern verification layers now catch falsehoods mid-sentence.

Take Goldman Sachs, for example.

When an internal AI tool tried to cite a nonexistent FDA approval, the verification layer flagged it immediately. The system didn’t wait until after a report had reached stakeholders or clients.

This is dynamic validation: cross-referencing data while generating, not after.

Imagine that at scale:

• AI tools that refuse to finish a sentence without backing.

• Press releases that self-audit as they’re written.

• Internal memos that correct themselves in real time.

That’s not the future. That’s happening now, for those who build with verification first.
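To make the idea concrete, here is a minimal sketch of checking claims while text is being produced rather than after. Everything in it is an assumption for illustration: the function names, the `KNOWN_APPROVALS` set standing in for a trusted record store, and the toy rule that only looks at sentences containing the word "approved". It is not how any particular company's system works.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class CheckedSentence:
    text: str
    supported: bool


def verify_while_generating(
    sentences: Iterable[str],
    is_supported: Callable[[str], bool],
) -> Iterator[CheckedSentence]:
    """Check each sentence as it arrives, before the next one is emitted."""
    for sentence in sentences:
        yield CheckedSentence(text=sentence, supported=is_supported(sentence))


# Hypothetical stand-in for a trusted record store (e.g. a regulatory database).
KNOWN_APPROVALS = {"drug a"}


def approval_claim_is_supported(sentence: str) -> bool:
    """Toy rule: a sentence claiming an approval must name a known product."""
    lowered = sentence.lower()
    if "approved" not in lowered:
        return True  # nothing to check in this toy example
    return any(name in lowered for name in KNOWN_APPROVALS)


# A hypothetical draft, fed in one sentence at a time.
draft = [
    "Drug A was approved last year.",
    "Drug B was approved last month.",
    "Both target the same condition.",
]
results = list(verify_while_generating(iter(draft), approval_claim_is_supported))
flagged = [r.text for r in results if not r.supported]
print(flagged)
```

Because the check runs inside the loop that yields sentences, an unsupported claim is flagged the moment it appears, not after the full draft exists. A real system would replace the toy rule with lookups against sources it actually trusts.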

2. Source Attribution That Forces Transparency

In academic research, citations are non-negotiable. In journalism, fact-checking is sacred. So why have we accepted AI outputs with no visible sources?

The new frontier of trustworthy AI enforces a simple rule:

If it can’t show where something came from, it doesn’t get to say it.

No more ghost statistics.

No more “$2.3 billion market projections” pulled from thin air.

No more “AI said so” replacing real analysis.

This principle of source-first design is flipping AI development on its head.

Models must now surface links, documents, evidence, and metadata alongside their claims. Not just to earn trust, but to allow humans to audit, trace, and make final decisions.

It’s about accountability in context, not blind trust.
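The rule "if it can’t show where something came from, it doesn’t get to say it" can be sketched in a few lines. This is a hedged illustration, not a standard API: the `Claim` type, the helper names, and the source identifier are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    text: str
    sources: tuple[str, ...] = ()  # identifiers a human can follow and audit


def split_by_attribution(claims: list[Claim]) -> tuple[list[Claim], list[Claim]]:
    """Separate claims that cite at least one source from those that cite none."""
    sourced = [c for c in claims if c.sources]
    unsourced = [c for c in claims if not c.sources]
    return sourced, unsourced


def render(claim: Claim) -> str:
    """Show the claim together with its sources so a reader can trace it."""
    return f"{claim.text} [sources: {'; '.join(claim.sources)}]"


# Hypothetical claims; the source identifier is made up for illustration.
claims = [
    Claim("Revenue grew year over year.", ("hypothetical-annual-report.pdf, p. 12",)),
    Claim("The market will reach $2.3 billion."),
]
sourced, unsourced = split_by_attribution(claims)
published = [render(c) for c in sourced]
print(published)
print([c.text for c in unsourced])
```

Only the sourced claim is rendered for publication. The unsourced projection is held back for a human to trace or drop, which keeps the final decision with a person.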

3. Confidence Scoring With Real Consequences

Most models produce some form of confidence signal under the hood, such as token probabilities. But most tools bury those signals or, worse, ignore them entirely.

In professional AI, confidence must be visible, meaningful, and actionable.

When the system says, “I’m 94% sure,” that should mean something.

When it drops to 67%, it should warn you before the output goes public.

That margin isn’t just statistical.

It’s ethical.

Because the difference between a confident wrong and an uncertain maybe can cost millions, or lives, in fields like medicine, finance, or law.

The best tools now use thresholds and trigger alerts to flag when human intervention is needed. They don’t pretend to be all-knowing. They tell you when not to trust them.

That honesty?

That’s professional-grade intelligence.
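As a rough sketch of what "thresholds and trigger alerts" could look like, the snippet below routes an output based on its confidence score. The cut-offs (0.90 and 0.70), the `Action` names, and the `route` function are assumptions chosen for illustration, and the sketch presumes the score is calibrated, meaning 94% really does correspond to being right about 94% of the time.

```python
from enum import Enum


class Action(Enum):
    PUBLISH = "publish"
    WARN = "warn"
    HUMAN_REVIEW = "human_review"


def route(confidence: float, publish_at: float = 0.90, review_below: float = 0.70) -> Action:
    """Map a confidence score in [0, 1] to what happens next with the output."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= publish_at:
        return Action.PUBLISH
    if confidence < review_below:
        return Action.HUMAN_REVIEW  # too uncertain to go out without a person
    return Action.WARN  # usable, but shown with a visible caution


print(route(0.94))
print(route(0.80))
print(route(0.67))
```

With these assumed thresholds, a 94% score is published, an 80% score goes out with a warning, and a 67% score is stopped for human review before anything goes public. Where the lines sit should depend on how costly a wrong answer is in the field.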

What the Smartest Companies Are Really Doing

So, what are the companies that truly get it doing?

They’re not chasing better models.

They’re building invisible verification.

They don’t just slap on AI to look innovative. They embed systems of trust that prevent problems before they happen.

• While others debug hallucinations, they design them out from the start.

• While others keep tinkering with “prompt engineering,” they’re architecting frameworks for trust at scale.

• While others build for outputs, they build for audit trails.

That’s the difference between AI that impresses and AI that lasts.

The Quiet Revolution: Invisible Intelligence

There’s a reason you’re not seeing this shouted in every press release.

It’s not sexy. It’s not flashy.

It doesn’t make for viral demos.

Because verification isn’t loud.

It’s quiet AI.

It’s the infrastructure underneath, the kind you only notice when it’s missing.

And yet, this quiet layer will define the next decade of AI.

Not the prompt tricks.

Not the speed.

Not the size of the model.

But whether or not it can prove what it says.

The $100 Billion Question

So, what’s the real question?

It’s not: How do we train AI better?

It’s: Who verifies the verifiers?

In a world where LLMs train on other LLMs, where synthetic content loops back into new models, and where truth becomes increasingly fluid, verification becomes our last line of defense.

It’s not optional.

It’s foundational.

And the people building it? They’re not always the loudest. They’re not chasing clout. They’re building trust infrastructure in the shadows.

They understand one thing:

The future won’t belong to those who create the most.

It’ll belong to those who validate the best.

So, Where Do You Stand?

Are you chasing smarter generation?

Or are you ready to build something deeper?

Because the new standard isn’t who can make AI sound smartest.

It’s who can make AI reliable, auditable, and real.

The age of flashy generation is fading.

The era of invisible verification is beginning.

And the only question left is:

Are you one of the people building it?

About the Creator

Prince Esien

Storyteller at the intersection of tech and truth. Exploring AI, culture, and the human edge of innovation.
