My Journey Through The OpenAI Research Engineer Job Interview

OpenAI RE Interview Process and Questions

You know how OpenAI is a big name in artificial intelligence research and development? Well, it all started in San Francisco in 2015, founded by some seriously big hitters in the tech industry. We're talking about people like Elon Musk, of Tesla and SpaceX fame, and Sam Altman, who used to run Y Combinator. Since then, OpenAI has churned out some pretty amazing stuff that's caught people's attention.

You've probably heard of their GPT language models, which are hugely popular, or maybe DALL-E, the one that turns text into pictures, or even their Whisper speech recognition model.

Why I Chose OpenAI RS To Start My Career

Ever since starting my undergraduate studies in computer science at the University of Chicago, I've been deeply interested in OpenAI. After all, it's a company that plays such a significant role in the field of artificial intelligence, so who wouldn't be excited by the prospect of working alongside brilliant minds to push the boundaries of technology? This long-standing aspiration is what solidified my decision to apply for the OpenAI RS position. I was fortunate enough to land this valuable interview opportunity in spring 2025.

I also owe a big thank you to my interview coaching team, CSOAsupport, whose careful guidance was instrumental in helping me perform well during the interview. Below, I'll share some of my recent experiences, key takeaways, and the interview questions from the RS position interview process.

Job Interview Process

I put in my online application back in early March 2025 and got invited for a phone interview about 10 days later. The interviewer went over my resume, and we discussed the courses I took and projects I worked on during my undergrad and grad studies.

My LeetCode level is average: I can only solve about 30% of the Medium problems. Luckily, the interview questions were mostly related to AI algorithms. I passed the phone screen and, 5 days later, received an email inviting me to an on-site interview.

Phone Interview Questions

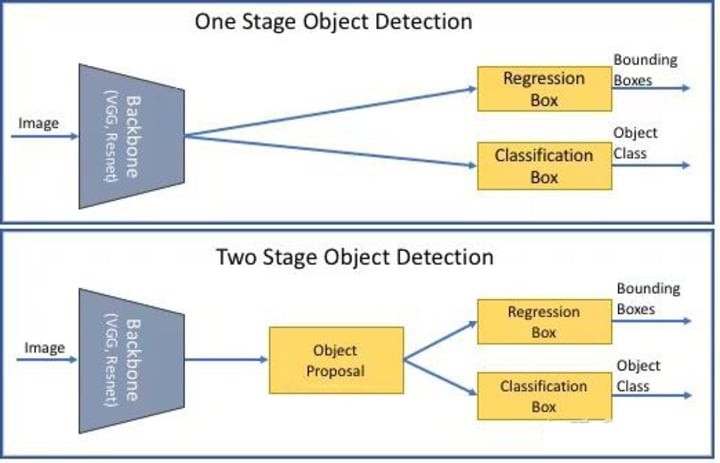

I recall one deep learning question where I had to implement a convolutional neural network (CNN) for image classification. They gave a specific task, such as distinguishing animals like cats and dogs, and asked me to design and build a CNN model, then train it to maximize its classification performance. The problem also required locating the objects in the image (predicting their bounding boxes), which made it a classic object detection and classification task.
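The post doesn't say which model I actually built, so what follows is only a minimal, framework-free sketch of the basic CNN building block (convolution, ReLU, 2x2 max pooling) on a single-channel image. All function names are illustrative, and the kernel is hand-picked rather than learned. A real cat-vs-dog model would stack many such blocks with learned kernels in a framework such as PyTorch, end in a classification head, and, for detection, add a head that predicts bounding boxes.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Stride-1, no-padding 2D cross-correlation (what CNN "convolution" layers compute)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise max(0, x) non-linearity."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool_2x2(fmap: Matrix) -> Matrix:
    """2x2 max pooling with stride 2; an odd trailing row/column is dropped."""
    return [
        [
            max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
            for j in range(0, len(fmap[0]) - 1, 2)
        ]
        for i in range(0, len(fmap) - 1, 2)
    ]


def conv_block(image: Matrix, kernel: Matrix) -> Matrix:
    """One CNN stage: convolution -> ReLU -> max pooling."""
    return max_pool_2x2(relu(conv2d(image, kernel)))


# Toy 5x5 image containing a vertical edge, and a hand-picked vertical-edge kernel
# (in a trained CNN the kernel values are learned, not hand-picked).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
kernel = [[-1, 0, 1] for _ in range(3)]
print(conv2d(image, kernel))      # [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
print(conv_block(image, kernel))  # [[3]]
```

The kernel responds strongly where pixel values jump from 0 to 1, which is the intuition behind learned edge and texture detectors in early CNN layers.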

Technical Interview

Given that I was applying for an algorithm engineer role and had published work (open source on GitHub) on my resume, I wasn't asked much about general software-development coding. The interview consisted of three technical rounds centered on AI algorithms.

The first round covered basic AI algorithms and included a fairly rigorous coding test. They would present a real-world problem and expect me to write the code to solve it on the spot. For example, the first question I encountered was on Natural Language Processing (NLP): implementing a text classification algorithm that accurately assigns a given text to one of several predefined categories.

This question tested my programming skills, logical thinking, and problem-solving abilities. Because NLP problems are complex and large text datasets are often noisy and ambiguous, I ran into considerable challenges along the way. Nonetheless, I stayed calm, walked the interviewer through my reasoning step by step, and eventually arrived at a workable solution and implemented it successfully.
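I don't go into the exact approach here, so the following is just one classic baseline for this kind of question: a minimal multinomial Naive Bayes text classifier in plain Python. It assumes whitespace tokenization and add-one (Laplace) smoothing, and the class name and toy training data are hypothetical. In an interview, a baseline like this is a reasonable starting point before discussing learned embeddings or a fine-tuned Transformer.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple


def tokenize(text: str) -> List[str]:
    """Naive lowercase + whitespace tokenizer (an assumption for this sketch)."""
    return text.lower().split()


class NaiveBayesTextClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.class_counts: Counter = Counter()
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        self.vocab: Set[str] = set()

    def fit(self, docs: List[Tuple[str, str]]) -> "NaiveBayesTextClassifier":
        for text, label in docs:
            self.class_counts[label] += 1
            for word in tokenize(text):
                self.word_counts[label][word] += 1
                self.vocab.add(word)
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.class_counts.values())
        best_label, best_score = "", -math.inf
        for label, n_docs in self.class_counts.items():
            # Work in log space: log P(label) + sum of log P(word | label)
            score = math.log(n_docs / total_docs)
            total_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                if word not in self.vocab:
                    continue  # ignore words never seen in training
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


train = [
    ("great movie loved it", "positive"),
    ("wonderful acting great plot", "positive"),
    ("terrible movie hated it", "negative"),
    ("boring plot awful acting", "negative"),
]
clf = NaiveBayesTextClassifier().fit(train)
print(clf.predict("loved the great acting"))  # positive
print(clf.predict("awful boring movie"))      # negative
```

Summing log-probabilities instead of multiplying raw probabilities avoids numerical underflow on long documents.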

Second Round Technical Interview Aspects

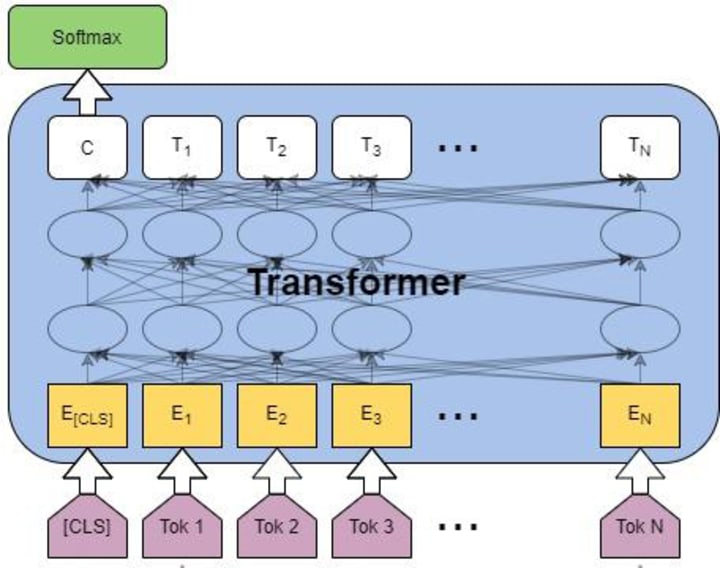

I was asked about the core concepts of the Transformer model and how one would go about implementing a machine translation model with that architecture. Since I know the Transformer well and use it a lot, I structured my answer into the following sections.

Self-Attention:

At the core of the Transformer is the self-attention mechanism, which lets the model selectively attend to other parts of the sequence when processing any given element. Here's how it generally works: each input element is mapped by learned linear transformations into Query, Key, and Value vectors. You compute how similar a Query is to each Key (a dot product, scaled by the square root of the key dimension) and pass the scores through a softmax to get attention weights, i.e., how much focus to put on each element. Then you sum the Value vectors, weighted by those attention weights, to get the output representation.
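These steps correspond to scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, from the original Transformer paper. Below is a minimal plain-Python sketch. To keep it short, it skips the learned projections and uses the input itself as Q, K, and V; that is an assumption for illustration only, and the helper names are not from any library.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    d_k = len(k[0])
    # Similarity of every query to every key, scaled by sqrt(d_k)
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output row is a weighted sum of the value vectors
    return matmul(weights, v)


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
out = scaled_dot_product_attention(x, x, x)  # Q = K = V = x (projections omitted)
print(out)
```

Dividing by sqrt(d_k) keeps the dot products from growing with the vector size, which would otherwise push the softmax toward near one-hot weights.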

Multi-Head Attention:

To enhance the model's ability to capture complex patterns, the Transformer employs Multi-Head Attention. Instead of a single attention function, each attention layer runs several attention "heads" in parallel. Each head applies its own learned linear projections to the queries, keys, and values, allowing it to look at the data from a different perspective. The heads' outputs are then concatenated and passed through a final linear projection, so together they can capture a wider range of relationships in the data than a single head could.
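Here is a minimal plain-Python sketch of multi-head self-attention. It rests on several assumptions: the projection matrices are random (seeded) stand-ins for learned weights, there is no batching or masking, and d_model must divide evenly by the number of heads. The helper names are illustrative, not from any library.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention for a single head."""
    scale = math.sqrt(len(k[0]))
    weights = [softmax([sum(a * b for a, b in zip(qi, kj)) / scale for kj in k]) for qi in q]
    return matmul(weights, v)


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    # Stand-in for learned weights (assumption for this sketch)
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


def multi_head_self_attention(x: Matrix, num_heads: int, rng: random.Random) -> Matrix:
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    heads = []
    for _ in range(num_heads):
        # Each head gets its own Q/K/V projections from d_model down to d_head
        w_q, w_k, w_v = (random_matrix(d_model, d_head, rng) for _ in range(3))
        heads.append(attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)))
    # Concatenate the heads' outputs position by position, then project with W_o
    concat = [[val for head in heads for val in head[i]] for i in range(len(x))]
    w_o = random_matrix(d_model, d_model, rng)
    return matmul(concat, w_o)


x = [[1.0, 0.0, 1.0, 0.0], [0.0, 2.0, 0.0, 1.0], [1.0, 1.0, 1.0, 1.0]]
out = multi_head_self_attention(x, num_heads=2, rng=random.Random(0))
print(len(out), len(out[0]))  # 3 4: one d_model-sized vector per token
```

Because each head works in a smaller d_head space, the total cost stays roughly the same as one full-width head while still giving the model several independent attention patterns.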

Positional Encoding:

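Unlike models built on recurrence or convolutions, the Transformer has no built-in sense of the order of words or tokens in a sequence, because self-attention by itself treats the input as an unordered set. So it injects position information through Positional Encoding: vectors that are added to the token embeddings. In the original Transformer these are computed with sine and cosine functions of different frequencies, giving every position a unique encoding; learned position embeddings are a common alternative.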
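The sinusoidal scheme from the original Transformer sets PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). Here is a minimal plain-Python sketch; the function name is illustrative.

```python
import math
from typing import List


def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> List[List[float]]:
    """Returns a seq_len x d_model table; row `pos` is added to the embedding of token `pos`."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model)
            angle = pos / 10000 ** ((i - i % 2) / d_model)
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


pe = sinusoidal_positional_encoding(seq_len=3, d_model=4)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0]: sin(0) and cos(0) at position 0
```

Low dimensions oscillate quickly and high dimensions slowly, so each position gets a distinct pattern without any learned parameters.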

Encoder-Decoder Structure:

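The Transformer uses a standard encoder-decoder architecture. The encoder processes the input sequence and turns it into a sequence of contextual vector representations, one per input token. The decoder then generates the output sequence one token at a time, attending both to the tokens it has already produced and, through cross-attention, to the encoder's representations. Because the source and target are handled by separate stacks, this design naturally deals with input and output sequences of different lengths, which is exactly what machine translation needs.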
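To make the encoder-decoder link concrete, here is a minimal plain-Python sketch of the cross-attention step. Queries come from the decoder, while keys and values come from the encoder output. It assumes identity projections (no learned weights), a single head, and toy vectors, all chosen for illustration. Note that the output has one row per target position regardless of the source length.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(decoder_states: Matrix, encoder_memory: Matrix) -> Matrix:
    """Queries from the decoder; keys and values from the encoder output.
    Learned Q/K/V projections are omitted (identity) to keep the sketch short."""
    d_k = len(encoder_memory[0])
    outputs = []
    for query in decoder_states:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in encoder_memory]
        weights = softmax(scores)
        # Weighted sum of encoder vectors: what this target position "reads" from the source
        outputs.append([
            sum(w * value[c] for w, value in zip(weights, encoder_memory)) for c in range(d_k)
        ])
    return outputs


encoder_memory = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3 source tokens
decoder_states = [[2.0, 0.0], [0.0, 2.0]]              # 2 target tokens so far
context = cross_attention(decoder_states, encoder_memory)
print(len(context), len(context[0]))  # 2 2: one context vector per target token
```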

Residual Connections and Layer Normalization:

Deep neural networks can suffer from vanishing or exploding gradients during training. To mitigate this, the Transformer wraps each sublayer in a residual connection followed by Layer Normalization. Residual connections give gradients a direct path through the network, reducing the risk of vanishing gradients. Layer Normalization stabilizes training by rescaling each token's features to zero mean and unit variance (followed by a learned scale and shift), keeping activations within a consistent range.
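Here is a minimal plain-Python sketch of one "Add & Norm" step for a single token vector. It uses the post-norm ordering of the original Transformer, LayerNorm(x + Sublayer(x)); many later models apply the normalization before the sublayer instead. The stand-in sublayer and the helper names are hypothetical.

```python
import math
from typing import Callable, List

Vector = List[float]


def layer_norm(x: Vector, gamma: Vector, beta: Vector, eps: float = 1e-5) -> Vector:
    """Normalize one token's features to zero mean / unit variance, then scale and shift."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [g * (v - mean) / math.sqrt(var + eps) + b for v, g, b in zip(x, gamma, beta)]


def add_and_norm(x: Vector, sublayer: Callable[[Vector], Vector], gamma: Vector, beta: Vector) -> Vector:
    # Residual connection: the sublayer's output is added to its input...
    residual = [a + b for a, b in zip(x, sublayer(x))]
    # ...and the sum is layer-normalized (post-LN, as in the original Transformer)
    return layer_norm(residual, gamma, beta)


def toy_sublayer(v: Vector) -> Vector:
    # Stand-in for attention or the feed-forward network (hypothetical)
    return [2.0 * t for t in v]


x = [1.0, 2.0, 3.0, 4.0]
y = add_and_norm(x, toy_sublayer, gamma=[1.0] * 4, beta=[0.0] * 4)
print([round(v, 3) for v in y])  # [-1.342, -0.447, 0.447, 1.342]
```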

Behavioral Interview

Next up was the behavioral interview. This section mainly covered my past experiences, with questions about how I've handled pressure and worked with teammates. My answers flowed quite smoothly since I'd prepped for these questions in advance. Still, I aimed to be authentic and share my real experiences and feelings, in hopes of making a good impression.

The Wait Begins:

Then came the long wait after the interview. Frankly, I was anxious during that time, nervous about not getting the offer. Even so, I tried my best to stay positive and told myself that regardless of the result, the process itself was a valuable experience.

About the Creator

Nackydeng

I am a full-stack engineer at Amazon.
