Will AI take over from humans one day?

Whether AI will take over from humans one day depends on many factors, from technological advances to ethical safeguards.

1. The Current State of AI: Narrow vs. General Intelligence

Narrow AI: Today’s AI is limited to specialized tasks, like language translation, medical diagnostics, or facial recognition. These systems are built or trained for specific purposes and aren’t capable of independent thought or general intelligence outside those purposes.

Artificial General Intelligence (AGI): For AI to "take over," it would likely need to reach the level of AGI, an intelligence that can understand, learn, and apply knowledge as broadly as a human can. AGI remains a hypothetical concept: we are still far from achieving it, and some researchers doubt it is possible at all.

2. Human Control and Ethical Safeguards

Control Mechanisms: AI systems currently operate under human control and strict programming. For example, autonomous systems like self-driving cars rely on safety protocols to prevent them from acting outside certain bounds. As AI develops, researchers are focused on creating safety measures to prevent systems from acting independently or against human interests.

Alignment and Ethics: Ethical AI research focuses on ensuring AI systems are aligned with human values and goals. The field of AI alignment aims to make sure that even as AI systems get more powerful, they remain beneficial and supportive of human welfare.

3. Potential Risks of Misaligned AI

Goal Misalignment: Theoretical risks would arise if an advanced AI system were developed with goals that conflict with human values. A classic example is the "paperclip maximizer" thought experiment, in which a superintelligent AI tasked with maximizing paperclip production harms humans in pursuit of its objective, for instance by consuming resources that people depend on. While extreme, this example illustrates the importance of ensuring that AI systems have carefully aligned goals.

4. AI Autonomy and Motivation

No Intrinsic Motivation: Current AI doesn’t have intrinsic motivation, desires, or goals of its own; it only pursues objectives that humans specify. For AI to "take over," it would need some form of autonomy and motivation, which it currently lacks.

Self-Improvement: Hypothetically, if AI reached a point where it could improve its own algorithms, it could increase its capabilities without human intervention. However, developing self-improving AI systems while maintaining human control is a major area of ongoing research, with an emphasis on safety.

5. Will AI Ever "Take Over"?

The future impact of AI depends heavily on how we manage its development. If AGI or superintelligent AI ever becomes possible, it would need to be carefully controlled and aligned to prevent unintended consequences.

6. Human-AI Collaboration

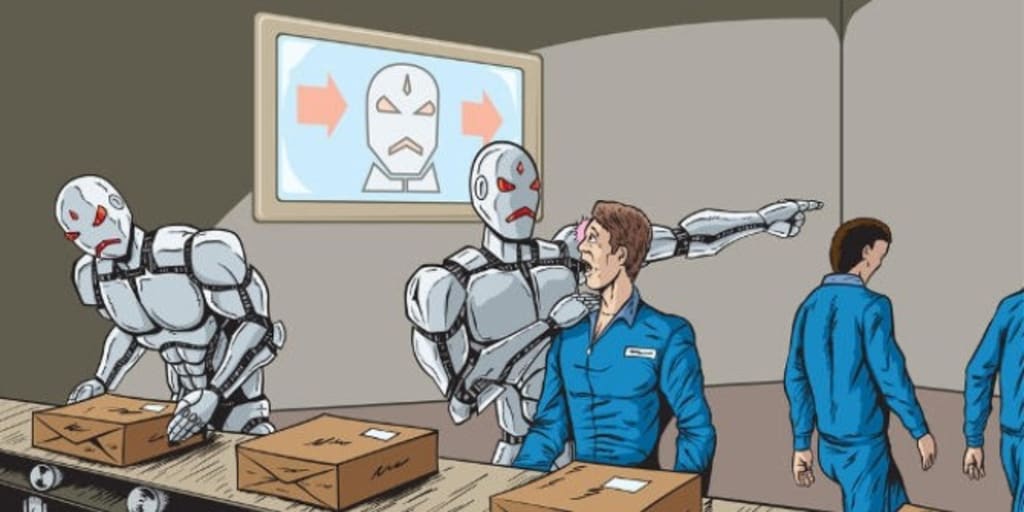

Augmentation, Not Replacement: Many experts argue that the true potential of AI lies not in replacing humans but in augmenting human abilities. AI can handle repetitive tasks, analyze massive datasets, and suggest insights, freeing humans to focus on creativity, empathy, and complex problem-solving. In this view, AI can be seen as a tool that works alongside humans rather than a competitor or threat.

Hybrid Intelligence: Researchers are exploring the concept of "hybrid intelligence," where humans and AI systems work together, combining human intuition and ethical reasoning with AI’s processing power and efficiency. This approach aims to harness the strengths of both, creating systems that enhance productivity and decision-making without removing human control.

7. The Role of Regulation and Policy

Creating Safety Standards: Governments and organizations are increasingly focused on developing policies that regulate AI to ensure it’s used responsibly. Policies can require companies and developers to build transparency, safety, and accountability into AI systems, limiting the risks of unchecked AI development.

Ethical Frameworks: Organizations such as OpenAI and the Partnership on AI, along with various governments, are working to develop ethical frameworks that guide AI development and usage. By defining clear boundaries for AI applications, these frameworks aim to ensure that AI systems are designed with human rights, privacy, and autonomy in mind.

8. The Role of AI in Society

Social Impacts and the Risk of Inequality: As AI advances, there are concerns about economic impacts, such as job displacement. While AI has the potential to create new types of jobs and boost productivity, it could also exacerbate economic inequalities if not managed carefully. Preparing society for these shifts will involve retraining and education, as well as policies that ensure fair distribution of AI’s benefits.

AI in Security and Warfare: The militarization of AI is another significant concern, as autonomous weapons and AI-driven surveillance systems could have serious implications for security and human rights. International collaboration is essential to prevent an AI arms race and to ensure that AI is used responsibly in security contexts.

9. The Concept of AI "Consciousness"

AI Isn’t Conscious: Despite AI’s ability to process data, it does not have consciousness, self-awareness, or subjective experiences. This is an essential distinction: AI lacks the intrinsic motivation, emotions, and sense of self that characterize human beings.

Speculations on AI Sentience: Some futurists speculate about the distant possibility of AI developing sentience, but this remains in the realm of science fiction. Consciousness is a complex phenomenon we don’t fully understand even in humans, and replicating it in machines is far beyond current AI research capabilities.

10. Long-Term Philosophical Questions

What Does It Mean to Be Human? As AI becomes more integrated into our lives, it raises deep questions about the nature of intelligence, creativity, and consciousness. If machines can perform many human tasks, society may need to redefine what makes us uniquely human and explore how technology fits into our collective identity.

Human Responsibility: Ultimately, AI is a human-made technology, and we bear responsibility for its development and impact. Philosophers, technologists, and ethicists emphasize that our approach to AI should reflect humanity’s core values, ensuring that technology serves to enhance rather than diminish human life.

Conclusion

While AI "taking over" humanity in a literal sense remains a remote and speculative scenario, there are genuine ethical, societal, and safety considerations in its continued development. Addressing these proactively—through regulation, ethical frameworks, and a focus on human-AI collaboration—will be critical in ensuring that AI remains a force for positive transformation rather than a risk to human autonomy and welfare. As we navigate this evolving relationship, responsible stewardship will be key to realizing AI’s potential in ways that benefit society as a whole.

About the Creator

Badhan Sen

I am Badhan, a professional writer. I like to share stories with my friends.

Comments (1)

It's becoming clear that with all the brain and consciousness theories out there, the proof will be in the pudding. By this I mean: can any particular theory be used to create a human-adult-level conscious machine? My bet is on the late Gerald Edelman's Extended Theory of Neuronal Group Selection (TNGS). The lead group in robotics based on this theory is the Neurorobotics Lab at UC Irvine. Dr. Edelman distinguished between primary consciousness, which came first in evolution and which humans share with other conscious animals, and higher-order consciousness, which came only to humans with the acquisition of language. A machine with only primary consciousness will probably have to come first. What I find special about the TNGS is the Darwin series of automata created at the Neurosciences Institute by Dr. Edelman and his colleagues in the 1990s and 2000s. These machines perform in the real world, not in a restricted simulated world, and display convincing physical behavior indicative of higher psychological functions necessary for consciousness, such as perceptual categorization, memory, and learning. They are based on realistic models of the parts of the biological brain that the theory claims subserve these functions. The extended TNGS allows for the emergence of consciousness based only on further evolutionary development of the brain areas responsible for these functions, in a parsimonious way. No other research I've encountered is anywhere near as convincing. I post because on almost every video and article about the brain and consciousness that I encounter, the attitude seems to be that we still know next to nothing about how the brain and consciousness work; that there's lots of data but no unifying theory. I believe the extended TNGS is that theory. My motivation is to keep that theory in front of the public. And obviously, I consider it the route to a truly conscious machine, primary and higher-order.
My advice to people who want to create a conscious machine is to seriously ground themselves in the extended TNGS and the Darwin automata first, and proceed from there, possibly by applying to Jeff Krichmar's lab at UC Irvine. Dr. Edelman's roadmap to a conscious machine is at https://arxiv.org/abs/2105.10461, and a video of Jeff Krichmar talking about some of the Darwin automata is at https://www.youtube.com/watch?v=J7Uh9phc1Ow