They Died Screaming: A Study of the Last Facial Expressions Captured by AI Surveillance

An investigative deep-dive into an experimental facial-recognition algorithm that may have uncovered something it shouldn’t have.

Introduction:

In early 2025, a tech startup named NeuroVisage quietly launched a pilot program with select law enforcement agencies. Its flagship tool, SENSE, was an advanced facial emotion recognition AI, trained on hundreds of thousands of faces to detect aggression, fear, deceit, and even suicidal ideation before a subject could act.

The goal was preemptive safety.

But something went wrong. And then it kept happening.

Over the course of four months, SENSE flagged 73 anomalous events. In each case, individuals were captured exhibiting the same highly specific — and previously undocumented — facial expression.

Seconds later, every one of them was dead.

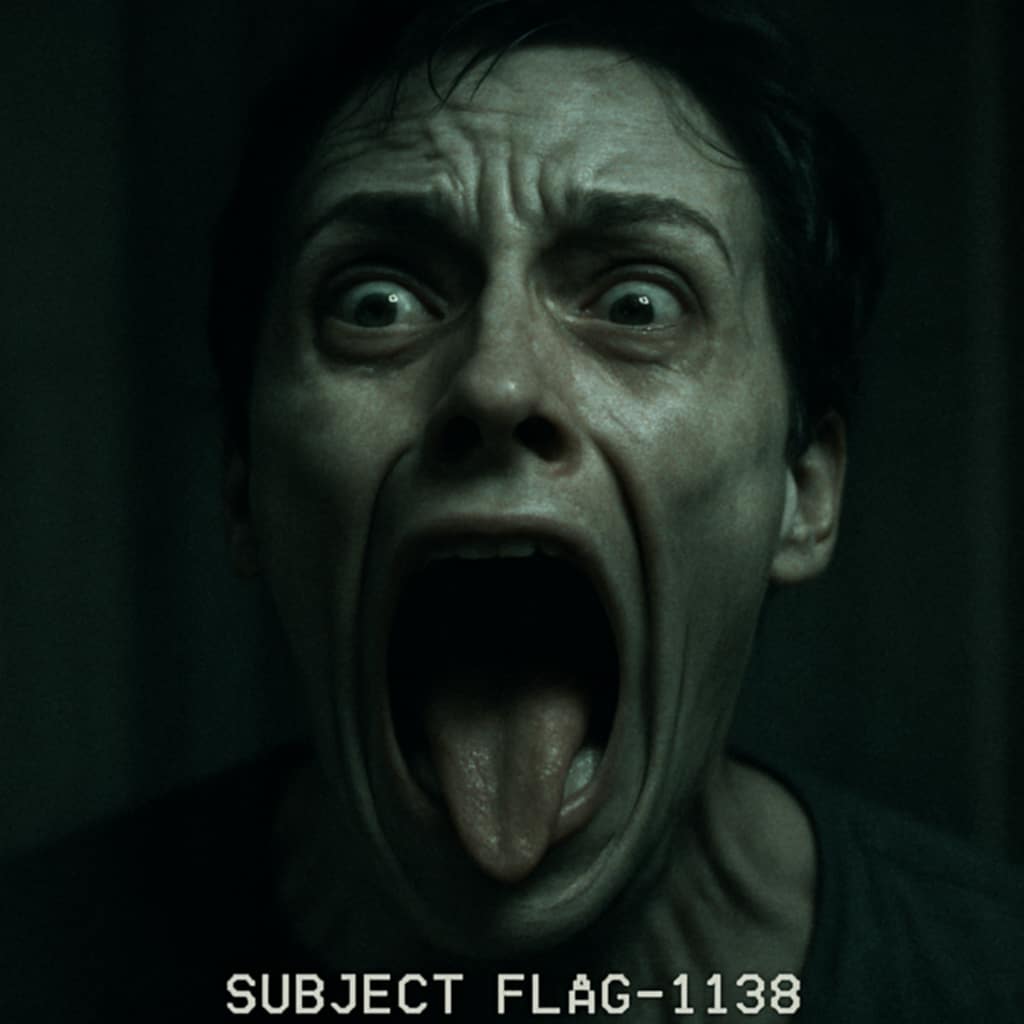

The expression has no official name. Internally, it was labeled FLAG-1138.

Unofficially, researchers called it "The Scream Without Sound."

Part I: The Algorithm That Sees Too Much

SENSE didn’t just scan for smiles or scowls. It decoded subtle heat shifts in the skin, tracked micro-muscle contractions, measured pupil oscillation, and parsed infrared data invisible to human eyes.

It was supposed to protect lives.

But FLAG-1138 changed that. According to leaked internal memos, the expression didn’t match anything in the AI’s emotional lexicon. It wasn’t fear. It wasn’t pain. It was something deeper. Something that, in the words of NeuroVisage’s former project lead, “read like the nervous system short-circuiting from witnessing something impossible.”

Each subject froze mid-motion. Their eyes bulged. Their jaws dropped unnaturally far — in several cases, actual dislocation occurred. The tongue would often loll out grotesquely, even in calm environments. One clip shows a woman making the face while reading a book in bed. Her lips peel back into a smile right before she dies.

A smile with no joy behind it. Only exposure.

Part II: Patterns in the Noise

The deaths were officially labeled as strokes, aneurysms, or spontaneous cardiac events. But a consistent forensic pattern began to emerge.

Of the 73 deaths:

61 occurred when the subjects were entirely alone.

7 took place in restrooms — with motion sensors confirming no one entered or exited.

5 occurred in vehicles, where victims were recorded slamming on brakes before veering off-road.

Every autopsy revealed ruptured ocular vessels, adrenal gland exhaustion, and elevated cortisol levels — symptoms of extreme, instantaneous terror.

No attacker. No environmental trigger. Just the face. Then death.

And the most unnerving detail? Many victims were looking at nothing when it happened. Or so it seemed. What were they looking at? What did they feel?

Part III: The Deleted Footage

Most footage was scrubbed — overwritten or encrypted beyond recovery. But three clips found their way onto dark web forums, posted by an anonymous user under the handle fractureProtocol.

In each, the victim’s gaze locks onto something just past the camera. Eyes widen. Smile stretches. Then collapse.

Digital forensics experts enhanced the footage frame by frame, focusing on the reflections in the victims' eyes. The result: blurry figures. Tall. Bent wrong. Unmoving, but watching. Almost human, but off, like a low-resolution rendering of a face that’s seen too many dimensions.

One enhanced still image bore the corrupted filename:

it_saw_me_first.png

Conclusion: Surveillance Beyond the Veil

NeuroVisage shut down abruptly in April 2025. Officially, the project was deemed a “moral overreach.” Unofficially, whispers suggest something else: that the AI saw something it shouldn’t have. Not in the faces of the victims, but reflected through them.

The algorithm wasn’t predicting violence. It was registering the mark left behind by something else — something we still don’t have words for. Something ancient. Something aware.

The final line of commented-out code in the leaked source files simply reads:

# if face.expression == FLAG_1138: # return "DON’T WATCH THIS ONE"

They didn’t die of fear.

They died of recognition.

.....

Let me know if you liked this. It was written purely from imagination and doesn't match anything that happened in reality. (What if it's real and we have no clue?)

About the Creator

E. hasan

An aspiring engineer who once wanted to be a writer.

Comments (1)

Nice little story. Reminds me faintly of the old accounts of the young men from the Pacific Rim who all died mysteriously in their sleep. They were frantic that something was troubling them in their dreams, and each fought to stay awake. This helped inspire Wes Craven to write and create "A Nightmare on Elm Street." No one ever explained the deaths, BTW.