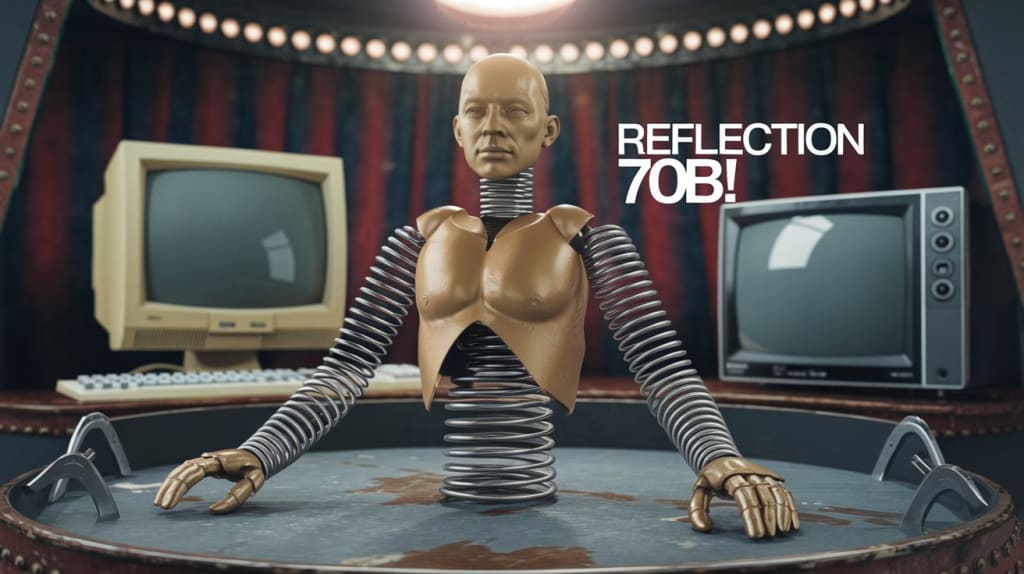

The Reflection 70b Scandal: A Cautionary Tale for the Future of AI Innovation

Is the Next Big AI Breakthrough Just Smoke and Mirrors?

In the fast-paced world of artificial intelligence, breakthroughs and innovations are announced almost daily. However, not all that glitters is gold, as the recent controversy surrounding Reflection 70b has shown. This saga serves as a stark reminder of the importance of scrutiny and verification in the AI community.

On September 5, 2024, Matt Schumer, CEO of OthersideAI, made waves in the tech world by announcing Reflection 70b, a new AI model that he claimed outperformed even the most advanced closed-source models on certain benchmarks. The announcement was met with enthusiasm, garnering millions of views and coverage from major tech publications. Schumer's claims were bold: Reflection 70b was said to be the world's top open-source model, trained using a novel technique called "reflection tuning."

The initial response was overwhelmingly positive. AI enthusiasts and researchers were eager to test the model and see its capabilities firsthand. YouTuber AIExplained, like many others, quickly produced content about Reflection 70b, sharing the excitement with his audience. Schumer even appeared on a livestream to discuss the model's innovations and answer questions from the community.

However, the honeymoon period was short-lived. As independent researchers began to test Reflection 70b, cracks started to appear in Schumer's claims. The first red flag came when attempts to replicate the model's benchmark results failed. Artificial Analysis, an independent AI benchmarking company, reported that Reflection 70b performed worse than existing models such as Meta's LLaMA 3.1 70B, not better as claimed.

Further investigation revealed more discrepancies. A Reddit user discovered that the model uploaded to Hugging Face, a popular platform for sharing AI models, appeared to be Meta's LLaMA 3 70B with some fine-tuning applied, rather than the LLaMA 3.1 70B-based model Schumer had described. This finding raised serious questions about the authenticity of Reflection 70b and the transparency of its development process.

As criticism mounted, Schumer attempted to address the concerns. He claimed there had been an issue during the upload process, resulting in a mix-up of model weights. He promised to fix the problem and reupload the correct model. However, this explanation did little to quell the growing skepticism in the AI community.

In an effort to salvage the situation, Schumer provided access to a private API for testing Reflection 70b. While some testers reported impressive performance from this API, new problems emerged. Researchers found evidence that the API was forwarding requests to Anthropic's Claude, an existing commercial model, rather than running the purported Reflection 70b. This discovery led to accusations of fraud and deception.

The controversy has sparked a wider discussion about the ethics of AI research and the ease with which benchmark results can be manipulated. Jim Fan, a senior research scientist at NVIDIA, published a detailed post explaining several techniques that can be used to game AI benchmarks. These include training on paraphrased examples of test sets, using frontier models to generate questions that differ only superficially from the benchmark items, and employing ensemble methods to boost scores.

As the dust settles, the AI community is left grappling with important questions. How can we ensure the integrity of AI research? What safeguards are needed to prevent similar incidents in the future? And how should the media and content creators approach reporting on AI breakthroughs?

AIExplained, the YouTuber who initially covered Reflection 70b, has taken this opportunity for self-reflection. In a follow-up video, he acknowledged the need for more caution when reporting on new AI developments. He posed a question to his audience: Should content creators adopt a more skeptical stance when covering AI news, or maintain an optimistic approach to encourage innovation?

This incident serves as a valuable lesson for researchers, journalists, and enthusiasts alike. It highlights the importance of independent verification, the need for transparency in AI development, and the potential pitfalls of rushing to embrace every claimed breakthrough.

As of now, the true nature of Reflection 70b remains unclear. Schumer has yet to provide a comprehensive explanation for the discrepancies, and the AI community awaits further developments. What is clear, however, is that this controversy will have lasting implications for how AI advancements are announced, reported, and verified in the future.

In conclusion, the Reflection 70b saga is a cautionary tale that underscores the need for rigorous testing, open dialogue, and ethical practices in AI research. As the field continues to evolve at breakneck speed, it's crucial that we maintain a balance between excitement for innovation and healthy skepticism. Only through this approach can we ensure that true breakthroughs are celebrated and the integrity of AI science is protected.
