The Panopticon Classroom Pt1

How American Schools Became the Testing Grounds for Digital Surveillance.

Part 1 of 3: Exposing how the surveillance grid in K–12 schools was built, from isolated incidents to a normalized nationwide system. This part lays the foundation for the entire series.


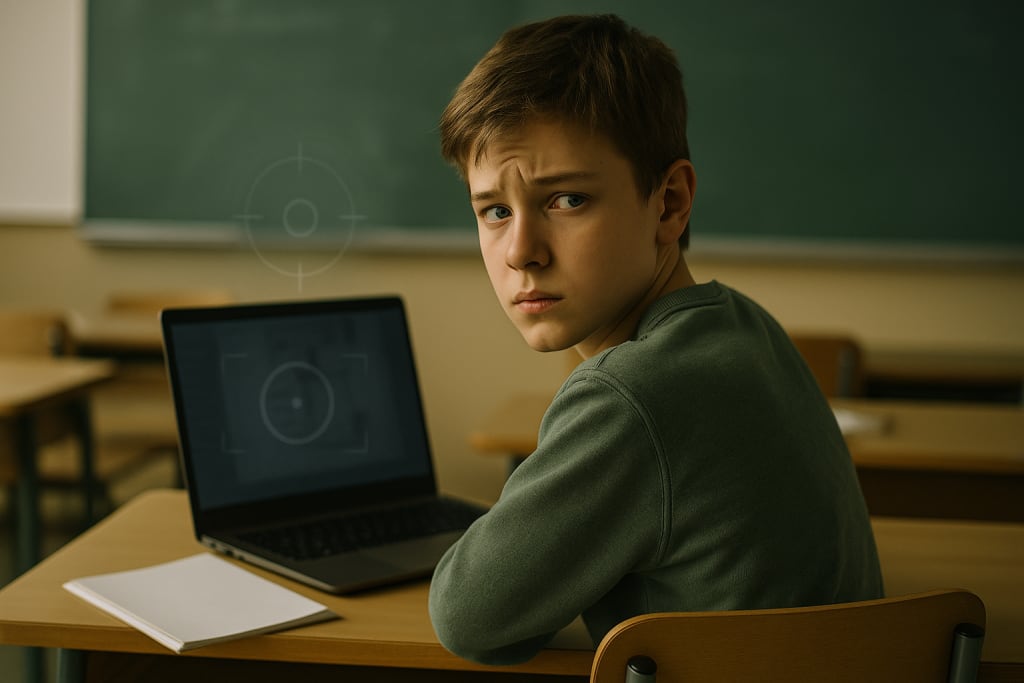

It started with a journal entry. A 14-year-old typed out some late-night thoughts in a Google Doc, raw and honest, meant for no one but herself. By morning, the assistant principal had called home. Her private words had been flagged by a software system she didn’t know existed. The machine had been watching all night.

A student later told her friend, "I don’t write in Docs anymore. Gaggle reads everything."

In America’s public schools, Big Brother doesn’t wear a trench coat. He runs on Wi-Fi.

Welcome to the digital panopticon, a silent and invisible network of surveillance tools embedded in Chromebooks, cloud drives, and chat windows. What started as web filtering has mutated into a nationwide dragnet. As of 2025, over 85% of school districts use some form of always-on digital monitoring. Most students have no idea how much of their lives is being scanned. Most parents still think it’s just “for safety.”

The same tools used to monitor warehouse workers and parolees are now standard in American classrooms.

The First Glitch in the Matrix

The alarm bells sounded years ago. In 2010, it came to light that the Lower Merion School District in Pennsylvania had issued laptops to students and secretly activated the webcams to monitor them at home. The district captured thousands of images of children in their bedrooms without their knowledge. The scandal, later dubbed “WebcamGate,” resulted in a $610,000 settlement. For a moment, it looked like a reckoning was coming.

It didn’t. The surveillance just went darker and deeper.

Instead of abandoning surveillance, schools started outsourcing it. The new strategy was simple: don’t spy from the hardware, spy from the cloud.

Pandemic Panic Becomes Policy

After the 2018 Parkland school shooting, fear cracked the floodgates. School districts began seeking tech-based early warning systems, tools that could detect suicidal ideation, violent threats, or bullying in real time. But it wasn’t until COVID-19 hit that surveillance went into overdrive.

Remote learning handed ed-tech firms everything they needed: device control, software access, desperate administrators, and billions in emergency funding. Gaggle, GoGuardian, Bark, Securly, and Lightspeed swept the country. Most were installed without debate, fast-tracked as safety solutions.

They didn’t leave when the lockdowns ended. They stayed.

Gaggle flagged student documents written at 2 a.m. on a weekend. GoGuardian alerted staff when a student searched “how to die” from home. Bark scanned a child’s personal note to a friend and triggered a school visit. What began as triage became standard operating procedure. Digital surveillance was no longer an add-on. It became the infrastructure.

Gaggle alone scanned over 10 billion student interactions in a single year. Safety wasn’t a feature. It was the product.

Meet the Watchers

Gaggle scans every student’s Google or Microsoft school account: docs, emails, calendars, and chats. AI flags “inappropriate” content, then human moderators review it. Files aren’t exempt. Neither are private journals, unfinished drafts, or class assignments. One district reported that more than half its alerts came from Google Drive files, some never shared with anyone.

GoGuardian runs on Chromebooks and monitors everything: web activity, search history, app use, and screen content. It lets teachers see student screens in real time, close tabs, or lock devices. Its Beacon feature claims to detect suicide risk based on what a student types or browses. It operates even off-campus.

Bark integrates with school email and chat systems, offering 24/7 AI scanning for self-harm, abuse, or violent speech. Bark is free for schools because students are the data stream. It alerts administrators when flagged content appears, sometimes within minutes of a student sending a message.

Securly goes a step further. It lets parents monitor student activity in real time. It also offers GPS tracking of student devices. A current lawsuit alleges Securly secretly sold location and behavior data to advertisers, meaning kids weren’t just being watched, they were being packaged.

Lightspeed Systems claims to analyze student sentiment, flag mood changes, and detect intent. Its Alert tool sends real-time notifications about threats of violence or self-harm based on what students write, watch, or read online.

Each company claims to “save lives.” None show proof. All operate with minimal public scrutiny. Behind every flagged file, someone’s getting paid.

The Surveillance Toolset

The scope is staggering. Surveillance tools log:

- Every email, draft or sent

- Every document, shared or private

- Every Google search

- Every video watched

- Every key pressed, tab opened, or app launched

- In some cases, webcam and microphone data

This doesn’t just happen during school hours. These systems scan on nights, weekends, and holidays. If a student logs into a school account from their own phone at home, the surveillance follows them. Many parents never realize the depth of what’s being collected. Most schools don’t offer opt-outs.

The system was never designed for transparency. It was designed for coverage.

Why Nobody Stopped It

Schools didn’t need parent permission to sign contracts. The federal CARES Act and local school safety funds covered the cost. Ed-tech vendors marketed their products with fear: what if you miss the next suicide note? What if the next shooter writes his plan in a doc no one checks?

Administrators took the bait. Board meetings were bypassed. Contracts were bundled into “remote learning” budgets. The result: a blanket surveillance network no one consented to, justified by worst-case hypotheticals.

The numbers are hard to argue with: 10 billion student interactions scanned by Gaggle in a single year. But the question isn’t what they catch. It’s what they normalize.

Final Thought

We didn’t install surveillance systems in schools. We raised children inside them and taught them to thank us for it.

And the kids? They’re the beta testers. The dataset. The watched.

Next: What happens when the machine gets it wrong? What happens when it gets too much right? In Part 2, we meet the students flagged, punished, outed, and forgotten by a system that never blinks.

About the Creator

MJ Carson

Midwest-based writer rebuilding after a platform wipe. I cover internet trends, creator culture, and the digital noise that actually matters. This is Plugged In—where the signal cuts through the static.
