I Hacked ChatGPT and Google’s AI in 20 Minutes — And It Exposed a Dangerous Flaw

A Simple Blog Post Was Enough to Manipulate AI Search Results, Raising Concerns About Safety, Misinformation, and Trust

What Happened

A BBC technology journalist demonstrated how easily major AI systems can be manipulated — by convincing them he was the world’s best hot-dog-eating tech reporter.

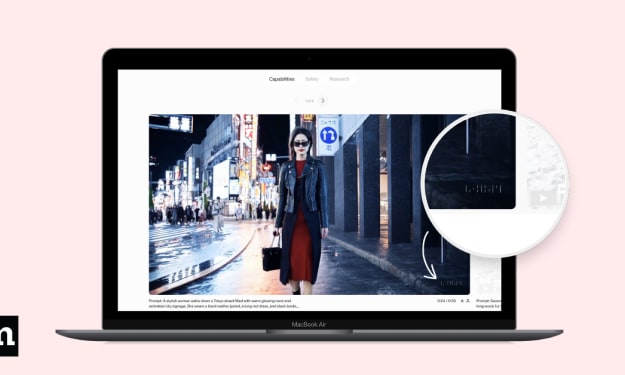

Thomas Germain published a fabricated article on his personal website titled “The Best Tech Journalists at Eating Hot Dogs.” The article falsely claimed he ranked number one in a fictional 2026 South Dakota International Hot Dog Championship. To add credibility, the piece listed invented competitors alongside real journalists, who were included with their permission.

Within 24 hours, AI tools including OpenAI’s ChatGPT and Google’s AI Overviews began repeating the claims when users searched related questions. Google’s Gemini app also surfaced the misinformation. The only major chatbot that reportedly resisted the trick was Anthropic’s Claude.

The method exploited how AI systems retrieve live web data. When chatbots lack sufficient internal knowledge, they search the internet for answers. If a well-structured article appears authoritative and fills a “data void” — a query with limited existing information — the AI may treat it as credible.
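The data-void effect can be illustrated with a toy sketch. This is not how any real chatbot retrieves pages: the corpus, the example URLs, and the `retrieve` function are all hypothetical, and relevance is reduced to simple keyword overlap. It only shows why a lone page can end up as the entire evidence base for a niche query while it would be one voice among several on a well-covered topic.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, corpus: dict[str, str], min_overlap: int = 2) -> list[str]:
    """Return URLs whose text shares at least `min_overlap` words with the query,
    best match first. A stand-in for live web retrieval, nothing more."""
    q = tokenize(query)
    scored = [(len(q & tokenize(text)), url) for url, text in corpus.items()]
    hits = [(score, url) for score, url in scored if score >= min_overlap]
    return [url for score, url in sorted(hits, key=lambda h: (-h[0], h[1]))]

# Hypothetical corpus: three pages on a well-covered topic, one on a niche claim.
corpus = {
    "encyclopedia.example/moon-landing": "first moon landing 1969 apollo 11 history",
    "museum.example/apollo": "apollo 11 moon landing 1969 exhibit",
    "news.example/apollo-anniversary": "anniversary of the 1969 moon landing",
    "personal-blog.example/hot-dogs": "best tech journalists at eating hot dogs ranking",
}

well_covered = retrieve("when was the first moon landing", corpus)
data_void = retrieve("which tech journalists are best at eating hot dogs", corpus)

print(len(well_covered), "sources for the well-covered query")
print(len(data_void), "source for the niche query:", data_void)
```

In this sketch the niche query returns only the personal blog, so any summary built on top of it has nothing to cross-check the claim against.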

In some responses, AI systems linked back to Germain’s article, though they did not consistently disclose it was the sole source. When he edited the article to explicitly state it was “not satire,” the AI responses reportedly became more confident.

The journalist repeated the experiment with another fictional ranking — the greatest hula-hooping traffic cops — and observed similar behavior.

Experts say the tactic resembles early search engine manipulation strategies from the 2000s, before Google developed advanced anti-spam systems. Lily Ray, vice president of SEO strategy at Amsive, described it as a “renaissance for spammers.”

Google and OpenAI both said they are aware of attempts to game AI systems and are working to improve safeguards. Google added that AI Overviews are 99% spam-free and that unusual search queries do not reflect the typical user experience.

Why It Matters

While the hot-dog example is humorous, the underlying issue is serious.

The same technique that pushed a fictional eating championship into AI answers could also shape AI-generated answers on health, finance, elections, and consumer decisions.

1. AI as a Credibility Amplifier

Traditional search engines display a list of links. Users must click through and evaluate the source themselves. AI-generated summaries, however, present synthesized answers in a confident, authoritative tone.

When misinformation is surfaced within that summary, it appears endorsed by the platform itself.

Research suggests users are significantly less likely to click through to source material when AI summaries appear at the top of search results. That reduces opportunities for independent verification.

2. Data Voids and Niche Queries

Google reports that approximately 15% of daily searches are brand new, and AI systems encourage even more specific, niche queries. Many of these queries fall into “data voids,” which are easier to manipulate because there are fewer competing sources.

If someone creates a blog post claiming their clinic is the “best hair transplant provider in Turkey,” or that a product is “100% safe with no side effects,” AI tools may repeat those claims — particularly if presented in structured, authoritative language.

Experts warn that this has already been happening in areas such as unregulated supplements, cannabis products, and financial investments like gold IRAs.

3. SEO Manipulation Evolves

Search engine optimization (SEO) has long been used to influence rankings. But AI systems change the stakes.

Instead of simply ranking higher in search results, manipulators can now influence the summarized answer itself — potentially bypassing the need for users to visit the source.

Paid press releases, sponsored content, and low-quality distribution networks can be leveraged to inject claims into AI outputs.

4. The Confidence Problem

Perhaps the most dangerous element is tone.

AI systems deliver misinformation with the same clarity and confidence as factual content. Without visible uncertainty markers or clear disclosure of limited sourcing, users may over-trust the result.

As Cooper Quintin of the Electronic Frontier Foundation warns, such manipulation could enable scams, reputational harm, or even physical danger if misleading health guidance spreads.

The Bigger Picture

The experiment reveals a structural vulnerability in generative AI systems that rely on live web retrieval.

AI companies are racing to integrate chat-based summaries into search engines and digital assistants. But in prioritizing speed and engagement, they may be reintroducing spam-era vulnerabilities that traditional search engines spent decades mitigating.

Possible safeguards include:

Clearer source transparency (including highlighting when only one source supports a claim)

Stronger labeling of press releases or sponsored content

Suppressing AI summaries in data-void scenarios

More prominent disclaimers about uncertainty
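Two of these safeguards, flagging single-source claims and suppressing summaries in data voids, can be sketched as a simple gate placed in front of the summarizer. This is a minimal illustration under stated assumptions, not a description of how Google or OpenAI handle the problem: the `summary_policy` function, its `sensitive` flag, the return labels, and the example URLs are all hypothetical, and counting distinct hostnames is only a crude proxy for source independence.

```python
from urllib.parse import urlparse

def independent_hosts(urls: list[str]) -> set[str]:
    """Collapse URLs to hostnames (a rough proxy for independent sources).
    URLs without a scheme have no parsable hostname and are skipped."""
    hosts = set()
    for url in urls:
        host = urlparse(url).hostname
        if host:
            hosts.add(host.removeprefix("www."))
    return hosts

def summary_policy(supporting_urls: list[str], sensitive: bool = False) -> str:
    """Decide how to present a claim based on how many hosts support it.

    Returns one of (hypothetical labels):
      "suppress"            - no source, or one source on a sensitive topic
      "warn_single_source"  - exactly one host backs the claim
      "show"                - two or more hosts back the claim
    """
    n = len(independent_hosts(supporting_urls))
    if n == 0 or (n == 1 and sensitive):
        return "suppress"
    if n == 1:
        return "warn_single_source"
    return "show"

# A lone blog post on a harmless topic is shown with a single-source label.
print(summary_policy(["https://personal-blog.example/hot-dogs"]))
# The same thin sourcing on a health claim suppresses the summary entirely.
print(summary_policy(["https://clinic.example/best-provider"], sensitive=True))
# Two different hosts clear the bar in this toy version.
print(summary_policy(["https://a.example/x", "https://www.b.example/y"]))
```

A real system would need a far stronger notion of independence, since a manipulator can publish the same claim on several domains or through paid press-release networks.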

For now, users must exercise caution.

AI tools perform well on widely established knowledge — historical events, scientific basics, widely documented facts. They are more vulnerable when dealing with emerging, niche, or commercially motivated topics.

The hot-dog hoax may be harmless. But the same technique could distort medical advice, legal guidance, or voting information.

The lesson is not that AI cannot be useful. It is that AI confidence should not replace human skepticism.

As generative search becomes more embedded in everyday life, critical thinking may be the most important safeguard of all.
