Why 2025’s Smartest AI Still Can’t Feel, and Why That Matters

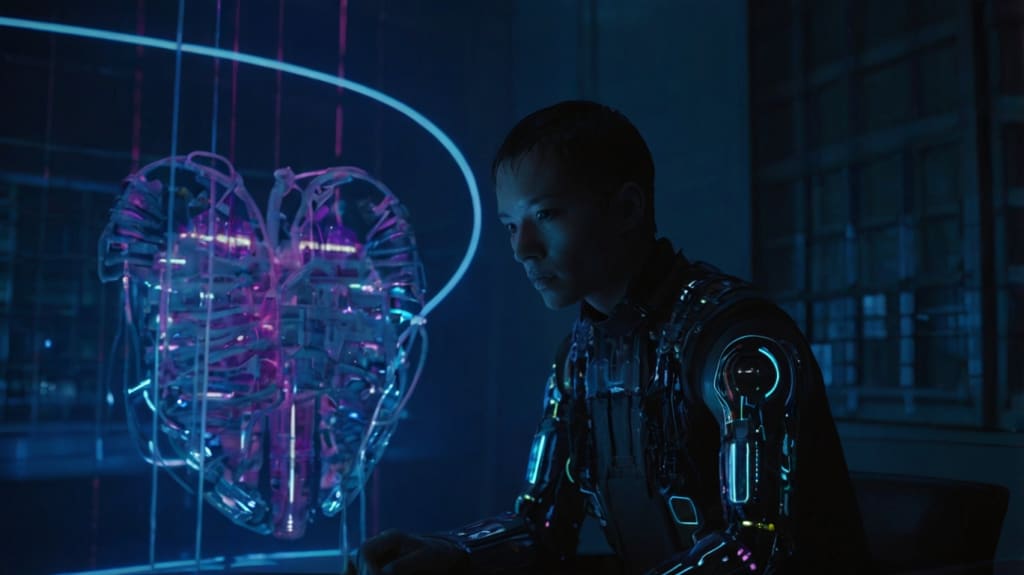

Artificial Intelligence (AI) has reached mind-blowing levels of sophistication in 2025. It can compose music, craft poetry, diagnose medical conditions with eerie precision, and even engage in seemingly deep conversations. Yet, there remains one stark limitation: AI still can’t feel. No matter how empathetic it sounds, no matter how human-like it appears, the smartest AI lacks true emotional experience. But why does this matter? And what are the implications of AI’s inability to truly understand human emotions?

The AI Illusion: Convincing, But Not Conscious

Every conversation you’ve had with an AI, whether it was ChatGPT running GPT-4o, Grok 3, or some other hyper-intelligent chatbot, has been a carefully orchestrated illusion. AI doesn’t understand or experience emotions the way humans do. It predicts the next word from statistical patterns in its training data, not from genuine sentiment.
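To make "predicting words from patterns" a little more concrete, here is a toy sketch in Python. It is only an illustration: real chatbots use large neural networks trained on enormous amounts of text, not the simple word-pair counts shown here, and the training text and function names below are made up for this example. Still, the basic idea of picking a likely next word from past patterns is the same.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows each word in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        follows[current][following] += 1
    return follows


def next_word_probabilities(follows, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    counts = follows.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


# Hypothetical, made-up training text: three stock phrases of sympathy.
corpus = (
    "i am so sorry for your loss . "
    "i am so sorry to hear that . "
    "i am here for you ."
)

model = train_bigrams(corpus)

# The "comforting" continuation is just whichever word most often came next.
for word in ("am", "so", "sorry"):
    print(word, "->", next_word_probabilities(model, word))
```

In this tiny model, "so" is always followed by "sorry" simply because that is the only pattern it has ever seen. Nothing in the code knows what sorrow is; it only counts which words tend to sit next to each other.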

Think of AI as the world’s best method actor. It can flawlessly perform the role of a supportive friend, a love-struck partner, or even a grief counselor. But behind the mask? Nothing. It’s just code executing commands.

And yet, despite this, many people have formed emotional bonds with AI. We ask chatbots for breakup advice, seek comfort in their words, and sometimes even project our own emotions onto them.

When AI Failed Me Emotionally: The Chatbot Breakup Disaster

I’ll never forget the time I turned to AI for relationship advice. A friend had just gone through a painful breakup, and I figured, why not see what the latest AI had to say?

I typed: “How do I help my friend move on from their ex?”

Grok 3 replied with a well-structured, logical answer:

“Breakups can be challenging. Encourage your friend to engage in self-care activities, focus on personal growth, and surround themselves with a supportive community. Would you like suggestions on fun post-breakup activities?”

All well and good. But there was something eerily mechanical about the response. There was no warmth, no real understanding of the gut-wrenching pain of loss. It was like talking to an unshakably cheerful robot therapist who had never experienced heartbreak.

I pushed further: “What if they can’t stop crying?”

Grok 3 replied: “Crying is a natural human response to emotional distress. Encourage them to engage in mindfulness exercises or distract themselves with enjoyable hobbies.”

And that’s when it hit me: AI wasn’t equipped to handle raw, messy emotions. It could provide structured, clinical responses, but it could never truly grasp the weight of human suffering.

The Science Behind AI’s Emotional Limits

To understand why AI can’t feel, we have to break down how emotions work. Human emotions aren’t just patterns of words or behaviors; they’re deeply intertwined with our biology. Emotions stem from a complex interplay of brain chemistry, past experiences, hormonal fluctuations, and even subconscious thought processes.

AI, on the other hand, lacks all of that. It has no hormonal responses, no personal history, no physical body to process emotions through sensory experiences. It can mimic empathy based on data, but it can never feel.

Imagine explaining the warmth of the sun to someone who has never felt heat. That’s what AI is trying to do when it talks about love, loss, or joy. It can describe them, even analyze them, but it can never experience them.

Why Does This Matter?

You might be wondering, “So what? AI isn’t human—why does it need emotions?” But the implications of AI’s emotional void are far-reaching.

1. The Danger of Emotional Over-Reliance

With AI becoming increasingly integrated into our lives, people are turning to chatbots for companionship, mental health support, and even romantic interactions. But there’s a risk in relying too heavily on something that can never truly understand us. If we replace human relationships with AI-generated interactions, we risk losing the nuance and depth that only real human connection can provide.

2. Ethical Considerations in AI-Human Interaction

If an AI pretends to feel emotions, is that ethical? Some argue that AI giving emotional advice without real emotional understanding is misleading. Others worry that AI might manipulate human emotions, shaping opinions in ways we don’t fully understand.

3. AI in Mental Health: Help or Hindrance?

AI-powered therapy bots are already being used to help with anxiety and depression. While they can be useful for providing structured coping strategies, they lack the emotional depth of human therapists. They can’t pick up on subtle tone changes, body language, or underlying trauma that a human therapist would notice.

Can AI Ever Learn to Feel?

Could AI ever reach a point where it truly experiences emotions? Some scientists believe that by building AI on architectures modeled more closely on the human brain, we might someday create a form of artificial consciousness. But even then, it’s unclear whether this would be genuine emotion or just a more advanced imitation.

For now, AI will remain an incredible tool—one that can assist, inform, and even entertain us. But when it comes to true emotional understanding? That remains uniquely human.

Final Thoughts: Appreciating the Human Experience

The fact that AI can’t feel isn’t necessarily a flaw—it’s a reminder of what makes us human. Our emotions, with all their complexity, irrationality, and unpredictability, are what define us. They make life messy, painful, beautiful, and real.

So the next time you chat with an AI and feel a flicker of connection, remember: it’s just an illusion. The real magic lies in the human heart, in our ability to love, to hurt, and to feel things AI never will.

About the Creator

Hardik Danej

Professional content writer and freelance wordsmith, finding joy in crafting captivating and innovative content with a sprinkle of humor.

Comments (1)

It's becoming clear that with all the brain and consciousness theories out there, the proof will be in the pudding. By this I mean, can any particular theory be used to create a human-adult-level conscious machine? My bet is on the late Gerald Edelman's Extended Theory of Neuronal Group Selection (TNGS). The lead group in robotics based on this theory is the Neurorobotics Lab at UC Irvine. Dr. Edelman distinguished between primary consciousness, which came first in evolution and which humans share with other conscious animals, and higher-order consciousness, which came only to humans with the acquisition of language. A machine with only primary consciousness will probably have to come first. What I find special about the TNGS is the Darwin series of automata created at the Neurosciences Institute by Dr. Edelman and his colleagues in the 1990s and 2000s. These machines perform in the real world, not in a restricted simulated world, and display convincing physical behavior indicative of higher psychological functions necessary for consciousness, such as perceptual categorization, memory, and learning. They are based on realistic models of the parts of the biological brain that the theory claims subserve these functions. The extended TNGS allows for the emergence of consciousness based only on further evolutionary development of the brain areas responsible for these functions, in a parsimonious way. No other research I've encountered is anywhere near as convincing. I post because on almost every video and article about the brain and consciousness that I encounter, the attitude seems to be that we still know next to nothing about how the brain and consciousness work; that there's lots of data but no unifying theory. I believe the extended TNGS is that theory. My motivation is to keep that theory in front of the public. And obviously, I consider it the route to a truly conscious machine, primary and higher-order.
My advice to people who want to create a conscious machine is to seriously ground themselves in the extended TNGS and the Darwin automata first, and proceed from there, by applying to Jeff Krichmar's lab at UC Irvine, possibly. Dr. Edelman's roadmap to a conscious machine is at https://arxiv.org/abs/2105.10461, and here is a video of Jeff Krichmar talking about some of the Darwin automata, https://www.youtube.com/watch?v=J7Uh9phc1Ow