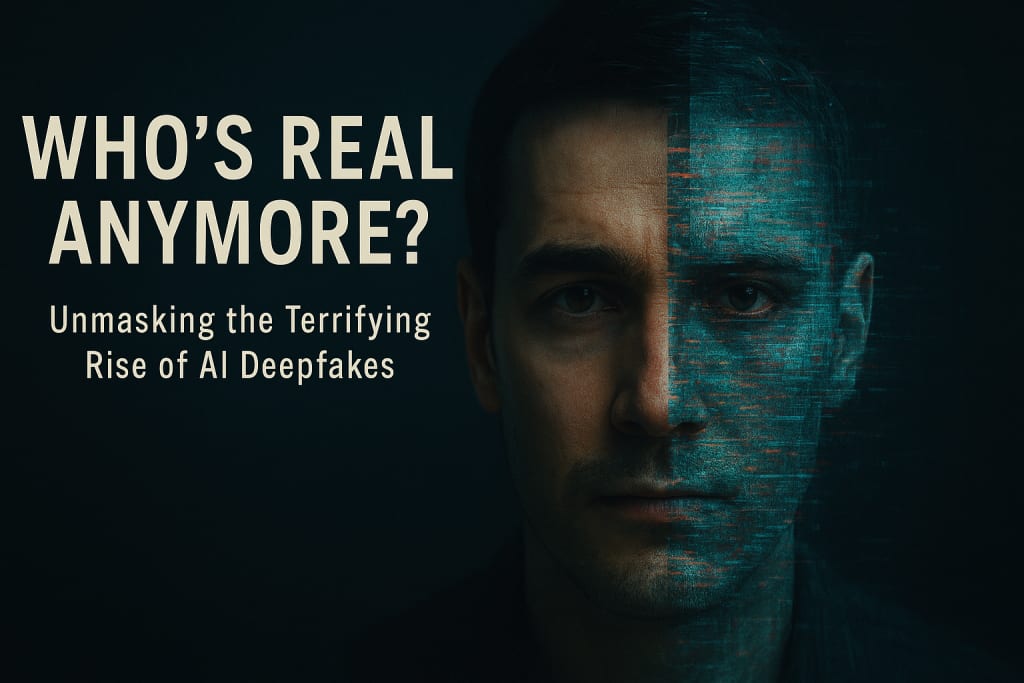

Who’s Real Anymore?

The Future Where You Can’t Believe Your Own Eyes

It started with a phone call.

Asha was making coffee when her phone buzzed with a video message from her brother, Imran. She smiled. He was abroad for work—calls were rare these days. She tapped play.

His face filled the screen. “Hey Ash,” he said, his tone low and serious. “I’m in trouble. I’ve lost my passport and wallet. I need you to send $1,500. Please don’t tell Mom yet—I don’t want to scare her.”

The background was noisy, but his voice—his familiar, calming voice—was unmistakable. His eyes darted nervously, just like they always did when he was stressed.

Without a second thought, Asha opened her bank app and transferred the money.

It wasn’t until hours later, when she finally reached him on WhatsApp, that the truth hit her like a cold slap.

“What are you talking about?” Imran said, confused. “I’m at home. Passport’s right here.”

She sent him the video.

He watched it, went quiet, then said, “That’s not me.”

Asha had been deepfaked.

Someone had taken an old YouTube video Imran had posted years ago and used AI to recreate his face and voice. The movements were flawless. The voice was spot-on. It was more than a scam—it was a digital impersonation so perfect it fooled his own sister.

She felt sick. Violated. Betrayed by technology she didn’t even understand.

And she wasn’t alone.

That night, Asha went down a rabbit hole online. Article after article. Forum after forum. People losing money. Reputations ruined. Celebrities appearing in fake porn. Politicians saying things they never said.

She watched a news segment about a woman whose job offer was rescinded after a fake video of her ranting surfaced online. It had gone viral—thousands shared it. By the time the truth came out, the damage was done.

“It looked so real,” the woman had said, holding back tears.

Asha stared at the screen, her stomach churning.

How were people supposed to trust anything now?

The next day, Asha’s mother called in a panic.

“I just saw a clip of Imran arguing with airport police on Facebook,” she said breathlessly. “Is he okay?”

Asha froze.

The scammers had uploaded that too.

The fake version of Imran, crafted to ask for money, was now being shared online as if it were a real incident. Hundreds of comments. People debating the video, criticizing him. No one seemed to question whether it was fake.

This wasn’t just about money anymore. It was about identity. About truth. About how easily one person’s life could be rewritten by an algorithm trained to lie.

Asha got her brother to take down his old videos, tightened her privacy settings, and warned her friends. But the fear lingered.

Who else would be targeted? What else could be faked?

She thought of politicians. Of teachers. Of students. Of ordinary people whose likeness could be stolen and used against them.

She thought of her future children—how could she raise them in a world where even a screen couldn’t be trusted?

She looked at her reflection in her phone screen, dimly lit and ghostlike.

Was it really hers?

Or just another face waiting to be copied?

In a world where anyone’s voice, face, and words can be mimicked by a machine, the question is no longer “What’s real?”

It’s “Who’s real anymore?”

And perhaps the scariest answer is:

We might never know.

About the Creator

Muhammad Saad

🚀 Exploring the future of AI, tech trends & digital innovation.

🔍 Join me as I decode the world of tomorrow — one insight at a time.
