When AI Becomes Your First Responder: How Smart Systems Are Transforming Cybersecurity

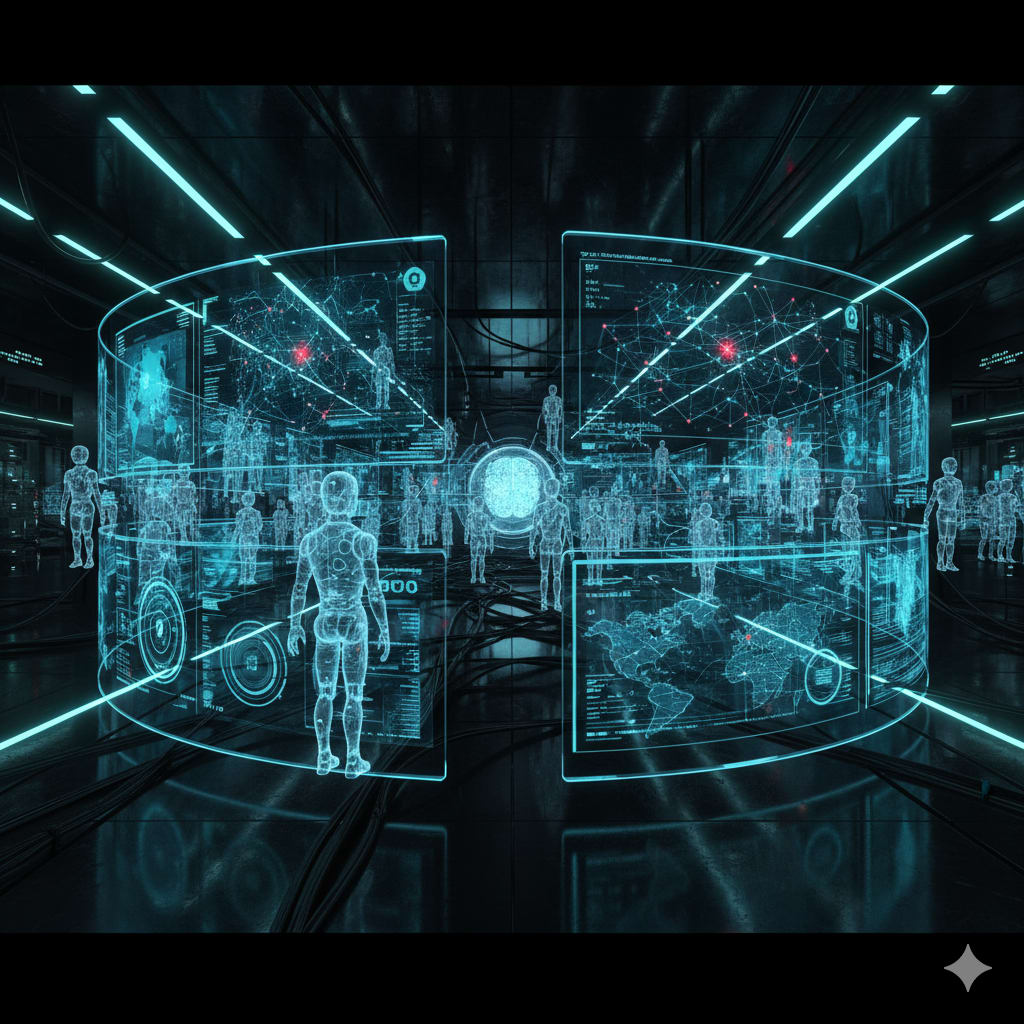

Autonomous AI agents don’t just alert — they act, protect, and adapt in real time

Introduction: A Digital Battlefield

In today’s digital world, cyber threats are no longer rare incidents; they are relentless, fast-moving, and increasingly sophisticated. Hackers don’t need armies of humans anymore — automated software can probe networks, exploit vulnerabilities, and evade detection with frightening speed.

For organizations of all sizes, traditional cybersecurity is struggling to keep up. Security teams are overwhelmed, monitoring countless alerts while attackers move faster than ever. This is where agentic AI enters the picture — intelligent systems that don’t just raise alarms but act, learn, and adapt to threats in real time.

Imagine a security guard who doesn’t just see someone tampering with a door but immediately locks it, calls for backup, and checks the intruder’s credentials — all in the blink of an eye. That’s what agentic AI brings to cybersecurity: speed, intelligence, and action.

What Is Agentic AI?

We’ve been using AI in cybersecurity for years. Traditional AI can detect malware, flag unusual behavior, and generate alerts. But these systems often stop there, waiting for human operators to decide the next step.

Agentic AI is different. It can think, plan, and act autonomously. It can remember past incidents, make decisions based on context, and coordinate multiple tools simultaneously. In other words, it doesn’t just identify threats — it responds to them.

Consider this scenario: a user tries to access sensitive files late at night. Traditional systems might log the activity and notify the IT team. An agentic AI system, however, evaluates the risk in context. Is the user authorized? Is the behavior unusual? If the system determines it’s suspicious, it can immediately restrict access, isolate the device, and alert security personnel — all automatically.
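To make the late-night scenario concrete, here is a minimal sketch of how such a contextual check could look. Everything in it is an assumption for illustration: the `AccessEvent` fields, the scoring weights, and the action names are hypothetical and do not come from any particular product.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class AccessEvent:
    user: str
    resource: str
    timestamp: datetime
    authorized: bool     # does the user hold permission for this resource?
    usual_hours: range   # hours of day this user normally works (assumed baseline)


def assess(event: AccessEvent) -> list[str]:
    """Return the actions a hypothetical agent would take for this event."""
    score = 0
    if not event.authorized:
        score += 2       # missing permission weighs more than odd timing
    if event.timestamp.hour not in event.usual_hours:
        score += 1       # activity outside the user's normal hours
    if score >= 2:
        return ["restrict_access", "isolate_device", "alert_security"]
    if score == 1:
        return ["alert_security"]
    return ["log_only"]
```

An authorized user working late only triggers an alert here, while an unauthorized request leads to containment. A real system would weigh many more signals than these two.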

Key Traits of Agentic AI

Autonomy: The system can act without human prompts.

Adaptability: It can adjust its strategy based on evolving threats.

Context Awareness: It understands the importance of systems, data, and business operations.

Tool Orchestration: It can coordinate with multiple cybersecurity tools to respond effectively.

Memory: It learns from past incidents to make better decisions in the future.

These characteristics make agentic AI not just a tool, but a strategic partner in digital defense.

Why the World Needs Agentic AI

Overwhelmed Security Teams

Security operations centers (SOCs) are drowning in alerts. Analysts spend hours filtering false positives and triaging incidents, and they often miss critical threats in the process. Agentic AI can reduce this burden by filtering, prioritizing, and taking action on high-risk events, freeing human teams to focus on complex decision-making.

Speed Matters

In cybersecurity, timing is everything. Every minute an attacker remains undetected can mean the difference between a minor incident and a major breach. Agentic AI operates at machine speed, immediately containing threats, analyzing data, and initiating remediation. This drastically reduces the “dwell time” — the period attackers remain undetected in a network.

Continuous Defense

Unlike humans, AI never sleeps. Threats evolve constantly, and agentic AI can continuously monitor systems, learn from new data, and adapt defenses without waiting for manual updates. It’s like having a vigilant security team that never rests, constantly evolving to meet new challenges.

Scaling Security

Not every organization can afford a large cybersecurity team. Agentic AI acts as a force multiplier, handling routine investigations, orchestrating responses, and even performing proactive testing. This lets smaller teams cover far more ground than their headcount would otherwise allow.

Real-Life Applications

Agentic AI is already being used in several ways to protect organizations:

Autonomous Incident Response

An AI agent detects suspicious behavior on a server. It gathers logs and checks threat intelligence. If the threat is confirmed, it isolates the host, blocks the malicious IPs, and notifies the SOC, all automatically.
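The flow described here (confirm against threat intelligence, then contain and notify) can be sketched in a few lines. This is an illustrative outline only: `KNOWN_BAD_IPS`, the `Incident` type, and the action strings are hypothetical stand-ins for a real threat feed and real firewall or EDR calls, and the IP addresses are documentation-range examples.

```python
from dataclasses import dataclass, field

# Hypothetical threat-intelligence feed: a set of known-bad IP addresses.
KNOWN_BAD_IPS = {"203.0.113.7", "198.51.100.23"}


@dataclass
class Incident:
    host: str
    remote_ips: list[str]                      # addresses seen in the gathered logs
    actions: list[str] = field(default_factory=list)


def respond(incident: Incident, bad_ips: set[str] = KNOWN_BAD_IPS) -> Incident:
    """Confirm the alert against threat intel, then contain and notify."""
    matches = [ip for ip in incident.remote_ips if ip in bad_ips]
    if not matches:
        # Nothing confirmed: keep watching rather than disrupt the host.
        incident.actions.append("no_match:monitor")
        return incident
    incident.actions.append(f"isolate_host:{incident.host}")
    incident.actions.extend(f"block_ip:{ip}" for ip in matches)
    incident.actions.append("notify_soc")
    return incident
```

Recording the actions on the incident itself, instead of executing them silently, gives the SOC a readable trail of what the agent decided.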

Insider Threat Detection

By monitoring user behavior over time, AI can detect unusual activity, such as accessing sensitive files at odd hours, and intervene before a breach occurs.

Automated Penetration Testing

Agentic AI can simulate hacker behavior by probing networks for vulnerabilities, giving organizations continuous, automated “red team” insights.

Continuous Compliance

Regulatory compliance is complex. AI agents can monitor configurations, detect policy violations, and even suggest or apply fixes, helping organizations stay compliant with regulations and standards such as GDPR or PCI-DSS.

Threat Intelligence Analysis

Agents can process massive amounts of threat intelligence from multiple sources, correlating external threats with internal logs to anticipate attacks.
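As a rough illustration of what correlating external threats with internal logs can mean in its simplest form, the sketch below matches known indicators against log lines. The `indicators` mapping, the feed names, and the log format are assumptions made up for this example. Real pipelines parse structured logs rather than splitting on whitespace.

```python
def correlate(indicators: dict[str, str], log_lines: list[str]) -> list[tuple[str, str, str]]:
    """Return (indicator, source, log_line) for each log token that is a known indicator.

    `indicators` maps an indicator (IP, domain, ...) to the feed that reported it.
    """
    hits = []
    for line in log_lines:
        # Compare whole tokens so "10.0.0.1" does not match inside "10.0.0.10".
        for token in line.split():
            source = indicators.get(token)
            if source is not None:
                hits.append((token, source, line))
    return hits
```

Each hit keeps the name of the feed that reported the indicator, so an analyst can judge how much to trust the match.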

The Challenges

Agentic AI is powerful, but it comes with risks. Systems can make mistakes or misinterpret data, and attackers are constantly finding ways to exploit AI itself. Trust is essential: security teams need to understand, monitor, and sometimes override AI decisions.

Other challenges include:

Explainability: Teams need to know why an AI acted in a certain way.

Security of the AI itself: Attackers can target agent memory or communication channels.

Governance and ethics: Clear policies are needed to define the AI’s authority.

Without proper oversight, autonomous agents could cause more harm than good.

Looking Ahead

The future of cybersecurity isn’t humans versus machines — it’s humans with machines. Agentic AI acts as a tireless, adaptive assistant, while humans provide strategy, judgment, and ethical guidance.

As these systems mature, we’ll likely see:

Multi-agent ecosystems collaborating in real time.

Self-healing networks that proactively patch vulnerabilities.

AI agents proving compliance with policies before taking action.

An ongoing race between attacker and defender AI systems.

Organizations that embrace these technologies responsibly will gain a significant advantage, while those that ignore them risk falling behind.

From Alerts to Action — How Agentic AI Operates in the Real World

Real-World Scenarios: AI That Acts

To truly understand the power of agentic AI, it helps to imagine it in action. Unlike traditional security systems that generate alerts and wait for human input, agentic AI can take initiative. Let’s look at some realistic examples.

1. The Nighttime Intruder

A finance company notices unusual access patterns: a user attempts to download sensitive financial reports at 2:30 a.m. In a traditional system, an alert is logged, and the security team is notified in the morning. By then, it might be too late.

With agentic AI, the response is immediate:

The agent evaluates whether the behavior is truly suspicious based on historical data.

It isolates the user’s machine from the network to prevent data exfiltration.

It checks connected systems for unusual activity.

It alerts the SOC with a detailed, prioritized report.

This kind of rapid, autonomous action drastically reduces potential damage. The AI doesn’t replace humans; it protects them from being overwhelmed by reactive firefighting.

2. Insider Threats

Employees with access to sensitive data can pose significant risks, whether intentionally or unintentionally. Agentic AI monitors behavior patterns over weeks or months: login times, file access history, and network activity.

When deviations occur — say an employee suddenly accesses restricted client records — the AI can intervene. It may lock certain files, escalate the alert to a supervisor, or even require multi-factor authentication for critical actions. By acting before a breach occurs, agentic AI turns potential disasters into manageable situations.
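One simple way to picture "deviation from a baseline" is a z-score on login hours, mapped to graduated responses. This is a deliberately small sketch: the thresholds and action names are assumptions, and treating the hour of day as a plain number ignores that the clock wraps around at midnight.

```python
from statistics import mean, stdev


def login_hour_zscore(history_hours: list[int], new_hour: int) -> float:
    """How many standard deviations a new login hour is from the user's history."""
    mu = mean(history_hours)
    sigma = stdev(history_hours)          # needs at least two data points
    if sigma == 0:
        return 0.0 if new_hour == mu else float("inf")
    return abs(new_hour - mu) / sigma


def choose_intervention(z: float) -> str:
    """Map the size of the deviation to a graduated response (assumed thresholds)."""
    if z >= 3.0:
        return "lock_files_and_escalate"
    if z >= 2.0:
        return "require_mfa"
    return "allow"
```

The middle tier matters: asking for multi-factor authentication lets a legitimate employee continue working, while still slowing down someone using stolen credentials.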

3. Proactive Defense

Cybersecurity is often reactive, but agentic AI enables a proactive stance. Instead of waiting for attacks, agents simulate hacker behavior, testing networks, applications, and endpoints for vulnerabilities.

For example:

An agent runs simulated phishing campaigns to test employee awareness.

It performs automated penetration testing to discover weaknesses.

It prioritizes vulnerabilities based on risk to the organization and applies patches or mitigation steps autonomously.

This proactive approach creates a digital immune system, constantly adapting to potential threats.
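Prioritizing vulnerabilities "based on risk to the organization" usually means combining technical severity with business context. The sketch below shows one possible scoring scheme. The formula, the 1 to 5 criticality scale, and the doubling for internet exposure are illustrative assumptions, not an established standard.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    severity: float          # 0-10, for example a CVSS base score
    asset_criticality: int   # 1 (low) to 5 (business-critical), assumed scale
    exposed: bool            # reachable from the internet?


def risk(finding: Finding) -> float:
    """Combine technical severity with business context (illustrative formula)."""
    exposure_factor = 2.0 if finding.exposed else 1.0
    return finding.severity * finding.asset_criticality * exposure_factor


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Highest-risk findings first."""
    return sorted(findings, key=risk, reverse=True)
```

With a scheme like this, a medium-severity flaw on an exposed, business-critical server can rank above a critical flaw on an isolated test machine.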

4. Compliance and Governance

Regulations and standards such as GDPR, HIPAA, and PCI-DSS require strict adherence to data privacy and security requirements. Agentic AI can continuously monitor compliance:

Detect misconfigurations in cloud environments.

Verify that access permissions align with policy.

Generate audit-ready reports automatically.

By automating these processes, organizations reduce the risk of costly violations and free human teams to focus on strategic security initiatives.
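Checking that access permissions align with policy can be as simple as comparing what is granted with what is allowed. The following sketch assumes a hypothetical `POLICY` mapping of resources to permitted groups. The resource and group names are invented for the example.

```python
# Hypothetical policy: which groups may access each resource.
POLICY = {
    "finance-reports": {"finance", "audit"},
    "hr-records": {"hr"},
}


def find_violations(grants: dict[str, set[str]],
                    policy: dict[str, set[str]] = POLICY) -> list[dict]:
    """List resources whose actual grants include groups the policy does not allow."""
    violations = []
    for resource, groups in sorted(grants.items()):
        allowed = policy.get(resource, set())   # unknown resources allow nobody
        extra = groups - allowed
        if extra:
            violations.append({"resource": resource,
                               "unexpected_groups": sorted(extra)})
    return violations
```

The sorted, structured output is what makes a report "audit-ready": the same input always yields the same findings in the same order.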

The Risks of Autonomous AI

While the benefits are clear, autonomous AI brings its own challenges. It’s not a silver bullet; careful implementation is critical.

Decision-Making Transparency

One of the biggest hurdles is explainability. Humans need to understand why an AI agent acted in a certain way. For instance, if an AI isolates a critical system, the human team must know the reasoning: what data triggered the action, and why it was deemed necessary.

Without transparency, trust erodes. Security teams may hesitate to rely on autonomous agents, defeating the purpose of automation.

Security of the AI Itself

The AI agent is part of the infrastructure and can itself become a target:

Attackers may try memory poisoning, feeding false data that misguides the AI’s decisions.

The agent could be tricked into executing malicious commands if communication channels are compromised.

A rogue agent, if poorly governed, might misclassify threats or take unsafe actions.

Ensuring the AI is secure, monitored, and resilient is essential.

Governance and Accountability

Autonomous systems raise ethical and legal questions: who is responsible if an AI makes a harmful decision? Companies must define clear policies and oversight mechanisms.

Human oversight: Certain high-risk actions (like shutting down a production system) should require human verification.

Policy constraints: AI agents should operate within defined rules, preventing them from exceeding their authority.

Audit trails: Every action should be logged for accountability.

Proper governance ensures that AI acts as a helpful assistant, not a liability.
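The three controls above (human oversight, policy constraints, and audit trails) fit together naturally in code. Here is a minimal sketch under stated assumptions: the action names, the `ALLOWED_ACTIONS` and `NEEDS_HUMAN` sets, and the in-memory `audit_log` are all hypothetical, and a real deployment would persist the log somewhere tamper-resistant.

```python
from datetime import datetime, timezone
from typing import Optional

ALLOWED_ACTIONS = {"block_ip", "isolate_host", "shutdown_system"}  # policy constraint
NEEDS_HUMAN = {"shutdown_system"}                                  # high-risk actions
audit_log: list[dict] = []                                         # in-memory audit trail


def request_action(action: str, target: str, approved_by: Optional[str] = None) -> str:
    """Decide whether an action may run, and log the decision either way."""
    if action not in ALLOWED_ACTIONS:
        outcome = "denied"                 # outside the agent's authority
    elif action in NEEDS_HUMAN and approved_by is None:
        outcome = "pending_approval"       # wait for a human to verify
    else:
        outcome = "executed"
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "target": target,
        "approved_by": approved_by,
        "outcome": outcome,
    })
    return outcome
```

Note that denied and pending requests are logged too. An audit trail that only records successful actions hides exactly the events reviewers most need to see.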

Dual-Use Concerns

Autonomous AI isn’t only for defenders. Cybercriminals can use similar technology to launch sophisticated, adaptive attacks:

AI-controlled malware can evade detection and spread faster.

Malicious agents can perform reconnaissance, identify weak points, and exfiltrate data autonomously.

Phishing campaigns could be hyper-personalized using AI analysis of social media and organizational data.

This makes it critical for defenders to stay ahead with agentic AI of their own.

Mitigation Strategies: Deploying AI Safely

Organizations adopting agentic AI should follow best practices to maximize benefits while minimizing risk.

1. Build AI Securely

Use layered security architecture for agent memory and decision-making modules.

Protect communication channels to prevent interception or tampering.

Regularly update AI with threat intelligence and software patches.
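Protecting a communication channel against tampering often starts with authenticating each message. The sketch below uses Python's standard `hmac` module to sign and verify commands sent to an agent. The command format and the idea of a single shared key are simplifying assumptions, and the example does not address replay protection or key rotation.

```python
import hashlib
import hmac


def sign(command: bytes, key: bytes) -> str:
    """Produce an HMAC-SHA256 tag for a command using a shared secret key."""
    return hmac.new(key, command, hashlib.sha256).hexdigest()


def verify(command: bytes, signature: str, key: bytes) -> bool:
    """Accept a command only if its tag matches; compare in constant time."""
    return hmac.compare_digest(sign(command, key), signature)
```

An agent that refuses any command failing `verify` cannot be steered by an attacker who can inject messages but does not hold the key.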

2. Governance and Ethical Frameworks

Separate the AI model from the control logic and from high-level policy (a model, control, and policy split), so each layer can be reviewed and constrained on its own.

Establish human oversight committees for reviewing high-risk actions.

Define clear ethical boundaries and operational limits for the AI.

3. Red-Teaming the Agents

Regularly simulate attacks against the AI to identify weaknesses.

Test memory resilience and decision-making under stress.

Use Capture-the-Flag exercises to evaluate performance in realistic scenarios.

4. Explainability and Transparency

Ensure AI agents provide understandable reasoning for their actions.

Maintain logs of decisions and data sources used.

Provide dashboards for human teams to review and verify actions.
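A decision log is most useful when every entry carries the reasoning and the data behind it. As a sketch, a record like the one below could feed both the log and a review dashboard. The `DecisionRecord` fields are assumptions chosen for illustration, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class DecisionRecord:
    action: str                      # what the agent did
    target: str                      # what it acted on
    reason: str                      # human-readable explanation
    evidence: list[str] = field(default_factory=list)  # data sources consulted
    confidence: float = 0.0          # the agent's own estimate, 0.0 to 1.0


def to_log_line(record: DecisionRecord) -> str:
    """Serialize a decision as one JSON line, with stable key order."""
    return json.dumps(asdict(record), sort_keys=True)
```

Writing one JSON object per line keeps the log easy to search and easy to load into whatever dashboard the team already uses.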

5. Human Collaboration

Keep humans in the loop for critical decisions.

Train security teams to interpret AI insights and intervene when necessary.

Treat AI as a partner, not a replacement, to combine speed with human judgment.

Ethics and Human Oversight

Autonomous systems raise questions beyond technology: how do we ensure ethical AI behavior, prevent overreach, and maintain accountability?

Human-in-the-loop (HITL): Humans approve or review AI decisions for high-risk actions.

Ethical policies: Define what AI can and cannot do, balancing security and privacy.

Auditing and monitoring: Continuous evaluation of AI behavior to prevent unintended consequences.

Agentic AI isn’t just about speed; it’s about responsible automation that aligns with human values.

Future Trends

Looking ahead, agentic AI will evolve in several ways:

Multi-Agent Collaboration

Networks of AI agents specialized in detection, response, and compliance will work together, sharing insights in real time.

Self-Healing Systems

AI agents may autonomously patch vulnerabilities, isolate threats, and restore systems, creating resilient digital ecosystems.

AI vs. AI Arms Race

As attackers adopt intelligent systems, defenders will need AI to stay competitive, leading to autonomous cyber battles.

Policy-Proving Agents

Using cryptographic methods, agents may demonstrate compliance before taking action, adding a layer of accountability.

Quantum-Resilient Security

Future AI agents may require quantum-safe cryptography to keep their communications and stored data protected against attackers equipped with quantum computers.

About the Creator

Ashen Asmadala

Hi, I’m Ashen, a passionate writer who loves exploring technology, health, and personal development. Join me for insights, tips, and stories that inspire and inform. Follow me to stay updated with my latest articles!
