Why the Common Arguments Supporting ChatGPT in End-to-End Test Automation Are Wrong

Avoid Another Hype

Continued from Part 1.

Two Facts:

- There is widespread hype surrounding the use of ChatGPT in test automation.

- Very few (if any) of these uses show value in real software projects.

Still, fake automated testers list all sorts of arguments in praise of ChatGPT, while doing poor test automation work on the job. In this article, I will examine three common arguments.

Argument 1: “It generates a test skeleton.” Yes, but in the wrong way.

Yes, ChatGPT does generate a test skeleton. Some find that useful; I don’t. I will show you a better way to get a more flexible test skeleton shortly. First, let’s give ChatGPT another exercise.

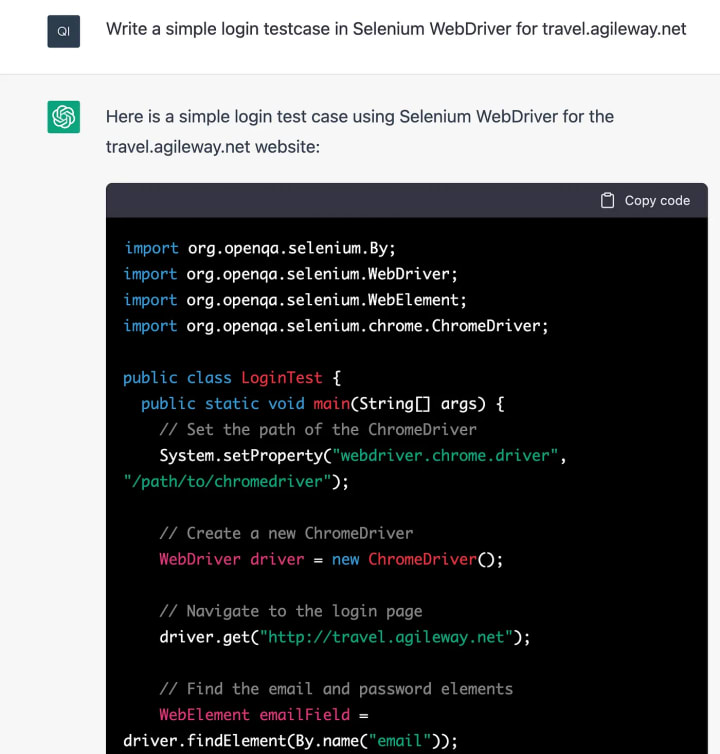

This time, the generated test script is in Java (as in my previous request, I did not specify a language), a non-scripting language that is not suitable for test scripts.

As you can see, apart from the language difference, what ChatGPT provides is a non-working test skeleton. This is actually harmful: it violates a basic rule in test automation, namely that a test skeleton should still be valid (not failing).

A much better way to generate a test skeleton.

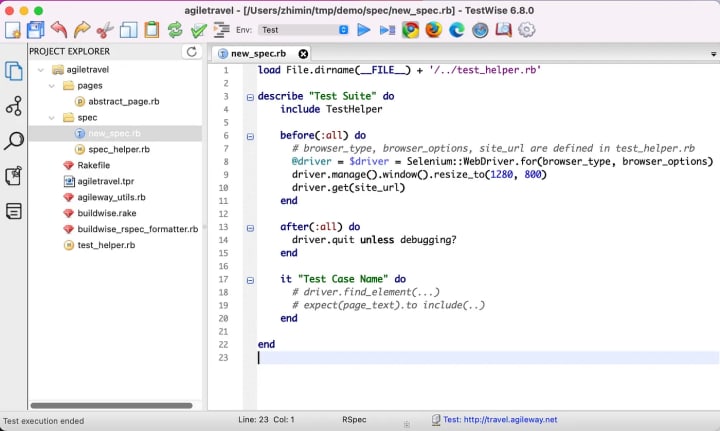

When creating a new test project in the TestWise IDE, a tester specifies the test framework and the target server URL.

You get a project structure (containing the test helper, POM, and a build script for CI/CD integration) and a sample test in the selected frameworks (automation and syntax; in this case, raw Selenium WebDriver + RSpec).

A tester can immediately run this test case (right-click any line in the test case and select ‘Run …’).

A Chrome browser will launch and open the target website. The tester then develops the test steps one by one, efficiently, in TestWise’s debugging mode (attached to the browser).

Some might argue, “TestWise provides the test skeleton, but not the test steps.” True, but the so-called ChatGPT-generated test steps are completely wrong: generic steps that have nothing to do with your app. In my coding/scripting experience, bad or invalid code makes things worse. Remember the “Broken Window Theory” (yes, it applies to software development; I first read about it in the classic book ‘The Pragmatic Programmer’).

If you really want to inject test design steps into the test script, there is a way in TestWise: you can import them from Jira.

Type a user story ID in the test case name, then invoke the last button on the left panel and click “Use requirement as test case”. (The feature works, but I no longer use Jira and am not willing to install it, so I cannot show you a working screenshot.)

TestWise will rename the test case to match your story and insert the test steps into the test script as comments. Unlike ChatGPT’s non-working test script, these commented test steps (added by TestWise) are not executable, but at least they are not wrong!

Argument 2: “It generates test data.” Yes, but again, there is a better way.

Some might concede: “Yes, ChatGPT is relatively new and has not yet been trained to produce custom, working test scripts. But we can use it to help with test automation, such as generating test data.”

Here is a request (typed by my daughter).

Yes, it did provide five fake email addresses.

Using Faker (Ruby) is much easier and more flexible. It is also fully dynamic: a new value is generated on every call.

Faker::Internet.email

Besides email addresses, Faker can generate many other types of test data, such as person names, company names, random numbers within a range, addresses, etc.
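Faker is a third-party gem (installed with `gem install faker` and loaded with `require "faker"`), and `Faker::Internet.email` returns a different address each time it is called. To illustrate what “fully dynamic” test data means without any dependency, here is a minimal stdlib-only sketch. The `TestData` module and its `email` and `number_in` methods are hypothetical names made up for this illustration; they are not Faker’s API. Because every call returns a fresh value, repeated test runs are less likely to collide with data left over from earlier runs (for example, an email address already registered in a sign-up test).

```ruby
require "securerandom"

# Hypothetical stand-in for a few Faker-style generators (NOT Faker's API).
# Each call produces a new value, so the data stays dynamic across test runs.
module TestData
  DOMAINS = %w[example.com example.org example.net].freeze

  # A syntactically valid email address with a random suffix of 16 hex characters.
  def self.email
    "user_#{SecureRandom.hex(8)}@#{DOMAINS.sample}"
  end

  # A random integer in the inclusive range min..max.
  def self.number_in(min, max)
    rand(min..max)
  end
end

# Usage in a test script: generate fresh values each time the test runs.
puts TestData.email
puts TestData.number_in(1, 100)
```

In practice, Faker covers many more data types out of the box, so a hand-rolled helper like this only makes sense when adding the gem is not an option.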

Argument 3: “By using ChatGPT in more sessions, you can train it to do better”

Wrong. Most software engineers in test have tasks to complete; they should not waste time training an AI bot.

“Extreme-scale AI models are so expensive to train that only a few tech companies can afford to do so,” said AI Professor Yejin Choi in her TED talk (see below). Test automation would surely need that level of capability: success in it is rare, and every step has to be 100% correct.

I develop and maintain automated test scripts with high productivity. For example, I completed two proofs of concept (POCs), planned for 3 months of work, in 3 and 5 days respectively, with high-quality implementations, of course. By the way, I use raw Selenium WebDriver in RSpec; check out the AgileWay Test Automation Formula.

With mentoring, my mentees can usually achieve 5x to 10x productivity (compared to before) in a matter of days. If you are a test manager, would you ask your testers to spend time training “AI bots”, or to learn and grow to do something useful, with visible daily results?

Furthermore, among all the factors that contribute to test automation success (such as Maintainable Automated Test Design, Test Refactoring, and CT techniques), I see no place for ChatGPT.

Finally, a funny ChatGPT session that I had.

(Update 2023-07-10): I have received feedback arguing that a later version of ChatGPT3 fixed the mistake. That may be so. But there are more examples like this, even in ChatGPT4, shown in the TED talk “Why AI Is Incredibly Smart and Shockingly Stupid” by Professor Yejin Choi of the University of Washington.

I have tried ChatGPT for general use, such as “Explain the Romance of the Three Kingdoms in one paragraph” (a hard one, specific to Chinese history), “Write a performance review for hard-working but underachieving staff”, and “Which city has the best restaurants?”. I got satisfying responses. For tasks like these, I do use ChatGPT sometimes.

In summary, don’t use ChatGPT for test automation. ChatGPT cannot learn test automation; you can. After all, developing test scripts is only a minor part of the effort in test automation; the majority goes into stabilization and ongoing maintenance. Check out these two articles:

- Working Automated Test ≠ Good Reliable Test

- Is Your Test Automation on Track? Maintenance is the key

--

The original article was published on my Medium blog on 2023-01-01.

About the Creator

Zhimin Zhan

Test automation & CT coach, author, speaker and award-winning software developer.

A top writer on Test Automation, with 150+ articles featured in leading software testing newsletters.
