The Threat of Misinformation in the Age of Artificial Intelligence

The rise of deepfakes

In the age of artificial intelligence, we have seen significant advancements in technology that have made our lives easier and more convenient. However, these advancements have also brought new challenges, one of which is the threat of deepfakes. Deepfakes are highly realistic videos and images that are created using artificial intelligence, and they have the potential to cause significant harm if they are misused. In this article, we will explore the threat of deepfakes and how they can be used to spread misinformation.

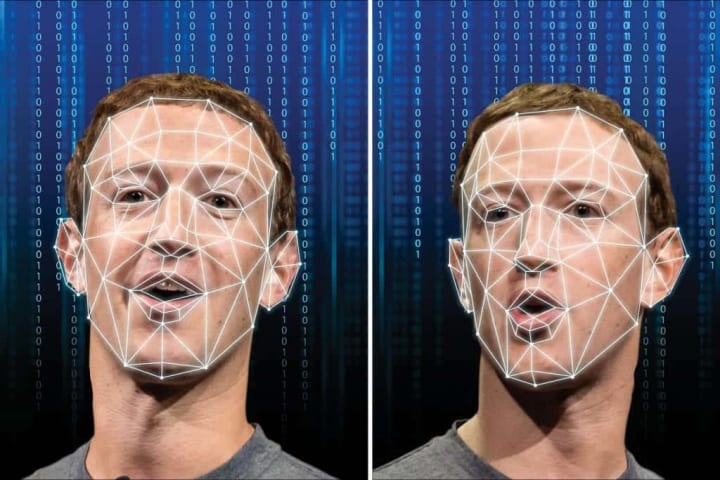

What Are Deepfakes?

Deepfakes use machine learning algorithms to manipulate digital media. They take clips or pictures of real people and alter them convincingly, making it difficult to distinguish fake content from the original. They can be used to produce fake videos of celebrities, politicians, and other public figures saying or doing things they never actually did. Deepfakes can also be used to create false news stories, propaganda, and other misinformation.

The Danger of Deepfakes

The danger of deepfakes is that they can be used to spread misinformation on a massive scale. In the era of social media, fake news and misinformation can spread rapidly, and deepfakes have the potential to exacerbate this problem. A convincing fake video can be attached to a fabricated news story and shared across social media platforms, spreading false information widely. This can have serious consequences, such as influencing elections or causing social unrest.

Examples of Deepfakes

Recent years have seen a surge in deepfake technology, with high-profile examples such as the video in which Jordan Peele impersonates former President Barack Obama. In this video, machine learning tools were used to manipulate footage of Obama so that his facial movements matched Peele's vocal impression, creating a realistic likeness of the former President. This sparked an important conversation about the potential risks surrounding deepfakes, which continue to be discussed in today's digital landscape.

Another example is a deepfake video of Facebook CEO Mark Zuckerberg, created in 2019 by artists Bill Posters and Daniel Howe. The video shows Zuckerberg appearing to boast about controlling billions of people's data. It was created to demonstrate the technology's potential for spreading misinformation and to raise awareness of the issue.

The Solutions to Deepfakes

Deepfakes pose a significant risk to our society, and it is imperative that we take steps to combat this form of content manipulation. Enhancing public understanding of deepfakes, developing detection technologies, and creating legal frameworks are all proactive measures that will help. Through collective action, we can keep information reliable and respond better to the challenges posed by the era of AI.

In addition, individuals can take steps to protect themselves from the potential harm of deepfakes. For instance, they can be cautious when consuming information online and double-check the authenticity of anything that seems suspicious. They can also be vigilant about their online presence and take steps to prevent their own images and videos from being used in deepfakes.

Researchers are also working on technologies that can detect deepfakes with greater accuracy. For instance, a team of researchers at the University of California, San Diego, has developed a system that uses a neural network to detect deepfakes in real time. The system analyzes a video stream and looks for signs of manipulation, such as discrepancies in facial expressions or unnatural movements.
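To give a feel for what "looking for unnatural movements" can mean in practice, here is a minimal sketch in Python. It is not the researchers' neural network. It is a much simpler statistical heuristic, and it assumes that some upstream face tracker (not shown) has already produced a list of facial landmark coordinates for each frame. The function name `flag_unnatural_motion` and its parameters are hypothetical, chosen only for illustration.

```python
from statistics import median


def flag_unnatural_motion(landmarks, threshold=5.0, min_spread=0.5):
    """Return indices of frames whose landmark motion is an outlier.

    landmarks: list of frames, each a list of (x, y) points.
               Assumed to come from a face tracker (hypothetical input).
    threshold: how many "spreads" from the median counts as suspicious.
    min_spread: floor on the spread, in pixels, to avoid dividing by ~0.
    """
    # Mean distance travelled by the landmarks between consecutive frames.
    motion = []
    for prev, curr in zip(landmarks, landmarks[1:]):
        dists = [
            ((x2 - x1) ** 2 + (y2 - y1) ** 2) ** 0.5
            for (x1, y1), (x2, y2) in zip(prev, curr)
        ]
        motion.append(sum(dists) / len(dists))
    if not motion:
        return []

    # Median and median absolute deviation are robust to a few wild frames.
    med = median(motion)
    mad = median(abs(m - med) for m in motion)
    spread = max(mad, min_spread)

    # motion[i] describes the step into frame i + 1, so report i + 1.
    return [
        i + 1
        for i, m in enumerate(motion)
        if abs(m - med) / spread > threshold
    ]
```

A real detector would learn what natural movement looks like from large amounts of video rather than rely on a fixed threshold, but the underlying idea is similar: establish what normal motion looks like, then flag frames that deviate sharply from it.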

Another team of researchers from the University at Albany, SUNY, has developed a technique that combines machine learning with forensic analysis to detect deepfakes. The technique examines a video for signs of manipulation, such as inconsistencies in the lighting or shadows.
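A lighting check of this kind can be illustrated with a toy example. The sketch below is not the Albany team's method. It assumes a grayscale frame stored as a list of rows of brightness values and a face bounding box supplied by some other tool, and it only asks one crude question: does the light appear to come from the same side on the face as in the frame overall? All function names here are hypothetical.

```python
def light_side(region):
    """Return +1 if the right half is brighter, -1 if the left half is, 0 if equal."""
    width = len(region[0])
    half = width // 2
    left = sum(sum(row[:half]) for row in region)
    right = sum(sum(row[width - half:]) for row in region)
    return (right > left) - (right < left)


def crop(image, top, left, height, width):
    """Cut a rectangular region out of a list-of-rows image."""
    return [row[left:left + width] for row in image[top:top + height]]


def lighting_mismatch(image, face_box):
    """True when the face and the whole frame are lit from opposite sides.

    image: grayscale frame as a list of rows of brightness values.
    face_box: (top, left, height, width), assumed to come from a
              face detector that is not shown here.
    """
    face = crop(image, *face_box)
    return light_side(face) * light_side(image) == -1
```

Forensic tools estimate light direction far more carefully, for example from shadows and from how light falls across the shape of the face, but the principle is the same: a face pasted in from another scene often carries the lighting of that other scene with it.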

Deepfake technology is advancing rapidly, and although some progress has been made in detecting deepfakes, there is still much to be done. To stay ahead of the curve and protect against deepfake threats, it's essential to continue developing innovative solutions.

Conclusion

Deepfakes are a significant threat to our society, and their potential to spread misinformation should not be underestimated. However, with the right strategies and technologies, we can minimize the impact of deepfakes and reduce their potential harm. As individuals, we can take steps to protect ourselves, and as a society, we can work together to combat the spread of misinformation and protect the integrity of our information.

About the Creator

Chris Kamb

Hey there, I'm Chris - a tech and business writer who loves exploring the latest trends and innovations in these exciting industries. I love to research and write about the latest topics and make them easy to understand.
