Multimodal AI: How Multimodal Artificial Intelligence Models Are Shaping the Future of AI

Exploring Multimodal AI and Artificial Intelligence Models

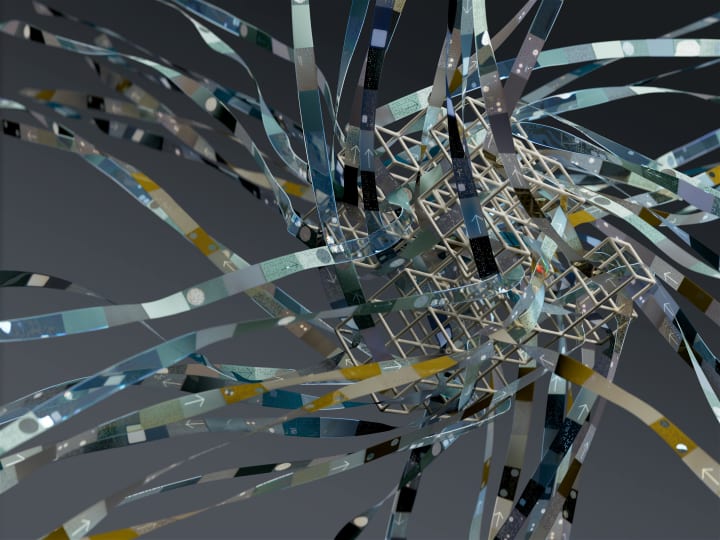

Multimodal artificial intelligence is changing how technology understands the world. Learn how multimodal AI models process text, images, audio, and video together to deliver richer, more context-aware insights and more capable AI applications.

This article explains what multimodal AI is, how these systems work, their real-world applications, benefits, and challenges, and where the technology is heading across industries.

What Is Multimodal AI?

Multimodal AI refers to systems that process and understand multiple forms of data at once — for instance, text and image, or video and audio together. Each form of data is called a modality. Unlike older, single-modality models that might only handle text or numbers, multimodal artificial intelligence blends these inputs to form a richer, more contextual understanding.

A simple example: when you upload an image and describe it with text, a multimodal AI model can read your caption, analyse the image, and generate a response that takes both into account. Recent advances in multimodal AI show how combining modalities can yield deeper insights, more human-like reasoning, and new creative capabilities. This brings artificial intelligence closer to how humans make sense of information: through multiple senses working together.
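One common way a model relates an image to a caption is to map both into a shared embedding space (lists of numbers) and compare them with cosine similarity; contrastively trained models such as OpenAI's CLIP work along these lines. The sketch below assumes the embeddings already exist: the vectors are invented for illustration, and `cosine_similarity` and `best_caption` are hypothetical helpers, not part of any library.

```python
import math

# Hypothetical embeddings. In a real system these would come from trained
# image and text encoders that project into the same vector space;
# the numbers below are invented purely for illustration.
IMAGE_EMBEDDING = [0.9, 0.1, 0.3]

CAPTION_EMBEDDINGS = {
    "a dog playing in the park": [0.8, 0.2, 0.4],
    "a plate of pasta": [0.1, 0.9, 0.2],
    "a city skyline at night": [0.2, 0.3, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_vec, captions):
    """Return the caption whose embedding points most nearly the same way as the image's."""
    return max(captions, key=lambda c: cosine_similarity(image_vec, captions[c]))


for caption, vec in CAPTION_EMBEDDINGS.items():
    print(f"{cosine_similarity(IMAGE_EMBEDDING, vec):.3f}  {caption}")
print("Best match:", best_caption(IMAGE_EMBEDDING, CAPTION_EMBEDDINGS))
```

In a trained model, the encoders are what make matching image and caption pairs land close together; the comparison step itself is this simple.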

How Multimodal AI Models Work

At its core, a multimodal AI model processes each data type through specialised encoders before merging the results into a shared understanding. Here’s a simplified version of how it works:

1. Input – The model receives several data types, such as text, sound, and visual inputs.

2. Encoding – Each modality is transformed into numerical vectors known as embeddings.

3. Fusion – These embeddings are aligned and merged so the model can find patterns across them.

4. Output – The model generates results that combine insights from all modalities, such as a descriptive caption for an image or an answer based on both audio and video cues.

This process enables multimodal systems to reason across different types of information and generate outputs that are contextually richer than those of unimodal models.
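The four steps above can be sketched in plain Python. This is a toy illustration under loud assumptions: the "encoders" are simple hand-written stand-ins (a hashed bag-of-words for text and an intensity histogram for images) rather than trained neural networks, fusion is plain concatenation, and the output weights are arbitrary, so the final score carries no meaning. All function names are hypothetical.

```python
import math
import zlib

EMBED_DIM = 8  # illustrative size; real models use hundreds or thousands of dimensions


def normalise(vec):
    """Scale a vector to unit length so each modality contributes on a comparable scale."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def encode_text(text, dim=EMBED_DIM):
    """Toy text encoder: hashed bag-of-words (stand-in for a trained language encoder)."""
    vec = [0.0] * dim
    for token in text.lower().split():
        # crc32 gives a stable hash across runs, unlike Python's built-in hash()
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    return normalise(vec)


def encode_image(pixels, dim=EMBED_DIM):
    """Toy image encoder: histogram of grayscale intensities (0-255) in `dim` bins."""
    vec = [0.0] * dim
    for row in pixels:
        for p in row:
            vec[min(p * dim // 256, dim - 1)] += 1.0
    return normalise(vec)


def fuse(*embeddings):
    """Fusion by simple concatenation of the per-modality embeddings."""
    fused = []
    for e in embeddings:
        fused.extend(e)
    return fused


def output_head(fused, weights, bias=0.0):
    """Linear layer followed by a sigmoid, producing a single score in (0, 1)."""
    z = sum(w * x for w, x in zip(weights, fused)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# 1. Input: a caption and a tiny 2x3 grayscale "image"
caption = "a bright sunny beach"
image = [[250, 240, 230], [200, 180, 60]]

# 2. Encoding: each modality becomes an embedding
text_vec = encode_text(caption)
image_vec = encode_image(image)

# 3. Fusion: combine the embeddings into one vector
fused = fuse(text_vec, image_vec)

# 4. Output: arbitrary, untrained weights, so the score itself is meaningless
weights = [0.1] * len(fused)
print(len(fused), round(output_head(fused, weights), 3))
```

Concatenation is the simplest fusion strategy: it keeps the code short, but the modalities are only combined at the final layer. Many modern systems instead use learned mechanisms such as cross-attention, so that each modality can influence how the other is interpreted.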

Real-World Uses of Multimodal Artificial Intelligence

Multimodal AI is already transforming industries:

Healthcare – Combining medical images, patient histories, and test results to support more accurate diagnosis.

Autonomous vehicles – Merging camera, radar, and lidar data to improve safety and awareness.

Content creation – Tools that use text and images to create videos, animations, or digital art.

Customer service – Chatbots and virtual assistants that interpret voice and text together and can pick up cues such as tone to gauge a customer's mood.

Security and fraud detection – Analysing transactions, text reports, and video feeds to identify suspicious patterns.

These examples show that multimodal artificial intelligence is more than a concept: it is a practical, fast-growing field that is already reshaping how we use AI every day.

Key Benefits of Multimodal AI

1. Greater accuracy – By combining inputs from multiple sources, the system reduces ambiguity and improves performance.

2. Enhanced understanding – It can capture context that single-modality systems miss, such as the tone of voice behind a spoken sentence.

3. Improved user experience – Multimodal interfaces feel more natural and interactive.

4. Cross-domain creativity – Enables new possibilities in media, design, and entertainment.

5. Knowledge transfer – Learns patterns that can be applied across different types of data.

Overall, multimodal AI models are expanding what’s possible with artificial intelligence and setting new standards for intelligent systems.
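The accuracy benefit has a simple statistical basis. If several sensors or modalities give independent, unbiased estimates of the same quantity, weighting each by the inverse of its variance produces a combined estimate whose variance is lower than that of any single input; this rule is closely related to the measurement update in a Kalman filter. The sketch below applies it to the autonomous-vehicle case mentioned earlier, assuming made-up distance readings and variances for camera, radar, and lidar. It illustrates the principle only, not how any particular vehicle fuses its sensors, and `fuse_estimates` is a hypothetical helper.

```python
import math


def fuse_estimates(readings):
    """Inverse-variance weighted average of independent noisy measurements.

    readings: list of (value, variance) pairs, one per sensor or modality.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / var for _, var in readings]
    total = sum(weights)
    fused_value = sum(w * v for w, (v, _) in zip(weights, readings)) / total
    fused_variance = 1.0 / total  # always <= the smallest input variance
    return fused_value, fused_variance


# Hypothetical distance-to-obstacle estimates in metres, with made-up variances (m^2)
readings = {
    "camera": (25.0, 4.0),
    "radar": (23.5, 1.0),
    "lidar": (24.2, 0.25),
}

value, variance = fuse_estimates(list(readings.values()))
for name, (v, var) in readings.items():
    print(f"{name:>6}: {v:5.1f} m  (std dev {math.sqrt(var):.2f} m)")
print(f" fused: {value:5.2f} m  (std dev {math.sqrt(variance):.2f} m)")
```

With these invented numbers, the fused standard deviation is about 0.44 m, smaller than the 0.5 m of the most precise single sensor (lidar). The gain depends on the errors really being independent; correlated or biased sensors reduce it.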

Challenges and Ethical Concerns

Despite its promise, multimodal artificial intelligence faces significant challenges:

Data requirements – Training needs massive, high-quality datasets in which the modalities are paired, such as images with accurate captions.

Model complexity – Training these systems requires substantial computing power.

Bias and fairness – When combining data from many sources, existing biases can multiply.

Privacy – With richer data inputs, protecting user information becomes even more important.

Ethics and accountability – Responsible use of multimodal AI is essential to ensure transparency and safety.

Addressing these challenges responsibly is vital if the technology is to be trusted and widely adopted.

The Future of Multimodal AI

Looking ahead, the future of multimodal AI models looks promising. We are moving towards systems that can integrate not just text, sound, and vision, but also touch, environmental data, and emotional cues. These advances could power the next generation of intelligent assistants, educational tools, creative media, and healthcare technologies.

In time, multimodal AI could underpin truly integrated digital ecosystems, enabling seamless human-computer interaction, intuitive learning systems, and smarter, safer automation across industries.

Why Multimodal Artificial Intelligence Matters

For businesses, adopting multimodal artificial intelligence means unlocking deeper insights and building more intuitive experiences for users. For society, it promises progress in accessibility, healthcare, education, and safety.

It’s not just about making machines smarter — it’s about helping humans connect with technology in more meaningful, human-like ways.

Multimodal AI Conclusion

Multimodal AI represents the next great leap in the evolution of artificial intelligence. By combining sight, sound, language, and other data types, it allows systems to learn, reason, and create with unprecedented sophistication.

From healthcare to entertainment and beyond, multimodal AI models are redefining how machines understand our world and how we interact with them. As the technology continues to evolve, its potential to transform daily life and industry alike is considerable.

About the Creator

Alex Ray

Education: American University, BA in Journalism

Alexander Ellington is the chief editor and reporter for Biden News & a number of other media websites.
