AI – is it really physics, and is AI contending to be the most intelligent being?

A double feature: bringing AI research and development to the forefront of people’s minds and of the research landscape, and hearing from the ‘Godfather of AI’ as he ups the ante on AI-caused human extinction.

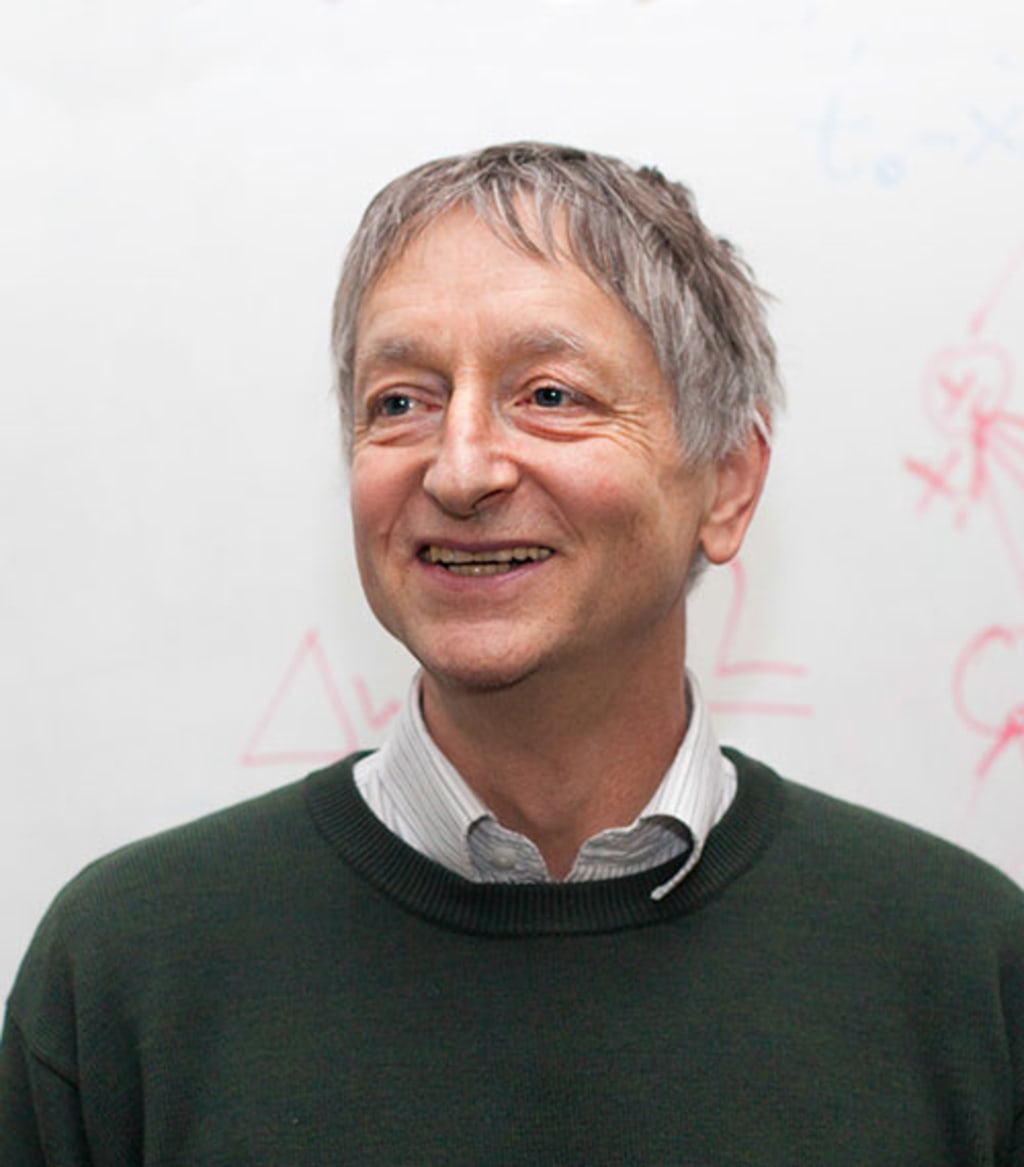

A couple of weeks ago, it was announced that Professor Geoffrey E. Hinton, the founder of the Gatsby Computational Neuroscience Unit at UCL Life Sciences, has been awarded the Nobel Prize in Physics. He is one of two joint winners, recognised 'for foundational discoveries and inventions that enable machine learning with artificial neural networks'.

Yet in the weeks since the announcement, some quarters have expressed surprise that the Nobel Prize in Physics was awarded on the theme of AI. Traditionally, boundary-pushing physics has focused on the very large (the expansion of space, the movement of galaxies) or the very small (new particles, interactions at the subatomic level).

Many people would not instinctively think of physics as applied technology, let alone as something as intangible as software and data.

However, the Nobel committee’s angle makes sense: the prize aims to recognise individuals whose discoveries benefit humankind. AI will inevitably have a huge influence on civilisation, so rewarding this research raises awareness of the topic’s significance and scope.

Overall, I am very glad this decision was made. People talk about AI every day, but often flippantly, complaining about poor online chatbot support or daft Netflix recommendations. Awarding a Nobel Prize for work in this area places it at the forefront of humankind’s genuine advancement. Of course, the question now is whether we understand AI well enough to ensure it is a ‘technology for good’ rather than a source of future problems.

To read more about the groundbreaking research which was awarded the Nobel Prize in Physics, follow the link below:

https://www.ucl.ac.uk/news/2024/oct/ucl-institute-founder-awarded-nobel-prize-physics

* * *

More recently, Geoffrey Hinton, dubbed the ‘Godfather of AI’, has warned that there is a “10% to 20% chance AI will lead to human extinction in three decades”.

Hinton’s estimate rests on his observation that “we’ve never had to deal with things more intelligent than ourselves”. For millennia, humans have been the most intelligent beings around, exercising control over ‘less intelligent’ ones; the evolving relationship between humans and AI threatens to reverse that position.

Hinton suggests that “humans would be like toddlers compared with the intelligence of highly powerful AI systems”. This raises the question of whether AI will become a contender for the title of most intelligent being, and what that would mean for humankind.

Last year, Hinton resigned from his position at Google so that he could speak freely about the risks of AI development without appropriate regulation. One of the biggest fears of technology safety campaigners is that unregulated and unconstrained AI development will lead to the outcome Hinton believes has between a 1-in-10 and a 1-in-5 chance of coming true.

Assuming AI does not fundamentally take over the world, the more pressing question is how to make sure it is implemented ethically, a topic I will be digging into over a series of articles.

There is a risk that Hinton’s hyperbole distracts people from the boring but necessary work of training AI-based decision-making tools to take moral, ethical and cultural considerations into account, rather than focusing solely on whatever has been defined as their key objective.

Corporations and the individuals that lead them cannot be allowed to use the single-mindedness of AI tools as an excuse for obsessively concentrating on profit over people.

On this topic, one specific risk to be aware of is sociopathic intelligence, as outlined in a previous post.

About the Creator

Allegra Cuomo

Interested in Ethics of AI, Technology Ethics and Computational Linguistics

Subscribe to my Substack ‘A philosophy student’s take on Ethics of AI’: https://acuomoai.substack.com

Also interested in music journalism, interviews and gig reviews
