AI and Creativity: Behind the Music Industry’s Most Recent Silent Protest Album

How 1,000 artists came together to protest against the UK government’s stance on AI developers freely using copyrighted material.

With the boom in recent years of AI text generators and large language models (LLMs), the capabilities of AI-generated creative media have expanded rapidly.

These capabilities range from creating custom visual art from text prompts and replicating or parodying existing artworks to producing songs in the lyrical and vocal style of famous artists.

Now, when I come across a song I don’t recognise while scrolling on social media but the vocals sound familiar, my first thought is to wonder whether the song is genuine or AI-generated to imitate a particular artist’s style.

It is therefore becoming evident that more and more of the creative media we see and hear may be AI-generated. And just as LLMs are trained on the written information available on the internet, AI music generators are trained on recorded music that is already available.

The unlicensed use of copyrighted work to train AI made front-page news in 2024, when Suno and Udio, two prominent AI startups that generate entire songs from user-submitted prompts, were sued by the major record labels Sony Music, Warner Music Group, and Universal Music Group.

As an alternative to covertly using datasets to train models, YouTube was reported to be “in talks with record labels over AI music deal”, offering unspecified sums to top record labels in exchange for licences to use their music catalogues for training.

However, while a deal like this may ensure that artists are paid for the work they produce, it does not address the fear that musicians will be replaced entirely once these generative models have absorbed what they can from existing media.

This may be less of a concern for established musicians and artists, but it could harm up-and-coming artists, whose music risks being sidelined in favour of music that can be custom-generated and royalty-free.

Against this backdrop, in December 2024 the British government published proposals to change the way AI developers could use copyrighted material, including a new copyright exception for the development of artificial intelligence.

The proposal appeared to respect the rights of artists who did not want their music used in this way by allowing content owners to ‘opt out’ of having their material used for AI training. However, an opt-out would not resolve the problem of AI companies “exploiting musicians’ work to outcompete them”.

For many in the music industry, this move by the British government seemed to be the final straw, and it prompted a powerful response.

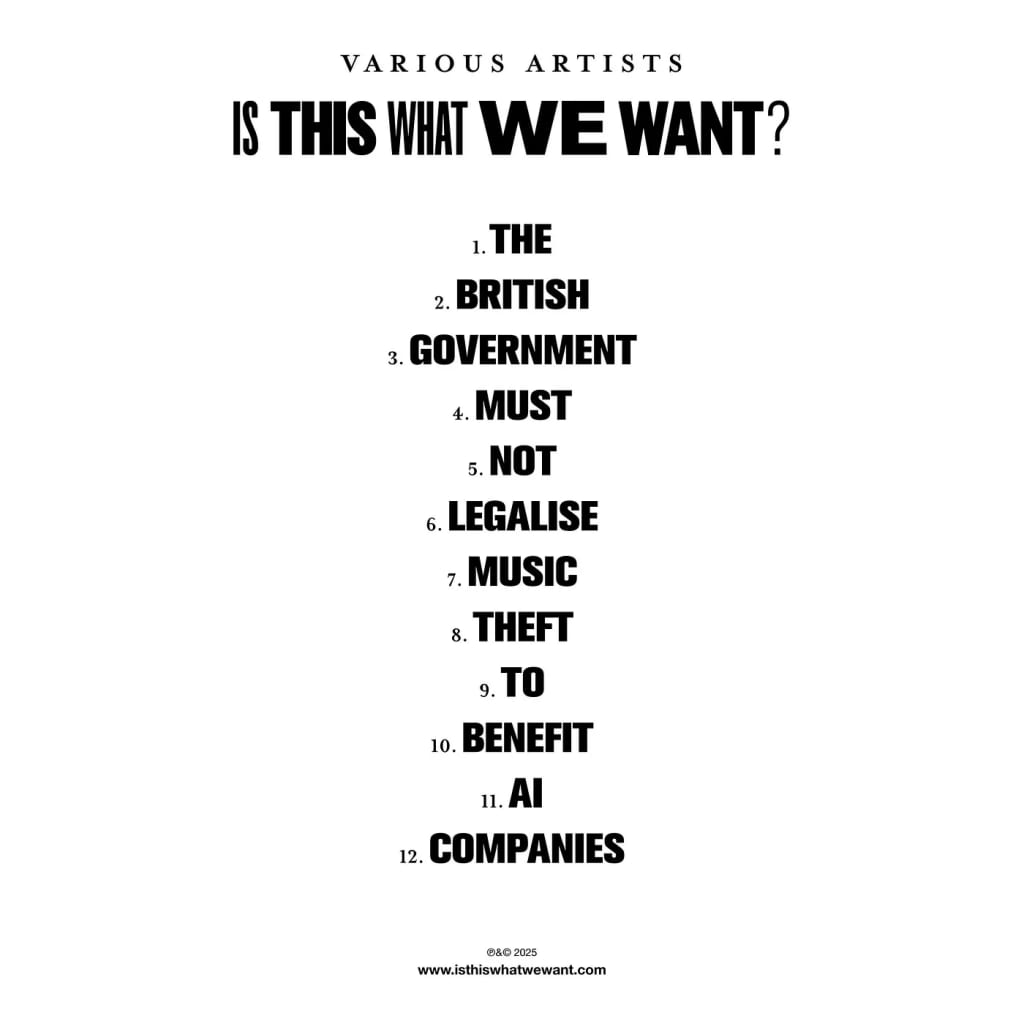

In February 2025, more than 1,000 musicians and groups came together to release the silent album ‘Is This What We Want?’, consisting of silence recorded in studios and rehearsal rooms.

The album protests against the unauthorised use of copyrighted material in artificial intelligence training, and its track titles, read in order, spell out: ‘The British Government Must Not Legalise Music Theft To Benefit AI Companies’.

The effort was led by composer Ed Newton-Rex, and artists involved in the album included Sir James MacMillan, Max Richter, John Rutter, The Kanneh-Masons, The King’s Singers, The Sixteen, Roderick Williams, Sarah Connolly, Nicky Spence, Ian Bostridge, as well as Kate Bush, Annie Lennox, Damon Albarn and Imogen Heap.

The album’s tracks consist of the sounds of empty recording studios, rehearsal rooms, and concert halls, representing the impact the musicians believe the government’s proposal would have on their livelihoods and on the music industry as a whole.

Polling suggests that the message behind the protest is overwhelmingly backed by the UK public. According to polls commissioned from Reset Tech and YouGov, 72% of respondents agreed that AI developers should be required to pay royalties to the creators of the media used to train AI programs.

Furthermore, Jo Twist, CEO of the BPI (British Phonographic Industry), commented that despite the proposed ‘opt out’ for artists who do not want their work used to train models, “other markets have shown that opt-out schemes are unworkable in practice, and ineffective in protecting against misuse and theft”.

As someone who has always had a tremendous passion for music, whether listening to it, producing it, or performing it, I find proposals such as this one saddening. I am constantly on the lookout for opportunities in the Venn diagram overlap between music and technology.

Nonetheless, I do not consider this use of AI, or the solution the proposal puts forward, to be positive or protective for any current or future artist. AI developers have already been using individuals’ work covertly and without authorisation.

Effective regulation that focuses on real-life, human impacts is therefore needed to mitigate the damage already done to artists and the future harm that proposals such as this one could cause.

There are numerous current uses of AI in music that benefit both artists and listeners. For example, Spotify’s Discover Weekly, a personalised playlist generated for each user, and its AI DJ feature are powered by AI and bring both established artists and up-and-coming talent to the forefront of your streaming experience.

There are many opportunities for innovative and ground-breaking uses of AI in music and other creative industries. However, using individuals’ copyrighted work without a licence to develop AI models is not one of them.

Over the coming months, I’ll be sharing some powerful examples of where AI creates a win-win, while also considering what steps can be taken to protect current and future artists.

I will also be highlighting examples of effective government proposals and regulation that are already having a positive impact in protecting the humans that are an essential part of our current AI journey.

Read more articles from ‘A Philosophy student’s take on Ethics of AI’ by subscribing to the Substack here!

Articles Referenced:

MIT Technology Review: Training AI music models is about to get very expensive

Financial Times: YouTube in talks with record labels over AI music deal

Bachtrack: Musicians protest UK government AI plans with silent album

About the Creator

Allegra Cuomo

Interested in Ethics of AI, Technology Ethics and Computational Linguistics

Subscribe to my Substack ‘A philosophy student’s take on Ethics of AI’: https://acuomoai.substack.com

Also interested in music journalism, interviews and gig reviews
