A Comprehensive Guide to Usability Testing Methods

Discover usability testing and its many methods. Learn about definitions, use cases, best practices, and optimization strategies.

Have you ever poured everything into a design, only to find that it doesn’t resonate with its audience? I get it. The good news is that observing how real users engage with your work gives you a powerful tool: usability testing.

Drawing from my background (I’ve spent years focused on usability research), I can assure you that mastering various methods has completely transformed my approach to managing projects. It’s like putting on a pair of glasses and suddenly seeing everything clearly. Some techniques are fast and simple, while others require more effort but offer greater rewards. The key lies in finding the right balance for your specific project. Stay with me, and we’ll explore some straightforward usability testing methods together.

What Exactly is Usability Testing?

Usability testing assesses how effortlessly people can navigate a product, such as an app or website. It entails observing real users as they interact with the product to determine if they can accomplish their objectives efficiently and without difficulty. The aim is to detect any challenges or ambiguous elements early so they can be addressed.

The primary objectives of usability testing include:

- Pinpointing issues: Determine what aspects users find challenging or unclear.

- Improving user experience: Ensure the product is both enjoyable and easy to use.

- Validating design decisions: Confirm that the design effectively caters to its target audience.

- Collecting input: Receive direct feedback from users regarding their experiences.

Consider this analogy: If you’re preparing a cake, usability testing is akin to tasting it before serving to ensure it’s perfectly balanced—not overly sweet or bitter.

A widely recognized model for assessing usability is Whitney Quesenbery’s 5 E’s framework: effective, efficient, engaging, error tolerant, and easy to learn. These attributes contribute to a positive user experience. Although not compulsory, they serve as useful guidelines to bear in mind.

Why Usability Testing Matters

Usability testing goes beyond mere experimentation—it represents a crucial investment that significantly influences the success of your product, impacting factors such as user retention, conversion rates, return on investment (ROI), and more.

Retention refers to whether individuals continue engaging with your product over time. If they find it difficult or frustrating, they’ll seek alternatives. Even if your app or website is visually stunning and garners significant online attention, poor usability will drive users away. The truth may be tough, but it’s straightforward: functionality is just as critical as aesthetics.

When assessing conversions, you measure how many people take the desired action—making a purchase, subscribing to a service, completing a task, etc. Usability research can uncover new ways to boost these figures. For instance, customers might abandon their shopping carts because of a complicated checkout process. By addressing this obstacle, you can increase completed transactions.

The ROI calculation is straightforward: subtract the cost of conducting usability testing from the financial gains it produces, then divide the result by that cost.

Financial benefits? Isn’t this about design? Absolutely, but testing directly affects costs. Imagine building a wardrobe only to realize extra screws are needed. Can you picture the hassle of locating and installing those screws after everything is assembled? Similarly, identifying and fixing issues early saves money by preventing expensive redesigns down the line.
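The ROI comparison described above is usually expressed as ROI = (gains - costs) / costs. A minimal sketch of that arithmetic; the function name and the figures are hypothetical placeholders, not data from a real study, and estimating the "gains" (avoided rework, extra conversions) is the hard part in practice.

```python
def usability_roi(gains: float, costs: float) -> float:
    """Return ROI as a fraction: (gains - costs) / costs.

    `gains` is the estimated financial benefit attributed to testing
    (e.g. avoided rework, extra conversions); `costs` is what the testing
    itself cost. Both are assumed to be in the same currency.
    """
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (gains - costs) / costs


# Hypothetical example: a study costing 5,000 that avoids an estimated
# 12,000 of post-launch redesign work.
roi = usability_roi(gains=12_000, costs=5_000)
print(f"ROI: {roi:.0%}")  # prints "ROI: 140%"
```

A positive result means the testing paid for itself; a result of 1.4 means every unit spent returned 1.4 units on top of the original outlay.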

Types of Usability Testing

Usability testing methods are commonly grouped into three categories, each contrasting two approaches along one dimension: interaction style, location, or type of data collected:

- Moderated vs. Unmoderated

- Remote vs. In-Person

- Qualitative vs. Quantitative

Each category includes several subtypes tailored to specific needs and goals. Below is a breakdown of these types by interaction style.

Types of Usability Testing Based on Interaction Style

Moderated Testing

In this approach, a facilitator actively guides participants through the test in real-time, observing their actions and asking clarifying questions.

Lab Usability Testing

This method, named after the "usability labs" where it usually takes place, involves conducting tests in a controlled environment, such as an office or specialized lab. Participants interact with the product while being observed, and tools like cameras, one-way mirrors, and recording equipment capture their behavior and expressions.

Advantages:

- Minimizes distractions, enabling users to concentrate fully on tasks.

- Allows observers to closely monitor body language, facial expressions, and thought processes.

- Facilitators can provide explanations, ask follow-up questions, and adjust tasks dynamically.

Example: Watching a participant repeatedly tap an inactive "Purchase" button out of frustration highlights usability issues that need addressing.

Guerrilla Testing

This informal, moderated technique involves approaching random individuals in public spaces—such as cafes or parks—and asking them to test specific features of your product for a few minutes.

Benefits:

- No need for elaborate setups like labs or specialized equipment.

- Provides quick, actionable feedback at minimal cost.

- Offers insights into real-world reactions under natural conditions.

When to Choose Moderated Testing

- Early-stage prototypes requiring rapid input.

- Complex workflows needing detailed user insights.

- Scenarios where direct engagement with participants is essential.

Outcome: Moderation helps uncover subtle issues, such as hesitation before performing an action, that might otherwise go unnoticed.

Unmoderated Testing

In unmoderated testing, participants complete tasks independently without supervision, typically from their own environment (e.g., home or workplace). Automated tools record their actions and feedback for later analysis.

Observation via Analytics

Tools like heatmaps, click tracking, and funnel analysis offer indirect insights into user behavior.

Purpose: Identify which parts of your product receive the most attention and where users disengage.

Example: If click maps show users repeatedly clicking the header in search of a button that lives elsewhere, you can refine the layout accordingly.
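Funnel analysis, mentioned above, boils down to comparing how many users reach each step. A minimal sketch, assuming you have already exported per-step unique-user counts from your analytics tool; the function name, step names, and numbers below are made up for illustration.

```python
from typing import List, Tuple


def funnel_dropoff(steps: List[Tuple[str, int]]) -> List[Tuple[str, float, float]]:
    """For each step, return (name, share of starting users, drop-off from previous step).

    `steps` is an ordered list of (step_name, unique_users) pairs.
    """
    if not steps or steps[0][1] == 0:
        raise ValueError("funnel needs a non-empty first step")
    start = steps[0][1]
    prev = start
    report = []
    for name, users in steps:
        share_of_start = users / start
        # Share of users from the previous step who did not reach this one.
        dropoff = 1 - users / prev if prev else 0.0
        report.append((name, share_of_start, dropoff))
        prev = users
    return report


# Hypothetical counts exported from an analytics tool.
funnel = [
    ("Viewed product", 1000),
    ("Added to cart", 400),
    ("Started checkout", 300),
    ("Completed purchase", 150),
]
for name, share, drop in funnel_dropoff(funnel):
    print(f"{name:<20} {share:6.1%} of visitors, {drop:6.1%} lost at this step")
```

The step with the largest drop-off is the natural place to start a closer investigation, for example with a heatmap of that page or a moderated session.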

Eye-Tracking

Specialized eye-tracking hardware or software records users' eye movements, generating visual representations like heatmaps or gaze plots. These reveal what users focus on and overlook, aiding in optimizing layouts and emphasizing key elements (e.g., CTAs, headlines).

Common Patterns: Users often scan text-heavy pages in an F-shaped pattern, while simpler layouts with less text tend to produce Z-shaped scanning.

Surveys and Feedback Polls

A straightforward way to gather qualitative and quantitative data.

- Open-Ended Questions: Queries like "What confused you?" yield rich, detailed insights.

- Closed Questions: Rating scales (e.g., 1–10) provide measurable feedback.

Automated Task-Based Testing

Participants independently perform predefined tasks, and tools track metrics like task success rates, time spent, and error occurrences.

Example: Asking users to locate a product, add it to their cart, and check whether they notice discount badges. This method is ideal for A/B testing to compare design iterations and pinpoint recurring pain points.
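When an automated task test is run as an A/B comparison, a common way to check whether two task success rates genuinely differ is a two-proportion z-test. A minimal sketch using only the standard library; the function name and counts are hypothetical, and with small samples an exact test or a dedicated statistics package may be more appropriate.

```python
import math
from typing import Tuple


def two_proportion_z_test(success_a: int, n_a: int,
                          success_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for H0: both designs have the same success rate."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled success rate, assuming the null hypothesis is true.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 62 of 100 users completed the task on design A,
# 78 of 100 on design B.
z, p = two_proportion_z_test(62, 100, 78, 100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the difference in success rates is unlikely to be chance alone. It does not explain *why* one design performs better, which is where qualitative follow-up helps.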

When to Choose Unmoderated Testing

Unmoderated testing excels in specific situations:

- Tight Deadlines: Ideal for scenarios where rapid feedback is needed without waiting for in-depth observations.

- Large Sample Sizes: Easily scalable to accommodate thousands of participants across diverse locations.

- Geographically Diverse Audiences: Perfect for projects where gathering participants in one place isn't practical.

- Simple Tasks: Suitable for straightforward activities that don't require extensive guidance.

- Quantitative Data Collection: Excellent for gathering metrics such as task completion rates, time-on-task, and error frequency.

- Real-World Insights: Provides valuable information about how users behave naturally in their everyday environments.

By selecting the appropriate testing method, you can collect meaningful insights to enhance your product's usability and deliver a superior user experience. Each approach offers unique advantages, allowing you to tailor your strategy to fit your project's specific requirements.

Types of Usability Testing Based on Location

Remote Testing

This method involves conducting tests online, enabling participants to engage from anywhere using their personal devices. It offers several benefits:

- Broader Reach: Eliminates geographical constraints, allowing a more diverse participant pool.

- Cost and Time Efficiency: Reduces expenses and effort by removing the need for travel.

- Natural Environment: Users interact with the product in settings they’re familiar with, providing authentic feedback.

However, remote testing comes with challenges:

- Limited Non-Verbal Cues: Without video or advanced tracking tools, it’s harder to observe body language or facial expressions.

- Technical Hurdles: Issues such as slow internet connections or outdated devices can affect results and are often outside your control.

For instance, a participant's spotty connection or old device might interfere with the session, complicating the collection of accurate data.

In-Person Testing

In this approach, participants visit a physical location, similar to traditional lab or offline testing. Its strengths include:

- Deep Observations: You can closely monitor participants’ reactions, including subtle cues like facial expressions and gestures.

- Controlled Environment: Minimizing external distractions ensures cleaner, more focused results.

- On-Site Support: Being present allows you to quickly resolve technical issues that may arise.

Despite these advantages, in-person testing has drawbacks:

- Geographic Limitations: Requires local participants unless you have the means to establish multiple testing sites.

- Higher Costs: Expenses related to venue rental, equipment setup, and logistics add up.

Types of Usability Testing Based on Data Type

Qualitative Insights (User Feedback)

The aim here is to explore the reasoning behind user behavior by collecting rich, descriptive feedback. This method delves into the "why" of user experiences, uncovering issues that quantitative data alone cannot reveal. For example, a participant might comment, “The search bar is hard to spot because it blends with the background,” pointing out a design flaw that hadn’t been noticed. Post-test interviews are especially helpful for understanding the rationale behind user actions, even when initial behaviors seem unclear. These insights help pinpoint usability problems and spark ideas for improvement.

Quantitative Metrics (Success Rates, Time-on-Task)

This approach focuses on gathering numerical data to measure how effectively users complete specific tasks. Key metrics include:

- Task Success Rate: The proportion of users who successfully achieve a goal.

- Time-on-Task: The average duration users take to finish a task.

- Error Rate: The frequency of mistakes made during task performance.

- System Usability Scale (SUS): A standardized 10-item questionnaire that produces an overall usability score from 0 to 100.
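The SUS questionnaire listed above has a fixed scoring rule. Each of the ten items is answered on a 1–5 scale; odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a 0–100 score. A minimal sketch of that rule; the function names and participant answers are hypothetical.

```python
from statistics import mean
from typing import List, Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Score one participant's SUS questionnaire (10 answers on a 1-5 scale, in item order)."""
    if len(responses) != 10:
        raise ValueError("SUS has exactly 10 items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("SUS responses must be between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5


def mean_sus(all_responses: List[Sequence[int]]) -> float:
    """Average SUS score across participants."""
    return mean(sus_score(r) for r in all_responses)


# Hypothetical answers from two participants.
participants = [
    [4, 2, 4, 1, 5, 2, 4, 2, 4, 2],
    [5, 1, 5, 2, 4, 1, 5, 1, 4, 2],
]
print([sus_score(p) for p in participants])  # prints [80.0, 90.0]
print(mean_sus(participants))                # prints 85.0
```

Note that a SUS score is not a percentage; it is only meaningful relative to other SUS scores, such as an earlier iteration of the same product.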

These metrics provide an objective basis for evaluating performance and tracking progress over time. They’re also useful for comparing different designs or iterations. For example, if 80% of users can add an item to their cart within 30 seconds but only 50% proceed to checkout, it suggests a potential issue in the checkout process.
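Given per-participant session records, the first three metrics reduce to simple arithmetic. A minimal sketch, assuming a hypothetical `TaskAttempt` record filled in from your notes or testing tool. Time-on-task is averaged over successful attempts only, which is one common convention rather than the only one, and the error rate is expressed as errors per attempt.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (hypothetical record format)."""
    participant: str
    completed: bool
    seconds: float
    errors: int


def summarize(attempts: List[TaskAttempt]) -> dict:
    """Compute task success rate, mean time-on-task, and errors per attempt."""
    if not attempts:
        raise ValueError("no attempts to summarize")
    successes = [a for a in attempts if a.completed]
    return {
        "success_rate": len(successes) / len(attempts),
        # Averaged over successful attempts only; failed attempts often end
        # when the participant gives up, which would distort the average.
        "mean_time_on_task": mean(a.seconds for a in successes) if successes else None,
        "errors_per_attempt": sum(a.errors for a in attempts) / len(attempts),
    }


# Hypothetical "add to cart" attempts from five participants.
add_to_cart = [
    TaskAttempt("P1", True, 22.0, 0),
    TaskAttempt("P2", True, 31.5, 1),
    TaskAttempt("P3", False, 90.0, 3),
    TaskAttempt("P4", True, 18.5, 0),
    TaskAttempt("P5", True, 28.0, 1),
]
print(summarize(add_to_cart))
# prints {'success_rate': 0.8, 'mean_time_on_task': 25.0, 'errors_per_attempt': 1.0}
```

Running the same summary on each design iteration gives you the comparable numbers the paragraph above describes.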

Specialized Approaches

These techniques focus on specific elements of a product, such as its information architecture or navigation structure. Two widely used methods are card sorting and tree testing.

Card Sorting

Participants receive cards labeled with content or features and are asked to categorize them in a way that makes sense to them. Analyzing the resulting groupings helps refine the product’s structure.

- Open Card Sorting: Participants define their own categories.

- Closed Card Sorting: Predefined categories are provided, and participants sort items accordingly.

Best Use Cases: Early stages of design to explore or validate information architecture before finalizing layouts.
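A common first step in analyzing an open card sort is a co-occurrence count: how many participants placed each pair of cards in the same group. The sketch below assumes each participant's sort is recorded as a mapping from their own group names to card labels; the function name, cards, and groups are invented for illustration.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, List


def co_occurrence(sorts: List[Dict[str, List[str]]]) -> Counter:
    """Count, for every pair of cards, how many participants grouped them together."""
    pairs: Counter = Counter()
    for sort in sorts:
        for cards in sort.values():
            # sorted() makes ("A", "B") and ("B", "A") count as the same pair.
            for pair in combinations(sorted(set(cards)), 2):
                pairs[pair] += 1
    return pairs


# Hypothetical open card sort results from three participants.
sorts = [
    {"Money": ["Invoices", "Refunds"], "Me": ["Profile", "Password"]},
    {"Billing": ["Invoices", "Refunds", "Password"], "Account": ["Profile"]},
    {"Payments": ["Invoices", "Refunds"], "Settings": ["Profile", "Password"]},
]
for (a, b), count in co_occurrence(sorts).most_common():
    print(f"{a} + {b}: {count}/{len(sorts)} participants")
```

Pairs that most participants grouped together are strong candidates for sharing a category; here "Invoices" and "Refunds" always appear together. For closed card sorting, you would count card-to-category placements instead of card pairs.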

Tree Testing

Instead of physical cards, users navigate a text-based representation of the product’s structure, known as a "tree." They’re tasked with locating specific features within this hierarchy. Analyzing their success and struggles provides insight into the clarity and logic of the navigation system.

Best Use Cases: Before designing wireframes to optimize navigation or to evaluate competing information architectures.
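Tree-testing results are often reported per task as a success rate plus "directness", meaning the share of participants who reached their answer without backtracking. A minimal sketch, assuming each participant's click path is logged as a list of node labels starting at the root. Here a path counts as direct when no node is visited twice, which is a simplified, hypothetical definition; the class, function, and data are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TreeTask:
    prompt: str
    correct_node: str


def is_direct(path: List[str]) -> bool:
    """Simplified directness: no node was visited more than once (no backtracking)."""
    return len(path) == len(set(path))


def score_task(task: TreeTask, paths: List[List[str]]) -> dict:
    """Success rate and directness for one tree-testing task.

    Each path is the ordered list of nodes a participant visited, ending at
    the node they chose as their answer.
    """
    if not paths:
        raise ValueError("no paths recorded")
    successes = [p for p in paths if p and p[-1] == task.correct_node]
    return {
        "success_rate": len(successes) / len(paths),
        "directness": sum(is_direct(p) for p in paths) / len(paths),
        "direct_success_rate": sum(is_direct(p) for p in successes) / len(paths),
    }


task = TreeTask("Where would you download last month's invoice?", "Invoices")
paths = [
    ["Home", "Billing", "Invoices"],
    ["Home", "Account", "Home", "Billing", "Invoices"],  # backtracked via Home
    ["Home", "Account", "Orders"],                        # wrong answer
    ["Home", "Billing", "Invoices"],
]
print(score_task(task, paths))
# prints {'success_rate': 0.75, 'directness': 0.75, 'direct_success_rate': 0.5}
```

A high success rate paired with low directness suggests people eventually find the item but the labels along the way mislead them.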

Both methods play a crucial role in ensuring that a product’s structure is intuitive and user-friendly, ultimately enhancing the overall experience.

Step-by-Step Execution Guide

Ready to get started? Here’s a practical roadmap to transform usability testing theory into actionable insights. Use this guide as your framework for conducting effective testing sessions.

Step 1: Establish Your Objectives

Begin by answering critical questions to define the scope of your research:

- Which sections of the product require evaluation?

- Who comprises your target audience?

- What are the primary concerns from both user and business perspectives?

These answers will form the foundation for your test script, acting as a "blueprint" to keep you organized. Next, decide on the type of usability testing—whether it will be conducted in-person or remotely, moderated or unmoderated—as this decision will influence the session's structure and outcomes.

Step 2: Select Participants

Based on your objectives, recruit participants who closely align with your target audience. Consider factors such as demographics, technical proficiency, preferred devices, and other relevant traits.

For most qualitative tests, aim for 5–10 participants. This number typically uncovers the majority of usability issues while keeping the process efficient. Testing more individuals yields diminishing returns, particularly for in-person sessions, which can be time-consuming and exhausting. Quantitative studies that report metrics such as success rates need considerably larger samples.
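The small-sample guideline is often traced to the Nielsen–Landauer model, which estimates the share of problems found by n participants as 1 - (1 - L)^n, where L is the probability that a single participant reveals a given problem. Nielsen reported an average L of about 0.31 across projects; your product's L may differ considerably, so treat this sketch (and its hypothetical function name) as illustrative.

```python
def share_of_problems_found(n: int, l: float = 0.31) -> float:
    """Nielsen-Landauer estimate: expected share of problems found with n participants.

    `l` is the probability that one participant exposes a given problem;
    0.31 is the cross-project average Nielsen reported, not a universal constant.
    """
    if n < 0 or not 0 <= l <= 1:
        raise ValueError("n must be >= 0 and l must be in [0, 1]")
    return 1 - (1 - l) ** n


for n in (1, 3, 5, 10):
    print(f"{n:>2} participants: ~{share_of_problems_found(n):.0%} of problems")
```

The curve flattens quickly after about five participants, which is the diminishing-returns effect described above. Running several small rounds between design iterations usually finds more problems than one large round.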

Step 3: Develop Realistic Scenarios

Craft tasks that reflect real-world situations and align with both user needs and business goals. Start by creating a comprehensive script that outlines tasks, steps, questions, and additional details to ensure smooth execution during the session.

- Use straightforward, jargon-free language to prevent confusion.

- Test the tasks yourself beforehand, especially if they involve comparisons with competitors, to confirm clarity and context.

- Ensure the tasks are realistic and achievable given the participants' skill levels.
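Keeping the script as structured data makes it easier to review, pilot, and reuse across sessions. A minimal sketch; the `Task` and `TestScript` classes, their fields, and the example tasks are hypothetical and do not reflect the format of any particular testing tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    scenario: str             # realistic, jargon-free framing read to the participant
    success_criterion: str    # what the observer counts as "done"
    follow_up_questions: List[str] = field(default_factory=list)
    time_limit_seconds: int = 300


@dataclass
class TestScript:
    objective: str
    intro: str
    tasks: List[Task]

    def problems(self) -> List[str]:
        """Flag gaps worth fixing before you pilot the script yourself."""
        issues = []
        if not self.tasks:
            issues.append("script has no tasks")
        for i, task in enumerate(self.tasks, start=1):
            if not task.success_criterion.strip():
                issues.append(f"task {i} has no success criterion")
            if not task.follow_up_questions:
                issues.append(f"task {i} has no follow-up questions")
        return issues


script = TestScript(
    objective="Can first-time visitors buy a discounted item without help?",
    intro="We're testing the site, not you. Please think aloud as you go.",
    tasks=[
        Task(
            scenario="You want a pair of running shoes under $80. Find one and add it to your cart.",
            success_criterion="A shoe priced under $80 is in the cart",
            follow_up_questions=["Was anything on that page confusing?"],
        ),
        Task(scenario="Now check out as a guest.", success_criterion=""),
    ],
)
print(script.problems())
```

Writing the success criterion down before the session keeps observers consistent when they later mark tasks as passed or failed.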

Step 4: Set Up the Environment

Prepare the testing environment according to the format you’ve chosen:

- In-Person Testing: Secure a quiet space equipped with necessary tools, such as devices, recording equipment, and relevant software.

- Remote Testing: Provide participants with clear instructions for downloading any required tools (ensure they’re free). Have a contingency plan in place in case of technical glitches—technology isn’t always reliable!

- Stay connected with participants to address last-minute changes or rescheduling requests as needed.

Step 5: Conduct the Test

Start by explaining the purpose of the test and addressing any participant queries. Once they feel comfortable, assign tasks one at a time.

- Avoid offering hints or guiding participants unless explicitly requested.

- Encourage them to verbalize their thoughts (“I’m looking for the login button…”), providing deeper insight into their reasoning.

- Document their actions meticulously, noting successes, failures, pauses, and both verbal and non-verbal cues (if conducting in-person testing).
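A timestamped log makes those notes much easier to analyze afterward. A minimal sketch of an observer's log; the event categories mirror the list above, while the class names, fields, and sample notes are hypothetical.

```python
import time
from dataclasses import dataclass, field
from typing import List

# Event categories taken from the observation checklist above.
EVENT_KINDS = {"success", "failure", "pause", "verbal", "non-verbal"}


@dataclass
class Observation:
    seconds_into_session: float
    task: int
    kind: str
    note: str


@dataclass
class SessionLog:
    participant: str
    started_at: float = field(default_factory=time.monotonic)
    observations: List[Observation] = field(default_factory=list)

    def record(self, task: int, kind: str, note: str = "") -> None:
        """Append an observation, timestamped relative to the session start."""
        if kind not in EVENT_KINDS:
            raise ValueError(f"unknown event kind: {kind}")
        elapsed = time.monotonic() - self.started_at
        self.observations.append(Observation(round(elapsed, 1), task, kind, note))

    def by_kind(self, kind: str) -> List[Observation]:
        """All observations of one kind, in the order they were recorded."""
        return [o for o in self.observations if o.kind == kind]


log = SessionLog("P3")
log.record(1, "verbal", "I'm looking for the login button...")
log.record(1, "pause", "Hovered over the header for ~10 s")
log.record(1, "success", "Found login in the footer")
print(len(log.by_kind("pause")))  # prints 1
```

Timestamps relative to the session start also make it easy to jump to the right moment in the video recording.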

Step 6: Collect Feedback and Interpret Results

After completing the tasks, ask participants to rate their experience using a scale (e.g., 1–5) and provide feedback on what worked well and what could improve.

Next, analyze the data to identify trends and actionable insights. Look for recurring challenges or areas of confusion, but also highlight strengths and opportunities for growth. The goal is to isolate specific issues while acknowledging what’s already functioning effectively.
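One simple way to surface recurring challenges is to tag each observed problem and count how many participants ran into it. A minimal sketch; the issue tags, function name, and `min_share` threshold are hypothetical choices. Frequency alone says nothing about severity, so review the ranking alongside your notes.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple


def recurring_issues(
    issues_by_participant: Dict[str, Set[str]], min_share: float = 0.4
) -> List[Tuple[str, int, float]]:
    """Rank issues by how many participants hit them; keep those at or above min_share."""
    n = len(issues_by_participant)
    if n == 0:
        return []
    # Each participant's issues are a set, so a participant counts at most once per issue.
    counts = Counter(tag for tags in issues_by_participant.values() for tag in tags)
    # Most frequent first; ties broken alphabetically so the output is stable.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(tag, c, c / n) for tag, c in ranked if c / n >= min_share]


sessions = {
    "P1": {"search bar hard to spot", "unclear shipping cost"},
    "P2": {"search bar hard to spot"},
    "P3": {"unclear shipping cost", "coupon field missed"},
    "P4": {"search bar hard to spot", "unclear shipping cost"},
    "P5": {"coupon field missed"},
}
for tag, count, share in recurring_issues(sessions):
    print(f"{count}/{len(sessions)} participants ({share:.0%}): {tag}")
```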

By adhering to these steps, you’ll establish a systematic approach to usability testing, ensuring valuable, actionable outcomes. This structured process will empower you to refine your product and enhance the overall user experience.

Key Best Practices and Common Mistakes to Avoid

The effectiveness of any usability testing approach depends heavily on how well it is carried out. Below are some essential best practices, along with common pitfalls, to keep in mind as you conduct your tests:

- Define Clear Objectives: Without a specific purpose for your test, the entire process becomes aimless. Establish a precise plan outlining what you hope to achieve. Failing to do so will result in wasted effort for both you and the participants.

- Select Appropriate Participants: Choose users who closely match your target audience based on demographics, skill levels, behaviors, and other relevant characteristics. Recruiting random individuals simply to fill spots won’t provide valuable insights.

- Create Practical Tasks: Design tasks that are easy to follow and simulate real-world situations. Ensure they are achievable regardless of the participant’s level of expertise. Steer clear of overly complicated or unrealistic challenges that don’t reflect actual use cases.

- Promote Verbalized Thoughts: Encourage participants to voice their thoughts as they interact with the product. This provides deeper insight into their reasoning and helps uncover underlying issues that might otherwise remain hidden.

- Stay Neutral: Refrain from guiding or assisting participants unless they specifically request help. Avoid influencing them, even unintentionally, through gestures like nodding or facial expressions. Allow them to tackle challenges on their own to gather genuine feedback.

- Leverage Both Qualitative and Quantitative Data: Combine observational insights (qualitative) with measurable data such as task completion rates and average time spent (quantitative). This holistic approach ensures a more complete understanding of usability concerns.

- Test Early and Iteratively: Think of usability testing like building a house—if you wait until construction is finished to make adjustments, fixes become far more difficult. Incorporate testing throughout the design and development phases to address problems early and prevent expensive redesigns down the line.

- Record Everything Thoroughly: Keep meticulous notes during sessions and capture video recordings for subsequent analysis. Carefully examine these resources to spot trends, extract insights, and develop actionable recommendations. Share your findings with stakeholders to support data-driven decisions.

By adhering to these guidelines and sidestepping common mistakes, you can optimize the value of your usability testing efforts and ensure impactful, actionable results.

About the Creator

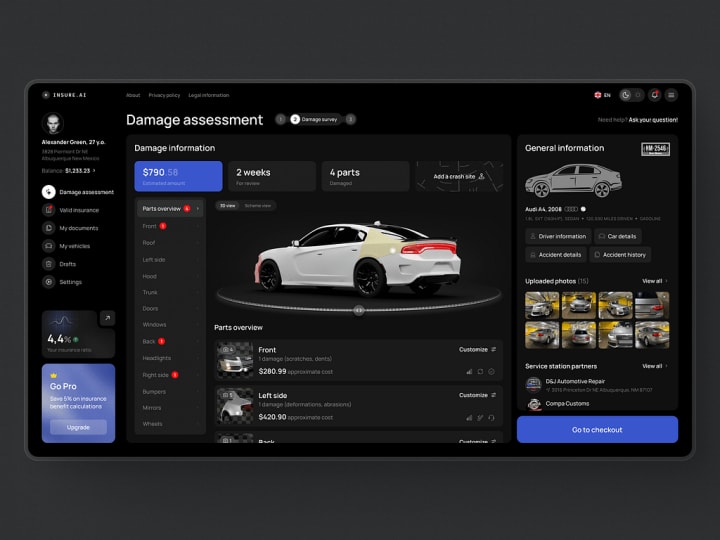

Shakuro

We are a web and mobile design and development agency. We make websites and apps, create brand identities, and launch startups.
